
Preface

In industrial vision applications, the user interface is the visible face of the software and plays a very important role on site. When an industrial camera is driven from Python, PyQt is a natural choice for building that interface, and displaying images in PyQt is therefore a key part of the implementation. This article gives a brief introduction to it.

1, A brief introduction to the QImage class

In PyQt, the QImage class is mainly used for image I/O and for direct pixel-level access to image data. QImage provides a hardware-independent image representation that can be accessed pixel by pixel and used as a paint device, which makes it suitable for displaying the image data obtained from an industrial camera.
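Because QImage reads raw scanlines, the memory layout of the buffer matters. The sketch below, in plain Python so it runs without Qt, shows how a pixel is located in a tightly packed RGB888 buffer and which row stride QImage assumes when the QImage(data, width, height, format) constructor is used without a bytesPerLine argument; Qt's documentation requires every scanline to be 32-bit aligned in that case. The helper names are illustrative and are not part of PyQt.

```python
def qt_default_bytes_per_line(width: int, bits_per_pixel: int) -> int:
    """Row stride QImage assumes when no bytesPerLine is given.

    Each scanline must be 32-bit aligned, so the row length is
    rounded up to a multiple of 4 bytes.
    """
    return ((width * bits_per_pixel + 31) // 32) * 4


def rgb888_pixel(buf: bytes, bytes_per_line: int, x: int, y: int) -> tuple:
    """Return the (R, G, B) values at column x, row y of a packed RGB888 buffer."""
    offset = y * bytes_per_line + x * 3
    return tuple(buf[offset:offset + 3])


# A frame 5 pixels wide, packed with no padding: 15 bytes per row.
width = 5
tight_stride = width * 3
print(tight_stride, qt_default_bytes_per_line(width, 24))  # 15 16

# Pixel (1, 1) of a 5x2 test buffer whose bytes are 0, 1, 2, ..., 29
print(rgb888_pixel(bytes(range(30)), tight_stride, 1, 1))  # (18, 19, 20)
```

Whenever the two strides differ, bytesPerLine has to be passed explicitly (for a NumPy array, image.strides[0]); otherwise each row is read from the wrong offset and the picture appears skewed.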

Pixel formats supported by the QImage class (enum values as listed in PyQt5):

- Format_A2BGR30_Premultiplied = 20
- Format_A2RGB30_Premultiplied = 22
- Format_Alpha8 = 23
- Format_ARGB32 = 5
- Format_ARGB32_Premultiplied = 6
- Format_ARGB4444_Premultiplied = 15
- Format_ARGB6666_Premultiplied = 10
- Format_ARGB8555_Premultiplied = 12
- Format_ARGB8565_Premultiplied = 8
- Format_BGR30 = 19
- Format_Grayscale8 = 24
- Format_Indexed8 = 3
- Format_Invalid = 0
- Format_Mono = 1
- Format_MonoLSB = 2
- Format_RGB16 = 7
- Format_RGB30 = 21
- Format_RGB32 = 4
- Format_RGB444 = 14
- Format_RGB555 = 11
- Format_RGB666 = 9
- Format_RGB888 = 13
- Format_RGBA8888 = 17
- Format_RGBA8888_Premultiplied = 18
- Format_RGBX8888 = 16

InvertRgb = 0 and InvertRgba = 1 also appear in this listing, but they are values of the QImage.InvertMode enum (used by invertPixels()), not pixel formats.

This article mainly uses Format_RGB888 and Format_Indexed8. Format_RGB888 stores each pixel as R, G, B bytes, whereas OpenCV stores color images in B, G, R order, so BGR data has to have its channels swapped before it is passed to QImage.
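The channel-order difference is easy to see on raw bytes. The plain-Python sketch below swaps the first and third byte of every pixel, which is what cv2.cvtColor(img, cv2.COLOR_BGR2RGB) does for 8-bit three-channel data, and builds the 256-entry grayscale palette that is commonly set on a Format_Indexed8 image; each entry equals qRgb(i, i, i). The function names are illustrative. On Qt 5.5 or later, Format_Grayscale8 is an alternative for Mono8 data that needs no palette.

```python
def bgr_to_rgb(buf: bytes) -> bytes:
    """Swap the 1st and 3rd byte of every 3-byte pixel (BGR <-> RGB).

    Assumes len(buf) is a multiple of 3 (tightly packed 8-bit pixels).
    """
    out = bytearray(buf)
    out[0::3] = buf[2::3]  # R slot takes the old B byte
    out[2::3] = buf[0::3]  # B slot takes the old R byte
    return bytes(out)


def gray_color_table() -> list:
    """256 grayscale palette entries in 0xAARRGGBB form, i.e. qRgb(i, i, i)."""
    return [0xFF000000 | (i << 16) | (i << 8) | i for i in range(256)]


blue_in_opencv_order = bytes([255, 0, 0])      # B, G, R
print(list(bgr_to_rgb(blue_in_opencv_order)))  # [0, 0, 255]
print(hex(gray_color_table()[128]))            # 0xff808080
```

With PyQt5, the palette would be applied as image.setColorTable(gray_color_table()) on the Format_Indexed8 QImage before it is converted to a QPixmap.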

2, Displaying images with the Haikang industrial camera GetImageBuffer API and PyQt's QImage class

1. Introduction to the GetImageBuffer API of the Haikang industrial camera

The underlying SDK provided by Haikang includes the GetImageBuffer interface, which is used for active stream fetching. It is described in the industrial camera SDK development guide in the installation directory as follows:

Interface: MV_CC_GetImageBuffer()

The corresponding C declaration is as follows:

```c
MV_CAMCTRL_API int __stdcall MV_CC_GetImageBuffer(IN void *handle,
                                                  OUT MV_FRAME_OUT *pstFrame,
                                                  IN unsigned int nMsec);
```

Parameters:

- handle: device handle
- pstFrame: image data and image information
- nMsec: wait timeout in milliseconds. INFINITE means waiting indefinitely until a frame of data is received or stream fetching is stopped.

Return:

- MV_OK if the call succeeds
- an error code if the call fails

Notes:

1. Before calling this interface to obtain image frames, call MV_CC_StartGrabbing() to start image acquisition. This interface fetches frame data actively, so the upper-layer application has to control how often it is called according to the frame rate. The interface supports a timeout: the SDK waits internally until data is available and then returns, which improves the stability of stream fetching. It is suitable for applications with high stability requirements.

2. This interface is used together with MV_CC_FreeImageBuffer(): after the obtained data has been processed, call MV_CC_FreeImageBuffer() to release the data pointer held in pstFrame.

3. Compared with MV_CC_GetOneFrameTimeout(), this interface is more efficient, and its stream-fetching buffer is allocated automatically by the SDK, whereas MV_CC_GetOneFrameTimeout() requires the caller to allocate the buffer.

4. After MV_CC_Display() has been called, this interface can no longer fetch the stream.

5. This interface does not support CameraLink devices; only GigE and USB devices are supported.
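Notes 1 and 2 amount to an ownership rule: every successful MV_CC_GetImageBuffer() must be matched by exactly one MV_CC_FreeImageBuffer(), even when processing the frame fails. The sketch below illustrates that rule with try/finally. FakeCamera is a hypothetical stand-in that only mimics the two method names so the loop can run without a camera; it is not the SDK's MvCamera class, and a dict stands in for MV_FRAME_OUT.

```python
import threading


class FakeCamera:
    """Hypothetical stand-in for the SDK's MvCamera, only to show buffer ownership."""

    def __init__(self, frames):
        self._frames = list(frames)
        self.outstanding = 0  # buffers handed out but not yet freed

    def MV_CC_GetImageBuffer(self, frame_out, timeout_ms):
        if not self._frames:
            return -1  # placeholder for an SDK error code (e.g. a timeout)
        frame_out["data"] = self._frames.pop(0)
        self.outstanding += 1
        return 0  # MV_OK

    def MV_CC_FreeImageBuffer(self, frame_out):
        frame_out["data"] = None
        self.outstanding -= 1
        return 0


def grab_loop(cam, stop_event, handle_frame):
    """Fetch frames until stop_event is set, always freeing each fetched buffer."""
    frame_out = {}  # plays the role of MV_FRAME_OUT
    while not stop_event.is_set():
        ret = cam.MV_CC_GetImageBuffer(frame_out, 1000)
        if ret != 0:
            break  # the mock has run out of frames; real code would log and retry
        try:
            handle_frame(frame_out["data"])  # copy or convert the data here
        finally:
            cam.MV_CC_FreeImageBuffer(frame_out)  # release even if handling fails


cam = FakeCamera([b"frame0", b"frame1"])
received = []
grab_loop(cam, threading.Event(), received.append)
print(received, cam.outstanding)  # [b'frame0', b'frame1'] 0
```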

As described above, MV_CC_GetImageBuffer() must be called after image acquisition has been started. The official routine calls it as follows:

```python
# Define a function for the thread
def work_thread(cam=0, pData=0, nDataSize=0):
    stOutFrame = MV_FRAME_OUT()
    memset(byref(stOutFrame), 0, sizeof(stOutFrame))
    while True:
        # Wait up to 1000 ms for a frame
        ret = cam.MV_CC_GetImageBuffer(stOutFrame, 1000)
        if None != stOutFrame.pBufAddr and 0 == ret:
            print("get one frame: Width[%d], Height[%d], nFrameNum[%d]" % (
                stOutFrame.stFrameInfo.nWidth, stOutFrame.stFrameInfo.nHeight,
                stOutFrame.stFrameInfo.nFrameNum))
            # Return the buffer to the SDK before fetching the next frame
            nRet = cam.MV_CC_FreeImageBuffer(stOutFrame)
        else:
            print("no data[0x%x]" % ret)
        if g_bExit == True:
            break
```

Once stream fetching is enabled, this interface actively fetches the image data and frame information from the buffer at the bottom of the SDK. The official routine, however, only prints the frame information; it does not parse or display the image data, so that part has to be developed separately. The following parts of this article implement the parsing and display of the image data.

The code above also shows MV_CC_GetImageBuffer() being paired with MV_CC_FreeImageBuffer(): once a frame has been fetched, the data pointer in stOutFrame is released before the next frame is fetched.
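Parsing a frame starts from stFrameInfo: nWidth, nHeight and enPixelType determine how many bytes the frame occupies. The enPixelType codes used later in this article follow the GigE Vision pixel-format layout, in which bits 16-23 give the number of bits per pixel; for example, RGB8_Packed = 0x02180014 = 35127316 has 0x18 = 24 bits per pixel. The plain-Python sketch below decodes that field. The helper names are hypothetical; the SDK's MvImport folder should also provide named constants for these codes (such as PixelType_Gvsp_Mono8), and comparing the computed size with the frame length the SDK reports (for example stFrameInfo.nFrameLen) is a cheap sanity check.

```python
def bits_per_pixel(pixel_type: int) -> int:
    """Bits 16-23 of a GigE Vision style pixel-type code give the bits per pixel."""
    return (pixel_type >> 16) & 0xFF


def frame_size_bytes(width: int, height: int, pixel_type: int) -> int:
    """Size in bytes of one tightly packed frame."""
    return width * height * bits_per_pixel(pixel_type) // 8


RGB8_PACKED = 0x02180014    # 35127316
MONO8 = 0x01080001          # 17301505
YUV422_PACKED = 0x0210001F  # 34603039

print(frame_size_bytes(1280, 1024, RGB8_PACKED))    # 3932160
print(frame_size_bytes(1280, 1024, MONO8))          # 1310720
print(frame_size_bytes(1280, 1024, YUV422_PACKED))  # 2621440
```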

2. Modifying the Haikang official demo to display images with QImage

After the official Haikang demo obtains image data with MV_CC_GetImageBuffer, the data still has to be displayed. To display it with PyQt, the interface must be defined first, as follows:

```python
from PyQt5.QtWidgets import *
from PyQt5.QtGui import *
from PyQt5.QtCore import *


# Window settings
class initform(QWidget):
    def __init__(self):
        super().__init__()
        self.initUI()

    def initUI(self):
        # Window position (x, y) on the screen, then width and height
        self.setGeometry(300, 300, 1200, 900)
        self.setWindowTitle("Industrial camera")
        self.lable = QLabel("image", self)
        # Combine the flags: a second setAlignment() call would override the first
        self.lable.setAlignment(Qt.AlignLeft | Qt.AlignTop)
        # 800 x 600 display area placed at (400, 300) inside the window
        self.lable.setGeometry(400, 300, 800, 600)
        # Stretch the pixmap to the label size (the aspect ratio is not preserved)
        self.lable.setScaledContents(True)
        self.show()

    def SetPic(self, img):
        # Convert the QImage to a QPixmap and show it in the label
        self.lable.setPixmap(QPixmap.fromImage(img))
```

To make the explanation easier, the window (1200 x 900) is made much larger than the image area (800 x 600), leaving room to add parameter-setting controls to the PyQt interface later.
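In this article, SetPic() is called directly from the stream-fetching thread. Qt's documentation states that QWidget and its subclasses may only be used from the main (GUI) thread, so a safer pattern is for the acquisition thread to hand over the newest frame and for the GUI thread to pick it up. Below is a minimal, Qt-free sketch of such a hand-over slot, assuming that showing only the most recent frame is acceptable (older, unshown frames are dropped); the class name LatestFrame is hypothetical.

```python
import threading


class LatestFrame:
    """Thread-safe single-slot hand-over: put() overwrites, take() empties the slot."""

    def __init__(self):
        self._lock = threading.Lock()
        self._frame = None

    def put(self, frame):
        # Called from the acquisition thread; an unshown older frame is dropped.
        with self._lock:
            self._frame = frame

    def take(self):
        # Called from the GUI thread; returns None when no new frame has arrived.
        with self._lock:
            frame, self._frame = self._frame, None
            return frame


mailbox = LatestFrame()


def producer():
    for frame_number in range(100):
        mailbox.put(frame_number)


worker = threading.Thread(target=producer)
worker.start()
worker.join()
print(mailbox.take(), mailbox.take())  # 99 None
```

In PyQt5 the GUI side could, for example, call take() from a QTimer timeout handler and pass any non-None QImage to SetPic(); emitting a pyqtSignal from the worker thread is another common way to keep widget calls on the GUI thread.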

Based on the official demo, the stream-fetching thread is modified so that the image data is passed from that thread directly to the interface for display. The code is as follows:

```python
import cv2
import numpy as np
from ctypes import *                   # c_ubyte, memmove, memset, byref, sizeof
from PyQt5.QtGui import QImage
from MvCameraControl_class import *    # MV_FRAME_OUT (Haikang SDK, ../MvImport)


def copy_frame(stOutFrame, channels):
    """Copy the SDK frame buffer into a NumPy array of shape (H, W) or (H, W, channels)."""
    info = stOutFrame.stFrameInfo
    size = info.nWidth * info.nHeight * channels
    buf = (c_ubyte * size)()
    memmove(byref(buf), stOutFrame.pBufAddr, size)
    shape = (info.nHeight, info.nWidth) if channels == 1 else (info.nHeight, info.nWidth, channels)
    return np.frombuffer(buf, dtype=np.uint8).reshape(shape)


# Define a function for the thread
def work_thread(cam=0, pData=0, nDataSize=0):
    stOutFrame = MV_FRAME_OUT()
    memset(byref(stOutFrame), 0, sizeof(stOutFrame))
    while not g_bExit:
        ret = cam.MV_CC_GetImageBuffer(stOutFrame, 1000)
        if ret != 0 or not stOutFrame.pBufAddr:  # a NULL ctypes pointer is falsy
            print("no data[0x%x]" % ret)
            continue  # nothing was fetched, so there is nothing to free
        info = stOutFrame.stFrameInfo
        w, h, pixel_type = info.nWidth, info.nHeight, info.enPixelType
        print("get one frame: Width[%d], Height[%d], nFrameNum[%d]" % (w, h, info.nFrameNum))
        try:
            if pixel_type == 35127316:    # RGB8_Packed: already in QImage's R, G, B order
                image, fmt = copy_frame(stOutFrame, 3), QImage.Format_RGB888
            elif pixel_type == 35127317:  # BGR8_Packed: swap to R, G, B
                image, fmt = cv2.cvtColor(copy_frame(stOutFrame, 3), cv2.COLOR_BGR2RGB), QImage.Format_RGB888
            elif pixel_type == 17301505:  # Mono8
                image, fmt = copy_frame(stOutFrame, 1), QImage.Format_Indexed8
            elif pixel_type == 17301514:  # BayerGB8 (conversion code kept from the original; check colors on your camera)
                image, fmt = cv2.cvtColor(copy_frame(stOutFrame, 1), cv2.COLOR_BAYER_GB2BGR), QImage.Format_RGB888
            elif pixel_type == 34603039:  # YUV422_Packed
                image, fmt = cv2.cvtColor(copy_frame(stOutFrame, 2), cv2.COLOR_YUV2RGB_Y422), QImage.Format_RGB888
            elif pixel_type == 34603058:  # YUV422_YUYV_Packed
                image, fmt = cv2.cvtColor(copy_frame(stOutFrame, 2), cv2.COLOR_YUV2RGB_YUYV), QImage.Format_RGB888
            else:
                print("unsupported pixel type[0x%x]" % pixel_type)
                continue
        finally:
            # The data has been copied (or skipped), so the SDK buffer can be released now
            cam.MV_CC_FreeImageBuffer(stOutFrame)
        # Pass bytesPerLine explicitly (rows are not 4-byte aligned for every width)
        # and copy() so that the QImage owns its pixels
        image_show = QImage(image.data, w, h, image.strides[0], fmt).copy()
        # SetPic is called from this worker thread as in the original demo; Qt widgets
        # are not thread-safe, so a sturdier version would hand the image to the GUI thread
        ex.SetPic(image_show)
```

Each pixel format is converted separately, and the resulting data is then wrapped in a QImage for display.
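The if/elif chain above repeats the same copy-and-convert steps for every format. One way to keep it manageable as formats are added is a lookup table keyed by enPixelType. The sketch below is a hypothetical refactoring that only records, per format, the bytes per pixel in the raw buffer, the OpenCV conversion code name used in this article and the QImage format name; in real code the names would be resolved with getattr(cv2, ...) and getattr(QImage, ...). FormatSpec, FORMATS and plan_conversion are illustrative names, not SDK or PyQt APIs.

```python
from typing import NamedTuple, Optional


class FormatSpec(NamedTuple):
    name: str
    bytes_per_pixel: int     # bytes per pixel in the raw SDK buffer
    cv2_code: Optional[str]  # cv2.COLOR_* name used in the article, or None
    qimage_format: str       # QImage.Format_* name used in the article


# Keyed by the enPixelType values that appear in the article's work_thread().
FORMATS = {
    35127316: FormatSpec("RGB8_Packed", 3, None, "Format_RGB888"),
    35127317: FormatSpec("BGR8_Packed", 3, "COLOR_BGR2RGB", "Format_RGB888"),
    17301505: FormatSpec("Mono8", 1, None, "Format_Indexed8"),
    17301514: FormatSpec("BayerGB8", 1, "COLOR_BAYER_GB2BGR", "Format_RGB888"),
    34603039: FormatSpec("YUV422_Packed", 2, "COLOR_YUV2RGB_Y422", "Format_RGB888"),
    34603058: FormatSpec("YUV422_YUYV_Packed", 2, "COLOR_YUV2RGB_YUYV", "Format_RGB888"),
}


def plan_conversion(pixel_type: int, width: int, height: int):
    """Return (spec, raw buffer size, array shape), or None for unsupported formats."""
    spec = FORMATS.get(pixel_type)
    if spec is None:
        return None
    size = width * height * spec.bytes_per_pixel
    if spec.bytes_per_pixel == 1:
        shape = (height, width)
    else:
        shape = (height, width, spec.bytes_per_pixel)
    return spec, size, shape


print(plan_conversion(34603058, 640, 480))
```

With such a table, the body of work_thread() reduces to one lookup, one copy of size bytes, a reshape to shape, an optional cv2.cvtColor call and one QImage construction.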

The interface display needs to be run before the code gets the image, and then the image is displayed on the interface. Therefore, the interface part is registered and displayed before the code is run. The code is as follows:

if __name__ == "__main__":

app = QApplication(sys.argv)

ex = initform()

deviceList = MV_CC_DEVICE_INFO_LIST()

tlayerType = MV_GIGE_DEVICE | MV_USB_DEVICE

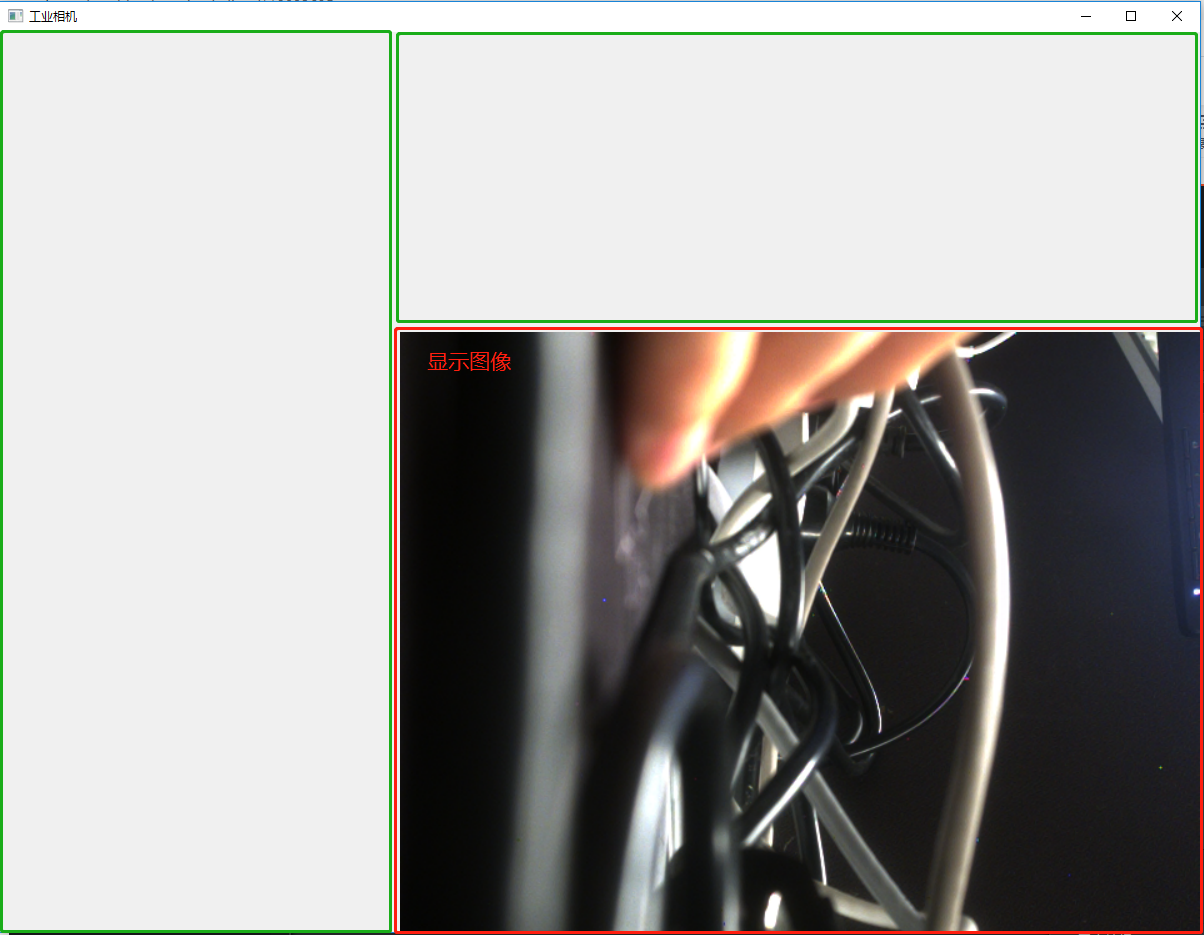

. . . . . . . . . . The initial interface is as follows:

Because the interface should be displayed first after the thread starts, and then the data should be displayed in the interface, the following code is added after the thread to close the interface after the thread ends;

```python
hThreadHandle = threading.Thread(target=work_thread, args=(cam, None, None))
try:
    hThreadHandle.start()
except RuntimeError:
    print("error: unable to start thread")
    sys.exit()
# Run the Qt event loop; app.exec_() returns when the window is closed
app.exec_()
```

3. Final display result and code

The area in the green box is reserved for interface controls, such as parameter settings, to be added later.

The area in the red box shows the image data acquired by the industrial camera.

Complete implementation code:

```python
# -- coding: utf-8 --
import sys
import threading

import cv2
import numpy as np
from ctypes import *

sys.path.append("../MvImport")
from MvCameraControl_class import *

from PyQt5.QtWidgets import *
from PyQt5.QtGui import *
from PyQt5.QtCore import *


# Window settings
class initform(QWidget):
    def __init__(self):
        super().__init__()
        self.initUI()

    def initUI(self):
        # Window position (x, y) on the screen, then width and height
        self.setGeometry(300, 300, 1200, 900)
        self.setWindowTitle("Industrial camera")
        self.lable = QLabel("image", self)
        self.lable.setAlignment(Qt.AlignLeft | Qt.AlignTop)
        # 800 x 600 display area placed at (400, 300) inside the window
        self.lable.setGeometry(400, 300, 800, 600)
        self.lable.setScaledContents(True)
        self.show()

    def SetPic(self, img):
        self.lable.setPixmap(QPixmap.fromImage(img))


g_bExit = False


def copy_frame(stOutFrame, channels):
    """Copy the SDK frame buffer into a NumPy array of shape (H, W) or (H, W, channels)."""
    info = stOutFrame.stFrameInfo
    size = info.nWidth * info.nHeight * channels
    buf = (c_ubyte * size)()
    memmove(byref(buf), stOutFrame.pBufAddr, size)
    shape = (info.nHeight, info.nWidth) if channels == 1 else (info.nHeight, info.nWidth, channels)
    return np.frombuffer(buf, dtype=np.uint8).reshape(shape)


# Define a function for the thread
def work_thread(cam=0, pData=0, nDataSize=0):
    stOutFrame = MV_FRAME_OUT()
    memset(byref(stOutFrame), 0, sizeof(stOutFrame))
    while not g_bExit:
        ret = cam.MV_CC_GetImageBuffer(stOutFrame, 1000)
        if ret != 0 or not stOutFrame.pBufAddr:  # a NULL ctypes pointer is falsy
            print("no data[0x%x]" % ret)
            continue
        info = stOutFrame.stFrameInfo
        w, h, pixel_type = info.nWidth, info.nHeight, info.enPixelType
        print("get one frame: Width[%d], Height[%d], nFrameNum[%d]" % (w, h, info.nFrameNum))
        try:
            if pixel_type == 35127316:    # RGB8_Packed
                image, fmt = copy_frame(stOutFrame, 3), QImage.Format_RGB888
            elif pixel_type == 35127317:  # BGR8_Packed
                image, fmt = cv2.cvtColor(copy_frame(stOutFrame, 3), cv2.COLOR_BGR2RGB), QImage.Format_RGB888
            elif pixel_type == 17301505:  # Mono8
                image, fmt = copy_frame(stOutFrame, 1), QImage.Format_Indexed8
            elif pixel_type == 17301514:  # BayerGB8 (conversion code kept from the original; check colors on your camera)
                image, fmt = cv2.cvtColor(copy_frame(stOutFrame, 1), cv2.COLOR_BAYER_GB2BGR), QImage.Format_RGB888
            elif pixel_type == 34603039:  # YUV422_Packed
                image, fmt = cv2.cvtColor(copy_frame(stOutFrame, 2), cv2.COLOR_YUV2RGB_Y422), QImage.Format_RGB888
            elif pixel_type == 34603058:  # YUV422_YUYV_Packed
                image, fmt = cv2.cvtColor(copy_frame(stOutFrame, 2), cv2.COLOR_YUV2RGB_YUYV), QImage.Format_RGB888
            else:
                print("unsupported pixel type[0x%x]" % pixel_type)
                continue
        finally:
            # The data has been copied (or skipped), so the SDK buffer can be released now
            cam.MV_CC_FreeImageBuffer(stOutFrame)
        # Pass bytesPerLine explicitly and copy() so that the QImage owns its pixels
        image_show = QImage(image.data, w, h, image.strides[0], fmt).copy()
        # SetPic is called from this worker thread as in the original demo; Qt widgets
        # are not thread-safe, so a sturdier version would hand the image to the GUI thread
        ex.SetPic(image_show)


if __name__ == "__main__":
    app = QApplication(sys.argv)
    ex = initform()

    deviceList = MV_CC_DEVICE_INFO_LIST()
    tlayerType = MV_GIGE_DEVICE | MV_USB_DEVICE

    # Enumerate devices
    ret = MvCamera.MV_CC_EnumDevices(tlayerType, deviceList)
    if ret != 0:
        print("enum devices fail! ret[0x%x]" % ret)
        sys.exit()
    if deviceList.nDeviceNum == 0:
        print("find no device!")
        sys.exit()
    print("Find %d devices!" % deviceList.nDeviceNum)

    for i in range(0, deviceList.nDeviceNum):
        mvcc_dev_info = cast(deviceList.pDeviceInfo[i], POINTER(MV_CC_DEVICE_INFO)).contents
        if mvcc_dev_info.nTLayerType == MV_GIGE_DEVICE:
            print("\ngige device: [%d]" % i)
            strModeName = ""
            for per in mvcc_dev_info.SpecialInfo.stGigEInfo.chModelName:
                if per == 0:
                    break
                strModeName = strModeName + chr(per)
            print("device model name: %s" % strModeName)
            nCurrentIp = mvcc_dev_info.SpecialInfo.stGigEInfo.nCurrentIp
            nip1 = (nCurrentIp & 0xff000000) >> 24
            nip2 = (nCurrentIp & 0x00ff0000) >> 16
            nip3 = (nCurrentIp & 0x0000ff00) >> 8
            nip4 = nCurrentIp & 0x000000ff
            print("current ip: %d.%d.%d.%d\n" % (nip1, nip2, nip3, nip4))
        elif mvcc_dev_info.nTLayerType == MV_USB_DEVICE:
            print("\nu3v device: [%d]" % i)
            strModeName = ""
            for per in mvcc_dev_info.SpecialInfo.stUsb3VInfo.chModelName:
                if per == 0:
                    break
                strModeName = strModeName + chr(per)
            print("device model name: %s" % strModeName)
            strSerialNumber = ""
            for per in mvcc_dev_info.SpecialInfo.stUsb3VInfo.chSerialNumber:
                if per == 0:
                    break
                strSerialNumber = strSerialNumber + chr(per)
            print("user serial number: %s" % strSerialNumber)

    nConnectionNum = input("please input the number of the device to connect:")
    if int(nConnectionNum) >= deviceList.nDeviceNum:
        print("input error!")
        sys.exit()

    # Create camera object
    cam = MvCamera()

    # Select device and create handle
    stDeviceList = cast(deviceList.pDeviceInfo[int(nConnectionNum)], POINTER(MV_CC_DEVICE_INFO)).contents
    ret = cam.MV_CC_CreateHandle(stDeviceList)
    if ret != 0:
        print("create handle fail! ret[0x%x]" % ret)
        sys.exit()

    # Open device
    ret = cam.MV_CC_OpenDevice(MV_ACCESS_Exclusive, 0)
    if ret != 0:
        print("open device fail! ret[0x%x]" % ret)
        sys.exit()

    # Detect the optimal network packet size (GigE cameras only)
    if stDeviceList.nTLayerType == MV_GIGE_DEVICE:
        nPacketSize = cam.MV_CC_GetOptimalPacketSize()
        if int(nPacketSize) > 0:
            ret = cam.MV_CC_SetIntValue("GevSCPSPacketSize", nPacketSize)
            if ret != 0:
                print("Warning: Set Packet Size fail! ret[0x%x]" % ret)
        else:
            print("Warning: Get Packet Size fail! ret[0x%x]" % nPacketSize)

    stBool = c_bool(False)
    ret = cam.MV_CC_GetBoolValue("AcquisitionFrameRateEnable", stBool)
    if ret != 0:
        print("get AcquisitionFrameRateEnable fail! ret[0x%x]" % ret)
        sys.exit()

    # Set trigger mode to off (continuous acquisition)
    ret = cam.MV_CC_SetEnumValue("TriggerMode", MV_TRIGGER_MODE_OFF)
    if ret != 0:
        print("set trigger mode fail! ret[0x%x]" % ret)
        sys.exit()

    # Start grabbing
    ret = cam.MV_CC_StartGrabbing()
    if ret != 0:
        print("start grabbing fail! ret[0x%x]" % ret)
        sys.exit()

    # Start the acquisition thread, then run the Qt event loop until the window is closed
    hThreadHandle = threading.Thread(target=work_thread, args=(cam, None, None))
    try:
        hThreadHandle.start()
    except RuntimeError:
        print("error: unable to start thread")
        sys.exit()
    app.exec_()

    # Window closed: stop the acquisition thread and wait for it to finish
    g_bExit = True
    hThreadHandle.join()

    # Stop grabbing
    ret = cam.MV_CC_StopGrabbing()
    if ret != 0:
        print("stop grabbing fail! ret[0x%x]" % ret)
        sys.exit()

    # Close device
    ret = cam.MV_CC_CloseDevice()
    if ret != 0:
        print("close device fail! ret[0x%x]" % ret)
        sys.exit()

    # Destroy handle
    ret = cam.MV_CC_DestroyHandle()
    if ret != 0:
        print("destroy handle fail! ret[0x%x]" % ret)
        sys.exit()
```

Summary

Combining Python, OpenCV and PyQt, this article only covers displaying images in PyQt; the interface does not yet include parameter-setting controls. Once those have been added, the related article and code will be published, in return for the feedback readers have left online.