ALSA framework

ALSA stands for Advanced Linux Sound Architecture. It provides audio and MIDI (Musical Instrument Digital Interface) support on the Linux operating system. Since the 2.6 kernel, ALSA has been the default sound subsystem, replacing the OSS (Open Sound System) used in the 2.4 kernel.

ALSA is a fully open-source set of audio drivers maintained by volunteers, whereas OSS is a commercial product provided by a company. The ALSA project consists of seven subprojects: the driver package alsa-driver (integrated into the kernel source), the development library alsa-lib, the library plug-in package alsa-plugins, the configuration and management tools alsa-utils, a set of other sound-related utilities alsa-tools, the firmware package for special audio hardware alsa-firmware, and the OSS compatibility layer alsa-oss. Only alsa-driver is strictly required. Alongside the driver, ALSA provides the user-space library alsa-lib, which offers a friendlier programming interface and is compatible with OSS. Developers can use the drivers through this higher-level API without calling the kernel driver interfaces directly.

ALSA has the following characteristics:

1) Supports a wide range of sound card devices

2) Modular kernel drivers

3) Supports SMP (symmetric multiprocessing) and multithreading

4) Provides a library for application development

5) Compatible with OSS applications

On a Linux system, ALSA is split into two parts. In the kernel-space device driver layer, ALSA provides alsa-driver, the core of the whole framework. In user space, ALSA provides alsa-lib, which wraps the system-call interface of alsa-driver. An application only needs to call the alsa-lib API to control the underlying audio hardware.

Environment setup

To capture audio with ALSA on Linux, first configure the kernel with ALSA support, then port alsa-lib to the target

Cross-compiling and porting ALSA

Download the alsa-lib and alsa-utils source code

alsa-lib

CC=arm-cortex_a9-linux-gnueabi-gcc ./configure --host=arm-linux --prefix=/home/swann/SDK/EXYNOS6818/SDK/alsa-lib/

make

make install

alsa-utils

CC=arm-cortex_a9-linux-gnueabi-gcc ./configure --prefix=/home/swann/SDK/EXYNOS6818/SDK/alsa-util/ --host=arm-linux --with-alsa-inc-prefix=/home/swann/SDK/EXYNOS6818/SDK/alsa-lib/include --with-alsa-prefix=/home/swann/SDK/EXYNOS6818/SDK/alsa-lib/lib --disable-alsamixer --disable-xmlto --disable-nls

make

make install

Port the two libraries to the board

Add the following environment variables to the profile:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/lib/alsa-lib/lib

export ALSA_CONFIG_PATH=/usr/lib/alsa-lib/share/alsa/alsa.conf

export ALSA_CONFIG_DIR=/usr/lib/alsa-lib/share/alsa

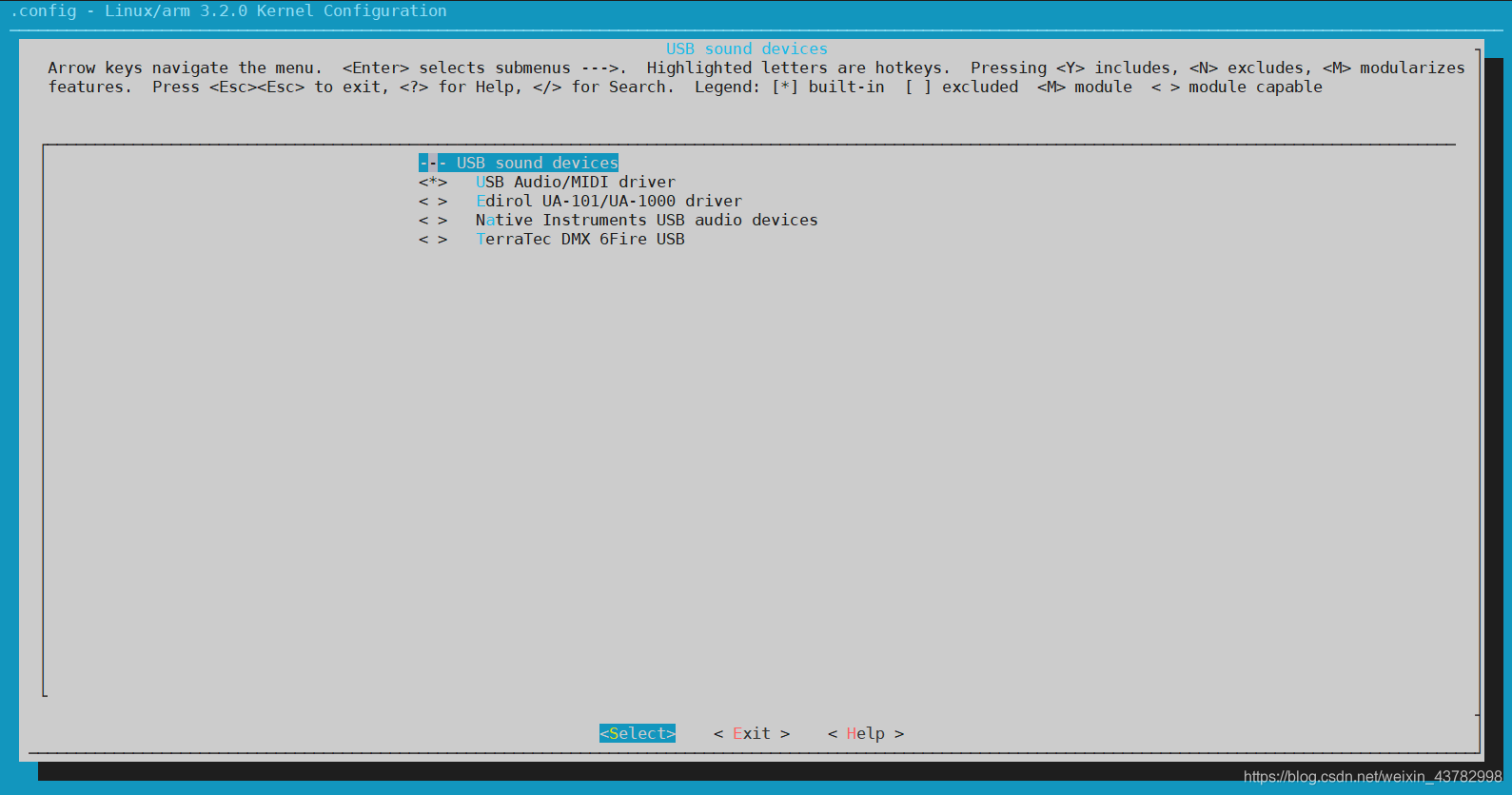

Configuring a USB sound card

Check the sound card devices and test them

List the capture devices:

arecord -l

**** List of CAPTURE Hardware Devices ****

card 0: xxsndcard [xx-snd-card], device 1: TDM_Capture (*) []

Subdevices: 1/1

Subdevice #0: subdevice #0

card 0: xxsndcard [xx-snd-card], device 2: DMIC_Capture (*) []

Subdevices: 1/1

Subdevice #0: subdevice #0

card 0: xxsndcard [xx-snd-card], device 3: AWB_Record (*) []

Subdevices: 1/1

Subdevice #0: subdevice #0

The command above lists the devices that can be used for recording, each identified as card X, device Y

Recording test

Based on the output above, to record from TDM_Capture, run the following command

arecord -Dhw:0,1 -d 10 -f cd -r 44100 -c 2 -t wav test.wav

Parameters

-D selects the recording device. hw:0,1 means card 0, device 1, that is, TDM_Capture

-d sets the recording duration in seconds

-f sets the sample format. Besides explicit formats such as S16_LE, arecord accepts the shortcuts cd (16-bit little-endian, 44100 Hz, stereo), cdr (16-bit big-endian, 44100 Hz, stereo) and dat (16-bit little-endian, 48000 Hz, stereo)

-r sets the sampling rate in Hz

-c sets the number of channels

-t sets the type of the generated file

Play test

Play WAV

aplay test.wav

Play raw data

aplay -t raw -r 44100 -f S16_LE -c 2 decoder.raw

Common audio capture parameters

Three parameters describe a PCM audio stream: sampling rate, bit depth, and number of channels

Sampling rate:

The number of samples captured per second, in Hz

Bit depth:

The size of each individual sample, in bits

Number of channels:

How many signals are captured at the same time; this depends on the hardware

For example:

The sampling rate is 48000 Hz, the bit depth is 16 bits, and the number of channels is 2

With these three parameters we can work out

how much audio data the device produces in one second

48000 * 16 * 2 = 1536000 bits

48000 * 16 * 2 / 8 = 192000 bytes

That is, the device produces 192000 bytes per second
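
The arithmetic above can be written as a small helper. This is only a sketch; the function name `pcm_bytes_per_second` is made up for illustration and is not part of ALSA.

```cpp
#include <cassert>
#include <cstdint>

// Bytes of PCM data produced per second.
// sample_rate:     samples per second per channel (Hz)
// bits_per_sample: bit depth of one sample
// channels:        number of channels captured at the same time
std::uint64_t pcm_bytes_per_second(std::uint32_t sample_rate,
                                   std::uint32_t bits_per_sample,
                                   std::uint32_t channels)
{
    assert(channels > 0);
    // Multiply in 64 bits so large rates cannot overflow, then convert bits to bytes.
    return static_cast<std::uint64_t>(sample_rate) * bits_per_sample * channels / 8;
}
```

For the example values, `pcm_bytes_per_second(48000, 16, 2)` gives 192000.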

Next, how should we understand audio frames? 48000 samples per channel are captured every second, but they are not delivered all at once. They may be delivered in 100 chunks of 480 samples each, or in 50 chunks of 960 samples each. ALSA calls one sample for every channel a frame, and one such chunk a period.

The chunk size depends on the specific hardware, so the driver exposes an interface for it

that returns a minimum buffer size (minbufsize), which tells you how many bytes are fetched per read
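
To make the chunking concrete: splitting one second of 48000 Hz audio into 100 equal chunks gives 480 frames per chunk, where a frame is one sample for every channel. The sketch below computes the frame count and the buffer size for one chunk. The names `frames_per_chunk` and `chunk_bytes` are hypothetical helpers, not ALSA calls.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Frames delivered per chunk when one second of audio is split
// into chunks_per_second equal parts.
std::uint32_t frames_per_chunk(std::uint32_t sample_rate,
                               std::uint32_t chunks_per_second)
{
    assert(chunks_per_second > 0);
    return sample_rate / chunks_per_second;
}

// Bytes needed to hold `frames` interleaved frames.
std::size_t chunk_bytes(std::uint32_t frames,
                        std::uint32_t bits_per_sample,
                        std::uint32_t channels)
{
    assert(bits_per_sample % 8 == 0);
    return static_cast<std::size_t>(frames) * (bits_per_sample / 8) * channels;
}
```

With 1024 frames of 16-bit stereo audio, `chunk_bytes(1024, 16, 2)` is 4096 bytes, which is where a buffer size of four bytes per frame comes from.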

WAV file header

//ckid: 4-byte RIFF flag, uppercase

wavHeader[0] = 'R';

wavHeader[1] = 'I';

wavHeader[2] = 'F';

wavHeader[3] = 'F';

//cksize: 4 bytes, the file length minus 8, that is, excluding the "RIFF" tag (4 bytes) and this length field itself (4 bytes)

wavHeader[4] = (byte)(totalDataLen & 0xff);

wavHeader[5] = (byte)((totalDataLen >> 8) & 0xff);

wavHeader[6] = (byte)((totalDataLen >> 16) & 0xff);

wavHeader[7] = (byte)((totalDataLen >> 24) & 0xff);

//fcc type: 4-byte "WAVE" type block ID, in uppercase

wavHeader[8] = 'W';

wavHeader[9] = 'A';

wavHeader[10] = 'V';

wavHeader[11] = 'E';

//ckid: 4 bytes marking the start of the "fmt " chunk, which holds the format information of the file; lowercase, and the last character is a space

wavHeader[12] = 'f';

wavHeader[13] = 'm';

wavHeader[14] = 't';

wavHeader[15] = ' ';

//cksize: 4 bytes, the size of the format information that follows; 16 (00000010H) for PCM

wavHeader[16] = 0x10;

wavHeader[17] = 0;

wavHeader[18] = 0;

wavHeader[19] = 0;

//FormatTag: 2 bytes, encoding mode of audio data. 1: indicates PCM encoding

wavHeader[20] = 1;

wavHeader[21] = 0;

//Channels: 2 bytes, number of channels, 1 for mono and 2 for dual channels

wavHeader[22] = (byte) channels;

wavHeader[23] = 0;

//SamplesPerSec: 4 bytes, sampling rate, e.g. 44100

wavHeader[24] = (byte)(sampleRate & 0xff);

wavHeader[25] = (byte)((sampleRate >> 8) & 0xff);

wavHeader[26] = (byte)((sampleRate >> 16) & 0xff);

wavHeader[27] = (byte)((sampleRate >> 24) & 0xff);

//BytesPerSec: 4 bytes, audio data rate in bytes per second. Its value is the sampling rate × the size of one sample frame (BlockAlign). Playback software can use this value to estimate the buffer size

//bytePerSecond = sampleRate * (bitsPerSample / 8) * channels

wavHeader[28] = (byte)(bytePerSecond & 0xff);

wavHeader[29] = (byte)((bytePerSecond >> 8) & 0xff);

wavHeader[30] = (byte)((bytePerSecond >> 16) & 0xff);

wavHeader[31] = (byte)((bytePerSecond >> 24) & 0xff);

//BlockAlign: 2 bytes, size of one sample frame in bytes = bits per sample * number of channels / 8. For example, 16-bit stereo gives 4 bytes

//This is also the smallest unit of data alignment: playback software processes data in multiples of this size and can use it to adjust its buffers

wavHeader[32] = (byte)(bitsPerSample * channels / 8);

wavHeader[33] = 0;

//BitsPerSample: 2 bytes, bit depth of each channel, e.g. 16 for 16-bit audio. With multiple channels, every channel uses the same bit depth

wavHeader[34] = (byte) bitsPerSample;

wavHeader[35] = 0;

//ckid: 4 bytes, the "data" tag marking the start of the data chunk, which holds the audio samples; lowercase

wavHeader[36] = 'd';

wavHeader[37] = 'a';

wavHeader[38] = 't';

wavHeader[39] = 'a';

//cksize: length of audio data, 4 bytes, audioDataLen = totalDataLen - 36 = fileLenIncludeHeader - 44

wavHeader[40] = (byte)(audioDataLen & 0xff);

wavHeader[41] = (byte)((audioDataLen >> 8) & 0xff);

wavHeader[42] = (byte)((audioDataLen >> 16) & 0xff);

wavHeader[43] = (byte)((audioDataLen >> 24) & 0xff);
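
The listing above uses `(byte)` casts, which C++ does not have. As a sketch of the same 44-byte layout in C++, the function below fills a header for plain PCM data. The names `make_wav_header`, `put_le16` and `put_le32` are illustrative only, and the code assumes the canonical header with a 16-byte fmt chunk and no extra chunks.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <cstring>

// Store 16-bit and 32-bit values in little-endian byte order.
static void put_le16(std::uint8_t* p, std::uint16_t v)
{
    p[0] = static_cast<std::uint8_t>(v & 0xff);
    p[1] = static_cast<std::uint8_t>((v >> 8) & 0xff);
}

static void put_le32(std::uint8_t* p, std::uint32_t v)
{
    for (int i = 0; i < 4; ++i)
        p[i] = static_cast<std::uint8_t>((v >> (8 * i)) & 0xff);
}

// Build a 44-byte PCM WAV header for audio_data_len bytes of sample data.
std::array<std::uint8_t, 44> make_wav_header(std::uint32_t sample_rate,
                                             std::uint16_t channels,
                                             std::uint16_t bits_per_sample,
                                             std::uint32_t audio_data_len)
{
    assert(bits_per_sample % 8 == 0);
    const std::uint16_t block_align =
        static_cast<std::uint16_t>(channels * (bits_per_sample / 8));
    const std::uint32_t byte_rate = sample_rate * block_align;

    std::array<std::uint8_t, 44> h{};
    std::uint8_t* p = h.data();
    std::memcpy(p + 0, "RIFF", 4);
    put_le32(p + 4, audio_data_len + 36);  // file length minus 8
    std::memcpy(p + 8, "WAVE", 4);
    std::memcpy(p + 12, "fmt ", 4);
    put_le32(p + 16, 16);                  // fmt chunk size for PCM
    put_le16(p + 20, 1);                   // format tag: 1 = PCM
    put_le16(p + 22, channels);
    put_le32(p + 24, sample_rate);
    put_le32(p + 28, byte_rate);
    put_le16(p + 32, block_align);
    put_le16(p + 34, bits_per_sample);
    std::memcpy(p + 36, "data", 4);
    put_le32(p + 40, audio_data_len);
    return h;
}
```

Writing the bytes one by one keeps the result independent of the host byte order and of struct padding.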

Implementing recording and playback in code

audio.cpp

#include "audio.h"

void audio::audio_write_frame(unsigned char* data)

{

int ret;

if(audio_type!=AUDIO_SPEAKER){

fprintf(stderr,"IT'S NOT A SPEAKER \r\n");

return;

}

buffer_out=data;

ret = snd_pcm_writei(capture_handle, buffer_out, frame_size);

if (ret == -EPIPE) {

/* EPIPE means underrun */

fprintf(stderr, "underrun occurred\n");

snd_pcm_prepare(capture_handle);

} else if (ret < 0) {

fprintf(stderr,"error from writei: %s\n",snd_strerror(ret));

}

}

void audio::audio_close_speaker(void)

{

if(audio_type!=AUDIO_SPEAKER){

fprintf(stderr,"IT'S NOT A SPEAKER \r\n");

return;

}

snd_pcm_drain(capture_handle);

snd_pcm_close(capture_handle);

}

void audio::audio_open_speaker(void)

{

if(audio_type!=AUDIO_SPEAKER){

fprintf(stderr,"IT'S NOT A SPEAKER \r\n");

return;

}

int ret,dir=0;

/* Open PCM device for playback. */

ret = snd_pcm_open(&capture_handle, audio_path.c_str(),SND_PCM_STREAM_PLAYBACK, 0);

if (ret < 0) {

fprintf(stderr,"unable to open pcm device: %s\n",snd_strerror(ret));

exit(1);

}

/* Allocate a hardware parameters object. */

snd_pcm_hw_params_alloca(&hw_params);

/* Fill it in with default values. */

snd_pcm_hw_params_any(capture_handle, hw_params);

/* Set the desired hardware parameters. */

/* Interleaved mode */

snd_pcm_hw_params_set_access(capture_handle, hw_params,SND_PCM_ACCESS_RW_INTERLEAVED);

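/* Sample format chosen in the constructor (signed 16-bit little-endian by default) */

snd_pcm_hw_params_set_format(capture_handle, hw_params,format);

/* Channel count chosen in the constructor (two channels by default) */

snd_pcm_hw_params_set_channels(capture_handle, hw_params, channel);

/* Sampling rate: use the supported rate nearest to sample_rate */

snd_pcm_hw_params_set_rate_near(capture_handle, hw_params,&sample_rate, &dir);

/* Write the parameters to the driver. */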

ret = snd_pcm_hw_params(capture_handle, hw_params);

if (ret < 0) {

fprintf(stderr,"unable to set hw parameters: %s\n",snd_strerror(ret));

exit(1);

}

}

/**

* @description: Turn off the microphone

* @param {*}

* @return {*}

* @author: YURI

*/

void audio::audio_close_micophone(void){

if(audio_type!=AUDIO_MICPHONE){

fprintf(stderr,"IT'S NOT A MICPHONE \r\n");

return;

}

// Free the data buffer and clear the pointer so it cannot be freed twice

free(buffer_in);

buffer_in=NULL;

fprintf(stdout, "buffer_in freed\n");

// Close the capture device

snd_pcm_close (capture_handle);

fprintf(stdout, "audio interface closed\n");

}

/**

* @description: Turn on the microphone and set the parameters

* @param {*}

* @return {*}

* @author: YURI

*/

void audio::audio_open_micophone(void){

if(audio_type!=AUDIO_MICPHONE){

fprintf(stderr,"IT'S NOT A MICPHONE \r\n");

return;

}

int err;

// Open the capture device; print an error and exit if it cannot be opened

if ((err = snd_pcm_open (&capture_handle, audio_path.c_str(), SND_PCM_STREAM_CAPTURE, 0)) < 0)

{

fprintf (stderr, "cannot open audio device %s (%s)\n", audio_path.c_str(), snd_strerror (err));

exit (1);

}

fprintf(stdout, "audio interface opened\n");

// Allocate a hardware parameter object and check the result

if ((err = snd_pcm_hw_params_malloc (&hw_params)) < 0)

{

fprintf (stderr, "cannot allocate hardware parameter structure (%s)\n", snd_strerror (err));

exit (1);

}

fprintf(stdout, "hw_params allocated\n");

// Fill the parameter object with the full configuration space of the device and check the result

if ((err = snd_pcm_hw_params_any (capture_handle, hw_params)) < 0)

{

fprintf (stderr, "cannot initialize hardware parameter structure (%s)\n", snd_strerror (err));

exit (1);

}

fprintf(stdout, "hw_params initialized\n");

/*

Set interleaved access and check the result.

Interleaved / non-interleaved describes how multi-channel data is laid out during transfer.

In interleaved mode a single buffer is used, and the samples of the channels alternate within it;

in non-interleaved mode one buffer is allocated per channel, and each channel is transferred separately.

*/

if ((err = snd_pcm_hw_params_set_access (capture_handle, hw_params, SND_PCM_ACCESS_RW_INTERLEAVED)) < 0)

{

fprintf (stderr, "cannot set access type (%s)\n", snd_strerror (err));

exit (1);

}

fprintf(stdout, "hw_params access set\n");

// Set the sample format (signed 16-bit little-endian PCM by default) and check the result

if ((err = snd_pcm_hw_params_set_format (capture_handle, hw_params, format)) < 0)

{

fprintf (stderr, "cannot set sample format (%s)\n", snd_strerror (err));

exit (1);

}

fprintf(stdout, "hw_params format set\n");

// Set the sampling rate and check the result

if ((err = snd_pcm_hw_params_set_rate_near (capture_handle, hw_params, &sample_rate, 0)) < 0)

{

fprintf (stderr, "cannot set sample rate (%s)\n", snd_strerror (err));

exit (1);

}

fprintf(stdout, "hw_params rate set\n");

// Set the channel count chosen in the constructor and check the result

if ((err = snd_pcm_hw_params_set_channels (capture_handle, hw_params, channel)) < 0)

{

fprintf (stderr, "cannot set channel count (%s)\n", snd_strerror (err));

exit (1);

}

fprintf(stdout, "hw_params channels set\n");

// Write the configuration to the driver and check the result

if ((err = snd_pcm_hw_params (capture_handle, hw_params)) < 0)

{

fprintf (stderr, "cannot set parameters (%s)\n", snd_strerror (err));

exit (1);

}

fprintf(stdout, "hw_params set\n");

// Free the hardware parameter object

snd_pcm_hw_params_free (hw_params);

fprintf(stdout, "hw_params freed\n");

// Prepare the audio interface and judge whether it is ready

if ((err = snd_pcm_prepare (capture_handle)) < 0)

{

fprintf (stderr, "cannot prepare audio interface for use (%s)\n", snd_strerror (err));

exit (1);

}

fprintf(stdout, "audio interface prepared\n");

// Allocate a buffer for one read: frame_size frames * bytes per sample * channels

buffer_in = (unsigned char*)malloc(frame_size*snd_pcm_format_width(format) / 8 * channel);

fprintf(stdout, "buffer_in allocated\n");

}

/**

* @description: Read out a frame of data

* @param {*}

* @return {*}

* @author: YURI

*/

unsigned char *audio::audio_read_frame(void)

{

if(audio_type!=AUDIO_MICPHONE){

fprintf(stderr,"IT'S NOT A MICPHONE \r\n");

return NULL;

}

int err;

// read

if ((err = snd_pcm_readi (capture_handle, buffer_in, frame_size)) != frame_size)

{

fprintf (stderr, "read from audio interface failed (%s)\n", snd_strerror (err));

exit (1);

}

return buffer_in;

}

/**

* @description: Separate channel data

* @param {unsigned char **} data one output buffer per channel, each 2*frame_size bytes

* @return {*}

* @author: YURI

*/

void audio::audio_channel_split(unsigned char ** data)

{

if(audio_type!=AUDIO_MICPHONE){

fprintf(stderr,"IT'S NOT A MICPHONE \r\n");

return;

}

// De-interleave 16-bit samples: frame j holds one 2-byte sample per channel

for(int i=0;i<channel;i++){

for(int j=0;j<frame_size;j++){

data[i][j*2]=buffer_in[(j*channel+i)*2];

data[i][j*2+1]=buffer_in[(j*channel+i)*2+1];

}

}

}

/**

* @description: Set up an audio object for a microphone or a speaker

* @param {AUDIO_TYPE} type

* @param {string} audio_tag

* @param {int} channel

* @param {int} sample_rate

* @param {snd_pcm_format_t} pcm_format

* @param {int} frame_size

* @return {*}

* @author: YURI

*/

audio::audio(AUDIO_TYPE type,string audio_tag,int channel,int sample_rate,snd_pcm_format_t pcm_format,int frame_size)

{

this->audio_type=type;

//Set acquisition parameters

this->audio_path=audio_tag;

this->channel=channel;

this->sample_rate=sample_rate;

this->format=pcm_format;

this->frame_size=frame_size;

}

/**

* @description: Destructor; the buffer and the device are released by the audio_close_* functions

* @param {*}

* @return {*}

* @author: YURI

*/

audio::~audio()

{

// Nothing to release here: audio_close_micophone() frees buffer_in and closes

// the capture device, and audio_close_speaker() closes the playback device.

// Freeing buffer_in here as well would release the same pointer twice.

}
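
The byte-level loop in `audio_channel_split` above can also be expressed on typed samples. The following is a minimal sketch that assumes 16-bit samples already converted to `int16_t`; the function name `deinterleave` is made up for this example and is not part of the class.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Split interleaved samples (L0 R0 L1 R1 ...) into one vector per channel.
std::vector<std::vector<std::int16_t>> deinterleave(
    const std::vector<std::int16_t>& interleaved, std::size_t channels)
{
    assert(channels > 0 && interleaved.size() % channels == 0);
    const std::size_t frames = interleaved.size() / channels;
    std::vector<std::vector<std::int16_t>> out(
        channels, std::vector<std::int16_t>(frames));
    for (std::size_t f = 0; f < frames; ++f) {
        for (std::size_t c = 0; c < channels; ++c) {
            // Sample of channel c in frame f sits at index f * channels + c.
            out[c][f] = interleaved[f * channels + c];
        }
    }
    return out;
}
```

The index `f * channels + c` is the sample-level counterpart of the byte offsets used in the listing, where each sample occupies two bytes.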

audio.h

/*

* @Description:

* @Autor: YURI

* @Date: 2022-01-26 18:08:08

* @LastEditors: YURI

* @LastEditTime: 2022-02-04 20:06:39

*/

#ifndef AUDIO_H

#define AUDIO_H

#include <unistd.h>

#include <alsa/asoundlib.h>

#include <string>

#include <stdio.h>

#include <stdlib.h>

#include <iostream>

#define FRAME_INIT 1024 // Default number of frames per read or write

#define MAX_CHANNEL 4

using namespace std;

typedef enum {AUDIO_MICPHONE=0,AUDIO_SPEAKER=1}AUDIO_TYPE;

class audio

{

private:

string audio_path; //ALSA audio equipment

int channel; //Number of channels of acquisition device

unsigned int sample_rate;

snd_pcm_t *capture_handle; // A handle to the PCM device

unsigned char *buffer_in; //Data collected by microphone

unsigned char *buffer_out; //Data played by the speaker

snd_pcm_hw_params_t *hw_params; // This structure contains information about the hardware and can be used to specify the configuration of the PCM stream

snd_pcm_format_t format ; // Sampling bits: 16bit, LE format

AUDIO_TYPE audio_type;

public:

int frame_size;

audio(AUDIO_TYPE type,string audio_tag="default",int channel=2,int sample_rate=48000,snd_pcm_format_t pcm_format=SND_PCM_FORMAT_S16_LE,int frame_size=FRAME_INIT);

~audio();

void audio_open_micophone(void);

unsigned char* audio_read_frame(void);//Read a frame from the microphone

void audio_close_micophone(void);

void audio_channel_split(unsigned char ** data);//Separate channel data. The caller must allocate one output buffer per channel

void audio_open_speaker(void);//Open the speaker

void audio_close_speaker(void);//Close the speaker

void audio_write_frame(unsigned char* data);//Write a frame of data

};

#endif

WAV file read/write interface

audio_wave.cpp

/*

* @Description:

* @Autor: YURI

* @Date: 2022-01-28 00:39:32

* @LastEditors: YURI

* @LastEditTime: 2022-02-04 08:03:03

*/

#include "audio_wave.h"

/**

* @description: Initialization used when saving wav files

* @param {string} filepath

* @param {int} rate

* @param {int} bit_rate

* @param {int} channel

* @return {*}

* @author: YURI

*/

audio_wave::audio_wave(WAVE_FILE_MODE mode,string filepath,int rate,int bit_rate,int channel)

{

if(mode!=WAVE_FILE_WRITE_MODE){

fprintf(stderr,"DON'T SUPPORT THIS MODE \r\n");

return;

}

this->sample_rate=rate;

this->bit_rate=bit_rate;

this->channel=channel;

this->file_path=filepath;

this->data_length=0;

audio_wave_head.bit_rate=16; // Size of the fmt chunk: always 16 for PCM, whatever the bit depth

audio_wave_head.bits_per_sample=bit_rate;

audio_wave_head.channel=channel;

audio_wave_head.sample_rate=sample_rate;

audio_wave_head.byte_rate=sample_rate*(bit_rate/8)*channel;

audio_wave_head.block_align=(bit_rate/8)*channel;

}

/**

* @description: Open file

* @param {*}

* @return {*}

* @author: YURI

*/

void audio_wave::audio_write_start(){

file_fd=fopen(file_path.c_str(),"wb");

// Leave room for the 44-byte header; audio_write_end() writes it once the data length is known

fseek(file_fd,44,SEEK_SET);

data_length=0;

}

/**

* @description: Write a frame to the file

* @param {unsigned char*} frame

* @param {int} size

* @return {*}

* @author: YURI

*/

void audio_wave::audio_write_frame(unsigned char* frame,int size){

fwrite(frame,size,1,file_fd);

data_length+=size;

}

/**

* @description: End wav file

* @param {*}

* @return {*}

* @author: YURI

*/

void audio_wave::audio_write_end(void)

{

audio_wave_head.data_length=data_length;

audio_wave_head.wave_length=data_length+44-8;

fseek(file_fd,0,SEEK_SET);

fwrite(&audio_wave_head,sizeof(audio_wave_head),1,file_fd);

fclose(file_fd);

}

/**

* @description: Initialization function when reading files

* @param {string} filepath

* @return {*}

* @author: YURI

*/

audio_wave::audio_wave(WAVE_FILE_MODE mode,string filepath)

{

if(mode!=WAVE_FILE_READ_MODE){

fprintf(stderr,"DON'T SUPPORT THIS MODE \r\n");

return;

}

this->file_path=filepath;

file_fd =fopen(file_path.c_str(),"rb");

}

/**

* @description: Start reading, read file header

* @param {*}

* @return {*}

* @author: YURI

*/

void audio_wave::audio_read_start(void)

{

fread((void*)&audio_wave_head,44,1,file_fd);

if(audio_wave_head.wave_header[0]!='R' ){

fprintf(stderr,"THE FILE IS NOT WAV \r\n");

return;

}

printf("CHANNEL %d \r\n",audio_wave_head.channel);

printf("SAMPLE_RATE %d \r\n",audio_wave_head.sample_rate);

}

/**

* @description: Read a frame from the file

* @param {unsigned char*} frame

* @param {int} size

* @return {*}

* @author: YURI

*/

int audio_wave::audio_read_frame(unsigned char* frame,int size)

{

int ret= fread((void*)frame,size,1,file_fd);

return ret;

}

/**

* @description: End reading close file

* @param {*}

* @return {*}

* @author: YURI

*/

void audio_wave::audio_read_end(void){

fclose(file_fd);

}

audio_wave.h

/*

* @Description: Provide interfaces for saving audio as wav files and reading wav files

* @Autor: YURI

* @Date: 2022-01-28 00:39:43

* @LastEditors: YURI

* @LastEditTime: 2022-02-04 07:09:18

*/

#ifndef AUDIO_WAVE_H

#define AUDIO_WAVE_H

#include "stdio.h"

#include <string>

using namespace std;

typedef struct _wave_header_t {

char wave_header[4]; //"RIFF" tag

int wave_length; //Length of audio data + 44 - 8

char format[8]; //"WAVE" followed by "fmt "

int bit_rate; //Size of the fmt chunk: 16 for PCM

short pcm; //Audio data encoding: 1 = PCM

short channel; //Number of channels

int sample_rate; //sampling rate

int byte_rate; //Bytes per second = sampling rate × block_align

short block_align; //The size of each sampling = sampling accuracy * number of channels / 8 (in bytes). For example, the value of 16bit stereo here is 4 bytes

short bits_per_sample; //Sampling accuracy of each channel; For example, the value of 16bit here is 16

char fix_data[4]; //"data"

int data_length; //Length of audio data

} wave_t;

typedef enum {WAVE_FILE_READ_MODE=0,WAVE_FILE_WRITE_MODE=1}WAVE_FILE_MODE;

class audio_wave

{

private:

FILE* file_fd;

string file_path;

int channel;

int data_length;

int sample_rate;

int bit_rate;

wave_t audio_wave_head={

{'R', 'I', 'F', 'F'},

( int)-1,

{'W', 'A', 'V', 'E', 'f', 'm', 't', ' '},

16, // fmt chunk size for PCM

0x01, // PCM

0, // channel count: set by the write constructor or read from the file

0, // sampling rate

0, // byte rate

0, // block align

0, // bits per sample

{'d', 'a', 't', 'a'},

(int)-1

};

public:

audio_wave(WAVE_FILE_MODE mode,string filepath,int rate,int bit_rate=16,int channel=2);//Initialization function for writing data

audio_wave(WAVE_FILE_MODE mode,string filepath);//Read initialization function of WAV

~audio_wave(){};

//Open file

void audio_write_start();

//Write a frame to the file

void audio_write_frame(unsigned char* frame,int size);

//End wav file

void audio_write_end(void);

//Start preparing to read wav file

void audio_read_start(void);

//Read in a frame file

int audio_read_frame(unsigned char* frame,int size);

//End read in

void audio_read_end(void);

};

#endif
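
Because `wave_t` is written to and read from the file as raw bytes, its in-memory layout has to match the 44-byte header exactly. As a sketch of one way to make that assumption explicit, the struct below uses fixed-width types and compile-time checks. `WavHeader` and `looks_like_pcm_wav` are hypothetical names, and the code assumes a little-endian host, as the original struct does.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// 44-byte canonical PCM WAV header with fixed-width fields.
struct WavHeader {
    char          riff[4];         // "RIFF"
    std::uint32_t riff_size;       // file length minus 8
    char          wave_fmt[8];     // "WAVE" followed by "fmt "
    std::uint32_t fmt_size;        // 16 for PCM
    std::uint16_t audio_format;    // 1 = PCM
    std::uint16_t channels;
    std::uint32_t sample_rate;
    std::uint32_t byte_rate;       // sample_rate * block_align
    std::uint16_t block_align;     // channels * bits_per_sample / 8
    std::uint16_t bits_per_sample;
    char          data_tag[4];     // "data"
    std::uint32_t data_size;       // length of the audio data in bytes
};

// Fail the build if padding or type sizes break the on-disk layout.
static_assert(sizeof(WavHeader) == 44, "header must be exactly 44 bytes");
static_assert(offsetof(WavHeader, data_size) == 40, "data size must sit at offset 40");

// True when all tags identify a RIFF/WAVE file carrying PCM data.
bool looks_like_pcm_wav(const WavHeader& h)
{
    return std::memcmp(h.riff, "RIFF", 4) == 0 &&
           std::memcmp(h.wave_fmt, "WAVEfmt ", 8) == 0 &&
           std::memcmp(h.data_tag, "data", 4) == 0 &&
           h.audio_format == 1;
}
```

Checking every tag is stricter than testing only the first character of the file, and it rejects files whose header carries extra chunks before the data.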

Recording and playback test programs

Usage

./xxx hw:0,0 ./file

audio_record

/*

* @Description: Record and save as two WAV files respectively

* @Autor: YURI

* @Date: 2022-01-27 07:33:35

* @LastEditors: YURI

* @LastEditTime: 2022-02-04 20:38:57

*/

#include <stdio.h>

#include <stdlib.h>

#include <alsa/asoundlib.h>

#include "audio.h"

#include "audio_wave.h"

#include "signal.h"

#include <string>

using namespace std;

audio_wave *audio_wave1,*audio_wave2,*audio_wave3;

// Set by the SIGINT handler; the main loop checks it and finishes the files itself

volatile sig_atomic_t stop_requested=0;

void record_stop(int signo)

{

(void)signo;

stop_requested=1;

}

int main (int argc, char *argv[])

{

if(argc<3){

fprintf(stderr,"usage: %s <capture device> <output path prefix>\n",argv[0]);

return 1;

}

string mico_path=string(argv[1]);

string save_path=string(argv[2]);

int channel =2;

unsigned int rate = 48000; // Sampling frequency: 48000 Hz

snd_pcm_format_t format = SND_PCM_FORMAT_S16_LE; // Sampling bits: 16bit, LE format

signal(SIGINT, record_stop);

audio* aud=new audio(AUDIO_MICPHONE,mico_path,channel,rate,format);

aud->audio_open_micophone();

unsigned char * buffer;

unsigned char * data[2];

// One mono buffer per channel: 2 bytes per 16-bit sample

data[0]=(unsigned char*)malloc(2*aud->frame_size) ;

data[1]=(unsigned char*)malloc(2*aud->frame_size) ;

// Channel 0, channel 1 and the interleaved stereo stream go to separate files

audio_wave1=new audio_wave(WAVE_FILE_WRITE_MODE,save_path+"_0.wav",rate,16,1);

audio_wave1->audio_write_start();

audio_wave2=new audio_wave(WAVE_FILE_WRITE_MODE,save_path+"_1.wav",rate,16,1);

audio_wave2->audio_write_start();

audio_wave3=new audio_wave(WAVE_FILE_WRITE_MODE,save_path+".wav",rate,16,2);

audio_wave3->audio_write_start();

// Collect up to 300 frames of PCM data, or stop early on Ctrl+C

for(int i=0;i<300 && !stop_requested;i++)

{

buffer=aud->audio_read_frame();

aud->audio_channel_split(data);

audio_wave1->audio_write_frame(data[0],2*aud->frame_size);

audio_wave2->audio_write_frame(data[1],2*aud->frame_size);

audio_wave3->audio_write_frame(buffer,4*aud->frame_size);

}

printf("end \r\n");

// Write the headers and close the files exactly once

audio_wave1->audio_write_end();

audio_wave2->audio_write_end();

audio_wave3->audio_write_end();

aud->audio_close_micophone();

free(data[0]);

free(data[1]);

return 0;

}

audio_play

/*

* @Description: Read WAV file and play

* @Autor: YURI

* @Date: 2022-02-04 06:55:42

* @LastEditors: YURI

* @LastEditTime: 2022-02-04 20:51:15

*/

#include <stdio.h>

#include <stdlib.h>

#include <alsa/asoundlib.h>

#include "audio.h"

#include "audio_wave.h"

#include "signal.h"

#include <string>

using namespace std;

audio_wave *audio_wave1;

unsigned char* buffer;

audio *aud;

int main(int argc,char** argv)

{

if(argc<3){

fprintf(stderr,"usage: %s <playback device> <wav file>\n",argv[0]);

return 1;

}

string speaker_path=string(argv[1]);

int channel =2;

unsigned int rate = 48000; // Sampling frequency: 48000 Hz

snd_pcm_format_t format = SND_PCM_FORMAT_S16_LE; // Sampling bits: 16bit, LE format

aud=new audio(AUDIO_SPEAKER,speaker_path,channel,rate,format);

aud->audio_open_speaker();

audio_wave1=new audio_wave(WAVE_FILE_READ_MODE,string(argv[2]));

audio_wave1->audio_read_start();

// One chunk of 16-bit stereo audio: 4 bytes per frame

buffer=(unsigned char*)malloc(4*aud->frame_size);

while(audio_wave1->audio_read_frame(buffer,4*aud->frame_size) >0){

aud->audio_write_frame(buffer);

}

aud->audio_close_speaker();

audio_wave1->audio_read_end();

free(buffer);

return 0;

}
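
One detail of the playback loop is worth noting. `audio_read_frame` calls `fread(frame, size, 1, file_fd)`, which counts whole items of `size` bytes, so it returns 0 when the file ends with a shorter chunk and the loop skips that tail. The sketch below shows one possible alternative: read with an item size of 1 and pad the remainder with zeros, which is silence for signed PCM. The function `read_chunk_padded` is a hypothetical helper, not part of the `audio_wave` class.

```cpp
#include <cstddef>
#include <cstdio>
#include <cstring>

// Read up to `size` bytes into `frame` and zero-fill whatever is missing.
// Returns the number of bytes actually read; 0 means end of file.
std::size_t read_chunk_padded(std::FILE* fp, unsigned char* frame, std::size_t size)
{
    // With an item size of 1, fread reports bytes, so a short final chunk is kept.
    const std::size_t got = std::fread(frame, 1, size, fp);
    if (got > 0 && got < size) {
        std::memset(frame + got, 0, size - got);
    }
    return got;
}
```

A loop of the form `while (read_chunk_padded(fp, buffer, bytes) > 0)` would then also play the last partial chunk, followed by a short stretch of silence.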