Reprinted from AI Studio

Project link https://aistudio.baidu.com/aistudio/projectdetail/3352265

Competition background

Competition link: http://data.sd.gov.cn/cmpt/cmptDetail.html?id=67

Urban grid management is to divide the urban management area into unit grids according to certain standards. By strengthening the inspection of the cell grid, a management and service mode with mutual separation of supervision and disposal is established. In order to take the initiative to find problems, deal with problems in time, and strengthen the management ability and problem handling speed of the city.

By deeply mining all kinds of resource data, using big data ideas and tools, focusing on information discovery, analysis and utilization, refining the massive data value in the database, changing passive services into active services, and creating a solid data support foundation for the actual business operation process. Starting from the real scene and practical application, this competition will add more challenging and practical tasks. We hope that the contestants can learn from each other and make common progress in these tasks.

Competition task

Based on the grid event data, the event content in the grid is extracted and analyzed, and the categories of events are divided. Specifically, the government types of events are divided according to the event description provided.

No external data can be used in this competition. The competition adopts AB list. The time of list A is from the opening and submission of competition questions to January 18, 2022, and the time of List B is from January 19, 2022 to January 21, 2022.

Competition data

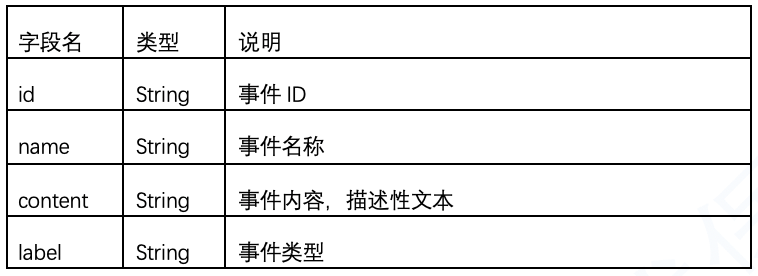

Download data is provided for this competition. Players can debug the algorithm locally and submit the results on the competition page. The contest will provide up to 28000 pieces of data, including training sets and test sets. The data shall be subject to the actual provision. The training data set data samples are as follows:

The test set data sample does not contain a label field. In order to ensure the fairness of the competition, this competition is only allowed to use official data and labels, otherwise the competition results will be deemed invalid.

Results the evaluation index is the classification accuracy: that is, the number of work orders correctly classified / the number of all work orders.

# Install ernie !pip install paddle-ernie > log.log

import numpy as np import paddle as P from ernie.tokenizing_ernie import ErnieTokenizer from ernie.modeling_ernie import ErnieModel

import sys import numpy as np import pandas as pd from sklearn.metrics import f1_score import paddle as P from ernie.tokenizing_ernie import ErnieTokenizer from ernie.modeling_ernie import ErnieModelForSequenceClassification

# Read the data set and splice the text

train_df = pd.read_csv('train.csv', sep=',')

train_df = train_df.dropna()

train_df['content'] = train_df['name'] + ',' + train_df['content']

test_df = pd.read_csv('testa_nolabel.csv', sep=',')

test_df['content'] = test_df['name'] + ',' + test_df['content']

train_df.head()

| id | name | content | label | |

|---|---|---|---|---|

| 0 | 0 | There is grass in the canal | There is grass in the canal. At 8:40 a.m. on September 9, * * * village grid member * * * found a row when he visited the north head of our village | 0 |

| 1 | 1 | Clear the sundries in the corridor | Clean up the sundries in the corridor and the sundries in the corridor within the jurisdiction | 0 |

| 2 | 2 | Street lamp repair | On September 8, 2020, * * * village grid member * * * found that our village | 0 |

| 3 | 3 | Shop investigation | Shop inspection: on February 1, 2021, the * * * seventh grid member * * * inspected the shops in * * * community for potential safety hazards. | 0 |

| 4 | 4 | Clean up the feces on the north side of * * * 4 * * * | Clean up the feces on the north side of * * * 4 * * * at 8:10 on September 7, 2020 * * * community neighborhood committee * * * first grid * * * | 0 |

train_df['content'].apply(len).mean()

80.11235733963007

# Encode dataset text

def make_data(df):

data = []

for i, row in enumerate(df.iterrows()):

text, label = row[1].content, row[1].label

text_id, _ = tokenizer.encode(text) # ErnieTokenizer will automatically add special tokens required by ERNIE, such as [CLS], [SEP]

text_id = text_id[:MAX_SEQLEN]

text_id = np.pad(text_id, [0, MAX_SEQLEN-len(text_id)], mode='constant')

data.append((text_id, label))

return data

# Build the dataset text into batch

def get_batch_data(data, i):

d = data[i*BATCH: (i + 1) * BATCH]

feature, label = zip(*d)

feature = np.stack(feature) # Integrate BATCH row samples into a numpy In array

label = np.stack(list(label))

feature = P.to_tensor(feature) # Use to_variable will numpy Convert array to paddle tensor

label = P.to_tensor(label)

return feature, label

from sklearn.model_selection import StratifiedKFold

skf = StratifiedKFold(n_splits=6)

# Model hyperparameter

BATCH=32

MAX_SEQLEN=300

LR=5e-5

EPOCH=13

# Multi fold training

fold_idx = 0

for train_idx, val_idx in skf.split(train_df['label'], train_df['label']):

print(train_idx, val_idx)

# ernie classification model

ernie = ErnieModelForSequenceClassification.from_pretrained('ernie-1.0', num_labels=25)

# Gradient clipping

clip = P.nn.ClipGradByValue(min=-0.1, max=0.1)

# Model optimizer

optimizer = P.optimizer.Adam(LR,parameters=ernie.parameters(), grad_clip=clip)

tokenizer = ErnieTokenizer.from_pretrained('ernie-1.0')

train_data = make_data(train_df.iloc[train_idx])

val_data = make_data(train_df.iloc[val_idx])

# Training and verification of single epoch model

for i in range(EPOCH):

np.random.shuffle(train_data)

ernie.train()

best_val_acc = 0

for j in range(len(train_data) // BATCH):

feature, label = get_batch_data(train_data, j)

loss, _ = ernie(feature, labels=label)

loss.backward()

optimizer.minimize(loss)

ernie.clear_gradients()

if j % 50 == 0:

print('Train %d/%d: loss %.5f' % (j, len(train_data) // BATCH, loss.numpy()))

# evaluate

if j % 100 == 0:

all_pred, all_label = [], []

with P.no_grad():

ernie.eval()

for j in range(len(val_data) // BATCH):

feature, label = get_batch_data(val_data, j)

loss, logits = ernie(feature, labels=label)

all_pred.extend(logits.argmax(-1).numpy())

all_label.extend(label.numpy())

ernie.train()

acc = (np.array(all_label) == np.array(all_pred)).astype(np.float32).mean()

if acc > best_val_acc:

best_val_acc = acc

P.save(ernie.state_dict(), str(fold_idx) + '.bin')

print('Val acc %.5f' % acc)

fold_idx += 1

downloading https://ernie-github.cdn.bcebos.com/model-ernie1.0.1.tar.gz: 1%| | 8597/788477 [00:00<00:20, 38223.93KB/s]

[ 2653 2679 2722 ... 15243 15244 15245] [ 0 1 2 ... 3958 4443 4471]

downloading https://ernie-github.cdn.bcebos.com/model-ernie1.0.1.tar.gz: 788478KB [00:13, 59626.31KB/s]

W1229 14:33:03.098490 102 device_context.cc:404] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1

W1229 14:33:03.103865 102 device_context.cc:422] device: 0, cuDNN Version: 7.6.

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/ernie/modeling_ernie.py:296: DeprecationWarning: The 'warn' method is deprecated, use 'warning' instead

log.warn('param:%s not set in pretrained model, skip' % k)

[WARNING] 2021-12-29 14:33:11,718 [modeling_ernie.py: 296]: param:classifier.weight not set in pretrained model, skip

[WARNING] 2021-12-29 14:33:11,719 [modeling_ernie.py: 296]: param:classifier.bias not set in pretrained model, skip

Train 0/381: loss 3.55544

Val acc 0.02072

Train 50/381: loss 1.88277

Train 100/381: loss 1.69001

Val acc 0.58717

Train 150/381: loss 1.36154

Train 200/381: loss 1.21011

Val acc 0.62204

Train 250/381: loss 1.08967

Train 300/381: loss 1.02198

Val acc 0.61711

Train 350/381: loss 1.18056

Train 0/381: loss 1.07412

Val acc 0.64671

Train 50/381: loss 1.13818

Train 100/381: loss 1.13416

Val acc 0.64539

Train 150/381: loss 1.46287

Train 200/381: loss 0.87446

Val acc 0.65658

Train 250/381: loss 0.71587

Train 300/381: loss 0.92604

Val acc 0.64803

Train 350/381: loss 1.28124

Train 0/381: loss 0.72489

Val acc 0.66546

Train 50/381: loss 0.74182

Train 100/381: loss 1.04708

Val acc 0.68125

Train 150/381: loss 0.99416

Train 200/381: loss 0.53880

Val acc 0.67599

Train 250/381: loss 1.10793

Train 300/381: loss 0.98753

Val acc 0.68257

Train 350/381: loss 0.94299

Train 0/381: loss 0.50831

Val acc 0.68322

Train 50/381: loss 0.66269

Train 100/381: loss 0.57772

Val acc 0.68684

Train 150/381: loss 0.43587

Train 200/381: loss 0.70022

Val acc 0.67862

Train 250/381: loss 0.65823

Train 300/381: loss 0.63757

Val acc 0.68191

Train 350/381: loss 0.33489

Train 0/381: loss 0.57369

Val acc 0.68980

Train 50/381: loss 0.48750

Train 100/381: loss 0.60838

Val acc 0.68717

Train 150/381: loss 0.22357

Train 200/381: loss 0.73708

Val acc 0.67928

Train 250/381: loss 0.51203

Train 300/381: loss 0.63198

Val acc 0.68520

Train 350/381: loss 0.27435

Train 0/381: loss 0.49044

Val acc 0.66579

Train 50/381: loss 0.29290

Train 100/381: loss 0.35392

Val acc 0.69112

Train 150/381: loss 0.50911

Train 200/381: loss 0.50242

Val acc 0.68520

Train 250/381: loss 0.34875

Train 300/381: loss 0.50026

Val acc 0.69342

Train 350/381: loss 0.60847

Train 0/381: loss 1.38546

Val acc 0.59408

Train 50/381: loss 0.66777

Train 100/381: loss 0.29971

Val acc 0.69605

Train 150/381: loss 0.10559

Train 200/381: loss 0.13398

Val acc 0.69276

Train 250/381: loss 0.22911

Train 300/381: loss 0.47197

Val acc 0.68224

Train 350/381: loss 0.58273

Train 0/381: loss 0.31320

Val acc 0.67072

Train 50/381: loss 0.27460

Train 100/381: loss 0.59036

Val acc 0.65921

Train 150/381: loss 0.22631

Train 200/381: loss 0.37249

Val acc 0.67467

Train 250/381: loss 0.50558

Train 300/381: loss 0.12548

Val acc 0.68355

Train 350/381: loss 0.46684

Train 0/381: loss 0.27444

Val acc 0.68289

Train 50/381: loss 0.26523

Train 100/381: loss 0.25900

Val acc 0.68059

Train 150/381: loss 0.32721

Train 200/381: loss 0.12822

Val acc 0.68520

Train 250/381: loss 0.24605

Train 300/381: loss 0.59573

Val acc 0.65822

Train 350/381: loss 0.33798

Train 0/381: loss 0.13392

Val acc 0.69211

Train 50/381: loss 0.04590

Train 100/381: loss 0.06209

Val acc 0.68849

Train 150/381: loss 0.17020

Train 200/381: loss 0.05582

Val acc 0.68224

Train 250/381: loss 0.21385

Train 300/381: loss 0.11703

Val acc 0.68224

Train 350/381: loss 0.08684

[ 0 1 2 ... 15243 15244 15245] [2653 2679 2722 ... 7321 7606 7650]

[WARNING] 2021-12-29 15:19:22,368 [modeling_ernie.py: 296]: param:classifier.weight not set in pretrained model, skip

[WARNING] 2021-12-29 15:19:22,369 [modeling_ernie.py: 296]: param:classifier.bias not set in pretrained model, skip

Train 0/381: loss 3.17975

Val acc 0.07105

Train 50/381: loss 1.97960

Train 100/381: loss 1.57657

Val acc 0.54638

Train 150/381: loss 1.33006

Train 200/381: loss 1.07533

Val acc 0.59605

Train 250/381: loss 0.64593

Train 300/381: loss 1.17791

Val acc 0.63026

Train 350/381: loss 1.05878

Train 0/381: loss 1.05816

Val acc 0.63586

Train 50/381: loss 1.06569

Train 100/381: loss 1.12862

Val acc 0.63586

Train 150/381: loss 0.92812

Train 200/381: loss 0.75556

Val acc 0.64836

Train 250/381: loss 0.98756

Train 300/381: loss 0.96614

Val acc 0.65691

Train 350/381: loss 1.00483

Train 0/381: loss 0.66806

Val acc 0.64342

Train 50/381: loss 0.95530

Train 100/381: loss 1.01876

Val acc 0.66480

Train 150/381: loss 0.84060

Train 200/381: loss 1.15432

Val acc 0.65987

Train 250/381: loss 1.05941

Train 300/381: loss 0.98219

Val acc 0.67467

Train 350/381: loss 0.90607

Train 0/381: loss 0.71226

Val acc 0.67566

Train 50/381: loss 0.85803

Train 100/381: loss 0.56040

Val acc 0.67829

Train 150/381: loss 0.66480

Train 200/381: loss 0.80573

Val acc 0.68322

Train 250/381: loss 0.53809

Train 300/381: loss 0.67504

Val acc 0.67993

Train 350/381: loss 0.98953

Train 0/381: loss 0.58755

Val acc 0.68816

Train 50/381: loss 0.73488

Train 100/381: loss 0.84386

Val acc 0.68191

Train 150/381: loss 0.98317

Train 200/381: loss 1.47266

Val acc 0.67434

Train 250/381: loss 0.93344

Train 300/381: loss 0.57652

Val acc 0.68914

Train 350/381: loss 0.50576

Train 0/381: loss 0.50948

Val acc 0.69638

Train 50/381: loss 0.84004

Train 100/381: loss 0.56445

Val acc 0.68586

Train 150/381: loss 0.35402

Train 200/381: loss 0.33610

Val acc 0.68421

Train 250/381: loss 0.77689

Train 300/381: loss 0.34096

Val acc 0.69112

Train 350/381: loss 0.93121

Train 0/381: loss 0.21750

Val acc 0.68355

Train 50/381: loss 0.57992

Train 100/381: loss 0.38603

Val acc 0.68388

Train 150/381: loss 0.15227

Train 200/381: loss 0.52117

Val acc 0.68092

Train 250/381: loss 0.32740

Train 300/381: loss 0.51278

Train 350/381: loss 0.24217

Train 0/381: loss 0.44215

Val acc 0.67961

Train 50/381: loss 0.16680

Train 100/381: loss 0.15252

Val acc 0.68355

Train 150/381: loss 0.31004

Train 200/381: loss 0.18911

Val acc 0.68651

Train 250/381: loss 0.11511

Train 300/381: loss 1.61287

Val acc 0.59211

Train 350/381: loss 0.61160

Train 0/381: loss 0.51912

Val acc 0.64408

Train 50/381: loss 0.36144

Train 100/381: loss 0.18402

Val acc 0.67007

Train 150/381: loss 0.32190

Train 200/381: loss 0.33891

Val acc 0.68158

Train 250/381: loss 0.26074

Train 300/381: loss 0.13387

Val acc 0.68092

Train 350/381: loss 0.26407

Train 0/381: loss 0.31835

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

/tmp/ipykernel_102/2210071886.py in <module>

39 loss, logits = ernie(feature, labels=label)

40

---> 41 all_pred.extend(logits.argmax(-1).numpy())

42 all_label.extend(label.numpy())

43 ernie.train()

KeyboardInterrupt:

import glob

test_df['content'] = test_df['content'].fillna('')

test_df['label'] = 0

test_data = make_data(test_df.iloc[:])

# Model multi fold dependency

test_pred_tta = None

for path in glob.glob('./*.bin'):

ernie = ErnieModelForSequenceClassification.from_pretrained('ernie-1.0', num_labels=25)

ernie.load_dict(P.load(path))

test_pred = []

with P.no_grad():

ernie.eval()

for j in range(len(test_data) // BATCH+1):

feature, label = get_batch_data(test_data, j)

loss, logits = ernie(feature, labels=label)

test_pred.append(logits.numpy())

if test_pred_tta is None:

test_pred_tta = np.vstack(test_pred)

else:

test_pred_tta += np.vstack(test_pred)

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/ernie/modeling_ernie.py:296: DeprecationWarning: The 'warn' method is deprecated, use 'warning' instead

log.warn('param:%s not set in pretrained model, skip' % k)

[WARNING] 2021-12-29 16:04:32,465 [modeling_ernie.py: 296]: param:classifier.weight not set in pretrained model, skip

[WARNING] 2021-12-29 16:04:32,467 [modeling_ernie.py: 296]: param:classifier.bias not set in pretrained model, skip

[WARNING] 2021-12-29 16:05:08,231 [modeling_ernie.py: 296]: param:classifier.weight not set in pretrained model, skip

[WARNING] 2021-12-29 16:05:08,233 [modeling_ernie.py: 296]: param:classifier.bias not set in pretrained model, skip

# Generate submission results

pd.DataFrame({

'id': test_df['id'],

'label': test_pred_tta.argmax(1)

}).to_csv('submit.csv', index=None)

Improvement direction

- The original token Dictionary of the model can be added.

- Confrontation training can be used to increase the accuracy of the model.

- Multiple models can be integrated.