Use jieba, forward maximum matching algorithm and backward maximum matching algorithm to segment words

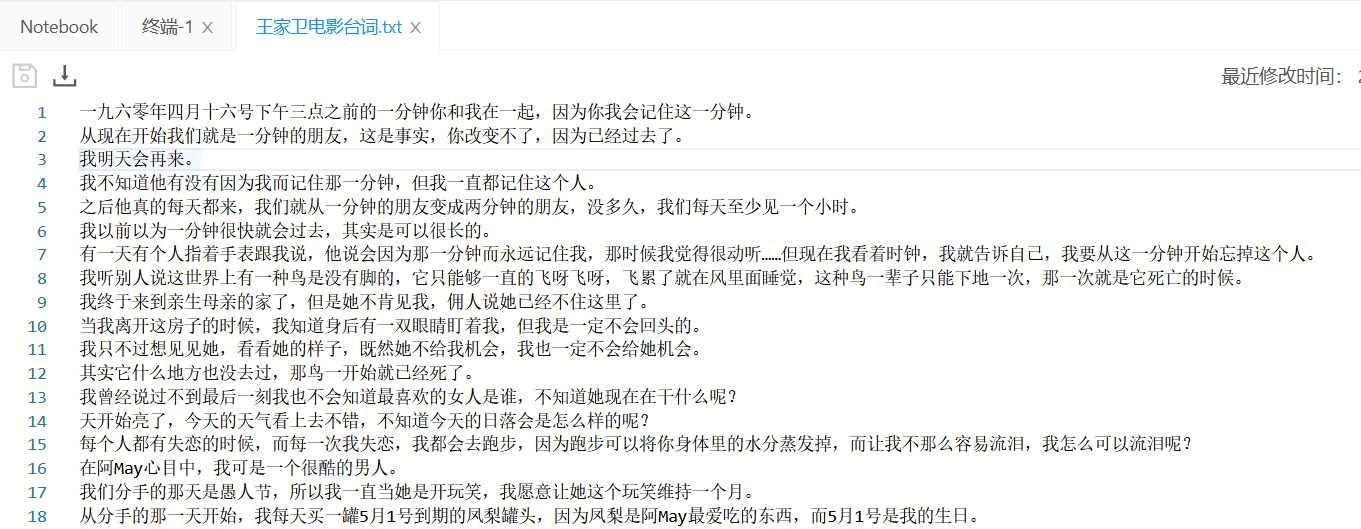

Today, we start word segmentation. I have sorted out some lines in Wong Kar Wai's films, with a total of 79 lines, which are from the more famous "east evil and West poison", "spring light and sudden release" and "Chongqing forest". This data set is like this.

Forward maximum matching algorithm

The idea of this algorithm is very simple. It is to find the longest word matching it in the dictionary from the beginning of the sentence, and then push back until the word matching of the whole sentence is completed.

- Build dictionary

- Loop through matching words

First of all, it's really difficult for me to build a dictionary with so many old words. As a result, it's really difficult for me to use ebjia first

The code is as follows:

import jieba import re import jieba.posseg as pseg filepath1 = 'Wong Kar Wai movie lines.txt' dict=[]

##Create dictionary

with open(filepath1, 'r', encoding = 'utf-8') as sourceFile:

lines = sourceFile.readlines()

for line in lines:

line1 = line.replace(' ','') # Remove spaces from text

pattern = re.compile("[^\u4e00-\u9fa5^a-z^A-Z^0-9]") #Keep only Chinese and English, numbers, and remove symbols

line2= re.sub(pattern,'',line1) #Replace the matching characters in the text with empty characters

seg = jieba.lcut(line2, cut_all = False)

for i in range(len(seg)):

if seg[i] not in dict:

dict.append(seg[i])

print(len(dict))

print(dict)

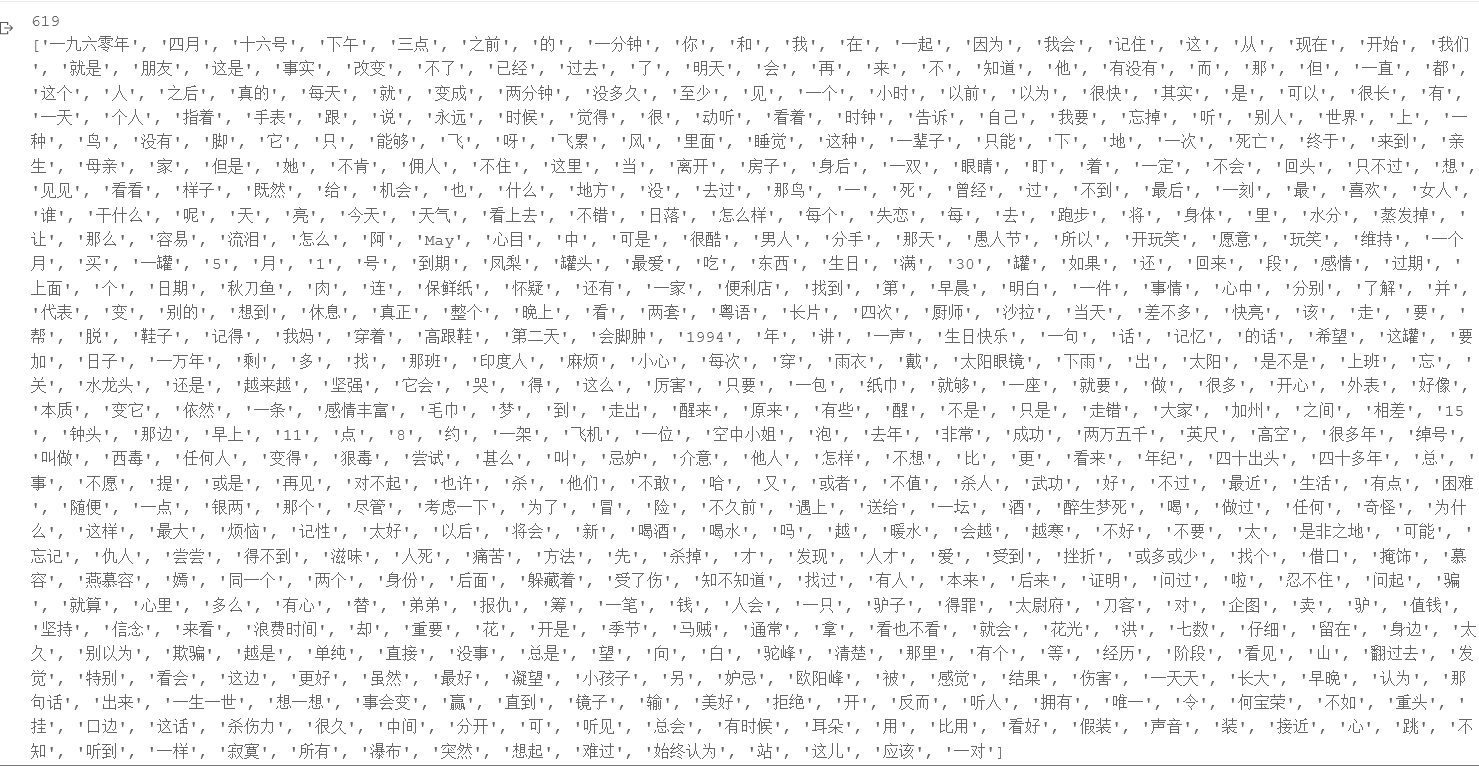

Take a look at this dictionary:

A total of 619 words

Next, you can segment words. Read them line by line:

Next, you can segment words. Read them line by line:

##Forward maximum matching algorithm

def forword_Match(text, Dict):

word_list = []

pi = 0 #initial position

#Find the length of the longest word in the dictionary

m = max([len(word) for word in Dict])

while pi != len(text):

n = len(text[pi:]) #The length of the current pointer to the end of the string

if n < m:

m = n

for index in range(m,0,-1): #Take m Chinese characters as words from the current pi

if text[pi:pi+index] in Dict:

word_list.append(text[pi:pi+index])

pi = pi + index # Modify the pointer pi according to the length of the word

break

print('/'.join(word_list),len(word_list))

##Output the word segmentation result and the number of words in each sentence sentence sentence by sentence

with open(filepath1, 'r', encoding = 'utf-8') as sourceFile:

lines = sourceFile.readlines()

for line in lines:

line1 = line.replace(' ','') # Remove spaces from text

pattern = re.compile("[^\u4e00-\u9fa5^a-z^A-Z^0-9]") #Keep only Chinese and English, numbers, and remove symbols

line2= re.sub(pattern,'',line1) #Replace the matching characters in the text with empty characters

forword_Match(line2, dict)

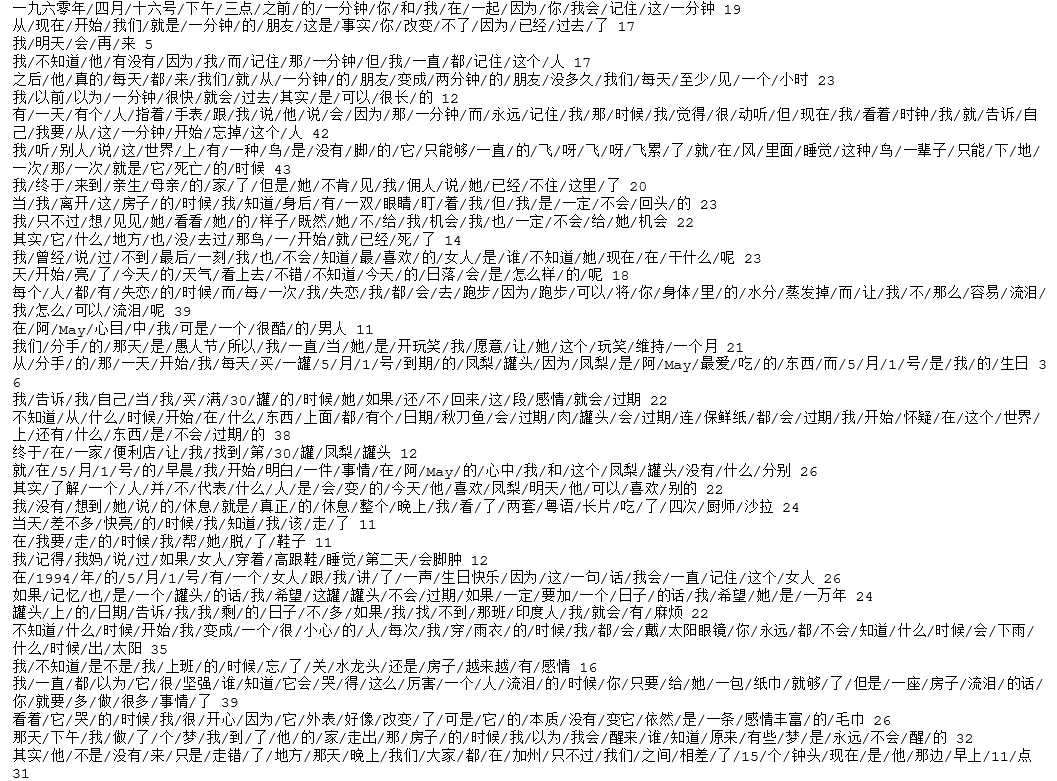

Let's look at the word segmentation results

jieba algorithm

jieba participle is very simple, just direct participle.

##Word by word and sentence by sentence

##Output the word segmentation result and the number of words in each sentence sentence sentence by sentence

with open(filepath1, 'r', encoding = 'utf-8') as sourceFile:

lines = sourceFile.readlines()

for line in lines:

line1 = line.replace(' ','') # Remove spaces from text

pattern = re.compile("[^\u4e00-\u9fa5^a-z^A-Z^0-9]") #Keep only Chinese and English, numbers, and remove symbols

line2= re.sub(pattern,'',line1) #Replace the matching characters in the text with empty characters

seg = jieba.lcut(line2, cut_all = False)

print("/".join(seg),len(seg))

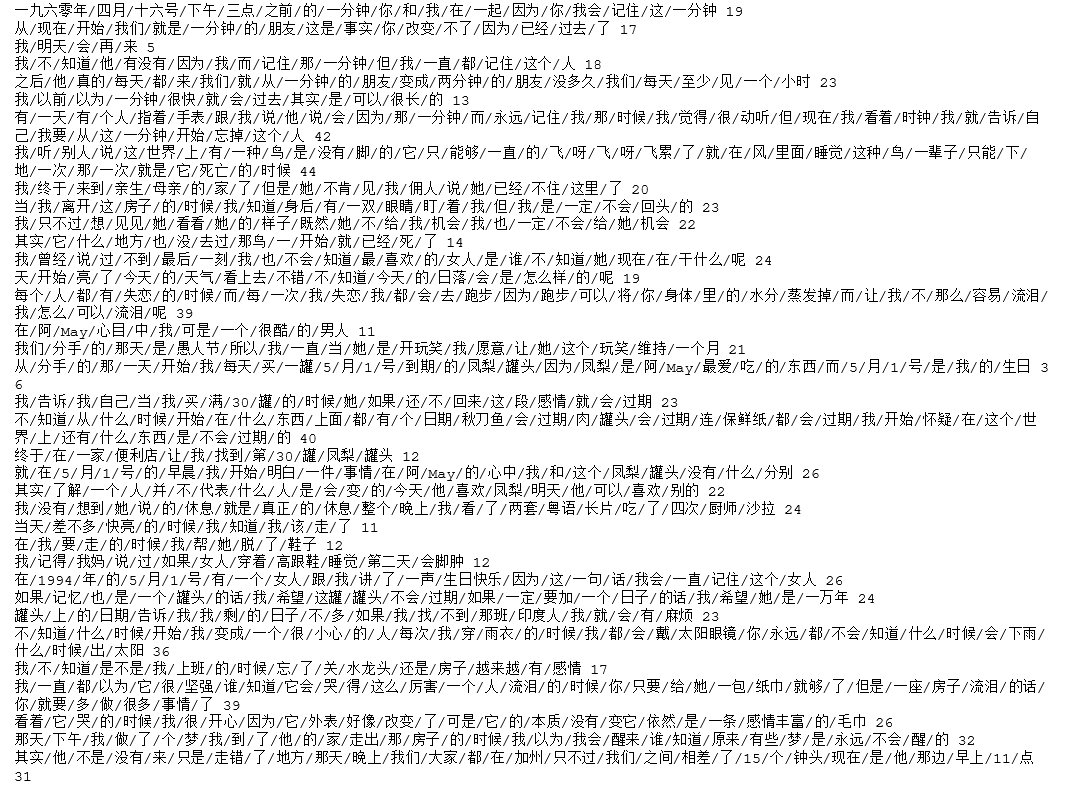

Let's see the results

Backward maximum matching algorithm

##Reverse maximum matching

def back_Match(text, Dict):

word_list = []

pi = len(text) - 1

m = max(len(word) for word in Dict)

while pi >= 0:

n = len(text[0:pi+1])

if n < m:

m = n

for index in range(m-1,-1,-1):

if text[pi-index:pi+1] in Dict:

word_list.append(text[pi-index:pi+1])

pi = pi - index -1

break

print('/'.join(word_list[::-1]))

with open(filepath1, 'r', encoding = 'utf-8') as sourceFile:

lines = sourceFile.readlines()

for line in lines:

line1 = line.replace(' ','') # Remove spaces from text

pattern = re.compile("[^\u4e00-\u9fa5^a-z^A-Z^0-9]") #Keep only Chinese and English, numbers, and remove symbols

line2= re.sub(pattern,'',line1) #Replace the matching characters in the text with empty characters

back_Match(line2, dict)

The complete project and data set have been uploaded to the resources, but I found a problem. Although I have set it to 0 points download, CSDN doesn't know how to operate internally. All of them have become 2 points download, so if you don't have points, you can chat with me privately or comment on me.

This time I set it to 1.9 yuan to download, because I don't quite understand how to operate the points. If I'm not in a hurry to download, I can chat or comment privately. I can send it privately.