Preface

This article uses Python requests + Selenium to crawl nationwide job listings from Zhilian Recruitment (zhaopin.com). If you have read my previous articles, you will know that we already wrote a crawler that uses Selenium alone to scrape Zhilian Recruitment.


My goal is to visit the list pages to collect the links to each job's detail page, and then crawl the data through those links.

1, Get the list page URLs

Below is the URL of a list page: jl is the province code and can be replaced directly, kw is the search keyword, and p is the page number.

Example: https://sou.zhaopin.com/?jl=532&kw=%E6%95%B0%E6%8D%AE%E5%88%86%E6%9E%90&p=1 (the kw value is the UTF-8 percent-encoding of 数据分析, "data analysis")

Generalized: https://sou.zhaopin.com/?jl=<province>&kw=<keyword>&p=<page>
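As a quick illustration of the URL template, the standard library can build these query strings and take care of percent-encoding a Chinese keyword. This is a minimal sketch; `build_list_url` and `BASE_URL` are hypothetical names, and only the `jl`, `kw` and `p` parameters come from the URL above.

```python
from urllib.parse import urlencode

# Base of the list-page URL shown above
BASE_URL = "https://sou.zhaopin.com/"


def build_list_url(jl, keyword, page):
    """Hypothetical helper: build one list-page URL.

    urlencode percent-encodes the keyword as UTF-8, so a Chinese
    keyword such as 数据分析 can be passed in directly.
    """
    query = urlencode({"jl": jl, "kw": keyword, "p": page})
    return BASE_URL + "?" + query


if __name__ == "__main__":
    # Reproduces the example URL above
    print(build_list_url("532", "数据分析", 1))
```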

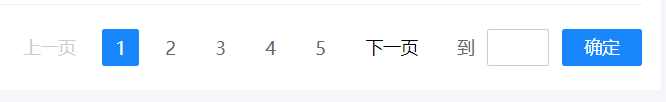

One point is particularly important. Crawling the whole site requires loops, but each province has a different total number of pages. So we must first obtain the total page count for each province, then build the list of list-page URLs from which the detail page links are collected.

However, as the figure above shows, the total number of pages is not displayed. My workaround is to use Selenium to type a value far larger than the real page count into the page-number box and click the jump button; the site then jumps to the last page, whose number is the total page count. Note also that every time a new browser session opens Zhilian Recruitment, it asks you to scan a QR code and log in again, so we save the cookies from the first page visit and reuse them for the following visits.

```python
from time import sleep

from selenium.webdriver.common.by import By


def Get_Page(self):
    page_list = []
    listCookies = []
    print("Start getting the maximum page number for each province")
    for jl in self.jl_list:
        url = "https://sou.zhaopin.com/?jl=%s&kw=%s" % (jl, self.keyword)
        # Reuse the cookies saved on the first visit so we are not asked to log in again
        # (add_cookie only works once the browser is already on the zhaopin.com domain)
        if listCookies:
            for cookie in listCookies[0]:
                self.bro.add_cookie(cookie)
        self.bro.get(url)
        sleep(9)  # time to scan the QR code and let the page render
        listCookies.append(self.bro.get_cookies())
        try:
            self.bro.execute_script('window.scrollTo(0, document.body.scrollHeight)')  # scroll to the bottom
            # Type a value much larger than the real page count into the page-number box
            self.bro.find_element(By.XPATH, '//div[@class="soupager__pagebox"]/input[@type="text"]').send_keys("1000")
            button = self.bro.find_element(By.XPATH, '//div[@class="soupager__pagebox"]/button[@class="soupager__btn soupager__pagebox__gobtn"]')
            self.bro.execute_script("arguments[0].click();", button)
            sleep(2)  # give the page time to jump to the last page
            self.bro.execute_script('window.scrollTo(0, document.body.scrollHeight)')  # scroll to the bottom again
            # After the jump, the last span in the pager holds the maximum page number
            page = self.bro.find_element(By.XPATH, '//div[@class="soupager"]/span[last()]').text
        except Exception as e:
            print(e)
            page = 1  # fall back to a single page if the pager is missing
        page_list.append(page)
    self.bro.quit()
    return page_list
```
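Get_Page only reuses cookies within one browser session, and the browser is closed at the end, so the next run asks for the QR code again. One option is to persist the cookies to disk. The sketch below assumes (not verified here) that Zhilian Recruitment accepts restored session cookies; `save_cookies`, `load_cookies` and the file name `zhaopin_cookies.json` are hypothetical.

```python
import json


def save_cookies(cookies, path):
    """Write the list of cookie dicts returned by driver.get_cookies() to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(cookies, f, ensure_ascii=False)


def load_cookies(path):
    """Read the cookie dicts back so each one can be passed to driver.add_cookie()."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


# Hypothetical usage with a Selenium driver `bro`:
#   save_cookies(bro.get_cookies(), "zhaopin_cookies.json")  # after the first QR-code login
#   bro.get("https://sou.zhaopin.com/")                      # must be on the domain before add_cookie
#   for cookie in load_cookies("zhaopin_cookies.json"):
#       bro.add_cookie(cookie)
#   bro.refresh()
```

Selenium rejects cookies for a domain the browser is not currently on, which is why the sketch loads a zhaopin.com page before adding them.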

Now that we know how the list URL is composed and have the maximum page count for each province, we can generate the list URLs in bulk and precisely. Using "data analysis" as the keyword, I ended up with more than 300 list URLs.

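```python
def Get_list_Url(self):
    list_url = []
    print("Start building list page URLs")
    # Pair each province code with its maximum page number
    for a, b in zip(self.jl_list, self.Get_Page()):
        for i in range(int(b)):
            # p is 1-based, so page i + 1 runs from 1 to the maximum page
            url = "https://sou.zhaopin.com/?jl=%s&kw=%s&p=%d" % (a, self.keyword, i + 1)
            list_url.append(url)
    # Save the URLs so the later steps can be rerun without Selenium
    with open("list_url.txt", "a", encoding="utf-8") as f:
        for url in list_url:
            f.write(url + "\n")
    print("Built %d list page URLs in total" % len(list_url))
    return list_url
```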
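Because Get_list_Url calls Get_Page (and therefore Selenium), the page arithmetic is easier to check when pulled out into a pure function. This is a sketch with a hypothetical name, `build_list_urls`; the province codes and keyword in the usage line are placeholders.

```python
def build_list_urls(jl_list, page_counts, keyword):
    """Return one list-page URL per (province, page), with p running from 1 to the page count."""
    urls = []
    for jl, pages in zip(jl_list, page_counts):
        # page_counts may hold strings scraped from the pager, hence int()
        for p in range(1, int(pages) + 1):
            urls.append("https://sou.zhaopin.com/?jl=%s&kw=%s&p=%d" % (jl, keyword, p))
    return urls


if __name__ == "__main__":
    # Placeholder province codes with 2 and 1 pages respectively
    for u in build_list_urls(["530", "538"], ["2", "1"], "python"):
        print(u)
```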

Now that we have the list URLs, we can visit each one to get the URL of every job detail page.

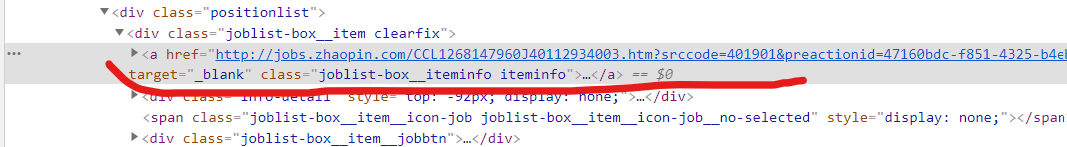

2, Get the detail page URLs

We visit all the list URLs in order to obtain the detail URL of each job posting. Right-clicking an item and inspecting it shows that the detail URL is the href of an a tag inside each job item.

The code for this is as follows:

```python
import requests
from lxml import etree


def Parser_Url(self, url):
    # Fill in your own browser's User-Agent and cookie here
    list_header = {
        'user-agent': '',
        'cookie': ''
    }
    try:
        text = requests.get(url=url, headers=list_header, timeout=10).text
        html = etree.HTML(text)
        # The first <a> of each job item links to the detail page
        urls = html.xpath('//div[@class="joblist-box__item clearfix"]/a[1]/@href')
        with open("detail_url.txt", "a", encoding="utf-8") as f:
            for detail_url in urls:
                f.write(detail_url + "\n")
    except Exception as e:
        print(e)
```
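To try the detail-link XPath without hitting the site, the same selection can be reproduced on a small sample. This sketch swaps lxml for the standard-library `xml.etree.ElementTree`, which supports this subset of XPath but only parses well-formed markup, so `SAMPLE` is a hypothetical, simplified imitation of the list page with placeholder example.com links; real pages still need lxml's HTML parser as in Parser_Url.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified and well-formed imitation of the list-page markup
SAMPLE = """
<html><body>
  <div class="joblist-box__item clearfix">
    <a href="https://example.com/job/1">Job 1</a>
    <a href="https://example.com/company/1">Company 1</a>
  </div>
  <div class="joblist-box__item clearfix">
    <a href="https://example.com/job/2">Job 2</a>
  </div>
  <div class="sidebar"><a href="https://example.com/ad">Ad</a></div>
</body></html>
"""


def extract_detail_links(markup):
    """Return the href of the first <a> inside each job-item div."""
    root = ET.fromstring(markup.strip())
    # Same selection as the lxml XPath: first <a> child of every job item
    links = root.findall(".//div[@class='joblist-box__item clearfix']/a[1]")
    return [a.get("href") for a in links]


if __name__ == "__main__":
    print(extract_detail_links(SAMPLE))
```

The second link in the first item and the sidebar link are skipped, which is what `a[1]` and the class filter are for.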

Next, we read the list URLs back from the file and extract the detail URLs from each list page:

```python
def Get_detail_Url(self):
    print("Start getting detail page URLs")
    with open("list_url.txt", "r", encoding="utf-8") as f:
        # splitlines() avoids the empty entry after the final "\n"
        list_url = f.read().splitlines()
    for url in list_url:
        self.Parser_Url(url)
```
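Since Parser_Url opens detail_url.txt in append mode, rerunning this step writes the same links again. A small standard-library sketch for deduplicating them while keeping their order before fetching; `dedupe_preserving_order` and `load_unique_urls` are hypothetical names.

```python
def dedupe_preserving_order(urls):
    """Drop blank lines and repeated URLs while keeping first-seen order."""
    # dict keys keep insertion order, so fromkeys() removes repeats in order
    return list(dict.fromkeys(u.strip() for u in urls if u.strip()))


def load_unique_urls(path):
    """Read a one-URL-per-line file and return its unique URLs."""
    with open(path, "r", encoding="utf-8") as f:
        return dedupe_preserving_order(f.read().splitlines())
```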

3, Get data

I collected more than 7,000 detail URLs; finally, we can use them to fetch the data.

First, write a function that parses the data:

```python
import csv

from lxml import etree


def Parser_Data(self, text):
    html = etree.HTML(text)
    # Field name -> XPath of the element holding it on the detail page
    fields = {
        "name": '//h3[@class="summary-plane__title"]/text()',
        "salary": '//span[@class="summary-plane__salary"]/text()',
        "city": '//ul[@class="summary-plane__info"]/li[1]/a/text()',
    }
    dic = {}
    for key, xpath in fields.items():
        try:
            dic[key] = html.xpath(xpath)[0]
        except Exception:
            # Element not found on the page: store an empty string
            dic[key] = ""
    # newline="" keeps the csv module from adding blank lines between rows on Windows
    with open(".//zhilian.csv", "a", encoding="utf-8", newline="") as f:
        writer = csv.DictWriter(f, dic.keys())
        writer.writerow(dic)
```
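Parser_Data appends rows without a header, so the CSV columns are unnamed. One way to label them is to write the header only when the file is new or empty. A minimal sketch; `append_row` and `FIELDNAMES` are hypothetical, with the field names taken from Parser_Data.

```python
import csv
import os

# Column order matching the keys Parser_Data produces
FIELDNAMES = ["name", "salary", "city"]


def append_row(path, row):
    """Append one record, writing the header first if the file is new or empty."""
    write_header = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", encoding="utf-8", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES)
        if write_header:
            writer.writeheader()
        writer.writerow(row)
```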

Finally, we fetch each detail page and pass it to the parsing function:

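```python
from time import sleep

import requests


def Get_Data(self):
    print("Start getting data")
    # Fill in your own User-Agent and cookie before using requests
    detail_header = {
        'user-agent': '',
        'cookie': ''
    }
    with open("detail_url.txt", "r", encoding="utf-8") as f:
        detail_url = f.read().splitlines()
    for url in detail_url:
        # Option 1: visit with Selenium (needs an open browser; Get_Page() already called self.bro.quit())
        # self.bro.get(url)
        # self.Parser_Data(self.bro.page_source)

        # Option 2: visit with requests
        # Optional proxy: proxies={"http": 'http://213.52.38.102:8080'} only applies to http:// URLs
        try:
            text = requests.get(url, headers=detail_header, timeout=10).text
            self.Parser_Data(text)
        except Exception as e:
            print(e)
        sleep(0.2)  # short pause between requests
```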

If you use requests, you need to fill in your own request headers (User-Agent and cookie).
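One way to fill in the cookie header is to reuse the cookies Selenium already holds, which ties the two halves of this approach together. This sketch assumes the site accepts the browser's session cookies when sent from requests (not verified here); `cookies_to_header` and `build_headers` are hypothetical names, and the header keys mirror the article's placeholders.

```python
def cookies_to_header(cookies):
    """Join Selenium-style cookie dicts ({"name": ..., "value": ...}) into one Cookie header value."""
    return "; ".join("%s=%s" % (c["name"], c["value"]) for c in cookies)


def build_headers(user_agent, cookies):
    """Build a header dict shaped like list_header / detail_header."""
    return {"user-agent": user_agent, "cookie": cookies_to_header(cookies)}


# Hypothetical usage, before self.bro.quit() is called:
#   detail_header = build_headers("<your browser's User-Agent>", self.bro.get_cookies())
```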

Summary

Combining requests with Selenium speeds up data collection and takes much less time than my previous Selenium-only approach.

Finally, as I mentioned, I previously wrote a Zhilian Recruitment crawler that used only Selenium to get the data; this version is a bit better. I have posted both versions of the code on the official account "Python"; reply "Zhaopin Crawler" there to get them.

Thank you for reading. If you found this useful, please give it a like 👍