1 sample processing

1.1 load sample code --- Titanic forecast Py (Part 1)

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

from scipy import stats

import pandas as pd

import matplotlib.pyplot as plt

import os

os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

def moving_average(a, w=10):#Define a function to calculate the moving average loss value

if len(a) < w:

return a[:]

return [val if idx < w else sum(a[(idx-w):idx])/w for idx, val in enumerate(a)]

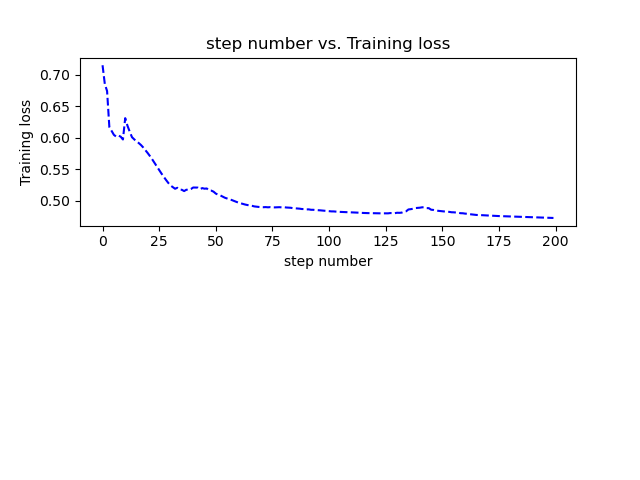

def plot_losses(losses):

avgloss= moving_average(losses) #Obtain the moving average of the loss value

plt.figure(1)

plt.subplot(211)

plt.plot(range(len(avgloss)), avgloss, 'b--')

plt.xlabel('step number')

plt.ylabel('Training loss')

plt.title('step number vs. Training loss')

plt.show()

###1.1 loading samples

titanic_data = pd.read_csv('csv_list/titanic3.csv')

print(titanic_data.columns)

# Output: index (['pclass', 'survived', 'name', 'sex', 'age', 'sibsp', 'patch', 'ticket', 'fare', 'cabin', 'embanked', 'boat', 'body', 'home. Dest'], dtype ='object ')

1.2 discrete data

1.2.1 characteristics of discrete data

Data without any continuity between data is called discrete data, such as men and women in the data.

Discrete data can usually be processed into one hot coding or word vector, which can be divided into two categories:

① Samples with fixed categories (gender): easy to handle and transform according to the total category

② Samples without fixed categories (names): processed by hash algorithm or other hash algorithm, and then transformed by word vector technology

1.2.2 characteristics of continuous data

Data with continuity between data is called continuous data, such as ticket price and age

For continuous data with feature changes, logarithmic operation or normalization is used to make it have a unified value range

1.2.3 conversion of continuous data and discrete data

There are three methods for data preprocessing for a feature attribute with a large span:

① Normalize according to the maximum and minimum values

② Use logarithmic operation

③ It is divided into several categories according to the distribution, and then discretized

1.3 processing discrete data and NAn values in samples

1.3.1 convert discrete data into one hot coding code --- Titanic forecast Py (Part 2)

###1.2 processing discrete data and Nan values in samples

# Convert discrete data to hot one

# get_dummies() will convert the discrete values in the specified column into one hot code, and put the new column generated after conversion behind the original data. The data in the new column is indicated by 0 and 1 to indicate whether it has the attribute of the column.

titanic_data = pd.concat(

[titanic_data,

pd.get_dummies(titanic_data['sex']),

pd.get_dummies(titanic_data['embarked'],prefix="embark"),

pd.get_dummies(titanic_data['pclass'],prefix="class")],axis=1

)

print(titanic_data.columns)

# Output: index (['pclass', 'survived', 'name', 'sex', 'age', 'sibsp', 'patch', 'ticket', 'fare', 'cabin', 'embanked', 'boat', 'body', 'home. Dest', 'female', 'male', 'embark_c', 'embark_q', 'embark_s',' class_1 ',' class_2 ',' class_3 '], dtype ='object')

print(titanic_data['sex'])

print(titanic_data['female']) # In the sex column, the row whose value is female has a value of 1 in the female columnFilter the data in Titan code -- -1.2 Py (Part 3)

Nan value processing is performed for two data columns with continuous attributes, age and far.

# Filter and fill Nan value # Call fillna() to filter the NAn value of a specific column and populate it with the average value of that column titanic_data["age"] = titanic_data["age"].fillna(titanic_data["age"].mean()) # Passenger age titanic_data["fare"] = titanic_data["fare"].fillna(titanic_data["fare"].mean()) # Passenger Fare

1.3.3 eliminate useless data column codes -- Titanic forecast Py (Part 4)

This part excludes the data columns irrelevant to the disaster.

## Remove data columns irrelevant to whether to get or not titanic_data = titanic_data.drop(['name','ticket','cabin','boat','body','home.dest','sex','embarked','pclass'], axis=1) print(titanic_data.columns ) # Output the data columns that really need to be processed

1.4 separate samples and labels and make data set code --- Titanic forecast Py (Part 5)

The suivived column is extracted from the data set, and the remaining data in the data column is used as the input sample.

### 1.3 separating samples and labels and making data sets

# Separate samples

labels = titanic_data["survived"].to_numpy()

titanic_data = titanic_data.drop(['survived'],axis=1)

data = titanic_data.to_numpy()

# Sample attribute name

feature_names = list(titanic_data.columns)

# The samples are divided into two parts: training and testing

np.random.seed(10) # Set random seeds to ensure the consistency of samples in each run

# The set() function creates an unordered set of non repeating elements, and x-y returns a new set, including the elements in set x but not in set y

# random. The choice (a = 5, size = 3, replace = false, P = none) parameter means to randomly select three from a with probability P. when p is not specified, it is equivalent to a consistent distribution

# Extract by line number

train_indices = np.random.choice(len(labels),int(0.7 * len(labels)),replace = False)

print('train_indices++++',train_indices)

test_indices = list(set(range(len(labels))) - set(train_indices)) #Set the rest as the test set

print('train_indices++++',train_indices)

# data [:] is equivalent to copying a list again. The list is a variable object. If a direct reference is passed to a function, any variable will be modified, and the other variables will be changed accordingly. However, this situation can be avoided in this way.

train_features = data[train_indices]

train_labels = labels[train_indices]

test_features = data[test_indices]

test_labels = labels[test_indices]

print('Number of test samples',len(test_labels)) # Number of test samples 3932 training model

2.1 define Mish activation function and multi-layer fully connected network code -- Titanic forecast Py (Part 6)

###Define Mish activation function and multi-layer fully connected network

# A class with three layers of fully connected networks is defined. Each network layer uses Mish as the activation function, and the model uses the cross entropy loss function

class Mish(nn.Module):

def __init__(self):

super().__init__()

def forward(self,x):

x = x * (torch.tanh(F.softplus(x)))

return x

torch.manual_seed(0) # Set random seed function

class ThreeLinearModel(nn.Module):

def __init__(self):

super().__init__()

self.linear1 = nn.Linear(12,12)

self.mish1 = Mish()

self.linear2 = nn.Linear(12,8)

self.mish2 = Mish()

self.linear3 = nn.Linear(8,2)

self.softmax = nn.Softmax(dim = 1)

self.criterion = nn.CrossEntropyLoss() #Define cross entropy

def forward(self,x): # Define a fully connected network

lin1_out = self.linear1(x)

out_1 = self.mish1(lin1_out)

out_2 = self.mish2(self.linear2(out_1))

return self.softmax(self.linear3(out_2))

def getloss(self,x,y): # Implement the loss value calculation interface of the class

y_pred = self.forward(x)

loss = self.criterion(y_pred,y)

return loss2.2 train the model and output the result code --- Titanic forecast Py (Part 7)

### Train the model and output the results

if __name__ == '__main__':

net = ThreeLinearModel()

num_epochs = 200

optimizer = torch.optim.Adam(net.parameters(),lr = 0.04)

# Convert the input sample label to scalar

input_tensor = torch.from_numpy(train_features).type(torch.FloatTensor)

label_tensor = torch.from_numpy(train_labels)

losses = [] # Define loss value list

for epoch in range(num_epochs):

loss = net.getloss(input_tensor, label_tensor)

losses.append(loss.item())

optimizer.zero_grad() # Gradient before emptying

loss.backward() # Back propagation loss value

optimizer.step() # Update parameters

if epoch % 20 == 0:

print('Epoch {}/{} => Loss: {:.2f}'.format(epoch + 1, num_epochs, loss.item()))

os.makedirs('models', exist_ok=True)

torch.save(net.state_dict(), 'models/titanic_model.pt')

plot_losses(losses)

# Output training results

# tensor.detach(): detach from the calculation diagram and return a new tensor. The new tensor shares data memory with the original tensor. (this means that modifying the value of one tensor will change the other.),

# However, gradient calculation is not involved. When converting from tensor to numpy, if the tensor before conversion is in the calculation diagram (requires_grad = True), then at this time, only the detach operation can be carried out first to convert to numpy

out_probs = net(input_tensor).detach().numpy()

out_classes = np.argmax(out_probs, axis=1)

print("Train Accuracy:", sum(out_classes == train_labels) / len(train_labels))

# test model

test_input_tensor = torch.from_numpy(test_features).type(torch.FloatTensor)

out_probs = net(test_input_tensor).detach().numpy()

out_classes = np.argmax(out_probs, axis=1)

print("Test Accuracy:", sum(out_classes == test_labels) / len(test_labels))