Among deep learning architectures, I consider the residual network (ResNet) the most fundamental. This post does not cover the theory behind residual networks. Just keep in mind that the residual idea runs through many later network structures: once you understand the residual structure, the more advanced networks built on it become much easier to follow.

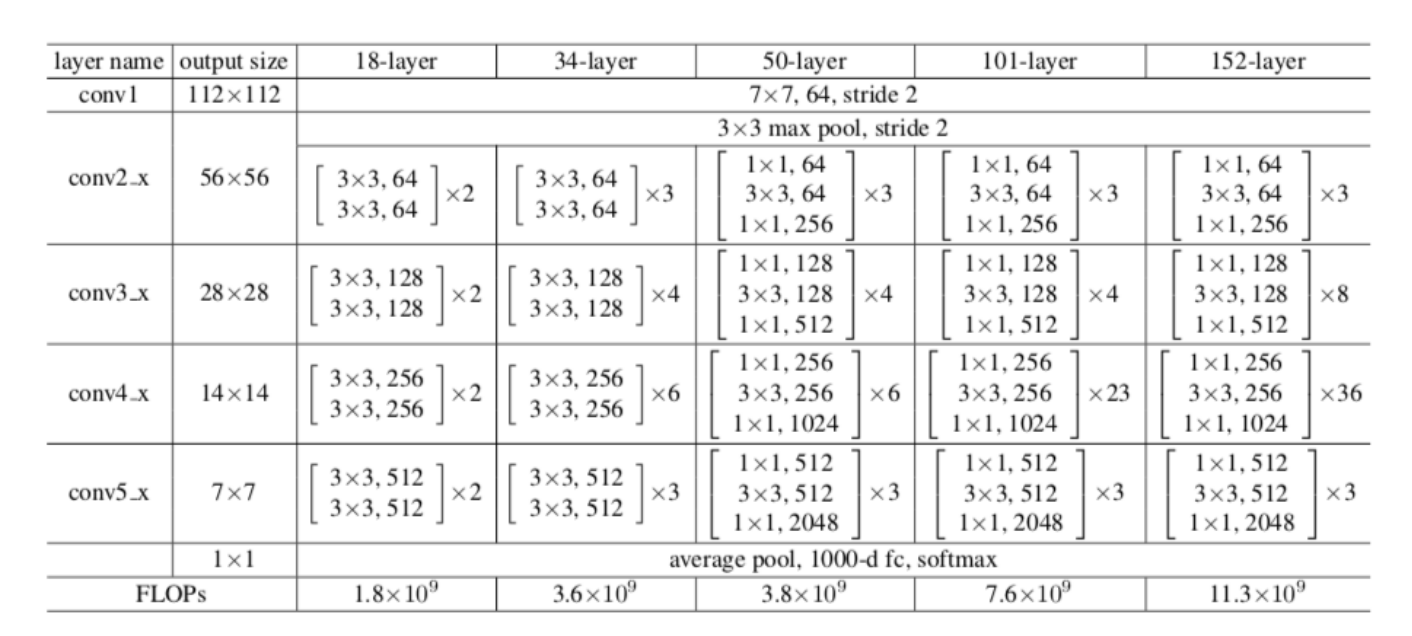

Overall structure of residual network

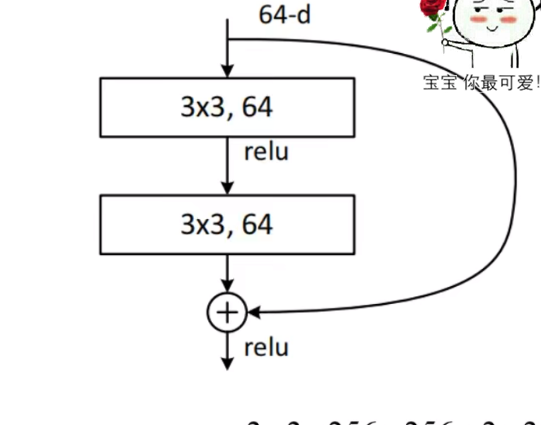

I. Residual block structure

The residual block used by networks with fewer than 50 layers (such as resnet18 and resnet34) is as follows:

    import torch.nn as nn

    class BasicBlock(nn.Module):
        expansion = 1

        def __init__(self, in_channel, out_channel, stride=1, downsample=None, **kwargs):
            # downsample=None: solid-line (identity) shortcut;
            # a downsample module: dotted-line shortcut that changes the shape
            super(BasicBlock, self).__init__()
            self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                                   kernel_size=3, stride=stride, padding=1, bias=False)
            self.bn1 = nn.BatchNorm2d(out_channel)
            self.relu = nn.ReLU()
            self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                                   kernel_size=3, stride=1, padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(out_channel)
            self.downsample = downsample

        def forward(self, x):
            identity = x
            if self.downsample is not None:
                identity = self.downsample(x)

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            # add the shortcut, then apply the final activation
            out += identity
            out = self.relu(out)

            return out

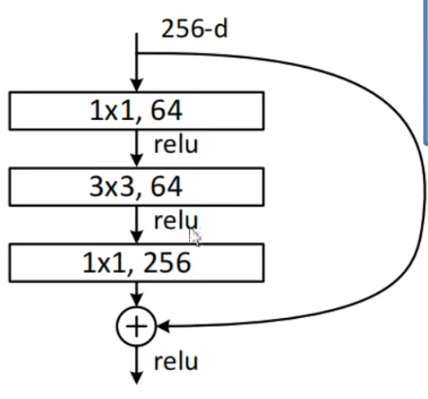

The residual block used by networks with 50 or more layers (such as resnet50) is as follows:

    class Bottleneck(nn.Module):
        """
        Note: in the original paper, on the main branch of the dotted-line residual
        structure, the first 1x1 convolution has stride 2 and the 3x3 convolution has stride 1.
        In the official PyTorch implementation, however, the first 1x1 convolution has
        stride 1 and the 3x3 convolution has stride 2.
        This improves top-1 accuracy by about 0.5%.
        See ResNet v1.5: https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch
        """
        expansion = 4

        def __init__(self, in_channel, out_channel, stride=1, downsample=None,
                     groups=1, width_per_group=64):
            super(Bottleneck, self).__init__()

            width = int(out_channel * (width_per_group / 64.)) * groups

            self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=width,
                                   kernel_size=1, stride=1, bias=False)  # squeeze channels
            self.bn1 = nn.BatchNorm2d(width)
            # -----------------------------------------
            self.conv2 = nn.Conv2d(in_channels=width, out_channels=width, groups=groups,
                                   kernel_size=3, stride=stride, bias=False, padding=1)
            self.bn2 = nn.BatchNorm2d(width)
            # -----------------------------------------
            self.conv3 = nn.Conv2d(in_channels=width, out_channels=out_channel*self.expansion,
                                   kernel_size=1, stride=1, bias=False)  # unsqueeze channels
            self.bn3 = nn.BatchNorm2d(out_channel*self.expansion)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample

        def forward(self, x):
            identity = x
            if self.downsample is not None:
                identity = self.downsample(x)

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            # add the shortcut, then apply the final activation
            out += identity
            out = self.relu(out)

            return out

Why do beginners get confused when they see two pieces of code? ① The two residual blocks serve networks of different depth. The first is for the shallower residual networks, such as resnet18 and resnet34, while the second is for the deeper ones, such as resnet50, resnet101 and resnet152.

② Both residual blocks are implemented in the same code so that the number of layers in the network is easy to change. The class for a residual structure is usually named with the word Block, so read the code side by side with the diagram.
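
To see how the two block types map onto the named depths, the layer count can be tallied from the number of blocks in each stage. The sketch below is only an illustration: `count_layers` is a hypothetical helper, not part of the network code. It counts the first convolution, the convolutions on the main branch of every block, and the final fully connected layer; shortcut convolutions are not counted. The block counts for resnet18 and resnet152 are the standard configurations and do not appear in the code in this post.

```python
def count_layers(convs_per_block, blocks_num):
    """Hypothetical helper: first conv + main-branch convs + final fc layer."""
    return 1 + convs_per_block * sum(blocks_num) + 1


# BasicBlock has 2 convolutions on its main branch, Bottleneck has 3
depths = {
    "resnet18": count_layers(2, [2, 2, 2, 2]),
    "resnet34": count_layers(2, [3, 4, 6, 3]),
    "resnet50": count_layers(3, [3, 4, 6, 3]),
    "resnet101": count_layers(3, [3, 4, 23, 3]),
    "resnet152": count_layers(3, [3, 8, 36, 3]),
}

for name, depth in depths.items():
    print(name, depth)
```

Note that resnet34 and resnet50 share the same block counts; only the block type differs.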

Also note that in these networks a 3x3 convolution (with stride 2) is generally what reduces the size of the feature map, while a 1x1 convolution is generally used to reduce or increase the number of channels.
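
A quick shape check makes the two roles concrete. This is a minimal sketch, assuming a [1, 64, 56, 56] input and the same kernel, stride and padding settings used in the blocks above; the variable names are only for illustration.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 64, 56, 56)  # [batch, channels, height, width]

# 3x3 convolution with stride 2 and padding 1: halves height and width
conv3x3 = nn.Conv2d(64, 64, kernel_size=3, stride=2, padding=1, bias=False)
# 1x1 convolution with stride 1: changes only the number of channels
conv1x1 = nn.Conv2d(64, 256, kernel_size=1, stride=1, bias=False)

y3 = conv3x3(x)
y1 = conv1x1(x)
print(y3.shape)  # torch.Size([1, 64, 28, 28])
print(y1.shape)  # torch.Size([1, 256, 56, 56])
```

With stride 1 and padding 1, the same 3x3 convolution would leave the feature-map size unchanged.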

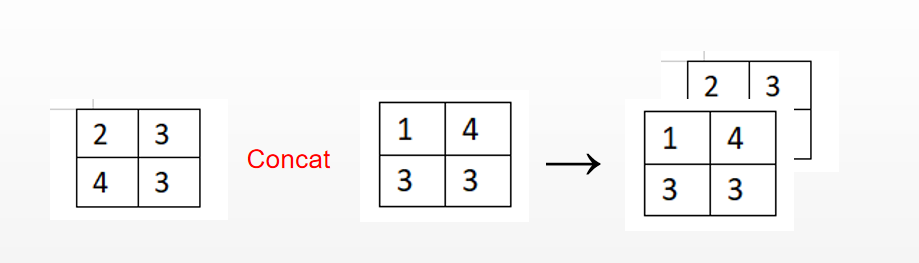

II. Difference between concat and add

Beginners are often confused by these two terms, so pay attention here.

concat operation: the feature maps generally need to have the same spatial size; they are then spliced along the channel dimension, as shown in the following figure:

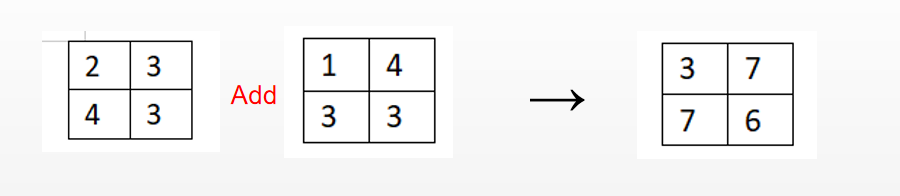
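
In PyTorch, concat corresponds to `torch.cat` along the channel dimension (`dim=1` for tensors laid out as [batch, channels, height, width]). A minimal sketch, with tensor shapes chosen only for illustration:

```python
import torch

a = torch.randn(1, 64, 28, 28)
b = torch.randn(1, 32, 28, 28)  # same height and width, different channel count

# splice along the channel dimension: 64 + 32 = 96 channels
c = torch.cat([a, b], dim=1)
print(c.shape)  # torch.Size([1, 96, 28, 28])
```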

add operation: the feature maps generally need to have both the same size and the same number of channels. For example, the two feature maps on the left of the figure below both have size 2x2 and 1 channel, so they can be added position by position.

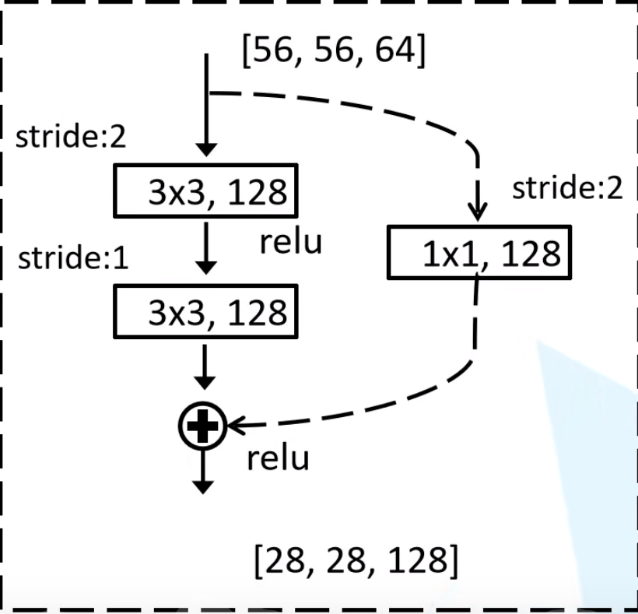
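
The same idea as a small sketch: two 2x2 single-channel maps are added element by element, while two maps with different channel counts cannot be added. The values and shapes here are made up for illustration.

```python
import torch

a = torch.tensor([[[[1., 2.], [3., 4.]]]])      # shape [1, 1, 2, 2]
b = torch.tensor([[[[10., 20.], [30., 40.]]]])  # shape [1, 1, 2, 2]

s = a + b  # element-wise sum: 11, 22, 33, 44
print(s.shape)  # torch.Size([1, 1, 2, 2])

# 64 channels vs. 128 channels: shapes do not match, so the addition fails
p = torch.randn(1, 64, 28, 28)
q = torch.randn(1, 128, 28, 28)
try:
    p + q
    add_failed = False
except RuntimeError:
    add_failed = True
print(add_failed)  # True
```

This mismatch is exactly what the shortcut has to resolve when the main branch changes the size or channel count.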

III. Why the residual edge needs to be downsampled

See the figure below. You will notice that the residual edge in the two residual blocks above does not show the 1x1, 128 convolution drawn in the figure. The author assumes that readers have already gotten started with deep learning and so left it out. Let's analyze the figure carefully. First, [56,56,64] passes through a 3x3, 128 convolution with stride 2 and becomes [28,28,128]; it then passes through a 3x3, 128 convolution with stride 1 and stays [28,28,128]. This no longer matches the size and number of channels of the input [56,56,64], so on the residual edge [56,56,64] goes through a 1x1, 128 convolution with stride 2 to obtain [28,28,128], and finally the two [28,28,128] tensors are added.
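
The sizes quoted above follow from the usual convolution output-size formula, floor((n + 2p - k) / s) + 1. Below is a small sketch for checking the arithmetic; `conv_out_size` is a hypothetical helper, and the padding values (1 for the 3x3 convolutions, 0 for the 1x1 convolution) are taken from the code in this post.

```python
def conv_out_size(n, kernel_size, stride, padding):
    """Hypothetical helper: output height/width of a convolution."""
    return (n + 2 * padding - kernel_size) // stride + 1


# main branch: 3x3 with stride 2, then 3x3 with stride 1
main = conv_out_size(56, kernel_size=3, stride=2, padding=1)
main = conv_out_size(main, kernel_size=3, stride=1, padding=1)

# residual edge: 1x1 with stride 2
shortcut = conv_out_size(56, kernel_size=1, stride=2, padding=0)

print(main, shortcut)  # 28 28
```

Both branches end at 28x28, and both convolutions output 128 channels, so the two results can be added.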

The code is as follows:

    import torch
    import torch.nn as nn

    class BasicBlock(nn.Module):
        expansion = 1

        def __init__(self, in_channel, out_channel, stride=1, downsample=None, **kwargs):
            # in this demo, downsample is a flag: a truthy value builds the
            # 1x1 convolution on the residual edge (dotted-line structure)
            super(BasicBlock, self).__init__()
            self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                                   kernel_size=3, stride=stride, padding=1, bias=False)
            self.bn1 = nn.BatchNorm2d(out_channel)
            self.relu = nn.ReLU()
            self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                                   kernel_size=3, stride=1, padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(out_channel)

            self.downsample = None
            if downsample:
                # use the same stride as conv1 so both branches end at the same size
                self.downsample = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                                            kernel_size=1, stride=stride, bias=False)
                self.bn3 = nn.BatchNorm2d(out_channel)

        def forward(self, x):
            identity = x
            if self.downsample is not None:
                # conv + BN only, matching the downsample branch in the complete code below
                identity = self.bn3(self.downsample(x))

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            out += identity
            out = self.relu(out)

            return out

    if __name__ == '__main__':
        a = torch.randn((1, 64, 56, 56))
        model = BasicBlock(in_channel=64, out_channel=128, stride=2, downsample=True)
        out = model(a)
        print(out.shape)  # torch.Size([1, 128, 28, 28])

The complete ResNet code is as follows:

    import torch.nn as nn
    import torch

    # Residual block built from two 3x3 convolutions (used by resnet18 and resnet34)
    class BasicBlock(nn.Module):
        expansion = 1

        def __init__(self, in_channel, out_channel, stride=1, downsample=None, **kwargs):
            # downsample=None: solid-line (identity) shortcut;
            # a downsample module: dotted-line shortcut that changes the shape
            super(BasicBlock, self).__init__()
            self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                                   kernel_size=3, stride=stride, padding=1, bias=False)
            self.bn1 = nn.BatchNorm2d(out_channel)
            self.relu = nn.ReLU()
            self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                                   kernel_size=3, stride=1, padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(out_channel)
            self.downsample = downsample

        def forward(self, x):
            identity = x
            if self.downsample is not None:
                identity = self.downsample(x)

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            out += identity
            out = self.relu(out)

            return out

    # Residual block for networks with 50 or more layers
    class Bottleneck(nn.Module):
        """
        Note: in the original paper, on the main branch of the dotted-line residual
        structure, the first 1x1 convolution has stride 2 and the 3x3 convolution has stride 1.
        In the official PyTorch implementation, however, the first 1x1 convolution has
        stride 1 and the 3x3 convolution has stride 2.
        This improves top-1 accuracy by about 0.5%.
        See ResNet v1.5: https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch
        """
        expansion = 4

        def __init__(self, in_channel, out_channel, stride=1, downsample=None,
                     groups=1, width_per_group=64):
            super(Bottleneck, self).__init__()

            width = int(out_channel * (width_per_group / 64.)) * groups

            self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=width,
                                   kernel_size=1, stride=1, bias=False)  # squeeze channels
            self.bn1 = nn.BatchNorm2d(width)
            # -----------------------------------------
            self.conv2 = nn.Conv2d(in_channels=width, out_channels=width, groups=groups,
                                   kernel_size=3, stride=stride, bias=False, padding=1)
            self.bn2 = nn.BatchNorm2d(width)
            # -----------------------------------------
            self.conv3 = nn.Conv2d(in_channels=width, out_channels=out_channel*self.expansion,
                                   kernel_size=1, stride=1, bias=False)  # unsqueeze channels
            self.bn3 = nn.BatchNorm2d(out_channel*self.expansion)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample

        def forward(self, x):
            identity = x
            if self.downsample is not None:
                identity = self.downsample(x)

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            out += identity
            out = self.relu(out)

            return out

    class ResNet(nn.Module):

        def __init__(self,
                     block,
                     blocks_num,  # e.g. [3, 4, 6, 3] for the 34-layer network
                     num_classes=1000,
                     include_top=True,  # set to False to reuse this network inside more complex networks
                     groups=1,
                     width_per_group=64):
            super(ResNet, self).__init__()
            self.include_top = include_top
            self.in_channel = 64

            self.groups = groups
            self.width_per_group = width_per_group

            self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,
                                   padding=3, bias=False)
            self.bn1 = nn.BatchNorm2d(self.in_channel)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            self.layer1 = self._make_layer(block, 64, blocks_num[0])
            self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)
            self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)
            self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)
            if self.include_top:
                self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # output size = (1, 1)
                self.fc = nn.Linear(512 * block.expansion, num_classes)

            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

        def _make_layer(self, block, channel, block_num, stride=1):
            downsample = None
            # the shortcut needs a 1x1 convolution whenever the size or channel count changes
            if stride != 1 or self.in_channel != channel * block.expansion:
                downsample = nn.Sequential(
                    nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(channel * block.expansion))

            layers = []
            # only the first block of a stage may downsample
            layers.append(block(self.in_channel,
                                channel,
                                downsample=downsample,
                                stride=stride,
                                groups=self.groups,
                                width_per_group=self.width_per_group))
            self.in_channel = channel * block.expansion

            for _ in range(1, block_num):
                layers.append(block(self.in_channel,
                                    channel,
                                    groups=self.groups,
                                    width_per_group=self.width_per_group))

            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)

            if self.include_top:
                x = self.avgpool(x)
                x = torch.flatten(x, 1)
                x = self.fc(x)

            return x

    def resnet34(num_classes=1000, include_top=True):
        # https://download.pytorch.org/models/resnet34-333f7ec4.pth
        return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

    def resnet50(num_classes=1000, include_top=True):
        # https://download.pytorch.org/models/resnet50-19c8e357.pth
        return ResNet(Bottleneck, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

    def resnet101(num_classes=1000, include_top=True):
        # https://download.pytorch.org/models/resnet101-5d3b4d8f.pth
        return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)

    def resnext50_32x4d(num_classes=1000, include_top=True):
        # https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth
        groups = 32
        width_per_group = 4
        return ResNet(Bottleneck, [3, 4, 6, 3],
                      num_classes=num_classes,
                      include_top=include_top,
                      groups=groups,
                      width_per_group=width_per_group)

    def resnext101_32x8d(num_classes=1000, include_top=True):
        # https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth
        groups = 32
        width_per_group = 8
        return ResNet(Bottleneck, [3, 4, 23, 3],
                      num_classes=num_classes,
                      include_top=include_top,
                      groups=groups,
                      width_per_group=width_per_group)

    if __name__ == '__main__':
        net = resnet34()
        print(net)

That completes the walkthrough of the network structure. I hope you found it useful. If you have any questions, please leave a comment!