This article was first published in Nebula Graph Community official account

Solution ideas

The most convenient way to solve the problem that K8s cannot connect to the cluster after deploying the Nebula Graph cluster is to run the nebula algorithm / Nebula spark in the same network namespace as the nebula operator, and fill in the MetaD domain name of show hosts meta: the address in port format into the configuration.

Note: the version 2.6.2 or later is required here. Only the nebula spark connector / Nebula algorithm supports the MetaD address in the form of domain name.

Here's the specific network configuration:

- Get MetaD address

(root@nebula) [(none)]> show hosts meta +------------------------------------------------------------------+------+----------+--------+--------------+---------+ | Host | Port | Status | Role | Git Info Sha | Version | +------------------------------------------------------------------+------+----------+--------+--------------+---------+ | "nebula-metad-0.nebula-metad-headless.default.svc.cluster.local" | 9559 | "ONLINE" | "META" | "d113f4a" | "2.6.2" | +------------------------------------------------------------------+------+----------+--------+--------------+---------+ Got 1 rows (time spent 1378/2598 us) Mon, 14 Feb 2022 08:22:33 UTC

Here, you need to record the Host name so that it can be used in subsequent configuration files.

- Fill in the configuration file of Nepal algorithm

Reference documents https://github.com/vesoft-inc/nebula-algorithm/blob/master/nebula-algorithm/src/main/resources/application.conf . There are two ways to fill in the configuration file: modify the TOML file or add configuration information to the nebula spark connector code.

Method 1: modify the TOML file

# ...

nebula: {

# algo's data source from Nebula. If data.source is nebula, then this nebula.read config can be valid.

read: {

# Fill in the Host name of the meta just obtained here. If multiple addresses are separated by commas under English characters;

metaAddress: "nebula-metad-0.nebula-metad-headless.default.svc.cluster.local:9559"

#...

Method 2: call the code of nebula spark connector

Ref: https://github.com/vesoft-inc/nebula-spark-connector

val config = NebulaConnectionConfig

.builder()

// Fill in the Host name of the meta just obtained here

.withMetaAddress("nebula-metad-0.nebula-metad-headless.default.svc.cluster.local:9559")

.withConenctionRetry(2)

.build()

val nebulaReadVertexConfig: ReadNebulaConfig = ReadNebulaConfig

.builder()

.withSpace("foo_bar_space")

.withLabel("person")

.withNoColumn(false)

.withReturnCols(List("birthday"))

.withLimit(10)

.withPartitionNum(10)

.build()

val vertex = spark.read.nebula(config, nebulaReadVertexConfig).loadVerticesToDF()

OK, so far, the process seems very simple. So why is such a simple process worth an article?

Configuration information is easy to ignore

Just now we talked about the specific practical operation, but there are some theoretical knowledge here:

a. MetaD implicitly needs to ensure that the address of StorageD can be accessed by Spark environment;

b. The storaged address is obtained from MetaD;

c. In the nebula K8s operator, the source of the StorageD address (service discovery) stored in MetaD is the StorageD configuration file, which is the internal address of K8s.

background knowledge

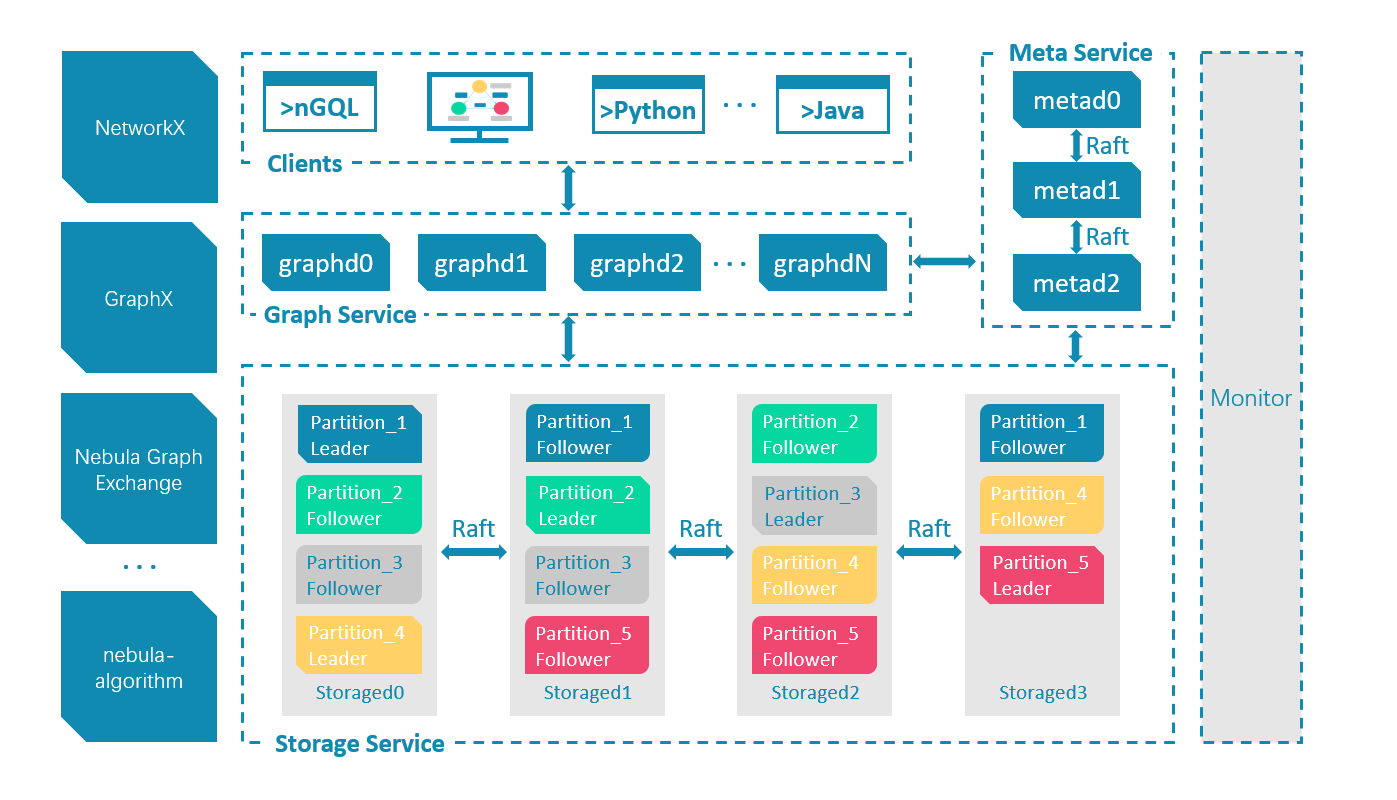

a. The reason is relatively direct, which is related to the architecture of nebula: the data of the graph is stored in the Storage Service. The query of common statements is transmitted through the Graph Service, and only the connection of graph is enough. The scenario of nebula spark connector using Nebula Graph is to scan the whole graph or sub graph, At this time, the design of separation of computing and storage enables us to bypass the query and read the graph data directly and efficiently.

So the question is, why do you need and only need the address of MetaD?

This is also related to the architecture. The Meta Service contains the distribution data of the whole map and the distribution of each partition and instance of the distributed Storage Service. On the one hand, only Meta has the information of the whole map (required), on the other hand, this information can be obtained from Meta (as long as). Here's the answer to b.

- Refer to the architecture trilogy series for detailed architecture information of Nebula Graph

Let's look at the logic behind c

c. In nebula k8s operator, the source of the StorageD address (service discovery) stored in MetaD is the StorageD configuration file, which is the internal address of k8s.

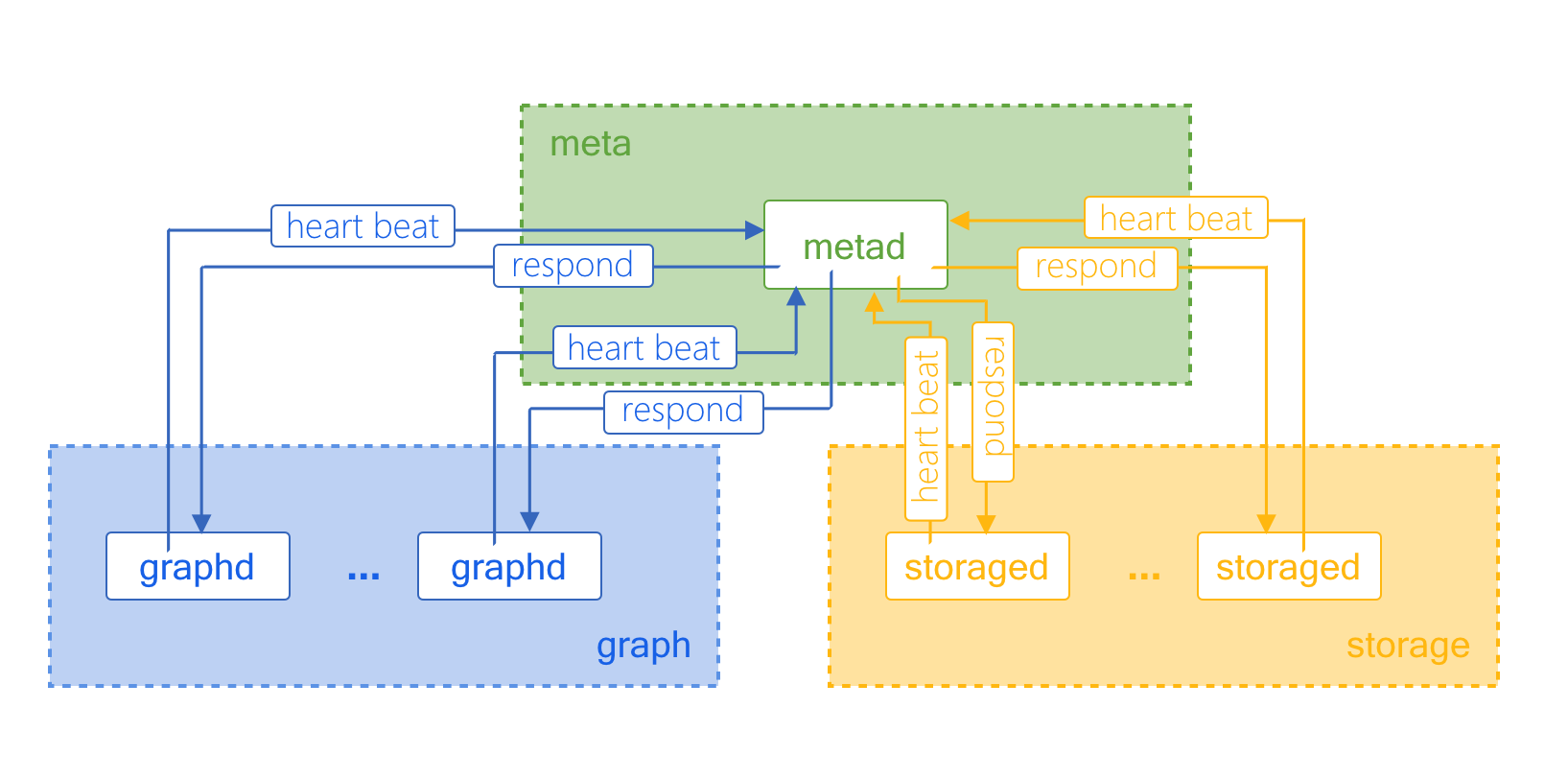

This is related to the service discovery mechanism in Nebula Graph: in the Nebula Graph cluster, Graph Service and Storage Service report their own information to Meta Service through heartbeat, and the source of the service's own address comes from the network configuration in their corresponding configuration file.

-

For the address configuration of the service itself, please refer to the document: Storage networking configuration

-

For more information about service discovery, please refer to Siwang's article: Figure Nebula Graph cluster communication of database: starting from heartbeat.

Finally, we know that the Nebula Operator is a K8s control surface application that automatically creates, maintains and expands the capacity of the Nebula cluster according to the configuration in the K8s cluster. It needs to abstract some internal resource related configurations, including the actual addresses of GraphD and StorageD instances. They are actually the configured addresses headless service address.

We can expose the external addresses of these services as follows, so we can't access them by default.

(root@nebula) [(none)]> show hosts meta +------------------------------------------------------------------+------+----------+--------+--------------+---------+ | Host | Port | Status | Role | Git Info Sha | Version | +------------------------------------------------------------------+------+----------+--------+--------------+---------+ | "nebula-metad-0.nebula-metad-headless.default.svc.cluster.local" | 9559 | "ONLINE" | "META" | "d113f4a" | "2.6.2" | +------------------------------------------------------------------+------+----------+--------+--------------+---------+ Got 1 rows (time spent 1378/2598 us) Mon, 14 Feb 2022 09:22:33 UTC (root@nebula) [(none)]> show hosts graph +---------------------------------------------------------------+------+----------+---------+--------------+---------+ | Host | Port | Status | Role | Git Info Sha | Version | +---------------------------------------------------------------+------+----------+---------+--------------+---------+ | "nebula-graphd-0.nebula-graphd-svc.default.svc.cluster.local" | 9669 | "ONLINE" | "GRAPH" | "d113f4a" | "2.6.2" | +---------------------------------------------------------------+------+----------+---------+--------------+---------+ Got 1 rows (time spent 2072/3403 us) Mon, 14 Feb 2022 10:03:58 UTC (root@nebula) [(none)]> show hosts storage +------------------------------------------------------------------------+------+----------+-----------+--------------+---------+ | Host | Port | Status | Role | Git Info Sha | Version | +------------------------------------------------------------------------+------+----------+-----------+--------------+---------+ | "nebula-storaged-0.nebula-storaged-headless.default.svc.cluster.local" | 9779 | "ONLINE" | "STORAGE" | "d113f4a" | "2.6.2" | | "nebula-storaged-1.nebula-storaged-headless.default.svc.cluster.local" | 9779 | "ONLINE" | "STORAGE" | "d113f4a" | "2.6.2" | | "nebula-storaged-2.nebula-storaged-headless.default.svc.cluster.local" | 9779 | "ONLINE" | "STORAGE" | "d113f4a" | "2.6.2" | +------------------------------------------------------------------------+------+----------+-----------+--------------+---------+ Got 3 rows (time spent 1603/2979 us) Mon, 14 Feb 2022 10:05:24 UTC

However, because the previously mentioned Nebula spark connector obtains the StorageD address through the Meta Service, and this address is found by the service, the StorageD address actually obtained by Nebula spark connector is the above headless service address, which can not be accessed directly from the outside.

Therefore, if possible, we only need to make Spark run in the same K8s network as Nebula Cluster, and everything will be solved. Otherwise, we need to:

-

Expose the L4 (TCP) of MetaD and StorageD addresses by means of Ingress.

You can refer to the documentation of the Nebula Operator: https://github.com/vesoft-inc/nebula-operator

-

Through reverse proxy and DNS, these headless services can be resolved to the corresponding StorageD.

So, is there a more convenient way?

Unfortunately, at present, the most convenient way is still to let Spark run inside Nebula Cluster, as introduced at the beginning of the article. In fact, I'm trying to promote the Nebula Spark community to support the configurable StorageAddresses option. With it, the previously mentioned 2 It's unnecessary.

More convenient Nebula algorithm + Nebula operator experience

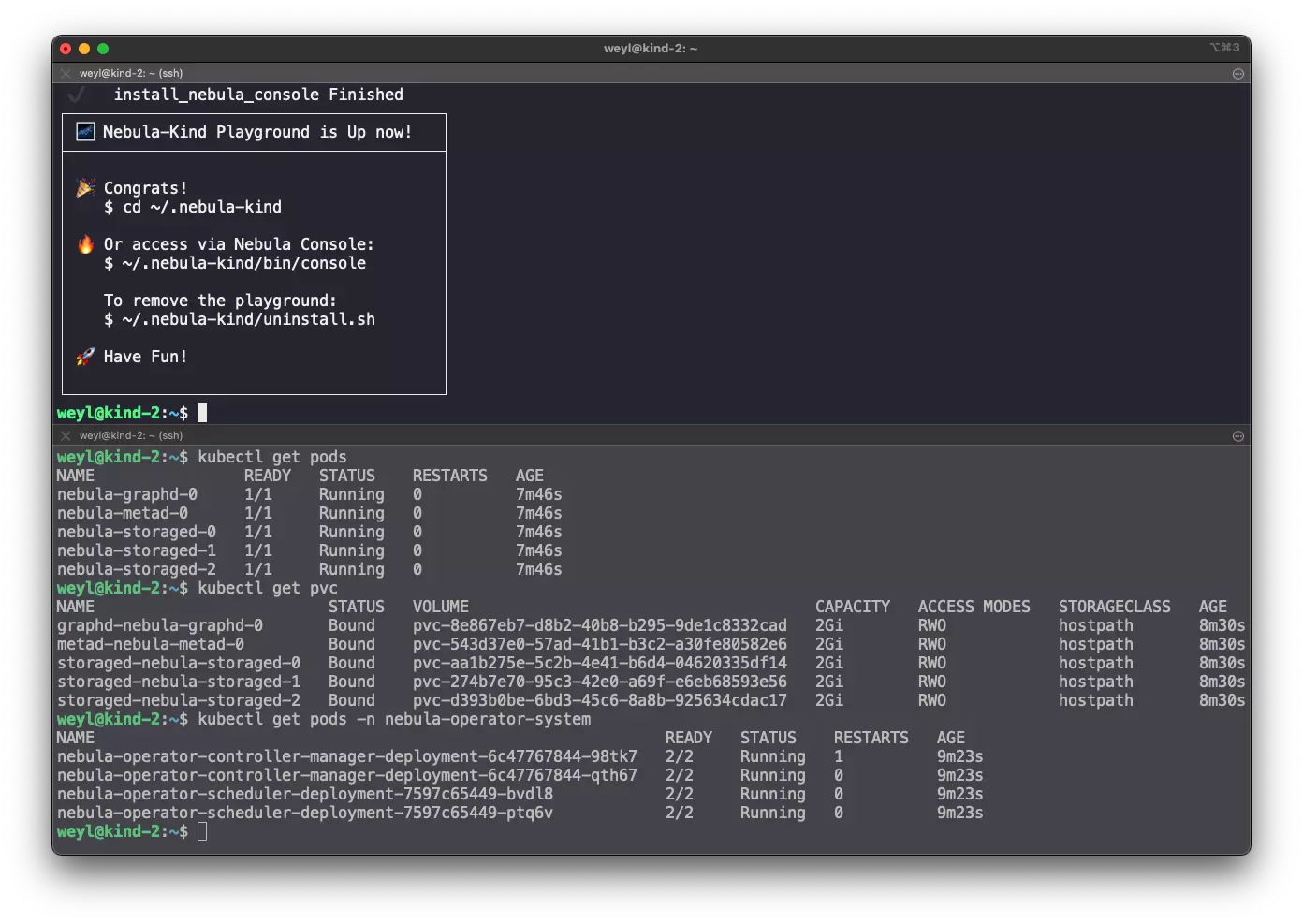

In order to make it convenient for students who try new Nebula graph and Nebula algorithm on K8s, here is a gadget written by Amway Neubla-Operator-KinD , it is a key to deploy a K8s cluster in the Docker environment, and deploy the Nebula Operator and all dependent gadgets (including storage provider) in it. Not only that, it also automatically deploys a small Nebula cluster. You can see the following steps:

Step 1: deploy k8s + Nebula operator + Nebula cluster:

curl -sL nebula-kind.siwei.io/install.sh | bash

The second step is to follow the instructions in the tool document what's next

a. Connect the cluster with console and load the sample dataset

b. Run a graph algorithm in this K8s

- Create a Spark environment

kubectl create -f http://nebula-kind.siwei.io/deployment/spark.yaml kubectl wait pod --timeout=-1s --for=condition=Ready -l '!job-name'

- After the above wait is ready, enter the pod of spark.

kubectl exec -it deploy/spark-deployment -- bash

- Download Nebula algorithm, such as version 2.6.2. For more versions, please refer to https://github.com/vesoft-inc/nebula-algorithm/.

matters needing attention:

- The official version is available here: https://repo1.maven.org/maven2/com/vesoft/nebula-algorithm/

- Because of this problem: https://github.com/vesoft-inc/nebula-algorithm/issues/42 Only version 2.6.2 or later supports domain name access to MetaD.

# Download nebula-algorithm-2.6.2 jar wget https://repo1.maven.org/maven2/com/vesoft/nebula-algorithm/2.6.2/nebula-algorithm-2.6.2.jar # Download the nebula algorithm configuration file wget https://github.com/vesoft-inc/nebula-algorithm/raw/v2.6/nebula-algorithm/src/main/resources/application.conf

- Modify the met and graph address information in the nebula algorithm.

sed -i '/^ metaAddress/c\ metaAddress: \"nebula-metad-0.nebula-metad-headless.default.svc.cluster.local:9559\"' application.conf sed -i '/^ graphAddress/c\ graphAddress: \"nebula-graphd-0.nebula-graphd-svc.default.svc.cluster.local:9669\"' application.conf ##### change space sed -i '/^ space/c\ space: basketballplayer' application.conf ##### read data from nebula graph sed -i '/^ source/c\ source: nebula' application.conf ##### execute algorithm: labelpropagation sed -i '/^ executeAlgo/c\ executeAlgo: labelpropagation' application.conf

- Execute LPA algorithm in basketball layer graph space

/spark/bin/spark-submit --master "local" --conf spark.rpc.askTimeout=6000s \

--class com.vesoft.nebula.algorithm.Main \

nebula-algorithm-2.6.2.jar \

-p application.conf

- The results are as follows:

bash-5.0# ls /tmp/count/ _SUCCESS part-00000-5475f9f4-66b9-426b-b0c2-704f946e54d3-c000.csv bash-5.0# head /tmp/count/part-00000-5475f9f4-66b9-426b-b0c2-704f946e54d3-c000.csv _id,lpa 1100,1104 2200,2200 2201,2201 1101,1104 2202,2202

Next, you can happy graphics!

AC diagram database technology? Please join the Nebula communication group first Fill out your Nebula business card , Nebula's little assistant will pull you into the group~~