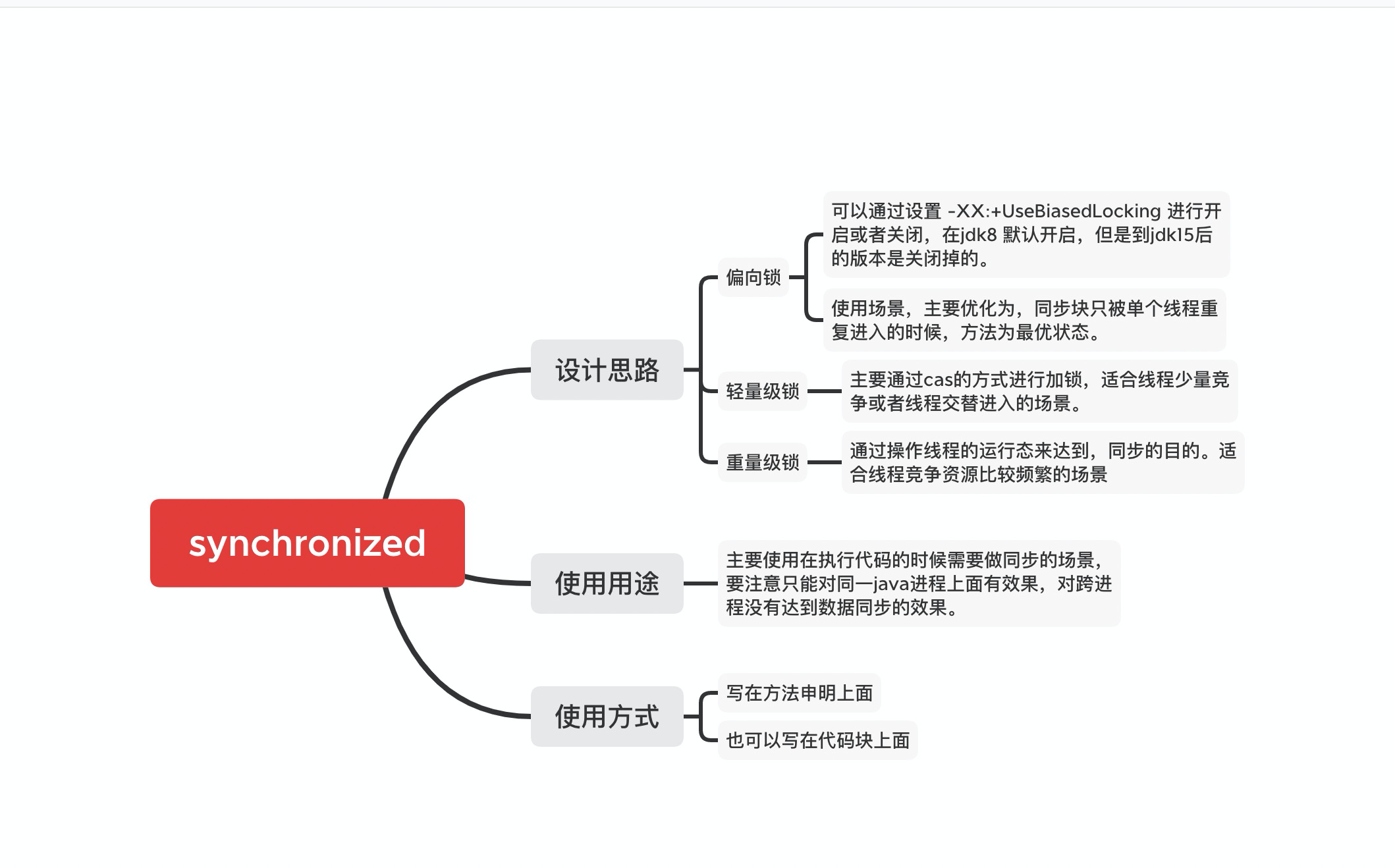

Key points of this article

This article covers how synchronized locks are upgraded. It walks through the upgrade logic in the HotSpot source code and explains, based on that code, why the designers built it this way.

synchronized analysis

Plenty of articles online already analyze synchronized end to end, so this one concentrates on answering a few specific questions.

Before looking at the examples, let's review how the mark word is laid out on a 64-bit system.
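As a rough aid for reading the headers printed later, here is a minimal sketch that pulls the individual fields out of a 64-bit mark word. It assumes the JDK 8 HotSpot layout for a biased object (thread pointer 54 bits, epoch 2, unused 1, age 4, biased_lock 1, lock 2). The class and method names are made up for illustration and are not part of any JVM API.

```java
// Sketch only: assumes the 64-bit JDK 8 HotSpot layout for a biased object:
// [ thread pointer: 54 | epoch: 2 | unused: 1 | age: 4 | biased_lock: 1 | lock: 2 ]
class MarkWordFields {
    // Lowest two bits: 01 unlocked/biasable, 00 lightweight, 10 heavyweight, 11 GC mark
    static int lockBits(long mark) { return (int) (mark & 0b11L); }

    // Third bit: 1 when the object is in biased mode
    static int biasedBit(long mark) { return (int) ((mark >>> 2) & 0b1L); }

    // GC age, 4 bits starting at bit 3
    static int age(long mark) { return (int) ((mark >>> 3) & 0b1111L); }

    // Bias epoch, 2 bits starting at bit 8
    static int epoch(long mark) { return (int) ((mark >>> 8) & 0b11L); }

    // Upper 54 bits: the owning thread pointer when biased (0 means anonymously biased)
    static long threadBits(long mark) { return mark >>> 10; }

    public static void main(String[] args) {
        // Hypothetical mark word: thread bits 0x1234, epoch 1, age 3, biased pattern 101
        long mark = (0x1234L << 10) | (1L << 8) | (3L << 3) | 0b101L;
        System.out.println("lock bits   = " + Integer.toBinaryString(lockBits(mark)));
        System.out.println("biased bit  = " + biasedBit(mark));
        System.out.println("age         = " + age(mark));
        System.out.println("epoch       = " + epoch(mark));
        System.out.println("thread bits = 0x" + Long.toHexString(threadBits(mark)));
    }
}
```

The low three bits are the ones to watch in the examples that follow: 101 means biased (or biasable), 001 means unlocked and not biasable, and a value ending in 00 means a lightweight lock.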

Do synchronized biased locks perform better than lightweight locks?

Before analyzing the source code, first look at the following code:


public class OneSingleThread {

public static void main(String[] args) {

OneSingleThread oneSingleThread = new OneSingleThread();

long before = System.currentTimeMillis();

for (int i = 0; i < 1000000; i++) {

synchronized (oneSingleThread) {

}

}

long after = System.currentTimeMillis();

System.out.println(after - before);

}

}

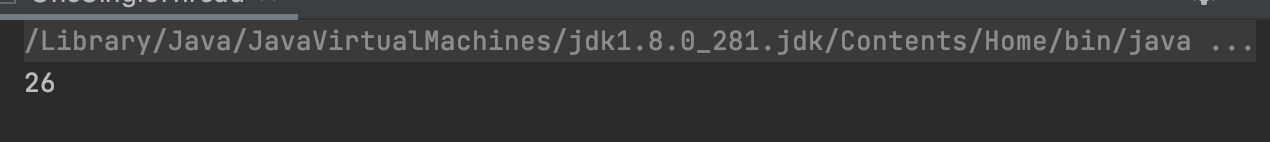

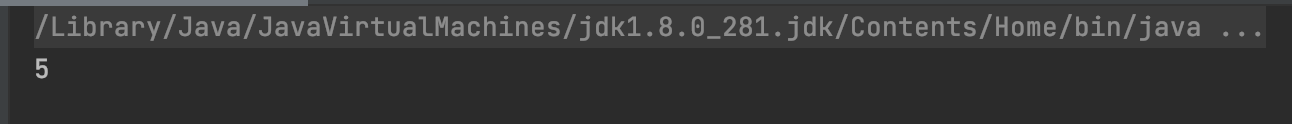

First run, with biased locking turned off:

-XX:-UseBiasedLocking -XX:BiasedLockingStartupDelay=0

Second run, with biased locking turned on:

-XX:+UseBiasedLocking -XX:BiasedLockingStartupDelay=0

Comparing the two runs, biased locking does improve performance in the single-threaded case: over 1,000,000 iterations, the biased run was about 26 milliseconds faster than the non-biased run. The premise is questionable, though. Why would the same thread acquire and release a lock a million times in a loop? A program that does this probably needs to be optimized itself.
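A single timed pass like the one above also measures JIT warm-up, and an empty synchronized block is an easy target for compiler optimization. The sketch below is one way to make the comparison a little less fragile: it does real work inside the lock and runs a few untimed warm-up rounds first. The class name, the number of warm-up rounds, and the counter are all assumptions for illustration; for trustworthy numbers a dedicated harness such as JMH is the better tool.

```java
// Sketch only: a slightly more careful timing loop than a single cold pass.
class LockLoopTiming {
    // Shared counter so the synchronized block has an observable effect
    static long counter;

    // Times `iterations` lock/unlock cycles on `lock`, returning elapsed nanoseconds
    static long timeLockedLoop(Object lock, int iterations) {
        long start = System.nanoTime();
        for (int i = 0; i < iterations; i++) {
            synchronized (lock) {
                counter++;
            }
        }
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        Object lock = new Object();
        // Untimed warm-up rounds (the count of 5 is an arbitrary choice)
        for (int round = 0; round < 5; round++) {
            timeLockedLoop(lock, 1_000_000);
        }
        long nanos = timeLockedLoop(lock, 1_000_000);
        System.out.println("1,000,000 lock cycles took " + nanos / 1_000_000.0 + " ms");
    }
}
```

Run it once with each set of VM parameters above and compare the printed times.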

What happens when a synchronized biased lock is upgraded to a lightweight lock?

package com.test;

import org.openjdk.jol.info.ClassLayout;

/**

* @Author: Peng Yujia

* @Date: 2021/12/5 11:53 AM

*/

public class TwoThreadDemo {

public static TwoThreadDemo lock = new TwoThreadDemo();

public static void main(String[] args) {

Thread a = new Thread() {

@Override

public void run() {

System.out.println("thread 1 Before lock" + ClassLayout.parseInstance(lock).toPrintable());

synchronized (lock) {

System.out.println("thread 1 Lock in" + ClassLayout.parseInstance(lock).toPrintable());

}

System.out.println("thread 1 After unlocking " + ClassLayout.parseInstance(lock).toPrintable());

}

};

Thread b = new Thread() {

@Override

public void run() {

System.out.println("thread 2 Before lock" + ClassLayout.parseInstance(lock).toPrintable());

synchronized (lock) {

System.out.println("thread 2 Lock in" + ClassLayout.parseInstance(lock).toPrintable());

}

System.out.println("thread 2 After unlocking " + ClassLayout.parseInstance(lock).toPrintable());

}

};

a.start();

//Ensure that thread 1 is executed, and then execute thread 2

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

b.start();

}

}

In the code above, the two threads take the lock one after the other, so they never compete for it.

The startup parameters are again:

-XX:+UseBiasedLocking -XX:BiasedLockingStartupDelay=0

thread 1 Before lock com.test.TwoThreadDemo object internals:

OFFSET SIZE TYPE DESCRIPTION VALUE

0 4 (object header) 05 00 00 00 (00000101 00000000 00000000 00000000) (5)

4 4 (object header) 00 00 00 00 (00000000 00000000 00000000 00000000) (0)

8 4 (object header) 05 c1 00 f8 (00000101 11000001 00000000 11111000) (-134168315)

12 4 (loss due to the next object alignment)

Instance size: 16 bytes

Space losses: 0 bytes internal + 4 bytes external = 4 bytes total

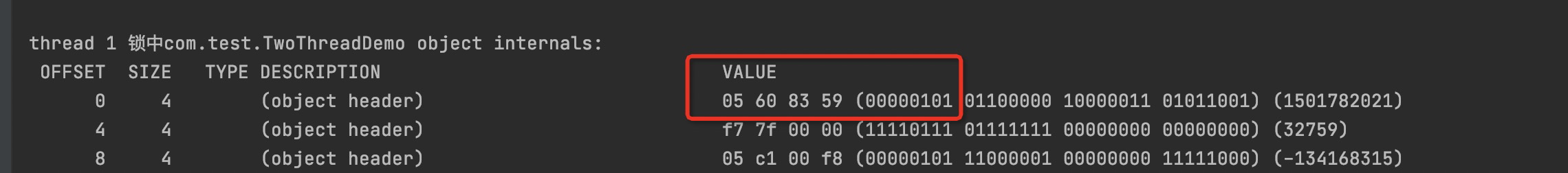

thread 1 Lock in com.test.TwoThreadDemo object internals:

OFFSET SIZE TYPE DESCRIPTION VALUE

0 4 (object header) 05 60 83 59 (00000101 01100000 10000011 01011001) (1501782021)

4 4 (object header) f7 7f 00 00 (11110111 01111111 00000000 00000000) (32759)

8 4 (object header) 05 c1 00 f8 (00000101 11000001 00000000 11111000) (-134168315)

12 4 (loss due to the next object alignment)

Instance size: 16 bytes

Space losses: 0 bytes internal + 4 bytes external = 4 bytes total

thread 1 After unlocking com.test.TwoThreadDemo object internals:

OFFSET SIZE TYPE DESCRIPTION VALUE

0 4 (object header) 05 60 83 59 (00000101 01100000 10000011 01011001) (1501782021)

4 4 (object header) f7 7f 00 00 (11110111 01111111 00000000 00000000) (32759)

8 4 (object header) 05 c1 00 f8 (00000101 11000001 00000000 11111000) (-134168315)

12 4 (loss due to the next object alignment)

Instance size: 16 bytes

Space losses: 0 bytes internal + 4 bytes external = 4 bytes total

thread 2 Before lock com.test.TwoThreadDemo object internals:

OFFSET SIZE TYPE DESCRIPTION VALUE

0 4 (object header) 05 60 83 59 (00000101 01100000 10000011 01011001) (1501782021)

4 4 (object header) f7 7f 00 00 (11110111 01111111 00000000 00000000) (32759)

8 4 (object header) 05 c1 00 f8 (00000101 11000001 00000000 11111000) (-134168315)

12 4 (loss due to the next object alignment)

Instance size: 16 bytes

Space losses: 0 bytes internal + 4 bytes external = 4 bytes total

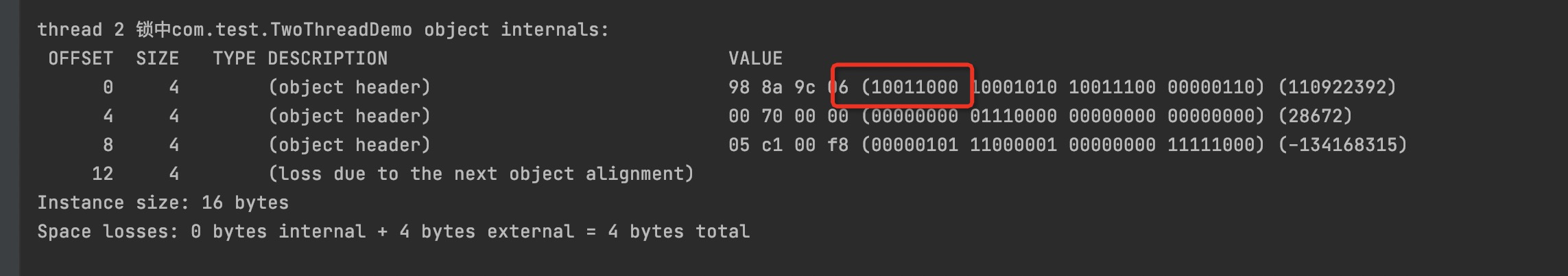

thread 2 Lock in com.test.TwoThreadDemo object internals:

OFFSET SIZE TYPE DESCRIPTION VALUE

0 4 (object header) 98 8a 9c 06 (10011000 10001010 10011100 00000110) (110922392)

4 4 (object header) 00 70 00 00 (00000000 01110000 00000000 00000000) (28672)

8 4 (object header) 05 c1 00 f8 (00000101 11000001 00000000 11111000) (-134168315)

12 4 (loss due to the next object alignment)

Instance size: 16 bytes

Space losses: 0 bytes internal + 4 bytes external = 4 bytes total

thread 2 After unlocking com.test.TwoThreadDemo object internals:

OFFSET SIZE TYPE DESCRIPTION VALUE

0 4 (object header) 01 00 00 00 (00000001 00000000 00000000 00000000) (1)

4 4 (object header) 00 00 00 00 (00000000 00000000 00000000 00000000) (0)

8 4 (object header) 05 c1 00 f8 (00000101 11000001 00000000 11111000) (-134168315)

12 4 (loss due to the next object alignment)

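From the output we can see that thread 1 holds a biased lock while it runs (the first header byte is 05, low bits 101). Thread 2 finds the lock already biased toward thread 1, so the lock is upgraded to a lightweight lock (first byte 98, low bits 00). After thread 2 releases it, the object is unlocked and no longer biasable (first byte 01, low bits 001).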
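To check this reading of the output, the header bytes can be reassembled into the 64-bit mark word and classified. The sketch below assumes the bytes are printed in little-endian memory order, which matches the output above (bytes 05 60 83 59 print as 1501782021, i.e. 0x59836005), and it assumes the JDK 8 bit layout. The class and method names are hypothetical.

```java
// Sketch only: rebuilds a mark word from the first 8 header bytes printed by JOL
// (assumed little-endian) and names the lock state from the low bits.
class JolHeaderDecoder {
    // Combines 8 bytes, given in printed order, into one 64-bit mark word
    static long markWord(int... bytes) {
        long mark = 0;
        for (int i = 0; i < 8; i++) {
            mark |= ((long) (bytes[i] & 0xFF)) << (8 * i);
        }
        return mark;
    }

    // Classifies the lock state from the low three bits of the mark word
    static String lockState(long mark) {
        if ((mark & 0b111L) == 0b101L) {
            // Thread bits of zero mean no thread owns the bias yet
            return (mark >>> 10) == 0 ? "anonymously biased" : "biased";
        }
        switch ((int) (mark & 0b11L)) {
            case 0b01: return "unlocked";
            case 0b00: return "lightweight";
            case 0b10: return "heavyweight";
            default:   return "gc-marked";
        }
    }

    public static void main(String[] args) {
        // Byte values copied from the output above
        long t1Before = markWord(0x05, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00);
        long t1Locked = markWord(0x05, 0x60, 0x83, 0x59, 0xf7, 0x7f, 0x00, 0x00);
        long t2Locked = markWord(0x98, 0x8a, 0x9c, 0x06, 0x00, 0x70, 0x00, 0x00);
        long t2After  = markWord(0x01, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00);
        System.out.println("thread 1 before lock: " + lockState(t1Before));
        System.out.println("thread 1 in lock:     " + lockState(t1Locked));
        System.out.println("thread 2 in lock:     " + lockState(t2Locked));
        System.out.println("thread 2 after:       " + lockState(t2After));
    }
}
```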

Summary:

Based on what we observed, under what circumstances can a biased lock be used?

The lock stays biased only when biased locking is enabled and the lock object is either not yet biased toward any thread or already biased toward the current thread.

What is bulk rebias?

Take a look at the following example:

package com.test;

import org.openjdk.jol.info.ClassLayout;

import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.locks.LockSupport;

/**

* @Author: Peng Yujia

* @Date: 2021/12/2 8:42 PM

*/

public class BatchBiase {

static Thread A;

static Thread B;

static int loopFlag = 20;

public static void main(String[] args) {

final List<BatchBiase> list = new ArrayList<>();

A = new Thread() {

@Override

public void run() {

for (int i = 0; i < loopFlag; i++) {

BatchBiase object = new BatchBiase();

list.add(object);

// System.out.println("A before locking " + i + " " + ClassLayout.parseInstance(object).toPrintable());

synchronized (object) {

// System.out.println("A in lock " + i + " " + ClassLayout.parseInstance(object).toPrintable());

}

System.out.println("A End of locking"+i+"second" + ClassLayout.parseInstance(object).toPrintable());

}

System.out.println("============thread A They're all biased locks=============");

LockSupport.unpark(B);

}

};

B = new Thread() {

@Override

public void run() {

// Park thread B first so that it never contends with thread A

LockSupport.park();

for (int i = 0; i < loopFlag; i++) {

BatchBiase object = list.get(i);

// The objects in the list are biased toward thread A

System.out.println("B The first time before locking"+i+" second" + ClassLayout.parseInstance(object).toPrintable());

synchronized (object) {

// The bias toward thread A is revoked and the lock is upgraded to a lightweight lock that points to a lock record on thread B's stack

// Once the revocation count reaches 20, bulk rebias kicks in and the object is rebiased to thread B

// System.out.println("B in lock " + i + " " + ClassLayout.parseInstance(object).toPrintable());

}

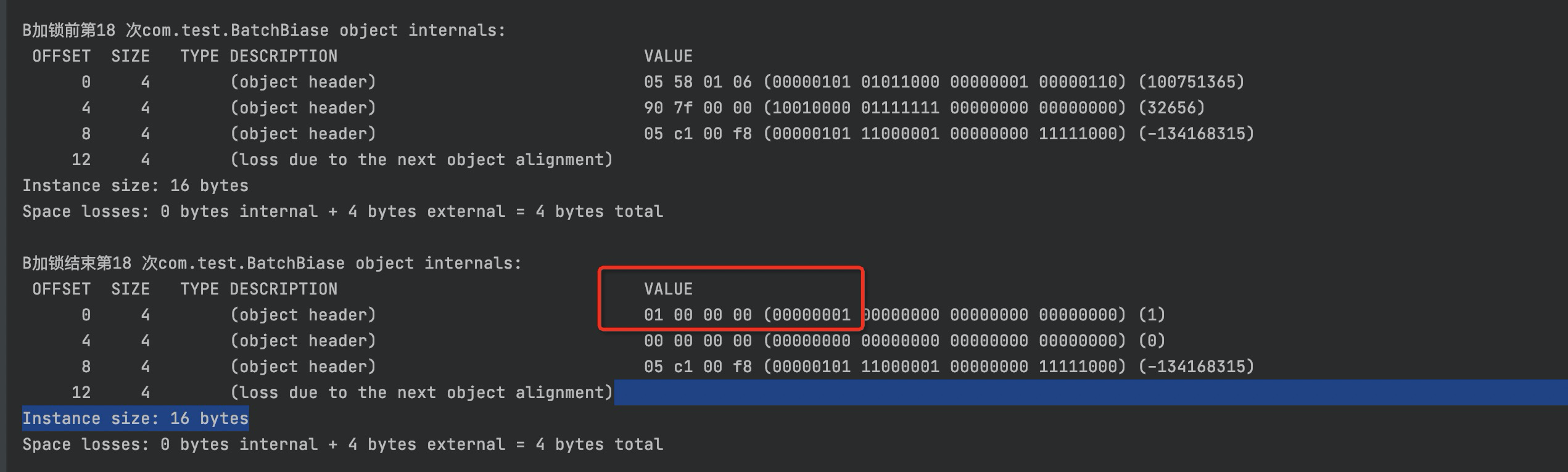

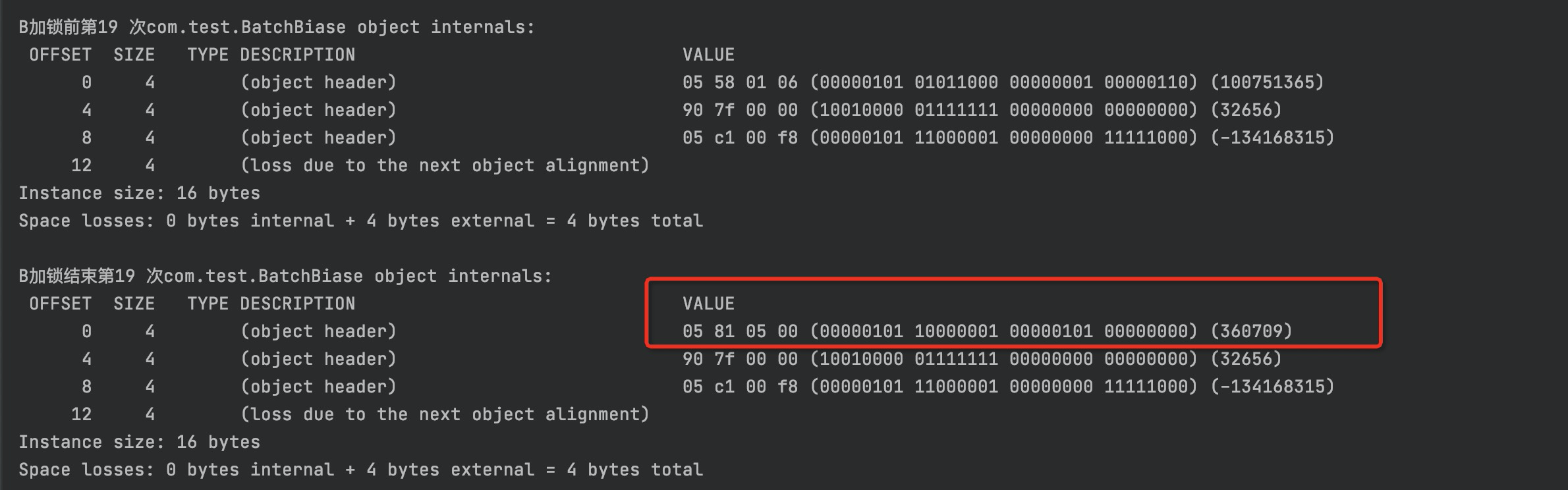

//Because the first 19 times are lightweight locks, they are unlocked after release and cannot be biased

//However, the 20th time is the biased lock. After the biased thread B is released, it is still biased to thread B

System.out.println("B End of locking"+i+" second" + ClassLayout.parseInstance(object).toPrintable());

}

}

};

A.start();

B.start();

}

}

The program above locks and unlocks multiple objects of the same class. Thread A runs first, and thread B runs after it.

You can see that when thread B reaches its 20th iteration, it acquires a biased lock again.

Why? With that question in mind, let's look at how the JVM handles it.

synchronized source code analysis

CASE(_monitorenter): {

// lockee is the lock object

oop lockee = STACK_OBJECT(-1);

// derefing's lockee ought to provoke implicit null check

CHECK_NULL(lockee);

// code 1: find a free Lock Record

BasicObjectLock* limit = istate->monitor_base();

BasicObjectLock* most_recent = (BasicObjectLock*) istate->stack_base();

BasicObjectLock* entry = NULL;

//Traverse to find free space

while (most_recent != limit ) {

if (most_recent->obj() == NULL) entry = most_recent;

else if (most_recent->obj() == lockee) break;

most_recent++;

}

//If the entry is not null, it means that there are still free lock records

if (entry != NULL) {

// code 2: point the obj pointer of the Lock Record to the lock object

entry->set_obj(lockee);

int success = false;

uintptr_t epoch_mask_in_place = (uintptr_t)markOopDesc::epoch_mask_in_place;

// mark is the mark word of the object header

markOop mark = lockee->mark();

intptr_t hash = (intptr_t) markOopDesc::no_hash;

// code 3: if the mark word status of the lock object is biased mode

if (mark->has_bias_pattern()) {

uintptr_t thread_ident;

uintptr_t anticipated_bias_locking_value;

thread_ident = (uintptr_t)istate->thread();

// code 4: here are several steps, which are analyzed below

anticipated_bias_locking_value =

(((uintptr_t)lockee->klass()->prototype_header() | thread_ident) ^ (uintptr_t)mark) &

~((uintptr_t) markOopDesc::age_mask_in_place);

// code 5: if the biased thread is itself and epoch is equal to epoch of class

if (anticipated_bias_locking_value == 0) {

// already biased towards this thread, nothing to do

if (PrintBiasedLockingStatistics) {

(* BiasedLocking::biased_lock_entry_count_addr())++;

}

success = true;

}

// code 6: if biased mode has been turned off for the class, try to revoke the bias

else if ((anticipated_bias_locking_value & markOopDesc::biased_lock_mask_in_place) != 0) {

markOop header = lockee->klass()->prototype_header();

if (hash != markOopDesc::no_hash) {

header = header->copy_set_hash(hash);

}

// Use CAS operation to replace mark word with mark word in class

if (Atomic::cmpxchg_ptr(header, lockee->mark_addr(), mark) == mark) {

if (PrintBiasedLockingStatistics)

(*BiasedLocking::revoked_lock_entry_count_addr())++;

}

}

// code 7: if the epoch differs from the epoch in the class, try to rebias

else if ((anticipated_bias_locking_value & epoch_mask_in_place) !=0) {

// Construct a mark word biased towards the current thread

markOop new_header = (markOop) ( (intptr_t) lockee->klass()->prototype_header() | thread_ident);

if (hash != markOopDesc::no_hash) {

new_header = new_header->copy_set_hash(hash);

}

// CAS replaces the mark word of the object header

if (Atomic::cmpxchg_ptr((void*)new_header, lockee->mark_addr(), mark) == mark) {

if (PrintBiasedLockingStatistics)

(* BiasedLocking::rebiased_lock_entry_count_addr())++;

}

else {

// If the rebias fails, another thread is competing, so call monitorenter to upgrade the lock

CALL_VM(InterpreterRuntime::monitorenter(THREAD, entry), handle_exception);

}

success = true;

}

else {

// Reaching here means the lock is biased toward another thread or is anonymously biased (not biased toward any thread)

// code 8: build an anonymously biased mark word as the expected value and try to CAS in a mark word biased toward the current thread

markOop header = (markOop) ((uintptr_t) mark & ((uintptr_t)markOopDesc::biased_lock_mask_in_place |(uintptr_t)markOopDesc::age_mask_in_place |epoch_mask_in_place));

if (hash != markOopDesc::no_hash) {

header = header->copy_set_hash(hash);

}

markOop new_header = (markOop) ((uintptr_t) header | thread_ident);

// debugging hint

DEBUG_ONLY(entry->lock()->set_displaced_header((markOop) (uintptr_t) 0xdeaddead);)

if (Atomic::cmpxchg_ptr((void*)new_header, lockee->mark_addr(), header) == header) {

// CAS modified successfully

if (PrintBiasedLockingStatistics)

(* BiasedLocking::anonymously_biased_lock_entry_count_addr())++;

}

else {

// If the modification fails, it indicates that there is multi-threaded competition, so enter the monitorenter method

CALL_VM(InterpreterRuntime::monitorenter(THREAD, entry), handle_exception);

}

success = true;

}

}

// If the bias thread is not the current thread or the bias mode is not enabled, success==false will be caused

if (!success) {

// Lightweight lock logic

// code 9: construct an unlocked Displaced Mark Word and store it in the Lock Record

markOop displaced = lockee->mark()->set_unlocked();

entry->lock()->set_displaced_header(displaced);

// If -XX:+UseHeavyMonitors is specified, call_vm = true, which means biased locks and lightweight locks are disabled

bool call_vm = UseHeavyMonitors;

// Use CAS to replace the mark word of the object header with a pointer to Lock Record

if (call_vm || Atomic::cmpxchg_ptr(entry, lockee->mark_addr(), displaced) != displaced) {

// Determine whether lock reentry

if (!call_vm && THREAD->is_lock_owned((address) displaced->clear_lock_bits())) { //code 10: if it is lock reentry, set the Displaced Mark Word to null directly

entry->lock()->set_displaced_header(NULL);

} else {

CALL_VM(InterpreterRuntime::monitorenter(THREAD, entry), handle_exception);

}

}

}

UPDATE_PC_AND_TOS_AND_CONTINUE(1, -1);

} else {

// Not enough Lock Records: request more and re-execute

istate->set_msg(more_monitors);

UPDATE_PC_AND_RETURN(0); // Re-execute

}

}

The logic above comes down to the conditions under which a biased lock is acquired successfully:

- If the epoch has not expired and the lock object is biased toward the current thread, the lock is acquired immediately and nothing else needs to be done.

- If the epoch has expired and the lock object's class still supports biased mode, try a CAS to rebias the lock.

- If the lock object is anonymously biased, i.e. not biased toward any thread, try a CAS to bias it toward the current thread.

In all other cases, execution enters the bias revocation logic:
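The XOR at code 4 is what separates these cases in a single comparison, so it is worth working through with numbers. The sketch below mirrors that expression in Java under the JDK 8 bit layout (lock and biased bits in the low three bits, age in bits 3 to 6, epoch in bits 8 and 9). The class name, the returned labels, and the sample values are invented for illustration, and the sketch assumes the caller has already checked that the mark word has the bias pattern, as code 3 does.

```java
// Sketch only: mirrors the anticipated_bias_locking_value test from the interpreter.
class BiasCheckSketch {
    static final long BIASED_LOCK_MASK = 0b111L;       // lock bits plus biased bit
    static final long AGE_MASK = 0b1111L << 3;         // GC age bits
    static final long EPOCH_MASK = 0b11L << 8;         // bias epoch bits

    // prototypeHeader: the class's prototype mark word
    // threadIdent: current thread pointer (low 10 bits assumed zero)
    // mark: the lock object's current mark word, already known to be biased
    static String classify(long prototypeHeader, long threadIdent, long mark) {
        // Age is masked out because it changes with GC and is irrelevant to locking
        long anticipated = ((prototypeHeader | threadIdent) ^ mark) & ~AGE_MASK;
        if (anticipated == 0) {
            return "ALREADY_BIASED";     // same thread, same epoch (code 5)
        }
        if ((anticipated & BIASED_LOCK_MASK) != 0) {
            return "TRY_REVOKE";         // class prototype is no longer biasable (code 6)
        }
        if ((anticipated & EPOCH_MASK) != 0) {
            return "TRY_REBIAS";         // epoch expired (code 7)
        }
        return "TRY_CAS_BIAS";           // anonymous or biased to another thread (code 8)
    }

    public static void main(String[] args) {
        long me = 0x1000L;               // hypothetical thread pointer
        long biasableEpoch1 = 0x105L;    // prototype: biased pattern 101, epoch 1
        System.out.println(classify(biasableEpoch1, me, 0x1115L)); // biased to me, age 2
        System.out.println(classify(0x001L, me, 0x1105L));         // class bias disabled
        System.out.println(classify(0x205L, me, 0x1105L));         // class epoch moved to 2
        System.out.println(classify(biasableEpoch1, me, 0x105L));  // anonymously biased
    }
}
```

Note that the last branch also covers an object biased toward a different thread. In that case the CAS in code 8 fails, because its expected value is the anonymously biased header, and the interpreter falls back to `InterpreterRuntime::monitorenter`.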

BiasedLocking::Condition BiasedLocking::revoke_and_rebias(Handle obj, bool attempt_rebias, TRAPS) {

// This method must not be called at a safepoint

assert(!SafepointSynchronize::is_at_safepoint(), "must not be called while at safepoint");

//Gets the object header of the lock object

markOop mark = obj->mark();

// Check whether the mark word is anonymously biased (biasable but not biased toward any thread)

// and attempt_rebias is false; otherwise fall through to the next branch

if (mark->is_biased_anonymously() && !attempt_rebias) {

// An identity hash code computation ends up here and destroys the biasable state

markOop biased_value = mark;

//Create a non biased markword

markOop unbiased_prototype = markOopDesc::prototype()->set_age(mark->age());

// Install the unbiased mark word with a CAS

markOop res_mark = (markOop) Atomic::cmpxchg_ptr(unbiased_prototype, obj->mark_addr(), mark);

if (res_mark == biased_value) {

return BIAS_REVOKED;

}

} else if (mark->has_bias_pattern()) {

//Coming here means that the object can be biased

Klass* k = obj->klass();

// Fetch the prototype header of the class

markOop prototype_header = k->prototype_header();

// If the class's prototype header is no longer biasable

if (!prototype_header->has_bias_pattern()) {

//Try to change the object to undo bias through cas

markOop biased_value = mark;

markOop res_mark = (markOop) Atomic::cmpxchg_ptr(prototype_header, obj->mark_addr(), mark);

assert(!(*(obj->mark_addr()))->has_bias_pattern(), "even if we raced, should still be revoked");

//Return bias undo

return BIAS_REVOKED;

} else if (prototype_header->bias_epoch() != mark->bias_epoch()) {

//Coming here means that the object bias state is expired

if (attempt_rebias) {

// THREAD must be a Java thread

assert(THREAD->is_Java_thread(), "");

markOop biased_value = mark;

// Build a mark word biased toward the current thread, using the class's current epoch

markOop rebiased_prototype = markOopDesc::encode((JavaThread*) THREAD, mark->age(), prototype_header->bias_epoch());

// Try to rebias with a CAS

markOop res_mark = (markOop) Atomic::cmpxchg_ptr(rebiased_prototype, obj->mark_addr(), mark);

// The rebias succeeded

if (res_mark == biased_value) {

return BIAS_REVOKED_AND_REBIASED;

}

} else {

//Revocation bias

markOop biased_value = mark;

markOop unbiased_prototype = markOopDesc::prototype()->set_age(mark->age());

markOop res_mark = (markOop) Atomic::cmpxchg_ptr(unbiased_prototype, obj->mark_addr(), mark);

if (res_mark == biased_value) {

return BIAS_REVOKED;

}

}

}

}

// Reached when a CAS above failed or none of the fast paths applied

HeuristicsResult heuristics = update_heuristics(obj(), attempt_rebias);

//Return unbiased

if (heuristics == HR_NOT_BIASED) {

return NOT_BIASED;

}

//Single undo logic goes here

else if (heuristics == HR_SINGLE_REVOKE) {

Klass *k = obj->klass();

markOop prototype_header = k->prototype_header();

if (mark->biased_locker() == THREAD &&

prototype_header->bias_epoch() == mark->bias_epoch()) {

ResourceMark rm;

if (TraceBiasedLocking) {

tty->print_cr("Revoking bias by walking my own stack:");

}

//Here is the logic of revoking bias

BiasedLocking::Condition cond = revoke_bias(obj(), false, false, (JavaThread*) THREAD);

((JavaThread*) THREAD)->set_cached_monitor_info(NULL);

assert(cond == BIAS_REVOKED, "why not?");

return cond;

} else {

VM_RevokeBias revoke(&obj, (JavaThread*) THREAD);

VMThread::execute(&revoke);

return revoke.status_code();

}

}

// Bulk revoke and bulk rebias are handled here

assert((heuristics == HR_BULK_REVOKE) ||

(heuristics == HR_BULK_REBIAS), "?");

VM_BulkRevokeBias bulk_revoke(&obj, (JavaThread*) THREAD,

(heuristics == HR_BULK_REBIAS),

attempt_rebias);

VMThread::execute(&bulk_revoke);

return bulk_revoke.status_code();

}

A few important points here:

- Computing an identity hash code interferes with the biased lock and causes the bias to be revoked.

- Bulk revocation must happen at a safepoint and is executed by the VM thread.

- In a few revocation cases the VM thread does not need to be involved, for example when there is no contention and a hashCode call triggers the revocation.

// The heuristics that decide which kind of revocation to perform

static HeuristicsResult update_heuristics(oop o, bool allow_rebias) {

markOop mark = o->mark();

//Can the object be biased

if (!mark->has_bias_pattern()) {

//Return unbiased

return HR_NOT_BIASED;

}

Klass* k = o->klass();

jlong cur_time = os::javaTimeMillis();

jlong last_bulk_revocation_time = k->last_biased_lock_bulk_revocation_time();

//Number of times class was revoked

int revocation_count = k->biased_lock_revocation_count();

// If the count is between the two thresholds and at least BiasedLockingDecayTime ms have passed since the last bulk operation, reset the count to 0

//BiasedLockingBulkRebiasThreshold =20

//BiasedLockingBulkRevokeThreshold =40

//BiasedLockingDecayTime=25000

if ((revocation_count >= BiasedLockingBulkRebiasThreshold) &&

(revocation_count < BiasedLockingBulkRevokeThreshold) &&

(last_bulk_revocation_time != 0) &&

(cur_time - last_bulk_revocation_time >= BiasedLockingDecayTime)) {

k->set_biased_lock_revocation_count(0);

revocation_count = 0;

}

// Stop incrementing once the count has passed the bulk revoke threshold

if (revocation_count <= BiasedLockingBulkRevokeThreshold) {

revocation_count = k->atomic_incr_biased_lock_revocation_count();

}

// When the count reaches the bulk revoke threshold, perform a bulk revoke

if (revocation_count == BiasedLockingBulkRevokeThreshold) {

return HR_BULK_REVOKE;

}

// When the count reaches the bulk rebias threshold, perform a bulk rebias

if (revocation_count == BiasedLockingBulkRebiasThreshold) {

return HR_BULK_REBIAS;

}

return HR_SINGLE_REVOKE;

}

The code above tells us two things:

- The Klass keeps a revocation count. Revoking the bias of any object of that class increments the class's count.

- Within each decay period, a bulk rebias or a bulk revoke is triggered when the count reaches the corresponding threshold. This is exactly why thread B ends up with a biased lock in the third example.
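To see why the 20th iteration is the turning point, the counter logic can be replayed in plain Java. This is a simplified sketch, not HotSpot code: it uses the default thresholds quoted in the comments above (20, 40, and 25000 ms), leaves out the HR_NOT_BIASED check, and keeps the per-class state in an ordinary object. The class name and the `recordBulkOperation` helper are hypothetical; the excerpt above does not show where HotSpot records the time of the last bulk operation.

```java
// Sketch only: replays the per-class revocation counter from update_heuristics.
class RevocationHeuristicsSketch {
    static final int BULK_REBIAS_THRESHOLD = 20;   // BiasedLockingBulkRebiasThreshold
    static final int BULK_REVOKE_THRESHOLD = 40;   // BiasedLockingBulkRevokeThreshold
    static final long DECAY_TIME_MS = 25_000;      // BiasedLockingDecayTime

    enum Result { SINGLE_REVOKE, BULK_REBIAS, BULK_REVOKE }

    private int revocationCount;   // revocations seen for this class
    private long lastBulkTimeMs;   // 0 means no bulk operation has happened yet

    // Called once per revocation of any object of the class
    Result update(long nowMs) {
        // Decay: a quiet period after a bulk operation resets the count
        if (revocationCount >= BULK_REBIAS_THRESHOLD
                && revocationCount < BULK_REVOKE_THRESHOLD
                && lastBulkTimeMs != 0
                && nowMs - lastBulkTimeMs >= DECAY_TIME_MS) {
            revocationCount = 0;
        }
        // The count stops growing once it has passed the revoke threshold
        if (revocationCount <= BULK_REVOKE_THRESHOLD) {
            revocationCount++;
        }
        if (revocationCount == BULK_REVOKE_THRESHOLD) return Result.BULK_REVOKE;
        if (revocationCount == BULK_REBIAS_THRESHOLD) return Result.BULK_REBIAS;
        return Result.SINGLE_REVOKE;
    }

    // Hypothetical helper: remembers when a bulk rebias or bulk revoke ran
    void recordBulkOperation(long nowMs) {
        lastBulkTimeMs = nowMs;
    }

    public static void main(String[] args) {
        RevocationHeuristicsSketch klass = new RevocationHeuristicsSketch();
        for (int i = 1; i <= 40; i++) {
            Result result = klass.update(1_000);
            if (result != Result.SINGLE_REVOKE) {
                System.out.println("revocation " + i + " -> " + result);
            }
        }
    }
}
```

In the BatchBiase example, thread B's first 19 lock attempts each count as a single revocation, and the 20th brings the count to the rebias threshold, which is where the bulk rebias is triggered.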

Summary

Many other articles have already analyzed the code, so this article mainly uses practical examples to explain how a biased lock is acquired. For source code analysis of biased lock revocation and of lightweight lock inflation, see the following link.

Deadlock Synchronized underlying implementation - biased lock