1. High Availability Principle of ActiveMQ

All ActiveMQ Brokers are registered with a ZooKeeper cluster. Only one Broker serves clients at a time and is regarded as the Master; the others stand by as Slaves. If the Master can no longer provide service, ZooKeeper elects one of the Slaves to take over as Master.

Each Slave connects to the Master and synchronizes its storage state; Slaves do not accept client connections. Every storage operation is replicated to the Slaves connected to the Master. If the Master goes down, the Slave with the most recent updates becomes the new Master. A failed node rejoins the cluster after recovery, connects to the Master, and runs in Slave mode.
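The failover rule described above can be sketched as a small simulation. This is not the actual ActiveMQ or ZooKeeper code: `BrokerNode`, `position`, and `electMaster` are hypothetical names, and "most recently updated" is modelled here as the highest replicated log position among the nodes that are still alive.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

class ElectionSketch {
    /** Hypothetical view of one broker: its id, last replicated position, and liveness. */
    static final class BrokerNode {
        final String id;
        final long position;
        final boolean alive;

        BrokerNode(String id, long position, boolean alive) {
            this.id = id;
            this.position = position;
            this.alive = alive;
        }
    }

    /** Picks the live node with the highest position; empty if no node is alive. */
    static Optional<BrokerNode> electMaster(List<BrokerNode> nodes) {
        return nodes.stream()
                .filter(node -> node.alive)
                .max(Comparator.comparingLong(node -> node.position));
    }

    public static void main(String[] args) {
        List<BrokerNode> cluster = Arrays.asList(
                new BrokerNode("activemq-1", 120, false), // old Master, now down
                new BrokerNode("activemq-2", 118, true),  // Slave that lags slightly
                new BrokerNode("activemq-3", 120, true)); // Slave that is fully caught up
        String master = electMaster(cluster).map(node -> node.id).orElse("none");
        System.out.println("New Master: " + master);
    }
}
```

In this sketch the failed node is simply skipped; once it recovers it would be added back with `alive = true` and, as long as a Master already exists, keep running as a Slave.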

Doesn't this feel like Redis Sentinel's master-slave high-availability approach, with ZooKeeper playing the same role that Sentinel plays in Redis?

In addition, here is a warning from the official documentation that users should note: replicated LevelDB does not support delayed or scheduled messages. These messages are stored in a separate file that is not replicated, so if you use delayed or scheduled messages they will not be copied to the Slave Brokers and are therefore not highly available.

2. ActiveMQ High Availability Environment Construction

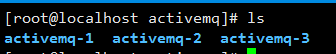

I am testing on a CentOS virtual machine. In real development, adjust the setup to your own situation. Three ActiveMQ instances are configured on this server, as shown in the following figure:

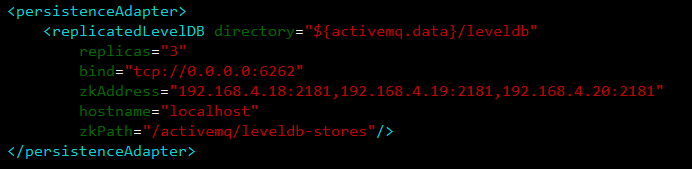

The configuration files that need to be modified below are all in the ACTIVEMQ_HOME/conf folder. First, change the persistence mode of each ActiveMQ instance (edit the ACTIVEMQ_HOME/conf/activemq.xml file). By default ActiveMQ uses KahaDB for persistent storage; here it is changed to LevelDB, as shown in the following figures:

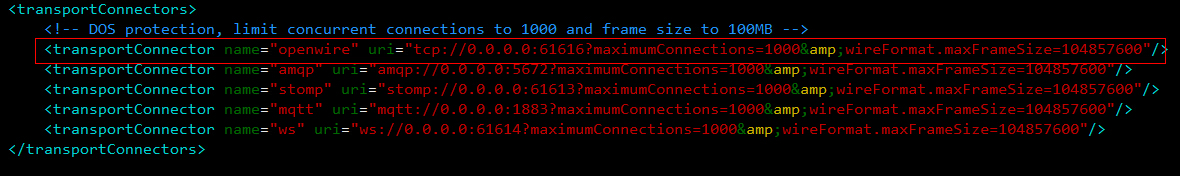

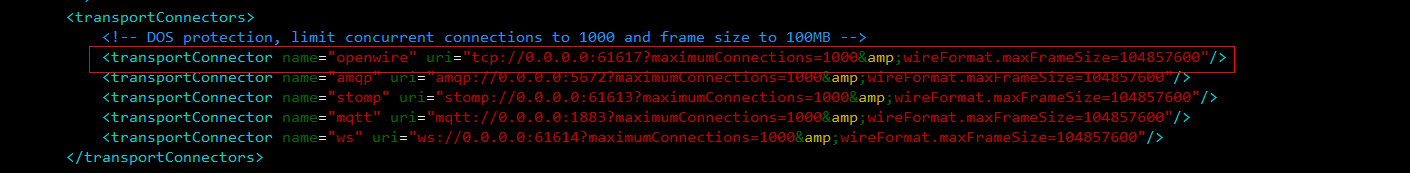

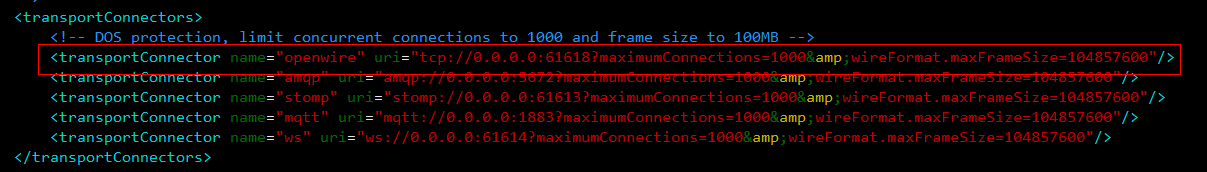

Next, modify the TCP connection port of each ActiveMQ instance. activemq-1 keeps the default port 61616, activemq-2 is changed to 61617, and activemq-3 is changed to 61618, as shown in the following figures (note the section in the red box):

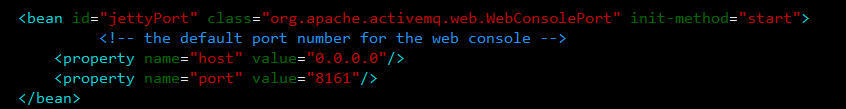

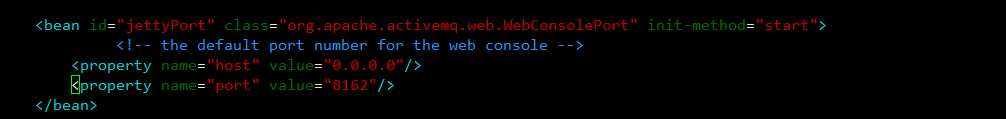

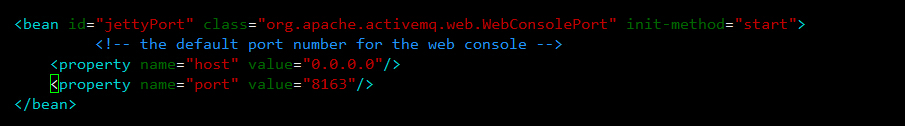

After saving those changes, modify the Jetty port (edit the ACTIVEMQ_HOME/conf/jetty.xml file). Because all three instances run on the same server, the second and third Jetty instances will not start unless their ports are changed. activemq-1 keeps the default port 8161, activemq-2 uses 8162, and activemq-3 uses 8163, as follows:

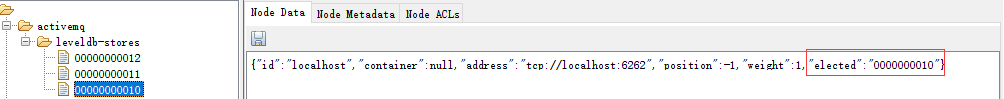

At this point, ActiveMQ is configured for high availability. If ZooKeeper is not running, start it first (see my blog post about ZooKeeper), then start activemq-1, activemq-2 and activemq-3 in turn. Go to ACTIVEMQ_HOME/data and check the activemq.log file; if it contains no errors, the startup succeeded. In ZooKeeper, you can see the following data:

The node whose elected value is not empty is the Master, and that ActiveMQ instance is the one providing service.
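Besides looking at ZooKeeper, a quick way to see which instance is currently serving is to probe the three TCP ports. Since Slaves do not accept client connections, one would expect only the Master's port to be open. The following is a minimal sketch using plain sockets; `MasterProbe` and `isListening` are hypothetical names, and the default host `localhost` is an assumption (pass your server's address as the first argument).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class MasterProbe {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false; // refused, unreachable, or timed out
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "localhost"; // assumed default
        int[] ports = {61616, 61617, 61618}; // the ports configured for activemq-1..3
        for (int port : ports) {
            boolean open = isListening(host, port, 1000);
            System.out.println(host + ":" + port + " -> "
                    + (open ? "accepting connections (likely the Master)" : "not listening"));
        }
    }
}
```

An open port only shows that something accepts TCP connections there; the activemq.log file and the ZooKeeper data remain the authoritative check.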

Okay, let's do some code testing.

ClustorProducer:

    import javax.jms.Connection;
    import javax.jms.ConnectionFactory;
    import javax.jms.Destination;
    import javax.jms.JMSException;
    import javax.jms.Message;
    import javax.jms.MessageProducer;
    import javax.jms.Session;

    import org.apache.activemq.ActiveMQConnectionFactory;

    public class ClustorProducer {

        private ConnectionFactory factory;
        private Connection connection;
        private Session session;
        private Destination destination;
        private MessageProducer producer;

        public ClustorProducer() throws JMSException {
            // The failover transport lists all three brokers; only the Master accepts the connection.
            this.factory = new ActiveMQConnectionFactory(
                    ActiveMQConnectionFactory.DEFAULT_USER,
                    ActiveMQConnectionFactory.DEFAULT_PASSWORD,
                    "failover:(tcp://192.168.4.19:61616,tcp://192.168.4.19:61617,tcp://192.168.4.19:61618)?randomize=false");
            this.connection = factory.createConnection();
            connection.start();
            // Non-transacted session with automatic acknowledgement.
            this.session = connection.createSession(false, Session.AUTO_ACKNOWLEDGE);
            this.destination = session.createQueue("first");
            this.producer = session.createProducer(destination);
        }

        public void send() throws JMSException, InterruptedException {
            // Send one message per second so a broker can be stopped while the test is running.
            for (int i = 1; i <= 50000; i++) {
                Message message = session.createTextMessage("Contents:" + i);
                producer.send(message); // the producer is already bound to the "first" queue
                System.out.println(message);
                Thread.sleep(1000);
            }
        }

        public static void main(String[] args) throws JMSException, InterruptedException {
            ClustorProducer clustorProducer = new ClustorProducer();
            clustorProducer.send();
        }
    }

ClustorConsumer:

    import javax.jms.Connection;
    import javax.jms.ConnectionFactory;
    import javax.jms.Destination;
    import javax.jms.JMSException;
    import javax.jms.Message;
    import javax.jms.MessageConsumer;
    import javax.jms.Session;

    import org.apache.activemq.ActiveMQConnectionFactory;

    public class ClustorConsumer {

        private ConnectionFactory factory;
        private Connection connection;
        private Session session;
        private Destination destination;
        private MessageConsumer consumer;

        public ClustorConsumer() throws JMSException {
            // Same failover URL as the producer, so the consumer also follows the Master.
            this.factory = new ActiveMQConnectionFactory(
                    ActiveMQConnectionFactory.DEFAULT_USER,
                    ActiveMQConnectionFactory.DEFAULT_PASSWORD,
                    "failover:(tcp://192.168.4.19:61616,tcp://192.168.4.19:61617,tcp://192.168.4.19:61618)?randomize=false");
            this.connection = factory.createConnection();
            connection.start();
            // Non-transacted session with automatic acknowledgement.
            this.session = connection.createSession(false, Session.AUTO_ACKNOWLEDGE);
            this.destination = session.createQueue("first");
            this.consumer = session.createConsumer(destination);
        }

        public void consume() throws JMSException, InterruptedException {
            while (true) {
                // receive() blocks until a message arrives; it returns null once the consumer is closed.
                Message message = consumer.receive();
                if (message == null) {
                    break;
                }
                System.out.println(message);
                Thread.sleep(1000);
            }
        }

        public static void main(String[] args) throws JMSException, InterruptedException {
            ClustorConsumer clustorConsumer = new ClustorConsumer();
            clustorConsumer.consume();
        }
    }

The brokerUrl parameter changes to:

failover:(tcp://192.168.4.19:61616,tcp://192.168.4.19:61617,tcp://192.168.4.19:61618)?randomize=false
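The URL above follows a simple pattern: a comma-separated list of `tcp://host:port` entries wrapped in `failover:(...)`, followed by options. With `randomize=false` the client tries the brokers in the listed order instead of picking one at random. As a small illustration, the string can be assembled from a host and a list of ports; `FailoverUrl` and `build` are hypothetical helper names, not part of the ActiveMQ API.

```java
import java.util.ArrayList;
import java.util.List;

class FailoverUrl {
    /** Builds a failover URL for several brokers that run on the same host. */
    static String build(String host, int[] ports, boolean randomize) {
        List<String> uris = new ArrayList<>();
        for (int port : ports) {
            uris.add("tcp://" + host + ":" + port);
        }
        return "failover:(" + String.join(",", uris) + ")?randomize=" + randomize;
    }

    public static void main(String[] args) {
        // Host and ports taken from the test environment in this article.
        System.out.println(build("192.168.4.19", new int[] {61616, 61617, 61618}, false));
    }
}
```

The resulting string can be passed as the third argument of the `ActiveMQConnectionFactory` constructor used in the producer and consumer.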

After stopping any one of the three ActiveMQ instances, messages can still be sent and received, which shows that the high-availability setup works.

3. ActiveMQ Cluster Load Balancing

The previous section implemented a high-availability deployment of ActiveMQ. A high-availability cluster alone cannot provide load balancing, but a little extra configuration links two such clusters so that load balancing is achieved as well.

In cluster 1's activemq.xml, link to cluster 2 (configured before the persistenceAdapter tag):

    <networkConnectors>
        <networkConnector uri="static:(tcp://192.168.1.103:61616,tcp://192.168.1.103:61617,tcp://192.168.1.103:61618)" duplex="false"/>
    </networkConnectors>

In cluster 2's activemq.xml, link to cluster 1 (configured before the persistenceAdapter tag):

    <networkConnectors>
        <networkConnector uri="static:(tcp://192.168.1.104:61616,tcp://192.168.1.104:61617,tcp://192.168.1.104:61618)" duplex="false"/>
    </networkConnectors>

In this way, ActiveMQ provides both high availability and load balancing across the clusters.

Client Connection:

ActiveMQ clients can connect only to the Master Broker; the Slave Brokers do not accept connections. Clients should therefore connect to the Brokers using the failover protocol.

The broker URL configured on the client should be:

failover:(tcp://192.168.1.103:61616,tcp://192.168.1.103:61617,tcp://192.168.1.103:61618)?randomize=false

Or:

failover:(tcp://192.168.1.104:61616,tcp://192.168.1.104:61617,tcp://192.168.1.104:61618)?randomize=false
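Since a client may use either of the two URLs, one simple way to spread clients over both clusters is to hand the URLs out in turn. The sketch below is only an illustration under that assumption; `ClusterUrlPicker` is a hypothetical helper, not an ActiveMQ class, and the article itself does not prescribe how clients should choose.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class ClusterUrlPicker {
    private final List<String> urls;
    private final AtomicInteger counter = new AtomicInteger();

    ClusterUrlPicker(List<String> urls) {
        if (urls.isEmpty()) {
            throw new IllegalArgumentException("at least one URL is required");
        }
        this.urls = new ArrayList<>(urls); // defensive copy
    }

    /** Returns the cluster URLs in round-robin order; safe to call from several threads. */
    String next() {
        return urls.get(Math.floorMod(counter.getAndIncrement(), urls.size()));
    }

    public static void main(String[] args) {
        ClusterUrlPicker picker = new ClusterUrlPicker(Arrays.asList(
                "failover:(tcp://192.168.1.103:61616,tcp://192.168.1.103:61617,tcp://192.168.1.103:61618)?randomize=false",
                "failover:(tcp://192.168.1.104:61616,tcp://192.168.1.104:61617,tcp://192.168.1.104:61618)?randomize=false"));
        for (int client = 1; client <= 4; client++) {
            System.out.println("client " + client + " -> " + picker.next());
        }
    }
}
```

Each URL still uses the failover protocol, so a client assigned to one cluster keeps following that cluster's Master when a Broker fails.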