- Implementation with zookeeper

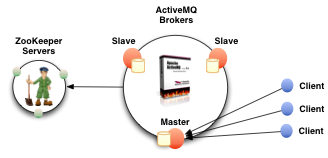

This is an effective way to make ActiveMQ highly available. All ActiveMQ brokers register with a ZooKeeper cluster. Only one broker, the master, serves clients; the others stand by as slaves. If the master can no longer provide service, ZooKeeper's election mechanism promotes one of the slaves to master, and that broker continues serving clients.

Official documents: http://activemq.apache.org/replicated-leveldb-store.html

Reference document: https://www.ibm.com/developerworks/cn/data/library/bd-zookeeper/

2. Introduction of Cluster Roles

A ZooKeeper cluster has two main roles, leader and follower, plus an optional third role, observer.

The leader initiates votes, decides their outcome, and updates the system state.

Followers and observers are together called learners.

A follower accepts client requests, returns the results to the client, and takes part in voting.

An observer also accepts client connections and forwards write requests to the leader, but it does not vote; it only synchronizes the leader's state. Observers exist to scale the system out and speed up reads.

3. Number of zookeeper Cluster Nodes

How many zookeeper nodes does a zookeeper cluster need to run?

You can run a single ZooKeeper node, but that is not a cluster. For a cluster, deploy 3, 5, or 7 ZooKeeper nodes. This experiment uses three nodes.

The more ZooKeeper nodes are deployed, the more node failures the service can survive. An even number of nodes works but is not recommended: the cluster stays available only while more than half of its nodes are up, so a fourth node tolerates no more failures than three do. That is why odd-sized clusters are preferred.

Give each ZooKeeper node about 1 GB of memory and, if possible, its own disk, because a dedicated disk keeps ZooKeeper's performance high. If the cluster is heavily loaded, do not run ZooKeeper on the same machines as other heavily loaded services such as RegionServers, DataNodes, or TaskTrackers.
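The majority rule above can be checked with a little arithmetic. The following is a minimal sketch, not part of ZooKeeper itself; the function names `quorum` and `tolerated_failures` are made up for illustration.

```python
def quorum(ensemble_size: int) -> int:
    """Smallest number of servers that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """Servers that may fail while a majority is still running."""
    return ensemble_size - quorum(ensemble_size)


# Compare odd and even ensemble sizes side by side.
for n in range(1, 8):
    print(f"{n} node(s): quorum={quorum(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")
```

Three and four nodes both tolerate one failure, and five and six both tolerate two, which is the reason an even-sized cluster buys nothing extra.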

2. Environment preparation

ZooKeeper environment

| Host IP | Message port | Communication ports | Deployment path (under /usr/local) |
| --- | --- | --- | --- |
| 192.168.0.85 | 2181 | 2888:3888 | zookeeper-3.4.10 |
| 192.168.0.171 | 2181 | 2888:3888 | zookeeper-3.4.10 |
| 192.168.0.181 | 2181 | 2888:3888 | zookeeper-3.4.10 |

3. Install and configure zookeeper

    # Extract the archive
    [root@zqdd:/root]#tar xvf zookeeper-3.4.10.tar.gz -C /usr/local/
    # Copy the sample configuration to zoo.cfg
    [root@zqdd:/usr/local/zookeeper-3.4.10/conf]#cp zoo_sample.cfg zoo.cfg
    # Export the ZooKeeper environment variables: add the following two lines to /etc/profile
    export ZK_HOME=/usr/local/zookeeper-3.4.10
    export PATH=$PATH:$ZK_HOME/bin
    # Apply the environment variables
    [root@zqdd:/root]#source /etc/profile

4. Modify the main ZooKeeper configuration file zoo.cfg

    [root@zqdd:/usr/local/zookeeper-3.4.10/conf]#cat zoo.cfg
    # The number of milliseconds of each tick
    tickTime=2000
    # The number of ticks that the initial
    # synchronization phase can take
    initLimit=5
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=2
    # the directory where the snapshot is stored.
    # do not use /tmp for storage, /tmp here is just
    # example sakes.
    dataDir=/tmp/zookeeper
    # the port at which the clients will connect
    clientPort=2181
    # the maximum number of client connections.
    # increase this if you need to handle more clients
    #maxClientCnxns=60
    #
    # Be sure to read the maintenance section of the
    # administrator guide before turning on autopurge.
    #
    # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
    #
    # The number of snapshots to retain in dataDir
    #autopurge.snapRetainCount=3
    # Purge task interval in hours
    # Set to "0" to disable auto purge feature
    #autopurge.purgeInterval=1
    dataLogDir=/var/log
    server.1=192.168.0.171:2888:3888
    server.2=192.168.0.181:2888:3888
    server.3=192.168.0.85:2888:3888

Description of the parameters:

- dataDir: the data (snapshot) directory. As the comment in the file warns, /tmp is acceptable only for an experiment like this one.
- dataLogDir: the transaction log directory.
- clientPort: the port clients connect to.
- tickTime: the heartbeat interval, in milliseconds, between ZooKeeper servers and between clients and servers; one heartbeat is sent every tickTime.
- initLimit: how many ticks the leader tolerates while a follower makes its initial connection and synchronizes. The "client" here is a follower connecting to the leader, not an application client. If the leader has heard nothing back after 5 ticks, the connection is considered failed. Total: 5 * 2000 ms = 10 seconds.
- syncLimit: the maximum number of ticks allowed between a request and its acknowledgement when the leader and a follower exchange messages. Total: 2 * 2000 ms = 4 seconds.
- server.A=B:C:D: A is a number identifying the server; B is the server's IP address; C is the port the server uses to exchange information with the leader; D is the port the servers use to talk to each other during an election, when the leader has failed and a new one must be chosen. In a pseudo-cluster (several instances on one host), B is the same for every instance, so each instance must be given different port numbers.
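The timeout arithmetic and the server.A=B:C:D layout described above can be made concrete with a small parser. This is only a sketch under assumptions: `parse_zoo_cfg` and `Server` are illustrative names, not ZooKeeper APIs, and `SAMPLE` repeats only the uncommented settings from the listing.

```python
from typing import Dict, NamedTuple, Tuple


class Server(NamedTuple):
    host: str           # B: address of the server
    peer_port: int      # C: traffic between a server and the leader
    election_port: int  # D: leader election traffic


def parse_zoo_cfg(text: str) -> Tuple[Dict[str, str], Dict[int, Server]]:
    """Split zoo.cfg text into plain settings and server.N entries."""
    settings: Dict[str, str] = {}
    servers: Dict[int, Server] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, value = (part.strip() for part in line.split("=", 1))
        if key.startswith("server."):
            host, peer, election = value.split(":")[:3]
            server_id = int(key[len("server."):])
            servers[server_id] = Server(host, int(peer), int(election))
        else:
            settings[key] = value
    return settings, servers


SAMPLE = """\
tickTime=2000
initLimit=5
syncLimit=2
dataDir=/tmp/zookeeper
clientPort=2181
dataLogDir=/var/log
server.1=192.168.0.171:2888:3888
server.2=192.168.0.181:2888:3888
server.3=192.168.0.85:2888:3888
"""

settings, servers = parse_zoo_cfg(SAMPLE)
tick_ms = int(settings["tickTime"])
init_timeout_s = int(settings["initLimit"]) * tick_ms / 1000
sync_timeout_s = int(settings["syncLimit"]) * tick_ms / 1000
print(f"initial sync timeout: {init_timeout_s} s")
print(f"request/ack timeout: {sync_timeout_s} s")
for server_id, server in sorted(servers.items()):
    print(server_id, server)
```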

Each ZooKeeper instance needs its own data directory and its own log directory, so the directory named by the dataDir setting must be created by hand first.

5. Create ServerID identifiers

Besides zoo.cfg, cluster mode requires a myid file, placed in the dataDir directory configured in zoo.cfg. The file contains a single value: the A from this machine's own server.A=B:C:D entry. In the zoo.cfg shown above, 192.168.0.85 is server.3, so the myid file on 192.168.0.85 must contain 3 (likewise 1 on 192.168.0.171 and 2 on 192.168.0.181):

    echo "3" > /tmp/zookeeper/myid
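Since a myid that does not match the machine's server.N entry is an easy mistake to make, the value can be derived from zoo.cfg instead of typed by hand. A minimal sketch, assuming the server entries use plain IP addresses as in this setup; `myid_for` and `write_myid` are hypothetical helper names, and the demo writes into a temporary directory rather than the real dataDir.

```python
import re
import tempfile
from pathlib import Path

# Matches lines such as: server.3=192.168.0.85:2888:3888
SERVER_LINE = re.compile(r"^server\.(\d+)=([^:\s]+):\d+:\d+", re.MULTILINE)

ZOO_CFG = """\
dataDir=/tmp/zookeeper
server.1=192.168.0.171:2888:3888
server.2=192.168.0.181:2888:3888
server.3=192.168.0.85:2888:3888
"""


def myid_for(zoo_cfg_text: str, host_ip: str) -> int:
    """Return the N of the server.N entry whose address is host_ip."""
    for server_id, address in SERVER_LINE.findall(zoo_cfg_text):
        if address == host_ip:
            return int(server_id)
    raise ValueError(f"{host_ip} is not listed in any server.N entry")


def write_myid(data_dir: str, server_id: int) -> Path:
    """Create data_dir if needed and write the myid file into it."""
    path = Path(data_dir) / "myid"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(f"{server_id}\n")
    return path


# Demo in a throwaway directory; on a real node pass the configured dataDir.
with tempfile.TemporaryDirectory() as tmp:
    written = write_myid(tmp, myid_for(ZOO_CFG, "192.168.0.85")).read_text()
print(written.strip())
```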

6. Install and configure ZooKeeper the same way on all three machines (only the myid value differs)

7. Start zookeeper and view the cluster status

    # On 192.168.0.85
    root@agent2:/root#zkServer.sh start      # start
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zookeeper-3.4.10/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    root@agent2:/root#zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zookeeper-3.4.10/bin/../conf/zoo.cfg
    Mode: leader      # role
    root@agent2:/root#zkServer.sh      # show usage
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zookeeper-3.4.10/bin/../conf/zoo.cfg
    Usage: /usr/local/zookeeper-3.4.10/bin/zkServer.sh {start|start-foreground|stop|restart|status|upgrade|print-cmd}

    # On 192.168.0.171
    [root@zqdd:/usr/local/zookeeper-3.4.10/conf]#zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zookeeper-3.4.10/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    [root@zqdd:/usr/local/zookeeper-3.4.10/conf]#zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zookeeper-3.4.10/bin/../conf/zoo.cfg
    Mode: follower      # role

    # On 192.168.0.181
    root@agent:/root#zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zookeeper-3.4.10/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    root@agent:/root#zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zookeeper-3.4.10/bin/../conf/zoo.cfg
    Mode: follower      # role
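The role of each node can also be read remotely over the client port, without logging in to run zkServer.sh status. The sketch below assumes the servers accept ZooKeeper's `srvr` four-letter command on port 2181; `four_letter_word`, `parse_mode`, and `cluster_roles` are illustrative helper names, and `SAMPLE_REPLY` is an abbreviated, made-up reply used only to exercise the parser.

```python
import socket
from typing import Dict, Iterable, Optional


def four_letter_word(host: str, port: int, command: str,
                     timeout: float = 3.0) -> str:
    """Send a four-letter command and return the server's full reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(command.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(reply: str) -> Optional[str]:
    """Extract the role from the 'Mode:' line, or None if it is absent."""
    for line in reply.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def cluster_roles(hosts: Iterable[str], port: int = 2181) -> Dict[str, Optional[str]]:
    """Ask every host for its role; needs network access to the nodes."""
    return {host: parse_mode(four_letter_word(host, port, "srvr"))
            for host in hosts}


# Abbreviated, illustrative reply (not captured from the cluster above).
SAMPLE_REPLY = "Zookeeper version: 3.4.10\nMode: leader\nNode count: 4\n"
print(parse_mode(SAMPLE_REPLY))
```

On a machine that can reach the cluster, `cluster_roles(["192.168.0.85", "192.168.0.171", "192.168.0.181"])` would return one role per host.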

8. Start ZooKeeper automatically at boot

    touch /etc/init.d/zookeeper       # create the startup script
    chmod +x /etc/init.d/zookeeper    # make it executable

The script is as follows:

    #!/bin/bash
    # chkconfig: 2345 20 90
    # description: zookeeper
    # processname: zookeeper
    case "$1" in
        start)   /usr/local/zookeeper-3.4.10/bin/zkServer.sh start;;
        stop)    /usr/local/zookeeper-3.4.10/bin/zkServer.sh stop;;
        status)  /usr/local/zookeeper-3.4.10/bin/zkServer.sh status;;
        restart) /usr/local/zookeeper-3.4.10/bin/zkServer.sh restart;;
        *)       echo "require start|stop|status|restart";;
    esac

Then register the service:

    chkconfig --add zookeeper             # add the service
    chkconfig --level 35 zookeeper on     # enable it in runlevels 3 and 5

9. Using the ZooKeeper client

    root@agent2:/usr/local/zookeeper-3.4.10/bin#zkCli.sh -timeout 5000 -server 192.168.0.85:2181
    Connecting to 192.168.0.85:2181
    2017-06-21 10:01:12,672 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.10-39d3a4f269333c922ed3db283be479f9deacaa0f, built on 03/23/2017 10:13 GMT
    2017-06-21 10:01:12,685 [myid:] - INFO [main:Environment@100] - Client environment:host.name=agent2
    2017-06-21 10:01:12,685 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.7.0_79
    2017-06-21 10:01:12,694 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation
    2017-06-21 10:01:12,697 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/local/jdk1.7.0_79/jre
    2017-06-21 10:01:12,697 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/local/zookeeper-3.4.10/bin/../build/classes:/usr/local/zookeeper-3.4.10/bin/../build/lib/*.jar:/usr/local/zookeeper-3.4.10/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper-3.4.10/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper-3.4.10/bin/../lib/netty-3.10.5.Final.jar:/usr/local/zookeeper-3.4.10/bin/../lib/log4j-1.2.16.jar:/usr/local/zookeeper-3.4.10/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper-3.4.10/bin/../zookeeper-3.4.10.jar:/usr/local/zookeeper-3.4.10/bin/../src/java/lib/*.jar:/usr/local/zookeeper-3.4.10/bin/../conf:
    2017-06-21 10:01:12,700 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib
    2017-06-21 10:01:12,700 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp
    2017-06-21 10:01:12,700 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>
    2017-06-21 10:01:12,702 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux
    2017-06-21 10:01:12,702 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64
    2017-06-21 10:01:12,702 [myid:] - INFO [main:Environment@100] - Client environment:os.version=2.6.32-431.el6.x86_64
    2017-06-21 10:01:12,703 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root
    2017-06-21 10:01:12,704 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root
    2017-06-21 10:01:12,704 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/usr/local/zookeeper-3.4.10/bin
    2017-06-21 10:01:12,713 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=192.168.0.85:2181 sessionTimeout=5000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@5bed2ccd
    Welcome to ZooKeeper!
    2017-06-21 10:01:12,877 [myid:] - INFO [main-SendThread(192.168.0.85:2181):ClientCnxn$SendThread@1032] - Opening socket connection to server 192.168.0.85/192.168.0.85:2181. Will not attempt to authenticate using SASL (unknown error)
    2017-06-21 10:01:12,928 [myid:] - INFO [main-SendThread(192.168.0.85:2181):ClientCnxn$SendThread@876] - Socket connection established to 192.168.0.85/192.168.0.85:2181, initiating session
    JLine support is enabled
    2017-06-21 10:01:13,013 [myid:] - INFO [main-SendThread(192.168.0.85:2181):ClientCnxn$SendThread@1299] - Session establishment complete on server 192.168.0.85/192.168.0.85:2181, sessionid = 0x35cc85763500000, negotiated timeout = 5000
    WATCHER::
    WatchedEvent state:SyncConnected type:None path:null
    [zk: 192.168.0.85:2181(CONNECTED) 0] ls
    [zk: 192.168.0.85:2181(CONNECTED) 1] ls /
    [activemq, zookeeper]
    [zk: 192.168.0.85:2181(CONNECTED) 2]

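Enter ls / to list the znodes under the root.

The root contains two znodes, zookeeper and activemq. A fresh ZooKeeper installation has no activemq znode; it appears here because the ActiveMQ cluster had already been built when this output was captured.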

10. Deploy activemq

| Host | Cluster communication port | Message port | Console port | Deployment path (under /usr/local) |
| --- | --- | --- | --- | --- |
| 192.168.0.85 | 61619 | 61616 | 8161 | apache-activemq-5.14.5 |
| 192.168.0.171 | 61619 | 61616 | 8161 | apache-activemq-5.14.5 |
| 192.168.0.181 | 61619 | 61616 | 8161 | apache-activemq-5.14.5 |

11. Installation of activemq

    # Download
    wget "http://www.apache.org/dyn/closer.cgi?filename=/activemq/5.14.5/apache-activemq-5.14.5-bin.tar.gz"
    # Extract
    tar xvf apache-activemq-5.14.5-bin.tar.gz -C /usr/local/
    # Start at boot: install the init script
    root@agent2:/usr/local/apache-activemq-5.14.5/bin#cp activemq /etc/init.d/activemq
    # Then add these two header lines to /etc/init.d/activemq so that chkconfig can manage it
    # chkconfig: 345 63 37
    # description: Auto start ActiveMQ

12. Start activemq

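    root@agent2:/usr/local/apache-activemq-5.14.5/bin#./activemq start
    # View the listening ports
    root@agent2:/usr/local/apache-activemq-5.14.5/bin#netstat -antlp |grep "8161\|61616\|616*"
    tcp        0     64 192.168.0.85:22     192.168.0.61:52967   ESTABLISHED 6702/sshd
    tcp        0      0 :::61613            :::*                 LISTEN      7481/java
    tcp        0      0 :::61614            :::*                 LISTEN      7481/java
    tcp        0      0 :::61616            :::*                 LISTEN      7481/java
    tcp        0      0 :::8161             :::*                 LISTEN      7481/java

The sshd line appears only because the pattern 616* also matches any line that contains 61 (here the peer address 192.168.0.61). The ActiveMQ listeners are 61613 (STOMP), 61614 (WebSocket), 61616 (OpenWire), and 8161 (web console).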
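As an alternative to filtering netstat output, the same check can be done by trying to connect to each port. This is a minimal sketch: `is_listening` and `port_report` are illustrative names, the default port list is taken from the listing above, and the self-check at the end probes a throwaway listener on 127.0.0.1 rather than a real broker.

```python
import socket
from typing import Dict, Iterable


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an errno value otherwise.
        return sock.connect_ex((host, port)) == 0


def port_report(host: str,
                ports: Iterable[int] = (8161, 61613, 61614, 61616)) -> Dict[int, bool]:
    """Map each port to whether something accepts connections on it."""
    return {port: is_listening(host, port) for port in ports}


# Self-check against a temporary local listener on an ephemeral port.
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    probe_ok = is_listening("127.0.0.1", server.getsockname()[1])
print(probe_ok)
```

On the broker host, `port_report("192.168.0.85")` would show which of the four ports are open.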

13. ActiveMQ cluster configuration

    root@agent2:/usr/local/apache-activemq-5.14.5/conf#cat activemq.xml
    <!--
        Licensed to the Apache Software Foundation (ASF) under one or more
        contributor license agreements. See the NOTICE file distributed with
        this work for additional information regarding copyright ownership.
        The ASF licenses this file to You under the Apache License, Version 2.0
        (the "License"); you may not use this file except in compliance with
        the License. You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

        Unless required by applicable law or agreed to in writing, software
        distributed under the License is distributed on an "AS IS" BASIS,
        WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
        See the License for the specific language governing permissions and
        limitations under the License.
    -->
    <!-- START SNIPPET: example -->
    <beans
      xmlns="http://www.springframework.org/schema/beans"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd
      http://activemq.apache.org/schema/core http://activemq.apache.org/schema/core/activemq-core.xsd">

        <!-- Allows us to use system properties as variables in this configuration file -->
        <bean class="org.springframework.beans.factory.config.PropertyPlaceholderConfigurer">
            <property name="locations">
                <value>file:${activemq.conf}/credentials.properties</value>
            </property>
        </bean>

        <!-- Allows accessing the server log -->
        <bean id="logQuery" class="io.fabric8.insight.log.log4j.Log4jLogQuery"
              lazy-init="false" scope="singleton"
              init-method="start" destroy-method="stop">
        </bean>

        <!--
            The <broker> element is used to configure the ActiveMQ broker.
        -->
        <!-- Author's note: set brokerName in conf/activemq.xml under the ActiveMQ installation path.
             The name must be identical on every node: brokerName="activemq-cluster" (change it on all three nodes). -->
        <broker xmlns="http://activemq.apache.org/schema/core" brokerName="activemq-cluster" dataDirectory="${activemq.data}">

            <destinationPolicy>
                <policyMap>
                  <policyEntries>
                    <policyEntry topic=">" >
                        <!-- The constantPendingMessageLimitStrategy is used to prevent
                             slow topic consumers to block producers and affect other consumers
                             by limiting the number of messages that are retained
                             For more information, see:

                             http://activemq.apache.org/slow-consumer-handling.html
                        -->
                      <pendingMessageLimitStrategy>
                        <constantPendingMessageLimitStrategy limit="1000"/>
                      </pendingMessageLimitStrategy>
                    </policyEntry>
                  </policyEntries>
                </policyMap>
            </destinationPolicy>

            <!--
                The managementContext is used to configure how ActiveMQ is exposed in
                JMX. By default, ActiveMQ uses the MBean server that is started by
                the JVM. For more information, see:

                http://activemq.apache.org/jmx.html
            -->
            <managementContext>
                <managementContext createConnector="false"/>
            </managementContext>

            <!--
                Configure message persistence for the broker. The default persistence
                mechanism is the KahaDB store (identified by the kahaDB tag).
                For more information, see:

                http://activemq.apache.org/persistence.html
            -->
            <persistenceAdapter>
                <!-- Author's note: comment out the default kahaDB adapter -->
                <!-- <kahaDB directory="${activemq.data}/kahadb"/> -->
                <!-- Author's note: add the replicated LevelDB store. hostname is this
                     machine's own IP, so each of the three machines fills in its own. -->
                <replicatedLevelDB
                    directory="${activemq.data}/leveldb"
                    replicas="3"
                    bind="tcp://0.0.0.0:61619"
                    zkAddress="192.168.0.85:2181,192.168.0.171:2181,192.168.0.181:2181"
                    hostname="192.168.0.85"
                    zkPath="/activemq/leveldb-stores"
                />
            </persistenceAdapter>

            <!-- Author's note: enable simple authentication -->
            <plugins>
                <simpleAuthenticationPlugin>
                    <users>
                        <authenticationUser username="${activemq.username}" password="${activemq.password}" groups="admins,everyone"/>
                        <authenticationUser username="mcollective" password="musingtec" groups="mcollective,admins,everyone"/>
                    </users>
                </simpleAuthenticationPlugin>
            </plugins>

            <!--
                The systemUsage controls the maximum amount of space the broker will
                use before disabling caching and/or slowing down producers. For more information, see:
                http://activemq.apache.org/producer-flow-control.html
            -->
            <systemUsage>
                <systemUsage>
                    <memoryUsage>
                        <memoryUsage percentOfJvmHeap="70" />
                    </memoryUsage>
                    <storeUsage>
                        <storeUsage limit="100 gb"/>
                    </storeUsage>
                    <tempUsage>
                        <tempUsage limit="50 gb"/>
                    </tempUsage>
                </systemUsage>
            </systemUsage>

            <!--
                The transport connectors expose ActiveMQ over a given protocol to
                clients and other brokers. For more information, see:

                http://activemq.apache.org/configuring-transports.html
            -->
            <transportConnectors>
                <!-- DOS protection, limit concurrent connections to 1000 and frame size to 100MB -->
                <transportConnector name="openwire" uri="tcp://0.0.0.0:61616?maximumConnections=1000&amp;wireFormat.maxFrameSize=104857600"/>
                <transportConnector name="amqp" uri="amqp://0.0.0.0:5672?maximumConnections=1000&amp;wireFormat.maxFrameSize=104857600"/>
                <transportConnector name="stomp" uri="stomp://0.0.0.0:61613?maximumConnections=1000&amp;wireFormat.maxFrameSize=104857600"/>
                <transportConnector name="mqtt" uri="mqtt://0.0.0.0:1883?maximumConnections=1000&amp;wireFormat.maxFrameSize=104857600"/>
                <transportConnector name="ws" uri="ws://0.0.0.0:61614?maximumConnections=1000&amp;wireFormat.maxFrameSize=104857600"/>
            </transportConnectors>

            <!-- destroy the spring context on shutdown to stop jetty -->
            <shutdownHooks>
                <bean xmlns="http://www.springframework.org/schema/beans" class="org.apache.activemq.hooks.SpringContextHook" />
            </shutdownHooks>

        </broker>

        <!--
            Enable web consoles, REST and Ajax APIs and demos
            The web consoles requires by default login, you can disable this in the jetty.xml file

            Take a look at ${ACTIVEMQ_HOME}/conf/jetty.xml for more details
        -->
        <import resource="jetty.xml"/>

    </beans>
    <!-- END SNIPPET: example -->
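The only part of the replicatedLevelDB element that differs between the three machines is hostname. As a rough illustration, the element for each host could be generated rather than edited by hand; `replicated_leveldb` is a hypothetical helper, and the attribute values are copied from the configuration above.

```python
import xml.etree.ElementTree as ET

HOSTS = ["192.168.0.85", "192.168.0.171", "192.168.0.181"]
ZK_ADDRESS = ",".join(f"{host}:2181" for host in HOSTS)


def replicated_leveldb(hostname: str) -> str:
    """Render the replicatedLevelDB element for one broker host."""
    element = ET.Element("replicatedLevelDB", {
        "directory": "${activemq.data}/leveldb",
        "replicas": str(len(HOSTS)),
        "bind": "tcp://0.0.0.0:61619",
        "zkAddress": ZK_ADDRESS,
        "hostname": hostname,  # the one value that changes per machine
        "zkPath": "/activemq/leveldb-stores",
    })
    return ET.tostring(element, encoding="unicode")


for host in HOSTS:
    print(replicated_leveldb(host))
```

Each printed element would replace the one inside persistenceAdapter on the matching machine; everything else in activemq.xml stays identical across the nodes.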

14. Cluster Services Completed

Test

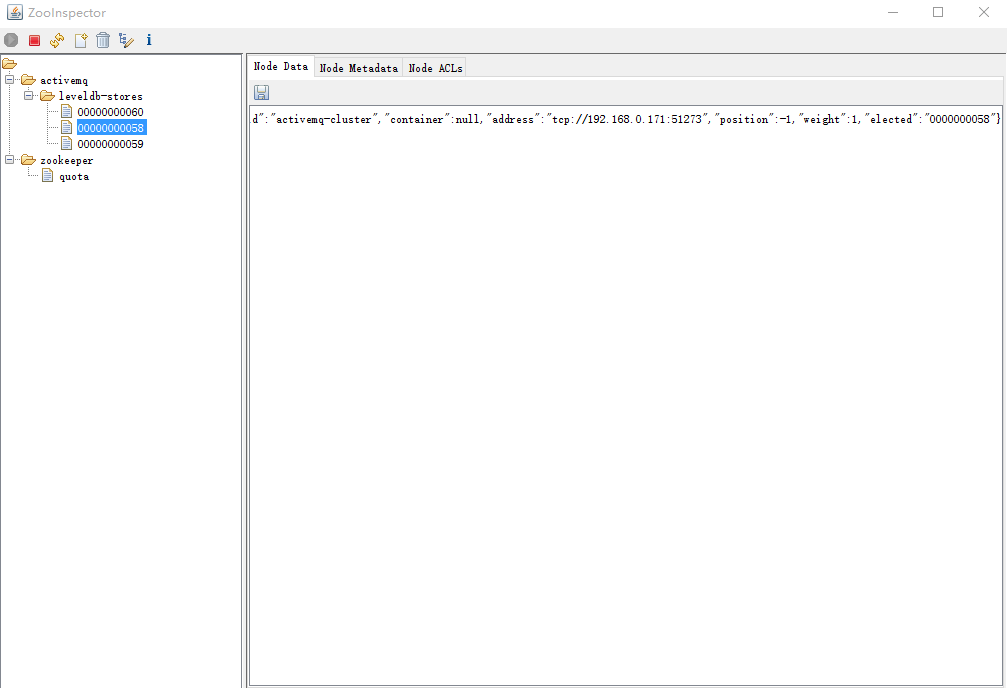

Use the debugging tool ZooInspector (ZooInspector.zip) to see which machine is currently running the active ActiveMQ broker.

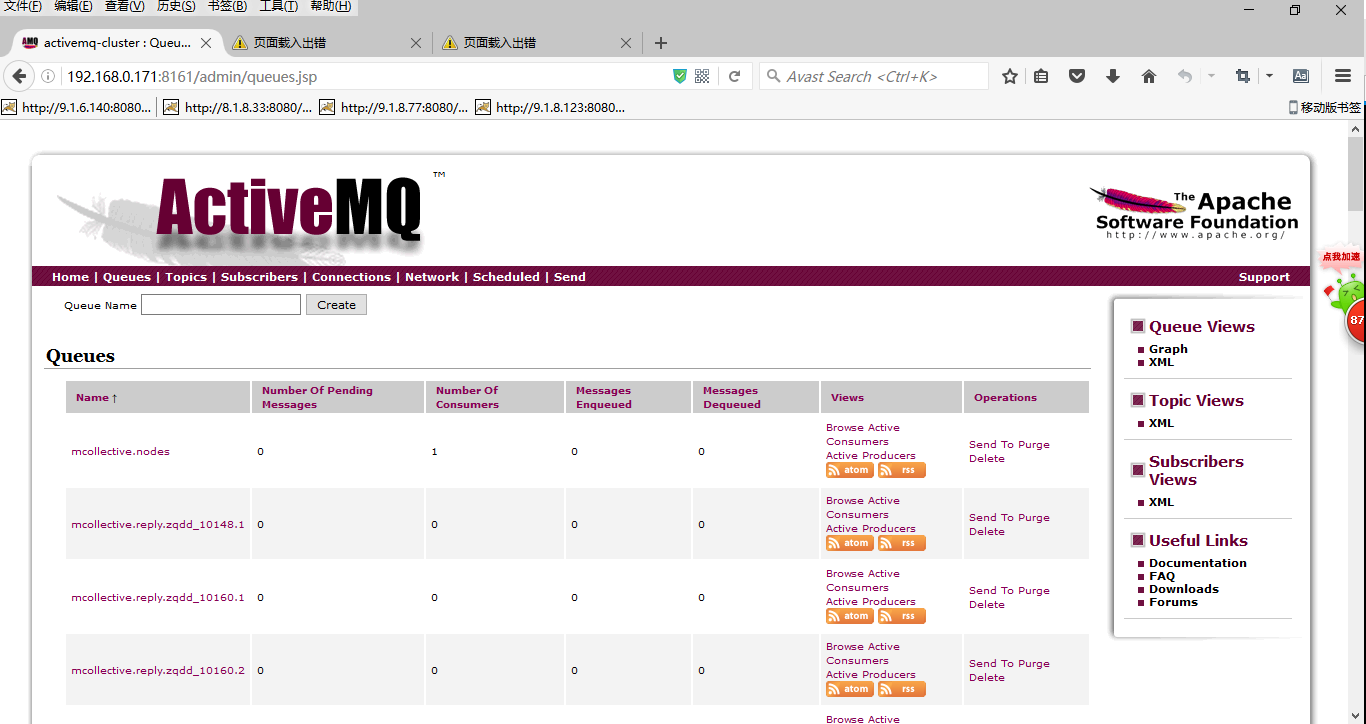

View Message Queue Console

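ActiveMQ services are not available on the other machines: only the master broker serves clients, while the slaves stay on standby until one of them is elected master.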
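Because only the master accepts connections, clients are usually given all three broker addresses through ActiveMQ's failover transport, so that they reconnect to whichever broker is master at the moment. The sketch below only builds such a connection URI; `failover_url` is an illustrative helper, and the `randomize=false` option in the usage line is shown as an example, not as a setting taken from this deployment.

```python
from typing import Iterable


def failover_url(hosts: Iterable[str], port: int = 61616, **options: str) -> str:
    """Build a failover: URI listing every broker's OpenWire endpoint."""
    brokers = ",".join(f"tcp://{host}:{port}" for host in hosts)
    url = f"failover:({brokers})"
    if options:
        query = "&".join(f"{key}={value}" for key, value in options.items())
        url += f"?{query}"
    return url


HOSTS = ["192.168.0.85", "192.168.0.171", "192.168.0.181"]
print(failover_url(HOSTS))
print(failover_url(HOSTS, randomize="false"))
```

The resulting string is what a client would use as its broker URL in place of a single tcp:// address.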

To be perfected...