Introduction: This post tests a minimalist Paddle program for MNIST recognition. It uses a very simple linear regression network as a first step toward getting familiar with how networks are built in Paddle. It also gives an example of converting tensors between numpy and Paddle.

Keywords: AI Studio, Paddle, MNIST

§ 01 Setting up the project

Create a Notebook-based project environment in AI Studio and select the MNIST dataset.

Following "Where can I find the final version of the sample program? AI Studio-MNIST", I experimented with the MNIST dataset using the Paddle framework in AI Studio, starting with the minimalist approach. Some problems came up along the way.

After asking around, I learned that the code in the book lags behind because books are slow to publish, whereas the AI Studio code used in the course has already been upgraded. The advice was to look at the "AI Studio handwritten numeral recognition case" and test against the code there.

1, Loading the dataset

    import matplotlib.pyplot as plt
    from numpy import *
    import math, time
    import paddle
    from paddle.nn import Linear
    import paddle.nn.functional as F
    import os

    # Load the MNIST training split
    train_dataset = paddle.vision.datasets.MNIST(mode='train')

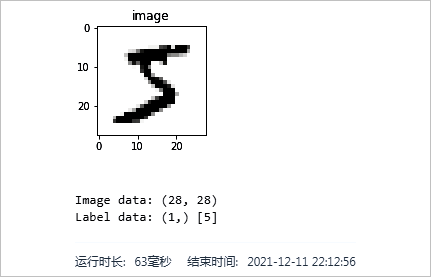

    # Take the first sample of the training set: image and label
    train_data0 = array(train_dataset[0][0])
    train_label0 = array(train_dataset[0][1])

    # Show the image in a single 5x5 inch figure named 'Image'
    plt.figure('Image', figsize=(5,5))
    plt.imshow(train_data0, cmap=plt.cm.binary)
    plt.axis('on')
    plt.title('MNIST image')
    plt.show()

    print('Image shape: {}'.format(train_data0.shape))
    print('Image label shape: {} and data: {}'.format(train_label0.shape, train_label0))

2. Common academic datasets

paddle.vision.datasets contains some common academic datasets, and so does paddle.text.datasets.

(1) Datasets in paddle.vision

dir(paddle.vision.datasets)

['Cifar10', 'Cifar100', 'DatasetFolder', 'FashionMNIST', 'Flowers', 'ImageFolder', 'MNIST', 'VOC2012', '__all__', '__builtins__', '__cached__', '__doc__', '__file__', '__loader__', '__name__', '__package__', '__path__', '__spec__', 'cifar', 'flowers', 'folder', 'mnist', 'voc2012']

(2) Datasets in paddle.text

dir(paddle.text.datasets)

['Conll05st', 'Imdb', 'Imikolov', 'Movielens', 'UCIHousing', 'WMT14', 'WMT16', '__all__', '__builtins__', '__cached__', '__doc__', '__file__', '__loader__', '__name__', '__package__', '__path__', '__spec__', 'conll05', 'imdb', 'imikolov', 'movielens', 'uci_housing', 'wmt14', 'wmt16']

2, Minimalist project

1. Model building

    class MNIST(paddle.nn.Layer):
        def __init__(self):
            super(MNIST, self).__init__()
            # A single fully connected layer: 784 input pixels, 1 output value
            self.fc = paddle.nn.Linear(in_features=784, out_features=1)

        def forward(self, inputs):
            outputs = self.fc(inputs)
            return outputs
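To make the model concrete: a single fully connected layer with 784 inputs and 1 output computes y = xW + b for each flattened image. The numpy sketch below mirrors that computation only. The names `linear_forward`, `W` and `b` are illustrative and not part of Paddle, and the random initialization is an assumption made for the demo.

```python
import numpy as np

def linear_forward(x, W, b):
    """Compute y = x @ W + b, the mapping a 784 -> 1 linear layer applies."""
    return x @ W + b

rng = np.random.default_rng(0)
x = rng.random((16, 784)).astype(np.float32)             # a batch of 16 flattened images
W = rng.normal(0.0, 0.01, (784, 1)).astype(np.float32)   # weights, shape [inputs, outputs]
b = np.zeros((1,), dtype=np.float32)                     # one bias value per output

y = linear_forward(x, W, b)
print(y.shape)  # (16, 1): one real-valued prediction per image
```

The whole model therefore has 784 weights plus 1 bias, and its output is a single real number that is compared directly with the digit label.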

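    def norm_img(img):
        # The input must have shape [batch_size, 28, 28]
        assert len(img.shape) == 3
        batch_size, img_h, img_w = img.shape[0], img.shape[1], img.shape[2]
        # Scale pixel values to [0, 1]
        img = img/255
        # Flatten each image to a vector of length img_h*img_w
        img = paddle.reshape(img, [batch_size, img_h*img_w])
        return img

    # Use the cv2 backend so the dataset returns arrays instead of PIL images
    paddle.vision.set_image_backend('cv2')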
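The effect of norm_img can be checked without Paddle. The following is a numpy counterpart under the assumption that a batch arrives as 8-bit pixel values with shape [batch_size, 28, 28]; the function name `norm_img_np` and the random test batch are made up for this illustration.

```python
import numpy as np

def norm_img_np(img):
    """numpy counterpart of norm_img: scale to [0, 1] and flatten each image."""
    assert img.ndim == 3                       # expects [batch_size, height, width]
    batch_size, img_h, img_w = img.shape
    img = img.astype(np.float32) / 255.0       # pixel values 0..255 -> 0..1
    return img.reshape(batch_size, img_h * img_w)

# Random stand-in for one batch of 16 MNIST-sized images
batch = np.random.default_rng(0).integers(0, 256, size=(16, 28, 28), dtype=np.uint8)
flat = norm_img_np(batch)
print(flat.shape, flat.dtype)
```

Each 28x28 image becomes one row of 784 values, which matches the in_features=784 of the linear layer.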

    def train(model):
        # Switch the model to training mode
        model.train()
        # Load the training set in shuffled batches of 16
        train_loader = paddle.io.DataLoader(paddle.vision.datasets.MNIST(mode='train'),
                                            batch_size=16,
                                            shuffle=True)
        # Stochastic gradient descent with a learning rate of 0.001
        opt = paddle.optimizer.SGD(learning_rate=0.001, parameters=model.parameters())
        EPOCH_NUM = 10
        for epoch in range(EPOCH_NUM):
            for batch_id, data in enumerate(train_loader()):
                images = norm_img(data[0]).astype('float32')
                labels = data[1].astype('float32')

                # Forward pass
                predicts = model(images)

                # Squared error per sample, then the batch mean
                loss = F.square_error_cost(predicts, labels)
                avg_loss = paddle.mean(loss)

                # Print the loss every 1000 batches
                if batch_id%1000 == 0:
                    print('epoch_id: {}, batch_id: {}, loss is: {}'.format(epoch, batch_id, avg_loss.numpy()))

                # Backward pass and parameter update
                avg_loss.backward()
                opt.step()
                opt.clear_grad()
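What backward(), step() and clear_grad() accomplish for this particular model can be written out by hand. The sketch below is a plain numpy version of one gradient descent update for a linear model under mean squared error. It is not Paddle's implementation: the helper name `sgd_step` is hypothetical, the data is synthetic, and the same batch is reused on every step so that the falling loss is easy to see.

```python
import numpy as np

def sgd_step(W, b, x, y, lr):
    """One gradient descent update for a linear model under mean squared error."""
    pred = x @ W + b                        # forward pass, shape [batch, 1]
    err = pred - y                          # residuals
    loss = float(np.mean(err ** 2))         # mean of the per-sample squared errors
    grad_W = 2.0 * x.T @ err / len(x)       # d(loss)/dW
    grad_b = 2.0 * err.mean(axis=0)         # d(loss)/db
    return W - lr * grad_W, b - lr * grad_b, loss

rng = np.random.default_rng(0)
x = rng.random((16, 784))                               # synthetic stand-in for one normalized batch
y = rng.integers(0, 10, size=(16, 1)).astype(float)     # synthetic digit labels 0..9
W, b = np.zeros((784, 1)), np.zeros(1)

losses = []
for _ in range(20):                                     # repeat on the same batch
    W, b, loss = sgd_step(W, b, x, y, lr=0.001)
    losses.append(loss)
print(losses[0], losses[-1])
```

In the real training loop every step sees a different shuffled batch, so the printed loss fluctuates from one log line to the next instead of decreasing smoothly.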

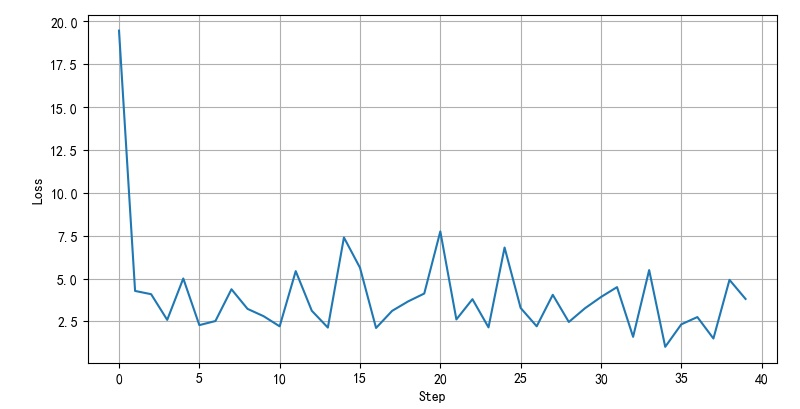

2. Training model

    model = MNIST()
    train(model)
    # Save the trained parameters
    paddle.save(model.state_dict(), './mnist.pdparams')

3. Training results

    epoch_id: 0, batch_id: 0, loss is: [19.446383]
    epoch_id: 0, batch_id: 1000, loss is: [4.280066]
    epoch_id: 0, batch_id: 2000, loss is: [4.089441]
    epoch_id: 0, batch_id: 3000, loss is: [2.5934415]
    epoch_id: 1, batch_id: 0, loss is: [5.005641]
    epoch_id: 1, batch_id: 1000, loss is: [2.2887247]
    epoch_id: 1, batch_id: 2000, loss is: [2.5260096]
    epoch_id: 1, batch_id: 3000, loss is: [4.377707]
    epoch_id: 2, batch_id: 0, loss is: [3.2349763]
    epoch_id: 2, batch_id: 1000, loss is: [2.8085265]
    epoch_id: 2, batch_id: 2000, loss is: [2.2175798]
    epoch_id: 2, batch_id: 3000, loss is: [5.4343185]
    epoch_id: 3, batch_id: 0, loss is: [3.1255033]
    epoch_id: 3, batch_id: 1000, loss is: [2.1449356]
    epoch_id: 3, batch_id: 2000, loss is: [7.3950243]
    epoch_id: 3, batch_id: 3000, loss is: [5.631453]
    epoch_id: 4, batch_id: 0, loss is: [2.1221619]
    epoch_id: 4, batch_id: 1000, loss is: [3.1189494]
    epoch_id: 4, batch_id: 2000, loss is: [3.672319]
    epoch_id: 4, batch_id: 3000, loss is: [4.128253]
    epoch_id: 5, batch_id: 0, loss is: [7.7472067]
    epoch_id: 5, batch_id: 1000, loss is: [2.6192496]
    epoch_id: 5, batch_id: 2000, loss is: [3.7988458]
    epoch_id: 5, batch_id: 3000, loss is: [2.1571586]
    epoch_id: 6, batch_id: 0, loss is: [6.8091993]
    epoch_id: 6, batch_id: 1000, loss is: [3.2879863]
    epoch_id: 6, batch_id: 2000, loss is: [2.2202625]
    epoch_id: 6, batch_id: 3000, loss is: [4.0542073]
    epoch_id: 7, batch_id: 0, loss is: [2.4702597]
    epoch_id: 7, batch_id: 1000, loss is: [3.267303]
    epoch_id: 7, batch_id: 2000, loss is: [3.925469]
    epoch_id: 7, batch_id: 3000, loss is: [4.502317]
    epoch_id: 8, batch_id: 0, loss is: [1.6059736]
    epoch_id: 8, batch_id: 1000, loss is: [5.4941883]
    epoch_id: 8, batch_id: 2000, loss is: [1.0239292]
    epoch_id: 8, batch_id: 3000, loss is: [2.333592]
    epoch_id: 9, batch_id: 0, loss is: [2.7579784]
    epoch_id: 9, batch_id: 1000, loss is: [1.5081773]
    epoch_id: 9, batch_id: 2000, loss is: [4.925281]
    epoch_id: 9, batch_id: 3000, loss is: [3.8142138]
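To put these loss values in perspective, it helps to compare them with a trivial baseline. Assuming, as a simplification, that the ten digits are equally frequent (MNIST is only approximately balanced), a model that always outputs the mean label has the mean squared error computed below.

```python
# Baseline: always predict the mean label, assuming digits 0..9 are equally likely.
labels = list(range(10))
mean_label = sum(labels) / len(labels)
baseline_mse = sum((v - mean_label) ** 2 for v in labels) / len(labels)
print(mean_label, baseline_mse)  # 4.5 8.25
```

After the first batch, the logged losses mostly lie between 2 and 5. Under this assumption the linear regressor does better than a constant guess, but the log shows no clear downward trend over the ten epochs.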

4. Test model

(1) View test set results

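    # Assumption: the original does not show how test_loader was created.
    # Here it is built like train_loader, but on the test split.
    test_loader = paddle.io.DataLoader(paddle.vision.datasets.MNIST(mode='test'),
                                       batch_size=16,
                                       shuffle=True)

    for batch_id, data in enumerate(test_loader()):
        images = norm_img(data[0]).astype('float32')
        labels = data[1].astype('float32')

        predicts = model(images)
        loss = F.square_error_cost(predicts, labels)
        avg_loss = paddle.mean(loss)

        print(predicts)
        print(labels)
        print(loss)
        print(avg_loss)

        # Only look at the first batch
        break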

Output (predictions, labels, per-sample loss, mean loss):

    Tensor(shape=[16, 1], dtype=float32, place=CPUPlace, stop_gradient=False,
           [[2.06245565],
            [1.97789598],
            [5.32851791],
            [2.76517129],
            [4.77754116],
            [1.96410847],
            [1.70493352],
            [2.46705198],
            [7.93237495],
            [5.77034092],
            [4.87852144],
            [0.48723245],
            [4.39118719],
            [1.38979697],
            [1.77543545],
            [1.47215056]])
    Tensor(shape=[16, 1], dtype=float32, place=CPUPlace, stop_gradient=True,
           [[0.],
            [1.],
            [5.],
            [5.],
            [6.],
            [1.],
            [1.],
            [1.],
            [7.],
            [4.],
            [2.],
            [0.],
            [1.],
            [1.],
            [0.],
            [1.]])
    Tensor(shape=[16, 1], dtype=float32, place=CPUPlace, stop_gradient=False,
           [[4.25372314],
            [0.95628053],
            [0.10792402],
            [4.99445915],
            [1.49440563],
            [0.92950511],
            [0.49693128],
            [2.15224147],
            [0.86932307],
            [3.13410687],
            [8.28588581],
            [0.23739545],
            [11.50015068],
            [0.15194169],
            [3.15217113],
            [0.22292615]])
    Tensor(shape=[1], dtype=float32, place=CPUPlace, stop_gradient=False,
           [2.68371058])
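The four printed tensors are related in a simple way: the third is the element-wise squared difference of the first two, and the fourth is its mean. A short check in plain Python, with the values copied from the output above, confirms this.

```python
# Values copied from the printed predicts and labels tensors.
predicts = [2.06245565, 1.97789598, 5.32851791, 2.76517129,
            4.77754116, 1.96410847, 1.70493352, 2.46705198,
            7.93237495, 5.77034092, 4.87852144, 0.48723245,
            4.39118719, 1.38979697, 1.77543545, 1.47215056]
labels = [0, 1, 5, 5, 6, 1, 1, 1, 7, 4, 2, 0, 1, 1, 0, 1]

# Per-sample squared error, then the batch mean
loss = [(p - t) ** 2 for p, t in zip(predicts, labels)]
avg_loss = sum(loss) / len(loss)

print([round(v, 4) for v in loss[:3]])
print(round(avg_loss, 4))
```

The recomputed values agree with the printed loss tensor and with the mean of 2.68371058 up to float32 rounding.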

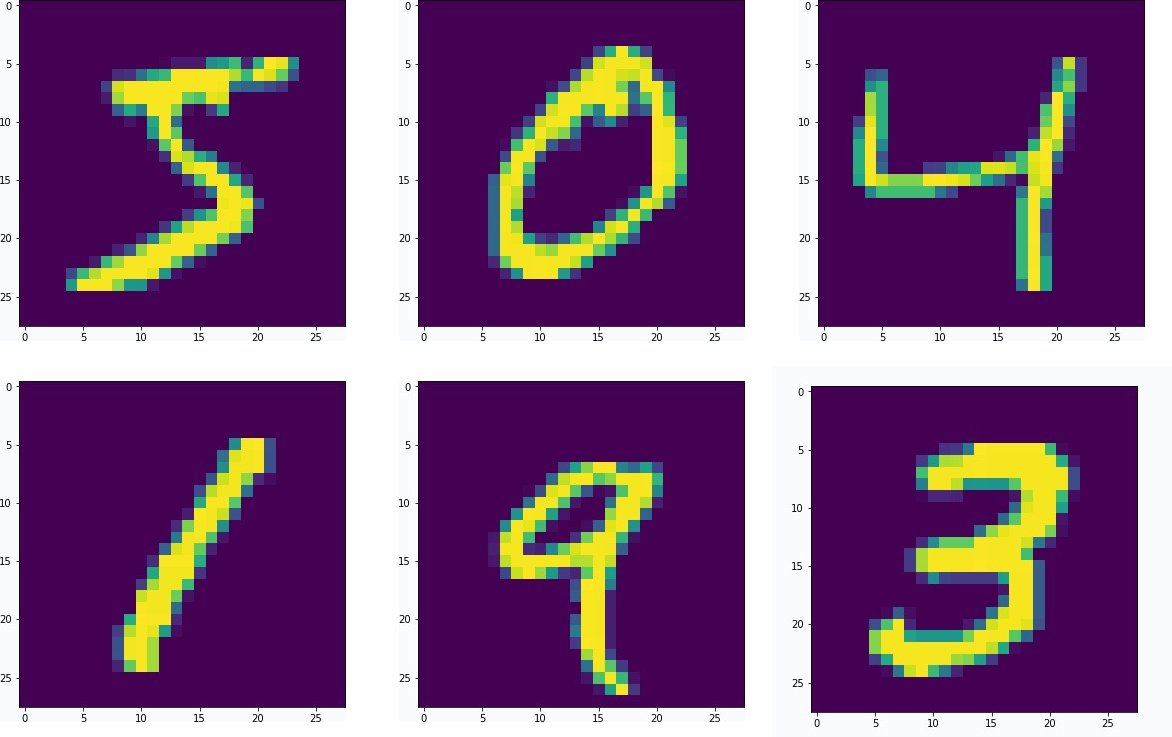

(2) Test prediction results

    def showimg(img):
        # img is a batch of 16 flattened images, shape [16, 784]
        imgdata = img.numpy()
        print(imgdata.shape)

        # Restore each row to a 28x28 image
        imgblock = [i.reshape([28,28]) for i in imgdata]
        # Arrange the 16 images in two rows of 8
        imgb1 = concatenate(imgblock[:8], axis=1)
        imgb2 = concatenate(imgblock[8:], axis=1)
        imgb = concatenate((imgb1, imgb2))

        plt.figure(figsize=(10,10))
        plt.imshow(imgb)
        plt.axis('off')
        plt.show()

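Prediction results:

    for batch_id, data in enumerate(test_loader()):
        # Take the images and labels of the current batch
        images = norm_img(data[0]).astype('float32')
        labels = data[1].astype('float32')

        showimg(images)
        predicts = model(images)

        print(labels.numpy().flatten().T)
        print([p for p in predicts.numpy().flatten().T])

        # Only look at the first batch
        break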

| Label | 3. | 9. | 4. | 3. | 0. | 7. | 0. | 9. | 9. | 5. | 6. | 7. | 1. | 7. | 0. | 0. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Prediction | 3.3602648 | 8.111923 | 5.3560495 | 5.2887278 | 4.218868 | 5.3987856 | 2.5647051 | 8.387244 | 8.198596 | 3.977576 | 3.7429187 | 7.7407055 | 6.2851562 | 4.435977 | 2.9352028 | 3.7802896 |
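One way to read these regression outputs as digits is to round each value to the nearest integer and clip it to the range 0..9. This rule is an assumption made here for illustration, and the helper `to_digit` is hypothetical. Applied to the values in the table, it gives the following.

```python
# Labels and predictions copied from the table above.
labels = [3, 9, 4, 3, 0, 7, 0, 9, 9, 5, 6, 7, 1, 7, 0, 0]
predicts = [3.3602648, 8.111923, 5.3560495, 5.2887278,
            4.218868, 5.3987856, 2.5647051, 8.387244,
            8.198596, 3.977576, 3.7429187, 7.7407055,
            6.2851562, 4.435977, 2.9352028, 3.7802896]

def to_digit(value):
    """Round a regression output to the nearest integer and clip it to 0..9."""
    return min(9, max(0, round(value)))

digits = [to_digit(p) for p in predicts]
correct = sum(d == t for d, t in zip(digits, labels))
print(digits)
print(f"{correct} of {len(labels)} correct")
```

On this batch only the first image is recognized correctly. Many predictions are off by one or more, which suggests that treating the digit as a single regression target is a poor fit for what is really a ten-class classification problem.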

5. Online reference program

    import paddle
    from paddle.nn import Linear
    import paddle.nn.functional as F
    import os
    import numpy as np
    import matplotlib.pyplot as plt

    # Load the training set and take its first image and label
    train_dataset = paddle.vision.datasets.MNIST(mode='train')
    train_data0 = np.array(train_dataset[0][0])
    train_label_0 = np.array(train_dataset[0][1])

    plt.figure("Image", figsize=(2,2))  # "Image" is the window name
    plt.imshow(train_data0, cmap=plt.cm.binary)
    plt.axis('on')  # Use 'off' to hide the coordinate axes
    plt.title('image')  # Image title
    plt.show()

    print("The image data shape is:", train_data0.shape)
    print("The image label shape and value are:", train_label_0.shape, train_label_0)
    print("\nThe label of the first image in the training set is {}".format(train_label_0))

    class MNIST(paddle.nn.Layer):
        def __init__(self):
            super(MNIST, self).__init__()
            # Define a fully connected layer with an output dimension of 1
            self.fc = paddle.nn.Linear(in_features=784, out_features=1)

        # Define the forward computation of the network
        def forward(self, inputs):
            outputs = self.fc(inputs)
            return outputs

    def norm_img(img):
        # Check the input format: the shape of img must be [batch_size, 28, 28]
        assert len(img.shape) == 3
        batch_size, img_h, img_w = img.shape[0], img.shape[1], img.shape[2]
        # Normalize the image data to [0, 1]
        img = img / 255
        # Reshape the images to [batch_size, 784]
        img = paddle.reshape(img, [batch_size, img_h*img_w])
        return img

    # Use the cv2 backend so the dataset returns arrays instead of PIL images
    paddle.vision.set_image_backend('cv2')

    model = MNIST()

    def train(model):
        # Switch to training mode
        model.train()
        # Load the training set with batch_size set to 16
        train_loader = paddle.io.DataLoader(paddle.vision.datasets.MNIST(mode='train'),
                                            batch_size=16,
                                            shuffle=True)
        # Use the stochastic gradient descent (SGD) optimizer with a learning rate of 0.001
        opt = paddle.optimizer.SGD(learning_rate=0.001, parameters=model.parameters())
        EPOCH_NUM = 10
        for epoch in range(EPOCH_NUM):
            for batch_id, data in enumerate(train_loader()):
                images = norm_img(data[0]).astype('float32')
                labels = data[1].astype('float32')

                # Forward computation
                predicts = model(images)

                # Compute the loss
                loss = F.square_error_cost(predicts, labels)
                avg_loss = paddle.mean(loss)

                # Print the current loss every 1000 batches
                if batch_id % 1000 == 0:
                    print("epoch_id: {}, batch_id: {}, loss is: {}".format(epoch, batch_id, avg_loss.numpy()))

                # Backpropagation and parameter update
                avg_loss.backward()
                opt.step()
                opt.clear_grad()

    train(model)
    paddle.save(model.state_dict(), './mnist.pdparams')

    import matplotlib.pyplot as plt
    import numpy as np
    from PIL import Image

    # Open and show the sample picture
    img_path = './work/download.png'
    im = Image.open(img_path)
    plt.imshow(im)
    plt.show()

    # Convert to a grayscale image
    im = im.convert('L')
    print('original image shape: ', np.array(im).shape)

    # Resample to 28x28 (Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter)
    im = im.resize((28, 28), Image.LANCZOS)
    plt.imshow(im)
    plt.show()
    print("Resampled image shape: ", np.array(im).shape)

    def load_image(img_path):
        # Read the image from img_path and convert it to grayscale
        im = Image.open(img_path).convert('L')
        # print(np.array(im))
        # Resample to 28x28 (Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter)
        im = im.resize((28, 28), Image.LANCZOS)
        # Flatten to shape [1, 784]
        im = np.array(im).reshape(1, -1).astype(np.float32)
        # Normalize and invert so the value range matches that of the dataset
        im = 1 - im / 255
        return im

    model = MNIST()
    params_file_path = 'mnist.pdparams'
    img_path = './work/download.png'

    # Load the saved parameters into the model
    param_dict = paddle.load(params_file_path)
    model.load_dict(param_dict)

    # Switch to evaluation mode and predict
    model.eval()
    tensor_img = load_image(img_path)
    result = model(paddle.to_tensor(tensor_img))
    print('result',result)

    # astype('int32') truncates the regression output toward zero
    print("The predicted digit is", result.numpy().astype('int32'))
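Note that load_image computes 1 - im / 255 rather than im / 255. A likely reason, stated here as an assumption since the code comment only says the range should match the dataset, is that MNIST stores digits as bright strokes on a dark background, whereas a picture such as download.png typically shows a dark digit on a light background. The toy example below shows the effect of the inversion; the helper name `to_mnist_range` is made up for this sketch.

```python
import numpy as np

def to_mnist_range(gray):
    """Map an 8-bit grayscale image to inverted values in [0, 1]."""
    return 1.0 - gray.astype(np.float32) / 255.0

# Toy 2x2 "image": white background (255) with one black stroke pixel (0).
gray = np.array([[255, 255],
                 [0, 255]], dtype=np.uint8)
inverted = to_mnist_range(gray)
print(inverted)
```

After the inversion the stroke pixel has the value 1 and the background has the value 0, the same polarity as the normalized training images.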

※ Summary ※

By testing a minimalist Paddle program for MNIST recognition, that is, a very simple linear regression network, I became preliminarily familiar with how networks are built in Paddle. An example of converting tensors between numpy and Paddle is also given.

1, Data conversion

1. From numpy to tensor

paddle.to_tensor()

2. From tensor to numpy

data.numpy()

■ Related links:

- Where can I find the final version of the sample program? AI Studio-MNIST

- AI Studio handwritten numeral recognition case
