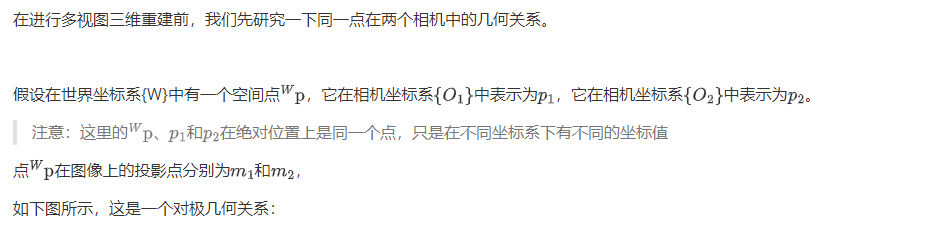

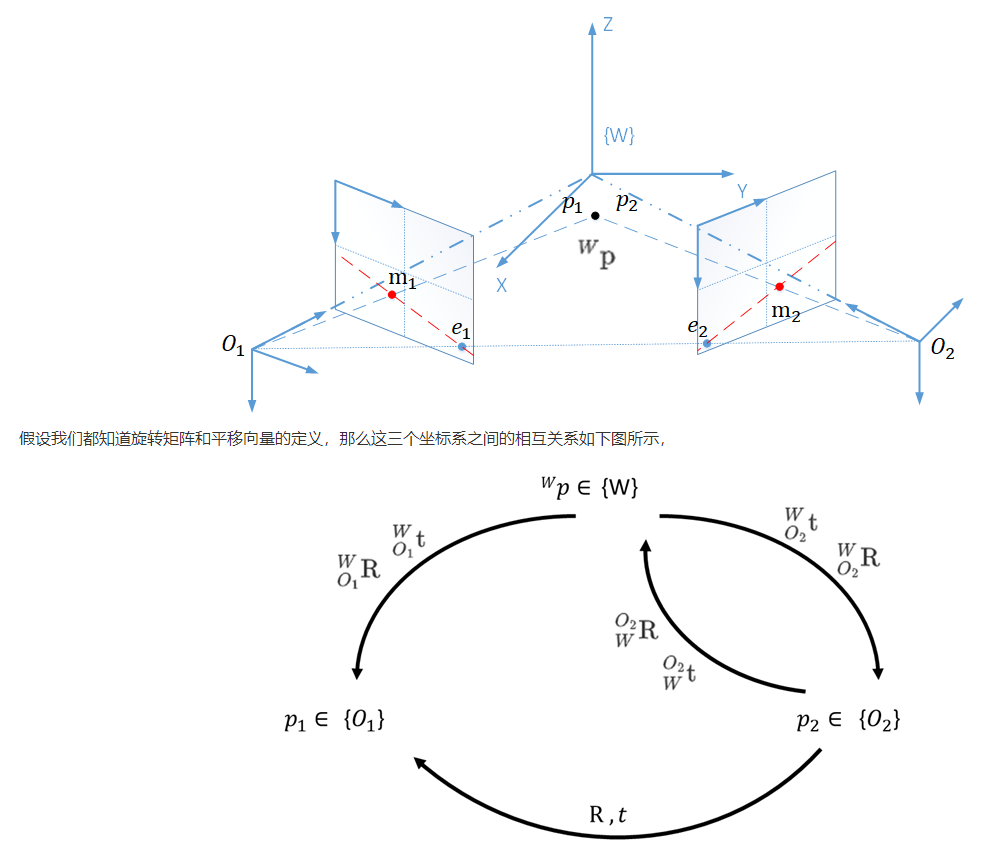

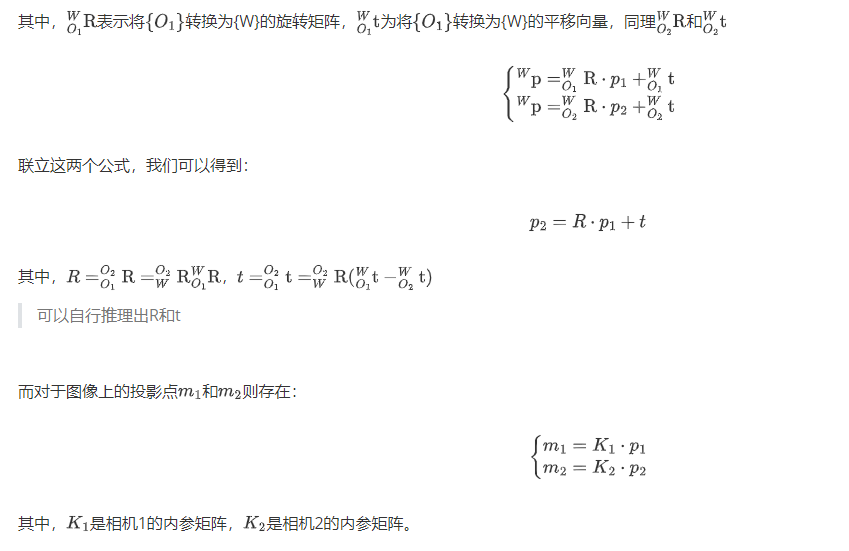

Basic principle of 3D reconstruction of multi view geometry:

Observing the same scene from two or more viewpoints has obtained multiple perceptual images of the scene from multiple different perspectives. The position deviation between image pixels is calculated by using the basic principle of triangulation to obtain the three-dimensional depth information of the scene. This process is the same as that of human observing the outer world.

SfM:

The full name of SfM is Structure from Motion, which determines the spatial and geometric relationship of the target through the movement of the camera. It is a common method of three-dimensional reconstruction. It only needs ordinary RGB camera, so it is cheaper and less constrained by the environment. It can be used indoors and outdoors. However, SfM needs the support of complex theories and algorithms, and the accuracy and speed need to be improved, so there are not many mature commercial applications at present.

This paper will implement a simple incremental SfM system.

Book: multi view geometry in computer vision: original book 2nd Edition / (Australia) Richard \ cdot Hartley Beijing: Machinery Industry Press, August 2019

Code reference: Implementation of SfM with OpenCV (II): multi eye 3D reconstruction_ arag2009 learning column - CSDN blog

Data set source: MVS Data Set – 2014 | DTU Robot Image Data Sets

Epipolar geometry & fundamental matrix

Feature point extraction and matching

From the above analysis, it can be seen that the relative relationship between the two cameras requires the corresponding points in the two images, which becomes the problem of feature point extraction and matching.

For the case of large image differences, SIFT feature is recommended, because sift has good robustness to rotation, scale and perspective. (if there is little difference, other faster features can be considered, such as SURF, ORB, etc.), Then knn nearest neighbor algorithm is used for matching, and Euclidean distance is used for distance calculation.

Since OpenCV includes SIFT in the extension, the version downloaded on the official website does not have SIFT,

To do this, you need to GitHub - opencv/opencv_contrib: Repository for OpenCV's extra modules Download the expansion pack opencv_contrib and recompile OpenCV according to the instructions inside.

When compiling opencv, you should pass cmake -DOPENCV_EXTRA_MODULES_PATH will opencv_ When compiling the module of contrib, be sure to keep the versions consistent

opencv link: GitHub - opencv/opencv: Open Source Computer Vision Library

The key point is that there are often many mismatches in the matching results. In order to eliminate these errors, the Ratio Test method is used here, that is, the KNN algorithm is used to find the two features that best match the feature. If the ratio of the matching distance of the first feature to the matching distance of the second feature is less than a certain threshold, the matching is accepted, otherwise it is regarded as a mismatching.

void match_features(

cv::Mat & query,

cv::Mat & train,

std::vector<cv::DMatch>& matches,

std::vector<std::vector<cv::DMatch>>& knn_matches

)

{

cv::BFMatcher matcher(cv::NORM_L2);

matcher.knnMatch(query, train, knn_matches, 2);

//Obtain the minimum matching distance that meets the Ratio Test

float min_dist = FLT_MAX;

for (int r = 0; r < knn_matches.size(); ++r)

{

//Ratio Test

if (knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance)

continue;

float dist = knn_matches[r][0].distance;

if (dist < min_dist) min_dist = dist;

}

matches.clear();

for (size_t r = 0; r < knn_matches.size(); ++r)

{

//Exclude points that do not meet the Ratio Test and points with too large matching distance

if (

knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance ||

knn_matches[r][0].distance > 5 * cv::max(min_dist, 10.0f)

)

continue;

//Save match points

matches.push_back(knn_matches[r][0]);

}

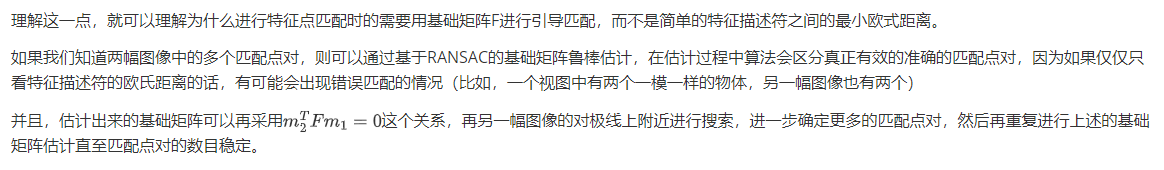

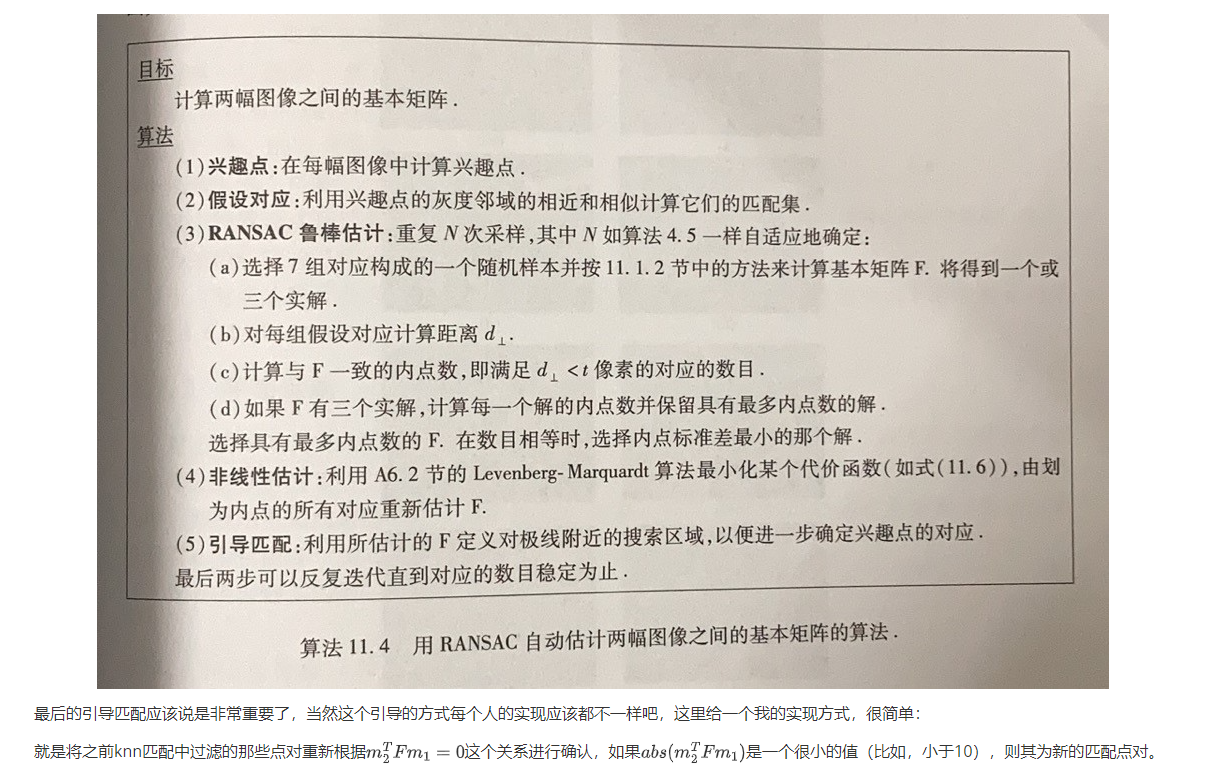

}After that, refer to Chapter 11 basic matrix estimation algorithm 11.4 of multi view geometry in computer vision

std::vector<std::vector<cv::DMatch>> knn_matches;

match_features(this->leftimage->descriptor, this->rightimage->descriptor, m_matchs,knn_matches);//Matching function above

auto& kp1 = this->leftimage->keypoints;

auto& kp2 = this->rightimage->keypoints;

cv::Mat m_Fundamental;

ransace_refine_matchs(kp1, kp2, m_matchs,m_Fundamental);//Here, the basic matrix estimation based on RANSAC is used to refine the matching point pairs

int last_num = 0;

int next_num = 0;

double T = 10;

cv::Mat x1, x2;

cv::Point2f p1, p2;

double d;

int index_kp2;

do {

last_num = m_matchs.size();

std::vector<int> label1(kp1.size(), -1);

std::vector<int> label2(kp2.size(), -1);

for (int i = 0; i < m_matchs.size(); i++) {

label1[m_matchs[i].queryIdx] = 1;

label2[m_matchs[i].trainIdx] = 1;

}

for (int i = 0; i < knn_matches.size(); i++) {

if (label1[i] < 0) {

index_kp2 = knn_matches[i][0].trainIdx;

if (label2[index_kp2] < 0) {

p1 = kp1[knn_matches[i][0].queryIdx].pt;

p2 = kp2[index_kp2].pt;

x1 = (cv::Mat_<double>(3, 1) << p1.x, p1.y, 1);

x2 = (cv::Mat_<double>(1, 3) << p2.x, p2.y, 1);

x1 = x2*m_Fundamental*x1;

d = abs(x1.at<double>(0, 0));

if (d < T) {

m_matchs.push_back(knn_matches[i][0]);

label1[i] = 1;

label2[index_kp2] = 1;

}

}

}

}

ransace_refine_matchs(kp1, kp2, m_matchs,m_Fundamental);//Here, the basic matrix estimation based on RANSAC is used to refine the matching point pairs

next_num = m_matchs.size();

std::cout <<"loop: "<< next_num - last_num << "\n";

} while (next_num - last_num > 0);//If the number of matching point pairs is less than the last time, the loop ends

The basic matrix estimation based on RANSAC adopts opencv's cv::findFundamentalMat and sets the parameter method=cv::FM_RANSAC

void ransace_refine_matchs(

std::vector<MyKeyPoint>& kp1, std::vector<MyKeyPoint>& kp2,

std::vector<cv::DMatch>& matchs,

cv::Mat& m_Fundamental)

{

std::vector<cv::Point2f> _p1, _p2;

for (int i = 0; i < m_matchs.size(); i++) {

_p1.push_back(kp1[m_matchs[i].queryIdx].pt);

_p2.push_back(kp2[m_matchs[i].trainIdx].pt);

}

std::vector<uchar> m_RANSACStatus;

m_Fundamental = cv::findFundamentalMat(_p1, _p2, m_RANSACStatus, cv::FM_RANSAC);//

std::vector<cv::DMatch> matchs_copy = matchs;

matchs.clear();

for (int i = 0; i < matchs_copy.size(); ++i) {

if (m_RANSACStatus[i] != 0) matchs.push_back(matchs_copy[i]);

}

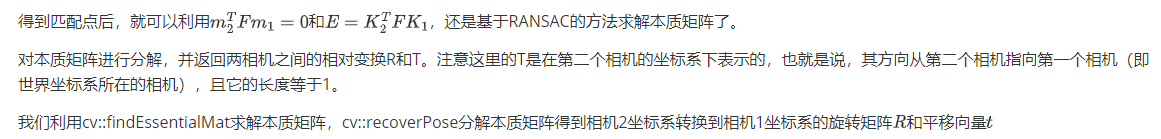

}3D reconstruction of two views

bool find_transform(cv::Mat & K, std::vector<MyKeyPoint>& p1, std::vector<MyKeyPoint>& p2, cv::Mat & R, cv::Mat & T, cv::Mat & mask)

{

//Obtain the focal length and optical center coordinates (principal point coordinates) of the camera according to the internal parameter matrix

double focal_length = 0.5*(K.at<double>(0) + K.at<double>(4));

cv::Point2d principle_point(K.at<double>(2), K.at<double>(5));

std::vector<cv::Point2f> _p1, _p2;

for (int i = 0; i < p1.size(); i++) {

_p1.push_back(p1[i].pt);

_p2.push_back(p2[i].pt);

}

//Obtain the eigenmatrix according to the matching points, and use RANSAC to further eliminate the mismatch points

cv::Mat E = cv::findEssentialMat(_p1, _p2, focal_length, principle_point, cv::RANSAC, 0.999, 1.0, mask);

if (E.empty()) return false;

double feasible_count = cv::countNonZero(mask);

std::cout << (int)feasible_count << " -in- " << p1.size() << std::endl;

//For RANSAC, when the number of outlier s is greater than 50%, the result is unreliable

if (feasible_count <= 15 || (feasible_count / p1.size()) < 0.6)

return false;

//Decompose the eigenmatrix to obtain the relative transformation

int pass_count = cv::recoverPose(E, _p1, _p2, R, T, focal_length, principle_point, mask);

//The number of points in front of both cameras should be large enough

if (((double)pass_count) / feasible_count < 0.7)

return false;

return true;

}

reference resources: https://blog.csdn.net/weixin_42587961/article/details/97241162#Ax0V_556

Here we use opencv's cv::triangulatePoints for triangulation

void reconstruct(cv::Mat & K, cv::Mat & R1, cv::Mat & T1, cv::Mat & R2, cv::Mat & T2,

std::vector<MyKeyPoint>& p1, std::vector<MyKeyPoint>& p2, std::vector<MyPoint3f>& structure)

{

std::vector<cv::Point2f> _p1, _p2;

for (int i = 0; i < p1.size(); i++) {

_p1.push_back(p1[i].pt);

_p2.push_back(p2[i].pt);

}

//Projection matrix of two cameras [R, t], triangular points only supports float type

cv::Mat proj1(3, 4, CV_32FC1);

cv::Mat proj2(3, 4, CV_32FC1);

R1.convertTo(proj1(cv::Range(0, 3), cv::Range(0, 3)), CV_32FC1);

T1.convertTo(proj1.col(3), CV_32FC1);

R2.convertTo(proj2(cv::Range(0, 3), cv::Range(0, 3)), CV_32FC1);

T2.convertTo(proj2.col(3), CV_32FC1);

cv::Mat fK;

K.convertTo(fK, CV_32FC1);

proj1 = fK * proj1;

proj2 = fK * proj2;

//Triangular reconstruction

cv::Mat s;

cv::triangulatePoints(proj1, proj2, _p1, _p2, s);

structure.clear();

structure.reserve(s.cols);

for (int i = 0; i < s.cols; ++i)

{

cv::Mat_<float> col = s.col(i);

col /= col(3); //Homogeneous coordinates need to be divided by the last element to be the real coordinate value

structure.push_back(MyPoint3f(cv::Point3f(col(0), col(1), col(2)), p1[i].color));

}

}

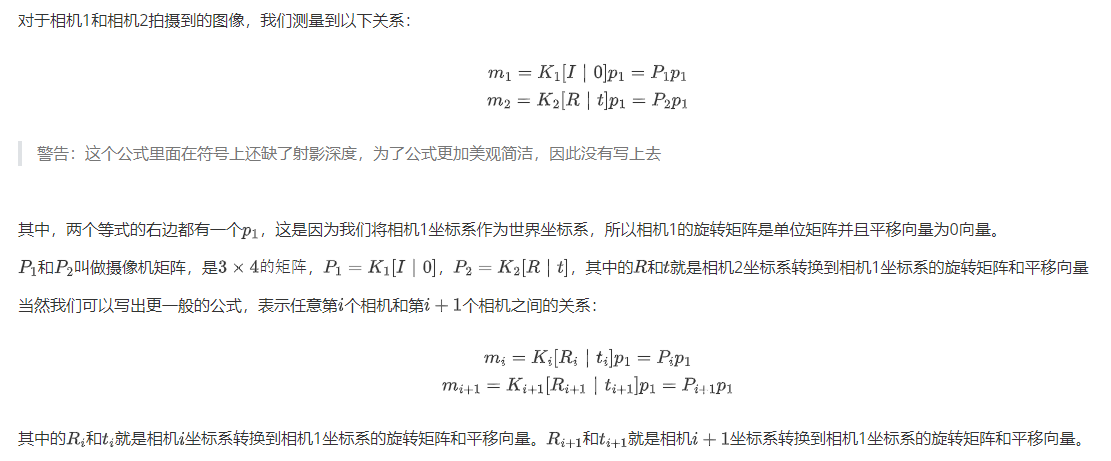

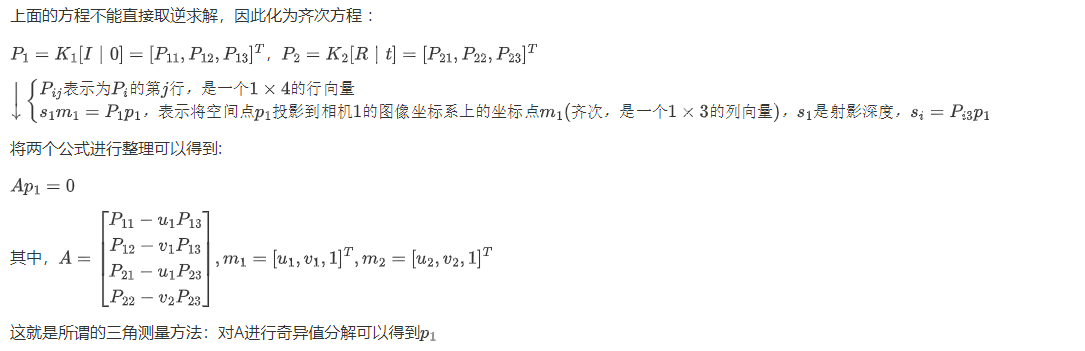

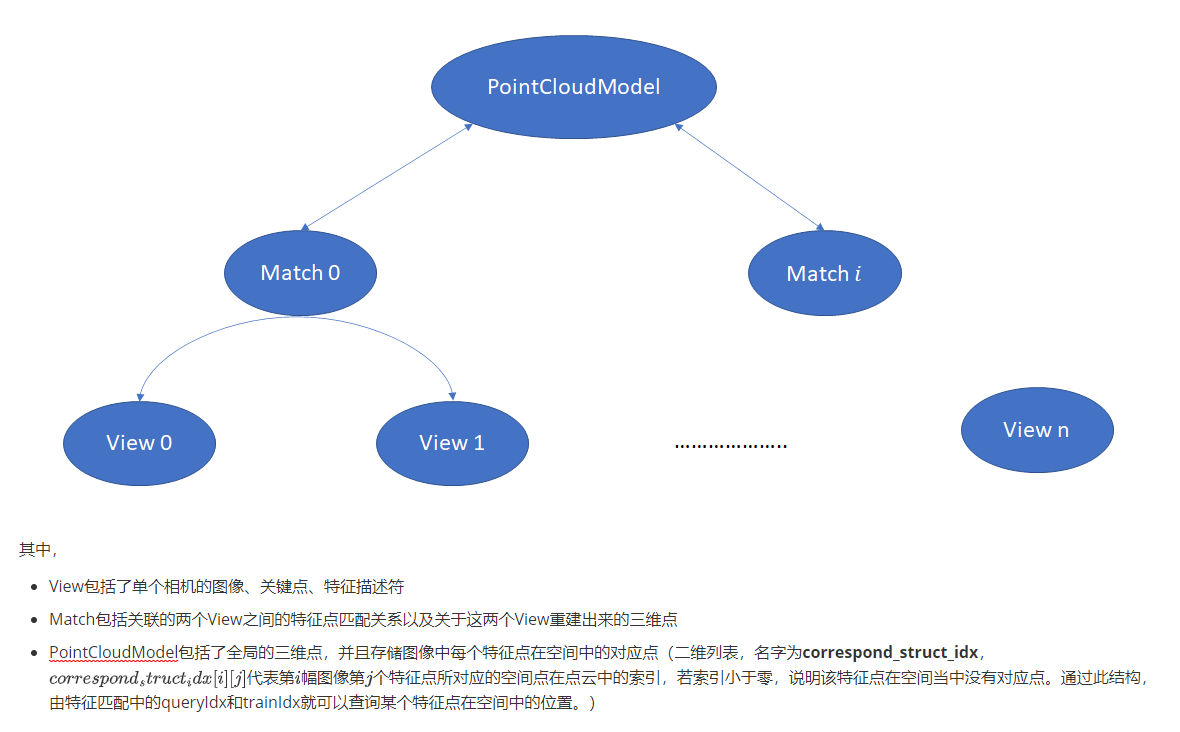

3D reconstruction of multi view (incremental SfM)

We know that the transformation matrix (rotation matrix and translation vector) between two cameras can be realized by estimating the essential matrix and decomposing the essential matrix.

So let the coordinate system of camera 1 be the world coordinate system. Now add the third view. How to determine the transformation matrix from the third camera (hereinafter referred to as camera 3) to the coordinate system of camera 1?

The simplest idea is to use the method of binocular reconstruction, that is, to extract feature points between the third image and the first image for matching, and then triangulation reconstruction.

What about adding the fourth, fifth and even more? With the increase of the number of images, the difference between the newly added image and the first image may become larger and larger, and the extraction of feature points becomes extremely difficult. At this time, the method of binocular reconstruction can no longer be used.

Now we use the PnP method, which solves the position and attitude of the camera in space according to the corresponding relationship between the points in space and the points in the image. In other words, if I know some three-dimensional space points in the coordinate system of camera 1 and that these space points match the two-dimensional points of the image of camera 3, PnP can solve the transformation matrix (rotation matrix and translation vector) between camera 3 and camera 1.

A general process of multi view 3D reconstruction:

-

Use the binocular reconstruction method to reconstruct the first two images, so as to obtain some points in space,

-

After adding the third image, make it match with the second image. Some of these matching points must also be the matching points between image 1 and image 2, that is, the spatial coordinates of some of these matching points are the spatial points under the known camera 1 coordinate system, and know the two-dimensional pixel coordinates of these points in the third image

-

All the information needed to solve PnP is available. Since the coordinates of space points are all in the camera 1 coordinate system, the camera position and attitude calculated by PnP are also in the camera 1 coordinate system, that is, the transformation matrix from camera 3 to camera 1.

This is also the reason for adding more images in the future, and the point cloud fusion should be carried out.

Complete code

GlobalVTKUtils.h

#pragma once

#ifndef GLOBALVTKUTILS_H

#define GLOBALVTKUTILS_H

#include<vector>

#include<array>

class GlobalVTKUtils {

public:

GlobalVTKUtils() {};

~GlobalVTKUtils() {};

static void ShowPoints(std::vector<std::array<float,3>> points,std::vector<std::array<float,3>> colors = std::vector<std::array<float,3>>());

};

#endif // !SHOWVTK_HGlobalVTKUtils.cpp

#include"GlobalVTKUtils.h"

#include <vtkActor.h>

#include <vtkRenderer.h>

#include <vtkRenderWindow.h>

#include <vtkRenderWindowInteractor.h>

#include <vtkProperty.h>

#include <vtkInteractorStyleTrackball.h>

#include <vtkInteractorStyleTrackballCamera.h>

#include <vtkPoints.h>

#include <vtkPolyData.h>

#include <vtkCellArray.h>

#include <vtkPolyDataMapper.h>

#include <vtkSmartPointer.h>

#include <vtkSphereSource.h>

#include <vtkXMLPolyDataWriter.h>

#include <vtkLookupTable.h>

#include <vtkPointData.h>

#include <vtkUnsignedCharArray.h>

#include "vtkFloatArray.h" / / floating point array class

#include "vtkAutoInit.h"

VTK_MODULE_INIT(vtkRenderingOpenGL2); // VTK was built with vtkRenderingOpenGL2

VTK_MODULE_INIT(vtkInteractionStyle);

VTK_MODULE_INIT(vtkRenderingFreeType);

void GlobalVTKUtils::ShowPoints(std::vector<std::array<float, 3>> points, std::vector<std::array<float, 3>> colors)

{

vtkSmartPointer<vtkRenderer> renderer = vtkSmartPointer< vtkRenderer>::New();

vtkSmartPointer<vtkRenderWindow> renderWindow = vtkSmartPointer<vtkRenderWindow>::New();

renderWindow->AddRenderer(renderer);

vtkSmartPointer<vtkRenderWindowInteractor> renderWindowInteractor = vtkSmartPointer<vtkRenderWindowInteractor>::New();

vtkSmartPointer<vtkInteractorStyleTrackballCamera> istyle = vtkSmartPointer<vtkInteractorStyleTrackballCamera>::New();

renderWindowInteractor->SetRenderWindow(renderWindow);

vtkSmartPointer<vtkInteractorStyleTrackballCamera> style = vtkSmartPointer<vtkInteractorStyleTrackballCamera>::New();

renderWindowInteractor->SetInteractorStyle(style);//Set interactionist style

std::vector<int> label(points.size(), -1);

double x1, y1, z1;

double x2, y2, z2;

double T = 0.1;

double value;

for (int i = 0; i < points.size(); i++) {

double minvalue = 1e10;

int min_index = -1;

for (int j = 0; j < points.size(); j++) {

if (j != i) {

x1 = points[i][0];y1 = points[i][1];z1 = points[i][2];

x2 = points[j][0];y2 = points[j][1];z2 = points[j][2];

value = std::sqrt(std::pow(x1 - x2, 2) + std::pow(y1 - y2, 2) + std::pow(z1 - z2, 2));

if (value < minvalue) {

minvalue = value;

min_index = j;

if (minvalue < T)break;

}

}

}

//std::cout << i << " " << minvalue << std::endl;

if (min_index != -1 && minvalue>T) {

label[i] = 1;

}

}

std::vector<std::array<float, 3>> copy = points;

std::vector<std::array<float, 3>> copy_c = colors;

points.clear();

colors.clear();

for (int i = 0; i < copy.size(); i++) {

if (label[i] < 0) {

points.push_back(copy[i]);

colors.push_back(copy_c[i]);

}

}

//MAD removing outliers

double mean_pt[3];

for (int i = 0; i < points.size(); i++) {

auto pt = points[i];

for (int j = 0; j < 3; j++) {

mean_pt[j] += pt[j];

}

}

for (int j = 0; j < 3; j++) {

mean_pt[j] /= points.size();

}

double mad = 0;

for (int i = 0; i < points.size(); i++) {

auto pt = points[i];

double x, y, z;

x = pt[0];y = pt[1];z = pt[2];

mad += std::sqrt(std::pow(x - mean_pt[0], 2) + std::pow(y - mean_pt[1], 2) + std::pow(z - mean_pt[2], 2));

}

mad/=points.size();

vtkIdType idtype;

double x, y, z;

vtkPoints *vpoints = vtkPoints::New();

vtkCellArray *vcells = vtkCellArray::New();

vtkSmartPointer<vtkUnsignedCharArray> ptColor = vtkSmartPointer<vtkUnsignedCharArray>::New();

ptColor->SetNumberOfComponents(3);

bool has_color = true;

if (colors.empty())has_color = false;

for (int i = 0; i < points.size(); i ++) {

auto pt = points[i];

x = pt[0];y = pt[1];z = pt[2];

//std::cout << pt[0] << " " << pt[1] << " " << pt[2] << "\n";

if (std::sqrt(std::pow(x - mean_pt[0], 2) + std::pow(y - mean_pt[1], 2) + std::pow(z - mean_pt[2], 2)) > 2*mad) {

continue;//Do not show outliers

}

idtype = vpoints->InsertNextPoint(x, y, z);

vcells->InsertNextCell(1, &idtype);

if (has_color) {

auto color = colors[i];

ptColor->InsertNextTuple3(color[2],color[1],color[0]);

}

}

// The rendering mechanism is unknown. It is necessary to set verts corresponding to point coordinates and point coordinates at the same time

// The id in verts must correspond to the point coordinate

vtkPolyData *polyData = vtkPolyData::New();

polyData->SetPoints(vpoints);

polyData->SetVerts(vcells);

if (has_color) {

polyData->GetPointData()->SetScalars(ptColor);

}

//The following is the normal visualization process. The color and size of the point cloud that can be set have been annotated

vtkPolyDataMapper *mapper = vtkPolyDataMapper::New();

mapper->SetInputData(polyData);

vtkActor *actor = vtkActor::New();

actor->SetMapper(mapper);

actor->GetProperty()->SetPointSize(4);

renderer->AddActor(actor);

renderer->SetBackground(0, 0, 0);//Set background color

renderWindow->Render();

renderWindow->SetSize(800, 800);//Set window size

renderWindowInteractor->Start();

vtkSmartPointer<vtkXMLPolyDataWriter> writer =

vtkSmartPointer<vtkXMLPolyDataWriter>::New();

writer->SetFileName("polyData.vtp");

writer->SetInputData(polyData);

writer->Write();

}

MultiViewStereoReconstructor.h

#pragma once

#ifndef MULTIVIEWSTEREORECONSTRUCTOR

#define MULTIVIEWSTEREORECONSTRUCTOR

#include<vector>

#include<opencv2/opencv.hpp>

#include<array>

#include<memory>

class MyKeyPoint :public cv::KeyPoint {

public:

MyKeyPoint() {};

MyKeyPoint(cv::Point2f _pt, std::vector<float> _color = std::vector<float>(),

float _size = 1, float _angle = -1, float _response = 0, int _octave = 0, int _class_id = -1)

: cv::KeyPoint(_pt,_size,_angle,_response,_octave,_class_id),color(_color){};

MyKeyPoint(cv::KeyPoint _keypoint) :cv::KeyPoint(_keypoint) {};

MyKeyPoint(cv::KeyPoint _keypoint,cv::Vec3b _color) :cv::KeyPoint(_keypoint) {

setColor(_color);

};

MyKeyPoint(cv::KeyPoint _keypoint,std::vector<float> _color) :cv::KeyPoint(_keypoint),color(_color) {};

void setColor(cv::Vec3b _color);

~MyKeyPoint() {};

bool operator==(MyKeyPoint &that);

public:

std::vector<float> color;

};

class MyPoint3f :public cv::Point3f {

public:

MyPoint3f() {};

MyPoint3f(cv::Point3f _p) :cv::Point3f(_p) {};

MyPoint3f(cv::Point3f _p,cv::Vec3b _color) :cv::Point3f(_p) {

setColor(_color);

};

MyPoint3f(cv::Point3f _p,std::vector<float> _color) :cv::Point3f(_p),color(_color) {};

void setColor(cv::Vec3b _color);

public:

std::vector<float> color;

};

class UnitView {

public:

UnitView(cv::Mat& _image) {

image = _image.clone();

};

~UnitView() {};

bool extract_feature(cv::Ptr<cv::Feature2D> _executor);

public:

cv::Mat image;

cv::Mat descriptor;

std::vector<MyKeyPoint> keypoints;

cv::Mat rotation;//Rotation matrix

cv::Mat motion;

};

class PointCloudModel {

public:

PointCloudModel() {};

~PointCloudModel() {};

public:

std::vector<std::vector<int>> correspond_struct_idx;

std::vector<MyPoint3f> structure;

cv::Mat K;

cv::Mat distCoeffs;

};

class MyMatch {

public:

MyMatch(int _type=1): match_type(_type){};

~MyMatch() {};

bool SetUp();

bool Build();

bool init_structure();

void match_features(cv::Mat& query, cv::Mat& train,

std::vector<cv::DMatch>& matches,

std::vector<std::vector<cv::DMatch>>& knn_matches);

void ransace_refine_matchs(std::vector<MyKeyPoint>& kp1, std::vector <MyKeyPoint>& kp2,

std::vector<cv::DMatch>& matchs,cv::Mat& m_Fundamental);

bool find_transform(cv::Mat& K, std::vector<MyKeyPoint>& p1, std::vector<MyKeyPoint>& p2, cv::Mat& R, cv::Mat& T, cv::Mat& mask);

void maskout_points(std::vector<MyKeyPoint>& p1, cv::Mat& mask);

void reconstruct(

cv::Mat& K,

cv::Mat& R1, cv::Mat& T1,

cv::Mat& R2, cv::Mat& T2,

std::vector<MyKeyPoint>& p1, std::vector<MyKeyPoint>& p2,

std::vector<MyPoint3f>& structure);

void get_objpoints_and_imgpoints(

std::vector<cv::DMatch>& matches,

std::vector<int>& last_struct_indices,

std::vector<MyPoint3f>& global_structure,

std::vector<MyKeyPoint>& key_points,

std::vector<cv::Point3f>& object_points,

std::vector<cv::Point2f>& image_points);

void fusion_structure(

std::vector<cv::DMatch>& matches,

std::vector<int>& last_struct_indices,

std::vector<int>& next_struct_indices,

std::vector<MyPoint3f>& global_structure,

std::vector<MyPoint3f>& local_structure);

cv::Mat get_disparity(cv::Mat& img1,cv::Mat& img2);

public:

std::shared_ptr<UnitView> leftimage;

std::shared_ptr<UnitView> rightimage;

std::shared_ptr<PointCloudModel> global_model;

int match_type = 1;//0 means that this match is the match between the first image and the second image, otherwise it is 1; The default value is 1;

private:

std::vector<MyPoint3f> m_structure;

std::vector<cv::DMatch> m_matchs;

std::vector<MyKeyPoint> m1;

std::vector<MyKeyPoint> m2;

};

class MultiViewStereoReconstructor {

public:

MultiViewStereoReconstructor() {};

~MultiViewStereoReconstructor() {};

void SetK(cv::Mat& _K) { K = _K.clone(); };

void SetDistCoeffs(cv::Mat _distCoeffs) { distCoeffs = _distCoeffs.clone(); };

void SetData(std::vector<cv::Mat>& _images);

bool Execute();

void Visualize();

public:

std::shared_ptr<PointCloudModel> global_model;

std::vector<std::shared_ptr<UnitView>> images;

std::vector<MyMatch> matchs;

cv::Mat K;

cv::Mat distCoeffs;

};

#endif // !MULTIVIEWSTEREORECONSTRUCTOR

MultiViewStereoReconstructor.cpp

#pragma once

#include "MultiViewStereoReconstructor.h"

#include <opencv2/xfeatures2d/nonfree.hpp>

#include "GlobalVTKUtils.h"

#include <omp.h>

#include <thread>

void MyKeyPoint::setColor(cv::Vec3b _color)

{

this->color.resize(3);

for (int i = 0; i < 3; i++)this->color[i] = _color[i];

}

bool MyKeyPoint::operator==(MyKeyPoint & that)

{

return this->pt == that.pt;

}

void MyPoint3f::setColor(cv::Vec3b _color)

{

this->color.resize(3);

for (int i = 0; i < 3; i++)this->color[i] = _color[i];

}

bool UnitView::extract_feature(cv::Ptr<cv::Feature2D> _executor)

{

std::vector<cv::KeyPoint> _keypoints;

_executor->detectAndCompute(this->image, cv::noArray(), _keypoints, this->descriptor);

if (_keypoints.size() <= 10) return false;

for (auto& key : _keypoints) {

this->keypoints.push_back(MyKeyPoint(key, this->image.at<cv::Vec3b>(key.pt.y, key.pt.x)));

}

}

bool MyMatch::SetUp()

{

//std::cout << "MyMatch::SetUp\n";

std::vector<std::vector<cv::DMatch>> knn_matches;

match_features(this->leftimage->descriptor, this->rightimage->descriptor, m_matchs,knn_matches);

auto& kp1 = this->leftimage->keypoints;

auto& kp2 = this->rightimage->keypoints;

cv::Mat m_Fundamental;

ransace_refine_matchs(kp1, kp2, m_matchs,m_Fundamental);

#if 1

int last_num = 0;

int next_num = 0;

double T = 10;

cv::Mat x1, x2;

cv::Point2f p1, p2;

double d;

int index_kp2;

do {

last_num = m_matchs.size();

std::vector<int> label1(kp1.size(), -1);

std::vector<int> label2(kp2.size(), -1);

for (int i = 0; i < m_matchs.size(); i++) {

label1[m_matchs[i].queryIdx] = 1;

label2[m_matchs[i].trainIdx] = 1;

}

for (int i = 0; i < knn_matches.size(); i++) {

if (label1[i] < 0) {

index_kp2 = knn_matches[i][0].trainIdx;

if (label2[index_kp2] < 0) {

p1 = kp1[knn_matches[i][0].queryIdx].pt;

p2 = kp2[index_kp2].pt;

x1 = (cv::Mat_<double>(3, 1) << p1.x, p1.y, 1);

x2 = (cv::Mat_<double>(1, 3) << p2.x, p2.y, 1);

x1 = x2*m_Fundamental*x1;

d = abs(x1.at<double>(0, 0));

if (d < T) {

m_matchs.push_back(knn_matches[i][0]);

label1[i] = 1;

label2[index_kp2] = 1;

}

}

}

}

ransace_refine_matchs(kp1, kp2, m_matchs,m_Fundamental);

next_num = m_matchs.size();

std::cout <<"loop: "<< next_num - last_num << "\n";

} while (next_num - last_num > 0);

#endif

for (int i = 0; i < m_matchs.size(); i++) {

m1.push_back(kp1[m_matchs[i].queryIdx]);

m2.push_back(kp2[m_matchs[i].trainIdx]);

}

//std::cout << "leftimage->image.size(): " << this->leftimage->image.size() << "\n";

//std::cout << "leftimage->descriptor.size(): " << this->leftimage->descriptor.size() << "\n";

//std::cout << "rightimage->descriptor.size(): " << this->rightimage->descriptor.size() << "\n";

//std::cout << "leftimage->keypoints.size(): " << this->leftimage->keypoints.size() << "\n";

//std::cout << "rightimage->keypoints.size(): " << this->rightimage->keypoints.size() << "\n";

//std::cout << "m1.size(): " << m1.size() << "\n";

//std::cout << "m2.size(): " << m2.size() << "\n";

#if 0

int gap = 10;

std::vector<MyKeyPoint> &key1 = kp1;

std::vector<MyKeyPoint> &key2 = kp2;

std::vector<cv::KeyPoint> tkey1;

std::vector<cv::KeyPoint> tkey2;

std::vector<cv::DMatch> tMatches;

int InlinerCount = 0;

for (int i = 0; i < m_matchs.size(); i += gap) {

tkey1.push_back(key1[m_matchs[i].queryIdx]);

tkey2.push_back(key2[m_matchs[i].trainIdx]);

cv::DMatch match;

match.queryIdx = InlinerCount;

match.trainIdx = InlinerCount;

InlinerCount++;

tMatches.push_back(match);

}

cv::Mat img_matches;

cv::drawMatches(

this->leftimage->image, tkey1, this->rightimage->image, tkey2,

tMatches, img_matches, cv::Scalar::all(-1), cv::Scalar::all(-1),

std::vector<char>(), cv::DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

cv::imwrite("FASTResult.jpg", img_matches);

cv::imshow("Match0", img_matches);

cv::waitKey(0);

#endif

//std::cout << "done MyMatch::SetUp\n";

return true;

}

void MyMatch::match_features(

cv::Mat & query,

cv::Mat & train,

std::vector<cv::DMatch>& matches,

std::vector<std::vector<cv::DMatch>>& knn_matches

)

{

//std::vector<std::vector<cv::DMatch>> knn_matches;

cv::BFMatcher matcher(cv::NORM_L2);

matcher.knnMatch(query, train, knn_matches, 2);

//std::cout << knn_matches.size() << "\n";

//std::cout <<"knn_matches: "<< knn_matches.size() << "\n";

//for (int i = 0; i < knn_matches.size(); i++) {

// std::cout << i << " " << knn_matches[i][0].queryIdx << " " << knn_matches[i][0].trainIdx<<" "<<knn_matches[i][0].distance << "\n";

// std::cout << i << " " << knn_matches[i][1].queryIdx << " " << knn_matches[i][1].trainIdx<<" "<<knn_matches[i][1].distance << "\n";

// std::cout << "\n\n";

//}

//Obtain the minimum matching distance that meets the Ratio Test

float min_dist = FLT_MAX;

for (int r = 0; r < knn_matches.size(); ++r)

{

//Ratio Test

if (knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance)

continue;

float dist = knn_matches[r][0].distance;

if (dist < min_dist) min_dist = dist;

}

matches.clear();

for (size_t r = 0; r < knn_matches.size(); ++r)

{

//The distance between test and exclusion points is too large

if (

knn_matches[r][0].distance > 0.6*knn_matches[r][1].distance ||

knn_matches[r][0].distance > 5 * cv::max(min_dist, 10.0f)

)

continue;

//Save match points

matches.push_back(knn_matches[r][0]);

}

}

void MyMatch::ransace_refine_matchs(

std::vector<MyKeyPoint>& kp1, std::vector<MyKeyPoint>& kp2,

std::vector<cv::DMatch>& matchs,

cv::Mat& m_Fundamental)

{

//auto& kp1 = this->leftimage->keypoints;

//auto& kp2 = this->rightimage->keypoints;

std::vector<cv::Point2f> _p1, _p2;

for (int i = 0; i < m_matchs.size(); i++) {

_p1.push_back(kp1[m_matchs[i].queryIdx].pt);

_p2.push_back(kp2[m_matchs[i].trainIdx].pt);

}

//cv::Mat m_Fundamental;

std::vector<uchar> m_RANSACStatus;

m_Fundamental = cv::findFundamentalMat(_p1, _p2, m_RANSACStatus, cv::FM_RANSAC);//

std::vector<cv::DMatch> matchs_copy = matchs;

matchs.clear();

for (int i = 0; i < matchs_copy.size(); ++i) {

if (m_RANSACStatus[i] != 0) matchs.push_back(matchs_copy[i]);

}

}

bool MyMatch::find_transform(cv::Mat & K, std::vector<MyKeyPoint>& p1, std::vector<MyKeyPoint>& p2, cv::Mat & R, cv::Mat & T, cv::Mat & mask)

{

//Obtain the focal length and optical center coordinates (principal point coordinates) of the camera according to the internal parameter matrix

double focal_length = 0.5*(K.at<double>(0) + K.at<double>(4));

cv::Point2d principle_point(K.at<double>(2), K.at<double>(5));

std::vector<cv::Point2f> _p1, _p2;

for (int i = 0; i < p1.size(); i++) {

_p1.push_back(p1[i].pt);

_p2.push_back(p2[i].pt);

}

//Obtain the eigenmatrix according to the matching points, and use RANSAC to further eliminate the mismatch points

cv::Mat E = cv::findEssentialMat(_p1, _p2, focal_length, principle_point, cv::RANSAC, 0.999, 1.0, mask);

if (E.empty()) return false;

double feasible_count = cv::countNonZero(mask);

std::cout << (int)feasible_count << " -in- " << p1.size() << std::endl;

//For RANSAC, when the number of outlier s is greater than 50%, the result is unreliable

if (feasible_count <= 15 || (feasible_count / p1.size()) < 0.6)

return false;

//Decompose the eigenmatrix to obtain the relative transformation

int pass_count = cv::recoverPose(E, _p1, _p2, R, T, focal_length, principle_point, mask);

//The number of points in front of both cameras should be large enough

if (((double)pass_count) / feasible_count < 0.7)

return false;

return true;

}

void MyMatch::maskout_points(std::vector<MyKeyPoint>& p1, cv::Mat & mask)

{

std::vector<MyKeyPoint> p1_copy = p1;

p1.clear();

for (int i = 0; i < mask.rows; ++i) {

if (mask.at<uchar>(i) > 0) p1.push_back(p1_copy[i]);

}

}

void MyMatch::reconstruct(cv::Mat & K, cv::Mat & R1, cv::Mat & T1, cv::Mat & R2, cv::Mat & T2,

std::vector<MyKeyPoint>& p1, std::vector<MyKeyPoint>& p2, std::vector<MyPoint3f>& structure)

{

std::vector<cv::Point2f> _p1, _p2;

for (int i = 0; i < p1.size(); i++) {

_p1.push_back(p1[i].pt);

_p2.push_back(p2[i].pt);

}

//Projection matrix of two cameras [R, t], triangular points only supports float type

cv::Mat proj1(3, 4, CV_32FC1);

cv::Mat proj2(3, 4, CV_32FC1);

R1.convertTo(proj1(cv::Range(0, 3), cv::Range(0, 3)), CV_32FC1);

T1.convertTo(proj1.col(3), CV_32FC1);

R2.convertTo(proj2(cv::Range(0, 3), cv::Range(0, 3)), CV_32FC1);

T2.convertTo(proj2.col(3), CV_32FC1);

cv::Mat fK;

K.convertTo(fK, CV_32FC1);

proj1 = fK * proj1;

proj2 = fK * proj2;

//Triangular reconstruction

cv::Mat s;

cv::triangulatePoints(proj1, proj2, _p1, _p2, s);

structure.clear();

structure.reserve(s.cols);

for (int i = 0; i < s.cols; ++i)

{

cv::Mat_<float> col = s.col(i);

col /= col(3); //Homogeneous coordinates need to be divided by the last element to be the real coordinate value

structure.push_back(MyPoint3f(cv::Point3f(col(0), col(1), col(2)), p1[i].color));

}

}

bool MyMatch::init_structure()

{

cv::Mat & K = global_model->K;

std::cout << "MyMatch::init_structure\n";

cv::Mat R, T;//Rotation matrix and translation vector

cv::Mat mask;//Points greater than zero in the mask represent matching points, and points equal to zero represent mismatch points

find_transform(K, m1, m2, R, T, mask);

maskout_points(m1, mask);//Remove outliers

maskout_points(m2, mask);//Remove outliers

cv::Mat R0 = cv::Mat::eye(3, 3, CV_64FC1);

cv::Mat T0 = cv::Mat::zeros(3, 1, CV_64FC1);

reconstruct(K, R0, T0, R, T, m1, m2, m_structure);

this->leftimage->rotation = R0;

this->leftimage->motion = T0;

this->rightimage->rotation = R;

this->rightimage->motion = T;

auto& correspond_struct_idx = global_model->correspond_struct_idx;

correspond_struct_idx.clear();

correspond_struct_idx.resize(2);

correspond_struct_idx[0].resize(this->leftimage->keypoints.size(), -1);

correspond_struct_idx[1].resize(this->rightimage->keypoints.size(), -1);

//Fill in the structural index of the first two images

int idx = 0;

for (int i = 0; i < m_matchs.size(); ++i)

{

if (mask.at<uchar>(i) == 0)

continue;

correspond_struct_idx[0][m_matchs[i].queryIdx] = idx;

correspond_struct_idx[1][m_matchs[i].trainIdx] = idx;

++idx;

}

//m_correspond_struct_idx = correspond_struct_idx[1];

//Initialization, direct assignment

global_model->structure = m_structure;

return true;

}

void MyMatch::get_objpoints_and_imgpoints(

std::vector<cv::DMatch>& matches,

std::vector<int>& last_struct_indices,

std::vector<MyPoint3f>& global_structure,

std::vector<MyKeyPoint>& key_points,

std::vector<cv::Point3f>& object_points,

std::vector<cv::Point2f>& image_points)

{

object_points.clear();

image_points.clear();

for (int i = 0; i < matches.size(); ++i)

{

int query_idx = matches[i].queryIdx;

int train_idx = matches[i].trainIdx;

int struct_idx = last_struct_indices[query_idx];

if (struct_idx < 0) continue;

object_points.push_back(global_structure[struct_idx]);

image_points.push_back(key_points[train_idx].pt);

}

}

void MyMatch::fusion_structure(

std::vector<cv::DMatch>& matches,

std::vector<int>& last_struct_indices,

std::vector<int>& next_struct_indices,

std::vector<MyPoint3f>& global_structure,

std::vector<MyPoint3f>& local_structure)

{

for (int i = 0; i < matches.size(); ++i)

{

int query_idx = matches[i].queryIdx;

int train_idx = matches[i].trainIdx;

int struct_idx = last_struct_indices[query_idx];

if (struct_idx >= 0) //If the point already exists in the space, the space point corresponding to the pair of matching points should be the same, and the index should be the same

{

next_struct_indices[train_idx] = struct_idx;

continue;

}

//If the point already exists in space, the point is added to the structure, and the spatial point indexes of the pair of matching points are the indexes of the newly added points

global_structure.push_back(local_structure[i]);

last_struct_indices[query_idx] = next_struct_indices[train_idx] = global_structure.size() - 1;

}

}

cv::Mat MyMatch::get_disparity(cv::Mat & img1, cv::Mat & img2)

{

// Compute disparity

cv::Mat disparity;

cv::Ptr<cv::StereoMatcher> pStereo = cv::StereoSGBM::create(0, 16, 5);

pStereo->compute(img1, img2, disparity);

return disparity;

}

bool MyMatch::Build()

{

cv::Mat& K = global_model->K;

//std::cout << "MyMatch::Build\n";

if (this->match_type == 0) {

init_structure();

}

else {

//Incremental reconstruction of the remaining images

cv::Mat r, R, T;

std::vector<cv::Point3f> object_points;

std::vector<cv::Point2f> image_points;

auto& global_structure = global_model->structure;

auto& global_struct_idx = global_model->correspond_struct_idx;

auto& last_struct_idx = global_struct_idx[global_struct_idx.size() - 1];

//Obtain the three-dimensional points corresponding to the matching points in the i-th image and the corresponding pixel points in the i+1-th image

get_objpoints_and_imgpoints(

m_matchs,

last_struct_idx,

global_structure,

this->rightimage->keypoints,

object_points,

image_points

);

//Solving transformation matrix

cv::solvePnPRansac(object_points, image_points, K, cv::noArray(), r, T);

//Convert rotation vector to rotation matrix

cv::Rodrigues(r, R);

this->rightimage->rotation = R;

this->rightimage->motion = T;

reconstruct(

K, this->leftimage->rotation, this->leftimage->motion, R, T,

m1, m2, m_structure);

std::vector<int> next_struct_indices(this->rightimage->keypoints.size(), -1);

//Integrate the new reconstruction results with the previous ones

fusion_structure(

m_matchs,

last_struct_idx,

next_struct_indices,

global_structure,

m_structure);

global_struct_idx.push_back(next_struct_indices);

}

return true;

}

void MultiViewStereoReconstructor::SetData(std::vector<cv::Mat>& _images) {

for (auto& im : _images) {

std::shared_ptr<UnitView> im_ptr = std::shared_ptr<UnitView>(new UnitView(im));

//UnitView* im_ptr = new UnitView(im);

images.push_back(im_ptr);

}

cv::Ptr<cv::Feature2D> _executor = cv::xfeatures2d::SIFT::create(0, 3, 0.04, 10);

#pragma omp parallel for num_threads(2*omp_get_num_procs()-1)

for (int i = 0; i < images.size(); i++) {

printf("thread: %d\n", std::this_thread::get_id());

images[i]->extract_feature(_executor);

}

matchs.resize(images.size() - 1);

}

bool MultiViewStereoReconstructor::Execute() {

if (K.empty()) {

std::cout << "must set K matrix!\n";

return false;

}

global_model = std::shared_ptr<PointCloudModel>(new PointCloudModel());

global_model->K = K.clone();

global_model->distCoeffs = distCoeffs.clone();

//#pragma omp parallel for num_threads(2*omp_get_num_procs()-1)

for (int i = 0; i < images.size() - 1; i++) {

MyMatch &match = matchs[i];

if (i == 0) {

match.match_type = 0;

}

match.leftimage = images[i];

match.rightimage = images[i + 1];

match.global_model = global_model;

//std::cout << "Matching images " << i << " - " << i + 1 << std::endl;

std::printf("thread: %d Matching images %d - %d\n", std::this_thread::get_id(), i, i + 1);

match.SetUp();

}

for (int i = 0; i < images.size() - 1; i++) {

matchs[i].Build();

}

};

void MultiViewStereoReconstructor::Visualize()

{

auto& global_structure = global_model->structure;

std::vector < std::array<float, 3>> tpoints;

std::vector < std::array<float, 3>> tcolors;

for (size_t i = 0; i < global_structure.size(); ++i)

{

std::array<float, 3> tp;

tp[0] = global_structure[i].x;

tp[1] = global_structure[i].y;

tp[2] = global_structure[i].z;

tpoints.push_back(tp);

std::array<float, 3> tc;

tc[0] = global_structure[i].color[0];

tc[1] = global_structure[i].color[1];

tc[2] = global_structure[i].color[2];

tcolors.push_back(tc);

}

std::cout <<"points.size(): "<< tpoints.size() << "\n";

GlobalVTKUtils::ShowPoints(tpoints, tcolors);

//GlobalVTKUtils::ShowPoints(tpoints);

}

main.cpp

#include"MultiViewStereoReconstructor.h"

#include <io.h>

#include <chrono>

#ifndef INIT_TIMER2

#define INIT_TIMER2(name) \

std::chrono::high_resolution_clock::time_point timerStart##name = std::chrono::high_resolution_clock::now();

#endif // !INIT_TIMER2

#ifndef STOP_TIMER2

#define STOP_TIMER2(name,title) \

std::cout << "RUNTIME of " << title << ": " \

<< std::chrono::duration_cast<std::chrono::milliseconds>(std::chrono::high_resolution_clock::now() - timerStart##name).count()\

<< " ms " << std::endl;

#endif // !STOP_TIMER2

void get_files(const std::string & path, std::vector<std::string>& files)

{

std::cout << "[jhq] get_files" << std::endl << std::endl;

//File handle

long long hFile = 0;

//File information_ finddata_t requires io H header file

struct _finddata_t fileinfo;

std::string p;

if ((hFile = _findfirst(p.assign(path).append("/*").c_str(), &fileinfo)) != -1) {

do {

if (strcmp(fileinfo.name, ".") != 0 && strcmp(fileinfo.name, "..") != 0) {

files.push_back(p.assign(path).append(fileinfo.name));

}

} while (_findnext(hFile, &fileinfo) == 0);

_findclose(hFile);

}

}

int main()

{

INIT_TIMER2(MultiViewStereoReconstructor)

std::vector<std::string> img_names;

get_files("./Viewer16/", img_names);

cv::Mat K(cv::Matx33d(

2889.84, 0, 825.91,

0, 2881.08, 602.73,

0, 0, 1));

cv::Mat distCoeffs = (cv::Mat_<float>(1, 5) << -0.06122164316121147, -1.243003308575242, 0.001462736730339558, -0.0001684641589496723, 1.749846418870851);

std::vector<cv::Mat> images;

for (auto it = img_names.begin(); it != img_names.end(); ++it){

std::cout << *it << std::endl;

#if 1

cv::Mat im = cv::imread(*it);

cv::Size image_size = im.size();

cv::Mat mapx = cv::Mat(image_size, CV_32FC1);

cv::Mat mapy = cv::Mat(image_size, CV_32FC1);

cv::Mat R =cv::Mat::eye(3, 3, CV_32F);

cv::initUndistortRectifyMap(K, distCoeffs, R, K,image_size, CV_32FC1, mapx, mapy);//Used to calculate distortion mapping

cv::Mat newimage;

cv::remap(im, newimage, mapx, mapy, cv::INTER_LINEAR);//Apply the obtained mapping to the image

images.push_back(newimage);

#else

images.push_back(cv::imread(*it));

#endif

}

MultiViewStereoReconstructor mvs;

mvs.SetK(K);

mvs.SetDistCoeffs(distCoeffs);

mvs.SetData(images);

mvs.Execute();

STOP_TIMER2(MultiViewStereoReconstructor,"MultiViewStereoReconstructor")

mvs.Visualize();

return 0;

}