HashMap overview

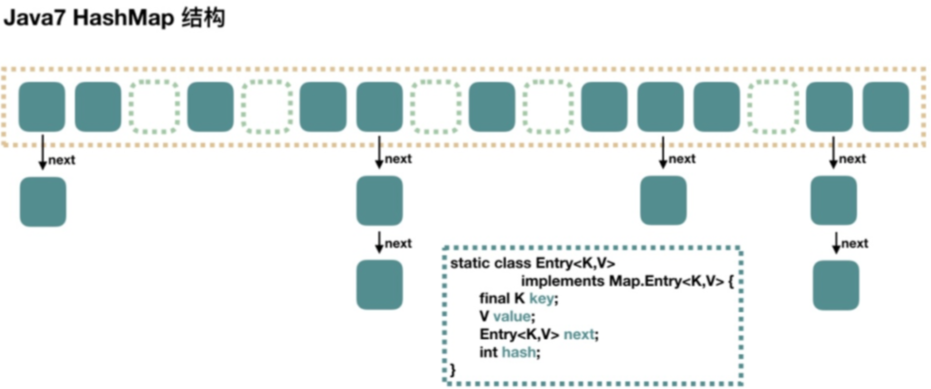

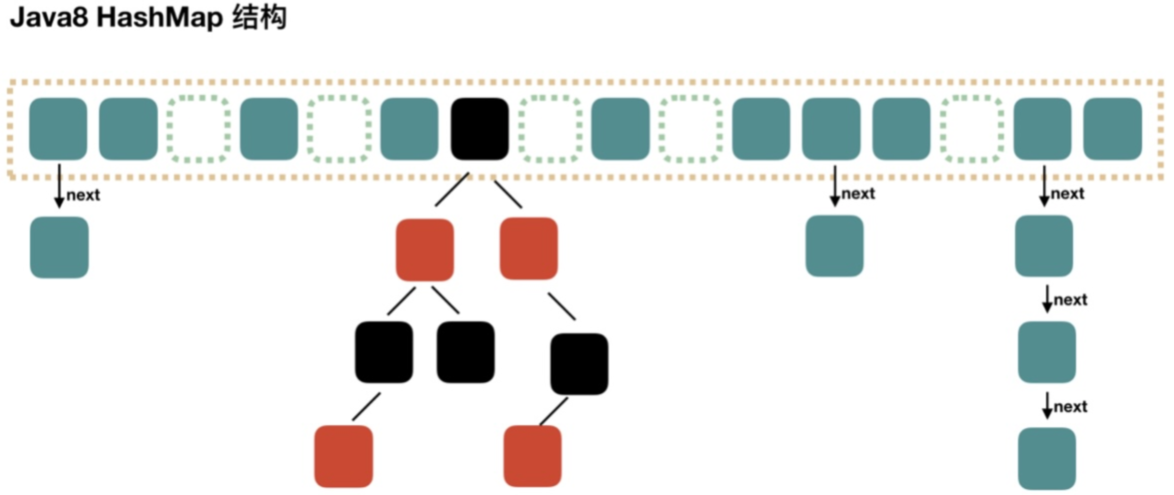

HashMap 1.7 and before, the underlying data structure uses [array + linked list], and after 1.8 uses [array + linked list / red black tree]. The use of array storage elements is because the search is fast. The linked list is to solve hash conflicts, while the red black tree is a data structure to optimize the linked list in order to solve the slow query speed in the linked list. HashMap is non thread safe. If thread safety is required, use concurrent HashMap or collections Synchronizedmap () wraps HashMap to achieve thread safety.

Use two figures to clearly express the data structure of hashmap in jdk 1.7 / 1.8. [picture from Internet]

Why does JDK use red black tree to optimize the linked list in HashMap? What are the benefits?? Listen to me.

Mangrove black

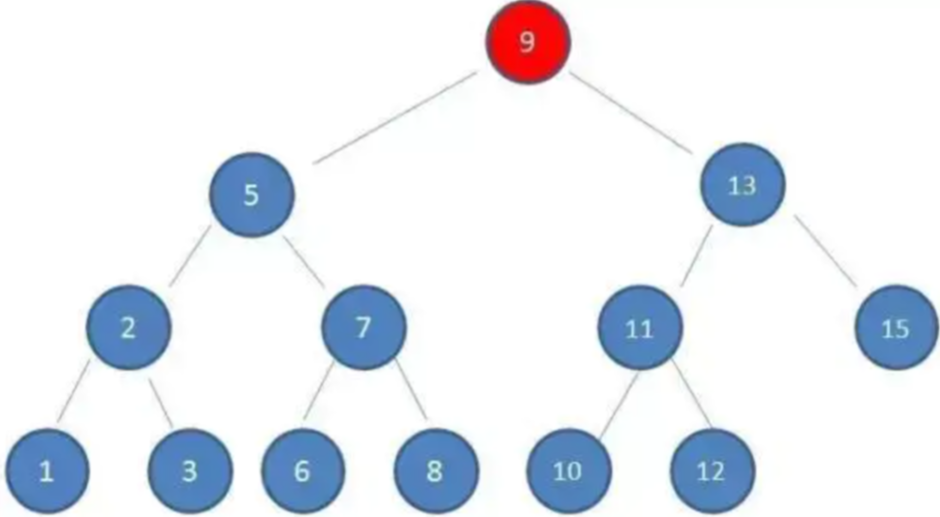

Before introducing the red black tree, first introduce [binary search tree], also known as: [binary search tree], [binary sort tree], which is generally used to optimize the linked list. It has the following characteristics:

- The value of all nodes on the left subtree is less than or equal to the value of its root node

- The value of all nodes on the right subtree is greater than or equal to the value of its root node

- The left and right subtrees are also binary sort trees

eg: to find the element 10, the search process is as follows:

- 9 < 10 (right node)

- 13 > 10 (left node)

- 11 > 10 (left node)

- 10 = 10 element found

The maximum number of times a binary sort tree finds a result is the height of the binary tree. What about the extreme case? For example, the elements are [7,6,5,4,3,2,1] respectively. At this time, according to the characteristics of binary sort tree, the tree will become a straight line, which will become a linear query. The time complexity is O(N). In order to optimize this problem, the [red black tree] appears.

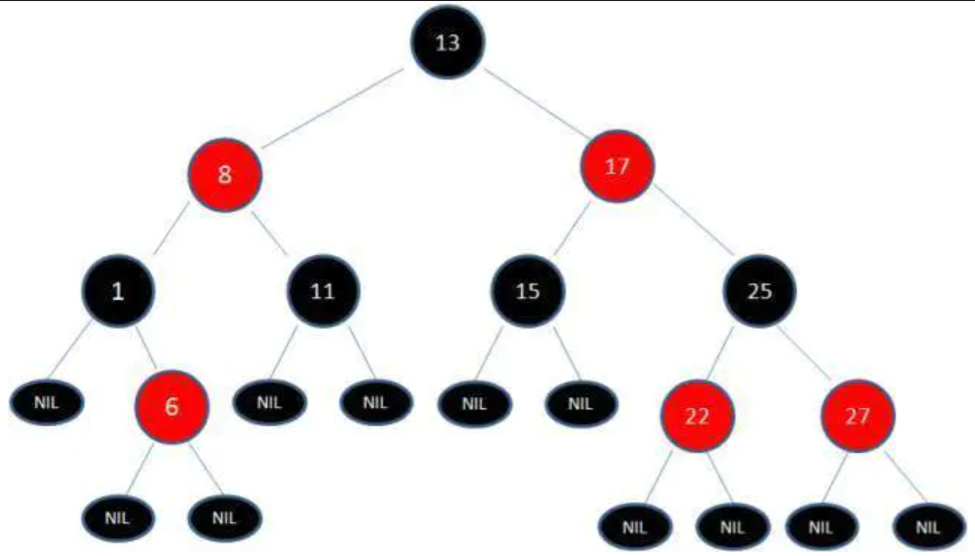

Red black tree is a kind of self balancing binary search tree. The feature of self balancing is to optimize the problems that may be long in the linked list in HashMap.

Red black tree is a binary lookup tree with color attribute on each node. Its characteristics are as follows:

- The nodes are red / black

- The root node must be black

- Each leaf node (NIL node, empty node) is black

- The two child nodes of each red node are black (there cannot be two consecutive red nodes on all paths from each leaf to the root)

- All paths from any node to each of its leaves contain the same number of black nodes

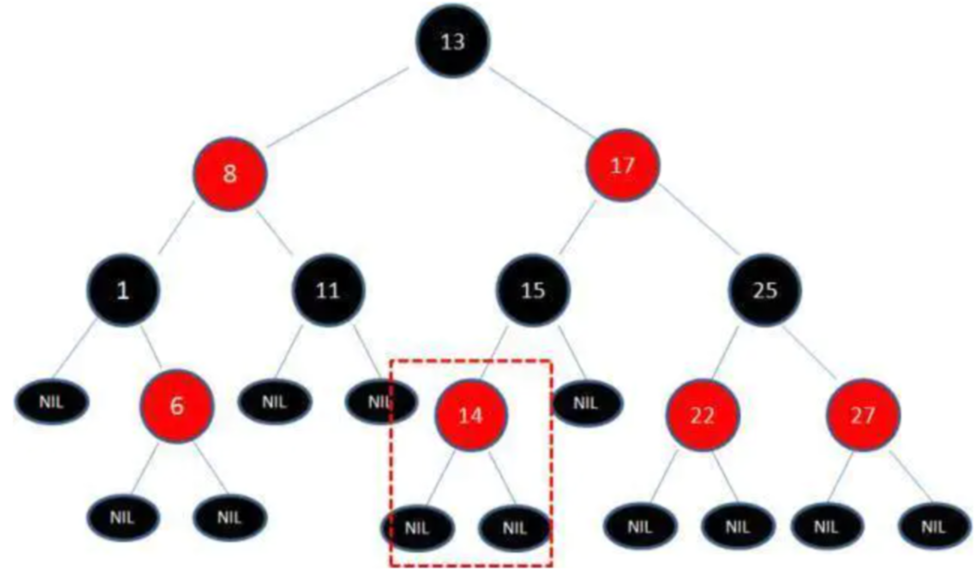

[a typical red black tree]

How to insert a node into the red black tree?

eg: inserting 14 node elements into the above figure will not destroy the characteristics of the red black tree.

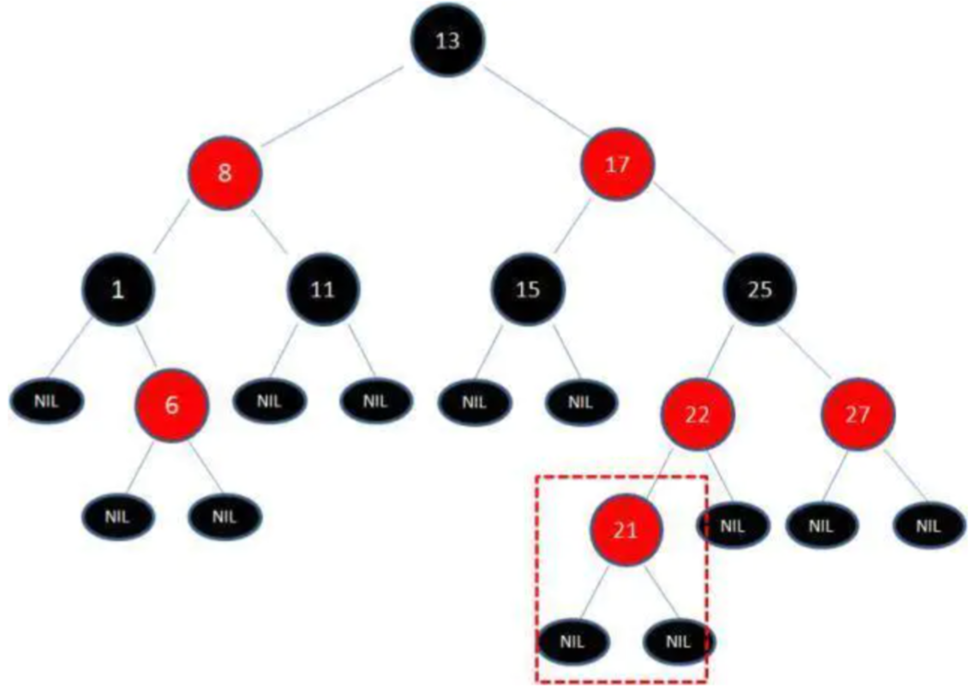

If element 21 is inserted, the characteristics of red black tree will be destroyed.

How does the red black tree maintain its self balance??

Red and black trees maintain the rules of red and black trees through "color change" and "rotation". Rotation is divided into "left rotation" and "right rotation".

Rotation example

1) Rotate the following figure to the left

2) Rotate the following figure to the right

Source code analysis

The following source codes are from jdk1 eight

Default value

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16, default initialization capacity static final int MAXIMUM_CAPACITY = 1 << 30; // Maximum capacity static final float DEFAULT_LOAD_FACTOR = 0.75f; //Load factor static final int TREEIFY_THRESHOLD = 8; // Tree threshold static final int UNTREEIFY_THRESHOLD = 6; // De treeing threshold static final int MIN_TREEIFY_CAPACITY = 64; // Minimum treelized array capacity, and the minimum array length converted to red black tree is 64

Two factors affecting HashMap performance

Initial capacity

- Capacity when creating hash table

Load factor

- The capacity that measures the filling degree of the hash table before automatically increasing the capacity. When the amount of data in the hash table exceeds (load factor * current capacity), the hash table will be rebuilt

- eg: when the HashMap capacity is not specified, the initial capacity is 16. When the amount of map data reaches 12, the hash table will be expanded and rebuilt.

public HashMap(int initialCapacity, float loadFactor) {}

put()

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node<K,V>[] tab; Node<K,V> p; int n, i;

// If the table of the storage element is empty, initialize the necessary fields

if ((tab = table) == null || (n = tab.length) == 0)

// Get length 16

n = (tab = resize()).length;

// If the node obtained according to the hash value is empty, a new node will be created

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

else {

Node<K,V> e; K k;

// If the hash and key values of the newly inserted node and the p node in the table are the same

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

// If it is a red black tree node, insert the red black tree

else if (p instanceof TreeNode)

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

else {

for (int binCount = 0; ; ++binCount) {

// If this single linked list has only one header node, you can directly create a new node

if ((e = p.next) == null) {

p.next = newNode(hash, key, value, null);

// When the length of the linked list reaches 8, the linked list is converted into a red black tree

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

// Build red black tree

treeifyBin(tab, hash);

break;

}

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

break;

p = e;

}

}

// When the key is repeated, the old value is overwritten, and onlyIfAbsent is the input parameter false

if (e != null) { // existing mapping for key

V oldValue = e.value;

// Judge whether overwrite is allowed and whether value is empty

if (!onlyIfAbsent || oldValue == null)

e.value = value;

afterNodeAccess(e);

return oldValue;

}

}

// Record the number of times to modify the internal structure of HashMap (mapping times or re hashing times)

++modCount;

// map size > threshold

if (++size > threshold)

// Expand the size of the array to twice the original size, and assign the original array to the new array, which may be a linked list or a red black tree

resize();

// Callback to allow LinkedHashMap post operation

afterNodeInsertion(evict);

return null;

}

resize()

/**

* Initialize or increase the size of the table. If the table is empty, allocate it according to the initial capacity. Expansion table, power 2

*/

final Node<K,V>[] resize() {

// Get various information of the old element array

Node<K,V>[] oldTab = table;

// Old array length

int oldCap = (oldTab == null) ? 0 : oldTab.length;

// Old array threshold

int oldThr = threshold;

// Define the length of the new array and the critical value of capacity expansion

int newCap, newThr = 0;

// If the original table is not empty

if (oldCap > 0) {

// If the array length reaches the maximum value, modify the critical value to the maximum value

if (oldCap >= MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return oldTab;

}

// Capacity expansion operation (2x)

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

newThr = oldThr << 1; // double threshold

}

else if (oldThr > 0) // initial capacity was placed in threshold

newCap = oldThr;

else { // zero initial threshold signifies using defaults

// The default values are used for the initial capacity and threshold of the new array

newCap = DEFAULT_INITIAL_CAPACITY; // 16

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY); // 16 * 0.75

}

// The critical value is also 0. Set the critical value

if (newThr == 0) {

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

// Update load factor

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];

table = newTab;

if (oldTab != null) {

for (int j = 0; j < oldCap; ++j) {

Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

// Red black tree adjustment

else if (e instanceof TreeNode)

((TreeNode<K,V>)e).split(this, newTab, j, oldCap);

else { // preserve order

// Linked list adjustment

Node<K,V> loHead = null, loTail = null;

Node<K,V> hiHead = null, hiTail = null;

Node<K,V> next;

do {

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}

treeify

/**

* Insert each value in the linked list into the red black tree

*/

final void treeify(Node<K,V>[] tab) {

TreeNode<K,V> root = null;

for (TreeNode<K,V> x = this, next; x != null; x = next) {

next = (TreeNode<K,V>)x.next;

// Initialize Root

x.left = x.right = null;

if (root == null) {

x.parent = null;

x.red = false;

root = x;

}

else {

K k = x.key;

int h = x.hash;

Class<?> kc = null;

for (TreeNode<K,V> p = root;;) {

int dir, ph;

K pk = p.key;

if ((ph = p.hash) > h)

dir = -1;

else if (ph < h)

dir = 1;

else if ((kc == null &&

(kc = comparableClassFor(k)) == null) ||

(dir = compareComparables(kc, k, pk)) == 0)

dir = tieBreakOrder(k, pk);

TreeNode<K,V> xp = p;

if ((p = (dir <= 0) ? p.left : p.right) == null) {

x.parent = xp;

if (dir <= 0)

xp.left = x;

else

xp.right = x;

// Balance adjustment

root = balanceInsertion(root, x);

break;

}

}

}

}

// Ensure that the given root is the root node

moveRootToFront(tab, root);

}

Interview questions

1. Why does HashMap choose red black tree as the data structure optimization linked list?

2. Why is the default initial capacity of HashMap 16?

3. Conditions for converting linked list into red black tree in HashMap?

4. Why is HashMap non thread safe? What areas does it reflect?

5. How to implement put in Hashmap?

6. Why is the Hashmap capacity power 2? What happens if it's not a power of two?

7. What types of elements are used as key s when using Hashmap?

summary

The above are some of the contents summarized by Xiaobian in the learning process. If there is any incomprehensible place, I hope you guys can point it out. Thank you very much.