1. Preface

The previous article, Redis Master-Slave Replication Principle, briefly explained the basics of master-slave replication, including full replication, the replication backlog buffer, and incremental replication. Interested readers may want to read it first.

Master-slave replication makes read-write separation, data backup, and similar features possible. However, if the master goes down, an operator has to manually promote one of the slaves to be the new master and point the remaining slaves at it with slaveof before the system recovers.

Therefore, one disadvantage of plain master-slave mode is that it cannot recover from failures automatically. Redis later introduced the Sentinel mechanism, which greatly improves the availability of the system.

2. What is a Sentinel

A sentinel is someone who stands guard, watches the surroundings, spots an enemy as early as possible, and raises the alarm in time.

A sentinel in Redis is a special Redis instance that does not store data. That is, a sentinel does not load the RDB file when it starts.

For Redis persistence, see my other article, Talk about Redis Persistence: AOF Logs and RDB Snapshots.

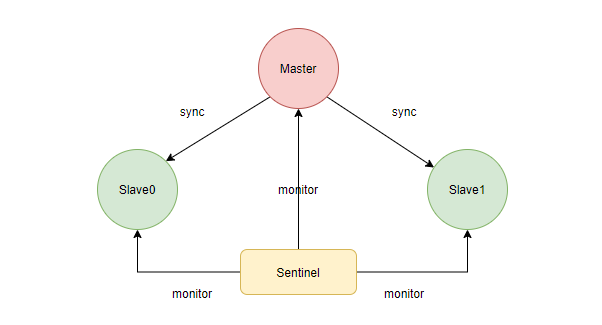

The figure above shows a typical Sentinel architecture consisting of data nodes and sentinel nodes. Multiple sentinel nodes are usually deployed.

Sentinels play three main roles: monitoring, selection, and notification.

Monitoring: sentinels use a heartbeat mechanism to check periodically whether the master and the slaves are alive.

Selection: when the sentinels detect that the master is down, they select one slave and promote it to be the new master.

Notification: sentinels tell all slaves the address of the new master so that the slaves (and the old master, once it comes back) run slaveof against it. They also tell clients the address of the new master.

The monitoring and selection processes are described in detail below.

3. Monitoring

The Sentinel system probes the master, the slaves, and the other sentinels through three timed tasks.

How does a sentinel get the slave addresses?

First, the master address is set in the sentinel's configuration file. After the sentinel starts, it sends the info command to the master every 10 seconds to obtain the current master-slave topology, which gives it the addresses of all slaves.
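As a rough illustration of how slave addresses could be pulled out of the info reply, the sketch below parses one line of its replication section. It assumes the `slaveN:ip=...,port=...` line layout used by Redis 2.8 and later. `parse_slave_line` is a hypothetical helper written for this article, not Sentinel's actual parser.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical helper: extract ip and port from one replication line
 * of the info reply, for example
 *   slave0:ip=10.0.0.2,port=6380,state=online,offset=1234,lag=0
 * ip must point to a buffer of at least 64 bytes.
 * Returns 1 if the line describes a slave, 0 otherwise. */
int parse_slave_line(const char *line, char *ip, int *port) {
    int index; /* the N in slaveN, parsed but not used here */

    assert(line != NULL && ip != NULL && port != NULL);
    if (sscanf(line, "slave%d:ip=%63[^,],port=%d", &index, ip, port) == 3)
        return 1;
    return 0;
}
```

Lines such as `role:master` or `connected_slaves:2` do not match the pattern and are simply skipped by the caller.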

How do sentinels discover other sentinels?

Every 2 seconds, each sentinel uses the pub/sub mechanism to publish a message on the master's `__sentinel__:hello` channel. The message contains the sentinel's own ip, port, and runid, together with its configuration for the master.

Every sentinel subscribes to this channel, so by publishing and consuming messages there the sentinels become aware of one another.
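To make the hello message more concrete, here is a minimal sketch that builds such a payload. The comma-separated layout, the `hello_info` struct, and `format_hello` are assumptions for illustration only. The real message carries additional fields and its exact format is defined by the Sentinel source.

```c
#include <assert.h>
#include <stdio.h>

#define HELLO_CHANNEL "__sentinel__:hello"

/* Hypothetical, simplified view of what one sentinel announces. */
struct hello_info {
    const char *sentinel_ip;
    int sentinel_port;
    const char *sentinel_runid;
    const char *master_name;
    const char *master_ip;
    int master_port;
};

/* Formats the payload into buf. Returns the length the payload needs,
 * as snprintf does, so the caller can detect truncation. */
int format_hello(char *buf, size_t len, const struct hello_info *h) {
    assert(buf != NULL && h != NULL);
    return snprintf(buf, len, "%s,%d,%s,%s,%s,%d",
                    h->sentinel_ip, h->sentinel_port, h->sentinel_runid,
                    h->master_name, h->master_ip, h->master_port);
}
```

A sentinel would publish the resulting string on `HELLO_CHANNEL`, and every other sentinel subscribed to that channel would learn the sender's address and runid from it.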

How do sentinels monitor nodes?

The master address comes from the startup configuration, the slave addresses come from the info command, and the other sentinels are discovered through publish/subscribe.

On this basis, each sentinel sends a PING command to the master, the slaves, and the other sentinels every second to check whether they are alive.

Subjective and objective offline

When a sentinel receives no valid reply from the master for the entire period configured by down-after-milliseconds (30 seconds by default), that sentinel considers the master subjectively offline and marks it as sdown (subjectively down).
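The subjective-offline rule boils down to a single time comparison. The sketch below is a simplified model with hypothetical names. It only captures the "no valid reply for longer than down-after-milliseconds" condition described above.

```c
#include <assert.h>

/* Default for down-after-milliseconds mentioned in the text. */
#define DEFAULT_DOWN_AFTER_MS 30000

/* Returns 1 if the node should be marked sdown: no valid reply has
 * arrived for longer than down_after_ms. */
int is_subjectively_down(long long now_ms, long long last_valid_reply_ms,
                         long long down_after_ms) {
    assert(down_after_ms > 0);
    return (now_ms - last_valid_reply_ms) > down_after_ms;
}
```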

To guard against a misjudgment by a single sentinel, the sentinel then consults the other sentinels.

It sends the sentinel is-master-down-by-addr command to them. If the number of sentinels that consider the master down, including itself, reaches the configured quorum (commonly set to more than half of the sentinels), the current sentinel considers the master objectively offline and marks it as odown (objectively down).

4. Selection

Once the master is considered objectively offline, the sentinels hold an election to choose a leader sentinel, which then carries out the master-slave failover.

Sentinel Election

When sentinel A sends the sentinel is-master-down-by-addr command to the other sentinels, it also asks them to agree to make it the leader. In other words, it asks for their votes.

Each sentinel has only one vote per election. Votes are granted on a first-come, first-served basis: if a sentinel has not yet voted for anyone else, it votes for sentinel A.

The first sentinel to collect more than half of the votes becomes the leader.
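The voting rule can be sketched as follows. The notion of an `epoch` that numbers each election round (starting at 1), as well as the names `voter`, `request_vote`, and `has_won`, are assumptions introduced for this example. It models only the two rules stated above: one vote per election, first come first served, and a majority to win.

```c
#include <assert.h>
#include <string.h>

#define RUNID_LEN 40

/* What one sentinel remembers about its latest vote. */
struct voter {
    long long voted_epoch;          /* election round of the last vote */
    char voted_for[RUNID_LEN + 1];  /* runid that received that vote   */
};

/* Returns 1 if v's vote for this epoch belongs to candidate.
 * The first request seen in a new epoch takes the vote. */
int request_vote(struct voter *v, long long epoch, const char *candidate) {
    assert(v != NULL && candidate != NULL);
    if (epoch > v->voted_epoch) {
        v->voted_epoch = epoch;
        strncpy(v->voted_for, candidate, RUNID_LEN);
        v->voted_for[RUNID_LEN] = '\0';
    }
    return epoch == v->voted_epoch && strcmp(v->voted_for, candidate) == 0;
}

/* A candidate wins with more than half of all sentinels' votes. */
int has_won(int votes, int total_sentinels) {
    return votes > total_sentinels / 2;
}
```

Because a voter never changes its mind within an epoch, at most one candidate can reach a majority in any given round.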

The sentinel election is based on the leader election of the Raft algorithm. Raft is not discussed in detail here. Interested readers can refer to another article of mine, 22 Diagrams to Get You Started with the Distributed Consistency Algorithm Raft.

That article uses many diagrams, and I am sure you can learn something new from it. It opens the door to distributed consistency algorithms such as Paxos and Zab.

The majority-vote mechanism is also common in other algorithms. In RedLock, for example, a distributed lock counts as acquired once it has been set successfully on more than half of the nodes. I wrote tens of thousands of words walking through Redis's bumpy road to distributed locking and the many problems that arise along the way, from a single machine to master-slave to multiple instances.

ZooKeeper elections also use majority voting. In the article Interviewer: Can you draw me a picture of the Zookeeper election?, I analyzed the ZooKeeper election process from the source-code point of view.

Failure Recovery

Once a leader sentinel has been elected, it is responsible for carrying out the failure recovery.

Failure recovery is divided into the following two steps:

- First, select a healthy slave with the most up-to-date data from among the slaves.

- Then promote that slave to be the new master by having it execute slaveof no one, and have the other slaves execute slaveof against the new master.
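The second step can be pictured as choosing which command each node receives. This is a minimal sketch, not Sentinel's implementation: `node` and `failover_command` are hypothetical, and the function only builds the command text without sending anything.

```c
#include <assert.h>
#include <stdio.h>

struct node {
    const char *ip;
    int port;
};

/* Writes into buf the command that target should receive once promoted
 * has been chosen as the new master. Returns the command length, as
 * snprintf does. */
int failover_command(char *buf, size_t len, const struct node *target,
                     const struct node *promoted) {
    assert(buf != NULL && target != NULL && promoted != NULL);
    if (target == promoted)
        return snprintf(buf, len, "SLAVEOF NO ONE");
    return snprintf(buf, len, "SLAVEOF %s %d", promoted->ip, promoted->port);
}
```

The promoted slave gets `SLAVEOF NO ONE`, and every other slave gets `SLAVEOF` followed by the new master's address.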

To elaborate on the first step: the selection is conditional. Unhealthy nodes are filtered out first, the remaining ones are sorted by a set of rules, and the first slave in the sorted order is chosen. Let's go straight to the source code:

    sentinelRedisInstance *sentinelSelectSlave(sentinelRedisInstance *master) {
        sentinelRedisInstance **instance =
            zmalloc(sizeof(instance[0])*dictSize(master->slaves));
        sentinelRedisInstance *selected = NULL;
        int instances = 0;
        dictIterator *di;
        dictEntry *de;
        mstime_t max_master_down_time = 0;

        if (master->flags & SRI_S_DOWN)
            max_master_down_time += mstime() - master->s_down_since_time;
        max_master_down_time += master->down_after_period * 10;

        di = dictGetIterator(master->slaves);
        while((de = dictNext(di)) != NULL) {
            sentinelRedisInstance *slave = dictGetVal(de);
            mstime_t info_validity_time;

            // Subjectively or objectively down
            if (slave->flags & (SRI_S_DOWN|SRI_O_DOWN)) continue;
            // Disconnected
            if (slave->link->disconnected) continue;
            // No valid reply to the sentinel's PING for more than 5 seconds
            if (mstime() - slave->link->last_avail_time > SENTINEL_PING_PERIOD*5) continue;
            // Priority is 0
            if (slave->slave_priority == 0) continue;
            // INFO data is stale: older than 5 ping periods if the master is
            // subjectively down, otherwise older than 3 info periods
            if (master->flags & SRI_S_DOWN)
                info_validity_time = SENTINEL_PING_PERIOD*5;
            else
                info_validity_time = SENTINEL_INFO_PERIOD*3;
            if (mstime() - slave->info_refresh > info_validity_time) continue;
            // Disconnected from the master for longer than max_master_down_time
            if (slave->master_link_down_time > max_master_down_time) continue;
            // Healthy nodes are added to the instance array
            instance[instances++] = slave;
        }
        dictReleaseIterator(di);
        if (instances) {
            // Sort by the promotion rules, then select the first one
            qsort(instance,instances,sizeof(sentinelRedisInstance*),
                compareSlavesForPromotion);
            selected = instance[0];
        }
        zfree(instance);
        return selected;
    }

    int compareSlavesForPromotion(const void *a, const void *b) {
        sentinelRedisInstance **sa = (sentinelRedisInstance **)a,
                              **sb = (sentinelRedisInstance **)b;
        char *sa_runid, *sb_runid;

        // Compare priorities first: the lower value wins (0 was already filtered out)
        if ((*sa)->slave_priority != (*sb)->slave_priority)
            return (*sa)->slave_priority - (*sb)->slave_priority;

        // Same priority: the larger replication offset wins
        if ((*sa)->slave_repl_offset > (*sb)->slave_repl_offset) {
            return -1; /* a < b */
        } else if ((*sa)->slave_repl_offset < (*sb)->slave_repl_offset) {
            return 1; /* a > b */
        }

        // Same priority and same replication offset: compare runid
        sa_runid = (*sa)->runid;
        sb_runid = (*sb)->runid;
        // Older versions of Redis do not report a runid in the info reply,
        // so it may be NULL. A NULL runid is treated as larger than any other.
        if (sa_runid == NULL && sb_runid == NULL) return 0;
        else if (sa_runid == NULL) return 1; /* a > b */
        else if (sb_runid == NULL) return -1; /* a < b */
        // Sort alphabetically (case-insensitive); the one that comes first wins
        return strcasecmp(sa_runid, sb_runid);
    }

Therefore, the following slaves are filtered out:

- Subjectively down, objectively down, or disconnected

- No valid reply to the sentinel's PING command for more than 5 seconds

- priority=0

- Stale info data: no info reply within 5 seconds if the master is subjectively down, or within 3 info periods (30 seconds at the 10-second interval) otherwise

- Disconnected from the master for longer than max_master_down_time
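The last threshold is easier to see with numbers. The sketch below repeats the arithmetic from the source excerpt as a standalone function. The function and its parameter names are made up for the example.

```c
#include <assert.h>

/* max_master_down_time as computed in the excerpt: the time the master
 * has already been subjectively down (if it is), plus ten times the
 * down-after period. All values are in milliseconds. */
long long max_master_down_time(int master_sdown, long long now_ms,
                               long long s_down_since_ms,
                               long long down_after_ms) {
    long long t = 0;

    assert(down_after_ms > 0);
    if (master_sdown)
        t += now_ms - s_down_since_ms;
    t += down_after_ms * 10;
    return t;
}
```

With the default down-after-milliseconds of 30000 and a master that has been subjectively down for 5 seconds, the limit is 5000 + 300000 = 305000 ms. A slave whose link to the master has been down for longer than that is excluded.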

The remaining nodes are healthy. They are then sorted with qsort according to the following rules:

- Priority: the slave with the lower priority value (0 excluded) is preferred

- Replication offset: the slave with the larger offset is preferred

- runid: compared alphabetically, and the slave that sorts first is preferred
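The three ordering rules can be tried out in isolation with a simplified struct. This sketch mirrors the comparison logic from the excerpt but is not the Sentinel code: `candidate`, `compare_candidates`, and `pick_best` are hypothetical, and `strcasecmp` is assumed to be available from the POSIX header `<strings.h>`.

```c
#include <assert.h>
#include <stdlib.h>
#include <strings.h> /* strcasecmp (POSIX) */

struct candidate {
    int priority;          /* lower is better, 0 already filtered out */
    long long repl_offset; /* larger is better                        */
    const char *runid;     /* may be NULL on old servers              */
};

static int compare_candidates(const void *a, const void *b) {
    const struct candidate *ca = a, *cb = b;

    if (ca->priority != cb->priority)
        return ca->priority < cb->priority ? -1 : 1;
    if (ca->repl_offset != cb->repl_offset)
        return ca->repl_offset > cb->repl_offset ? -1 : 1;
    if (ca->runid == NULL && cb->runid == NULL) return 0;
    if (ca->runid == NULL) return 1;  /* NULL sorts last */
    if (cb->runid == NULL) return -1;
    return strcasecmp(ca->runid, cb->runid);
}

/* Sorts the candidates in place and returns the preferred one,
 * or NULL if there are none. */
struct candidate *pick_best(struct candidate *c, size_t n) {
    if (n == 0) return NULL;
    assert(c != NULL);
    qsort(c, n, sizeof(c[0]), compare_candidates);
    return &c[0];
}
```

The comparator uses explicit comparisons instead of subtracting the two values, which avoids overflow for arbitrary inputs. The ordering it produces follows the same three rules as the excerpt.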

5. Summary

This is an introductory article on Redis Sentinel. It focuses on the sentinel's roles: monitoring, selection, and notification.

Combined with Redis read-write separation, sentinels make failure recovery automatic and improve overall availability.

However, Sentinel cannot solve the write bottleneck of a single Redis master. That requires cluster mode, and articles on it are in my writing plan for next year.