Original link: http://tecdat.cn/?p=19936

Original source: Tuoduan Data Tribe (tecdat) official account

In this tutorial, you will learn how to create a neural network model in R.

Neural networks (artificial neural networks) can learn from examples. An artificial neural network is an information-processing model inspired by biological nervous systems. It consists of a large number of highly interconnected processing elements, called neurons, that work together to solve a problem. It follows nonlinear paths and processes information in parallel across its nodes. A neural network is a complex adaptive system: adaptive means that it can change its internal structure by adjusting the weights of its inputs.

Neural networks aim to solve problems that are easy for humans but hard for machines, such as telling pictures of cats from pictures of dogs or recognizing images of digits. These problems are usually called pattern recognition, and their applications range from optical character recognition to object detection.

This tutorial will cover the following topics:

- Introduction to neural networks
- Feedforward and feedback neural networks
- Activation functions
- Implementation of a neural network in R
- Use cases
- Advantages and disadvantages
- Conclusion

Introduction to neural networks

A neural network is an algorithm, inspired by the human brain, for performing specific tasks. It is a set of connected input/output units in which each connection has an associated weight. During the learning stage, the network adjusts these weights so that it predicts the correct class label for a given input.
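The learning stage described above can be sketched with a single logistic neuron trained by plain gradient descent. This is a hedged illustration only: the OR dataset, learning rate and number of epochs are assumptions, and it is not how the neuralnet package trains (neuralnet uses resilient backpropagation, "rprop+", by default).

```r
# Minimal sketch (assumed setup): one logistic neuron learns the logical OR
# function by repeatedly nudging its weights against the gradient of the
# squared error 1/2 * (y - p)^2.
sigmoid <- function(z) 1 / (1 + exp(-z))

X <- matrix(c(0, 0,
              0, 1,
              1, 0,
              1, 1), ncol = 2, byrow = TRUE)
y <- c(0, 1, 1, 1)            # OR labels

w  <- c(0, 0)                 # input weights, start at zero
b  <- 0                       # bias
lr <- 0.5                     # learning rate (assumed value)

for (epoch in 1:5000) {
  p <- sigmoid(X %*% w + b)               # current predictions, 4 x 1
  delta <- (p - y) * p * (1 - p)          # dLoss/dz for each row
  w <- w - lr * as.vector(t(X) %*% delta) # adjust the weights
  b <- b - lr * sum(delta)                # adjust the bias
}

pred <- as.integer(sigmoid(X %*% w + b) > 0.5)
print(pred)
```

After training, the adjusted weights separate the single 0 row from the three 1 rows, which is exactly the "learning by adjusting weights" the paragraph describes.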

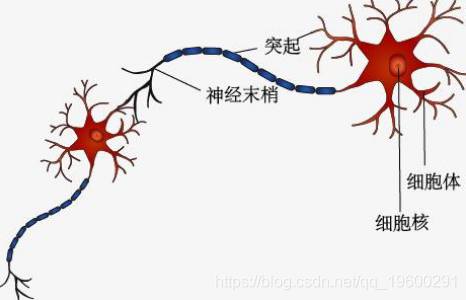

The human brain consists of billions of nerve cells that process information. Each nerve cell can be regarded as a simple processing system. These interconnected neurons, known as a biological neural network, transmit information through electrical signals. This parallel, interacting system enables the brain to think and process information. The dendrites of a neuron receive input signals from other neurons and, based on those inputs, the neuron sends a signal along its axon to other neurons.

Dendrites receive signals from other neurons. The cell body sums all the input signals to produce an output; when the sum reaches a threshold, the signal is sent out through the axon. A synapse is the point of interaction between two neurons, where electrochemical signals are passed from one neuron to the next.

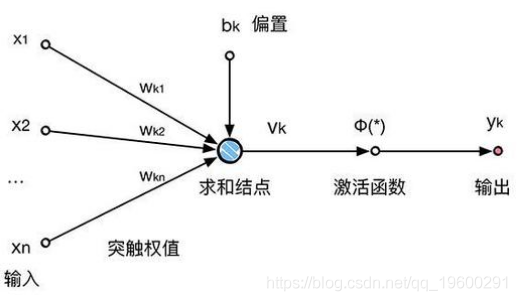

x1, x2, ..., xn are the input variables and w1, w2, ..., wn are the weights of the corresponding inputs. b is the bias, which is added to the weighted sum of the inputs, so the neuron's net input is z = w1*x1 + w2*x2 + ... + wn*xn + b. The bias and weights are the adjustable parameters of the neuron, and they are tuned by a learning rule. The net input can range from -inf to +inf, because the neuron itself has no notion of bounds. We therefore need a mechanism that maps the neuron's input to its output; this mapping is called the activation function.
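The neuron model above can be written in a few lines of R. This is a minimal sketch: the input, weight and bias values are illustrative assumptions, and the logistic sigmoid is used as the default activation.

```r
# Minimal sketch of one artificial neuron: weighted sum of the inputs plus a
# bias, passed through an activation function (logistic sigmoid by default).
neuron <- function(x, w, b, activation = function(z) 1 / (1 + exp(-z))) {
  z <- sum(w * x) + b     # net input: unbounded, anywhere in (-Inf, Inf)
  activation(z)           # the activation maps z to the neuron's output
}

x <- c(0.5, -1.2, 3.0)    # inputs x1..x3 (illustrative values)
w <- c(0.8, 0.1, -0.4)    # weights w1..w3 (illustrative values)
b <- 0.2                  # bias (illustrative value)

z <- sum(w * x) + b
print(z)                                       # -0.72
print(neuron(x, w, b))                         # logistic output, between 0 and 1
print(neuron(x, w, b, activation = identity))  # identity: output equals z
```

With the identity activation the output is just the unbounded net input; swapping in the sigmoid squeezes it into (0, 1), which is the mapping role the paragraph assigns to the activation function.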

Feedforward and feedback artificial neural networks

There are two main types of artificial neural networks: feedforward and feedback networks. A feedforward neural network is non-recurrent: neurons in one layer connect only to neurons in the next layer, and the connections never form a cycle. In a feedforward network, the signal flows in only one direction, toward the output layer.

A feedback neural network contains loops. Introducing loops lets signals travel in both directions, and the feedback causes the network's behavior to change over time depending on its inputs. Feedback neural networks are also called recurrent neural networks.
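A feedforward pass can be sketched as two matrix multiplications, one per layer. This sketch assumes a 2-3-1 layout (2 inputs, 3 hidden neurons, 1 output), random placeholder weights, and the convention that the first row of each weight matrix holds the biases; none of these values come from a trained model.

```r
# Minimal sketch of a feedforward pass through a 2-3-1 network.
# Weights are random placeholders; row 1 of each matrix holds the biases.
sigmoid <- function(z) 1 / (1 + exp(-z))

set.seed(42)
W1 <- matrix(rnorm(3 * 3), nrow = 3)   # (1 bias + 2 inputs) x 3 hidden neurons
W2 <- matrix(rnorm(4 * 1), nrow = 4)   # (1 bias + 3 hidden) x 1 output

forward <- function(X, W1, W2) {
  H <- sigmoid(cbind(1, X) %*% W1)     # input layer -> hidden layer
  sigmoid(cbind(1, H) %*% W2)          # hidden layer -> output layer
}

X <- matrix(c(0.2, 0.9,
              0.8, 0.5), ncol = 2, byrow = TRUE)  # two example rows
out <- forward(X, W1, W2)
print(out)   # one value in (0, 1) per row
```

Each layer only consumes the previous layer's output, so there is no cycle: the signal moves strictly from inputs to output.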
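The feedback loop can be sketched with a single recurrent unit whose previous output is fed back in at the next time step. The weights (`w_in`, `w_rec`) and the tanh activation are illustrative assumptions, not values from any fitted model.

```r
# Minimal sketch of a recurrent (feedback) unit: the state h from the previous
# step is fed back in, so each output depends on the whole input history.
rnn_run <- function(inputs, w_in = 0.5, w_rec = 0.8, b = 0) {
  h <- 0                                   # initial state
  states <- numeric(length(inputs))
  for (i in seq_along(inputs)) {
    h <- tanh(w_in * inputs[i] + w_rec * h + b)  # feedback: uses previous h
    states[i] <- h
  }
  states
}

s1 <- rnn_run(c(1, 0, 0))
s2 <- rnn_run(c(0, 0, 0))
print(s1)  # steps 2 and 3 are nonzero although their inputs are 0
print(s2)  # all zeros
```

The two input sequences end identically, yet the outputs differ at steps 2 and 3: the loop lets the unit "remember" the earlier input, which is the time-dependent behavior described above.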

Activation function

The activation function defines the output of a neuron. It makes the neural network nonlinear and therefore more expressive. Common activation functions include:

- Identity function: with the identity activation, a node's output equals its input. It suits tasks whose underlying behavior is linear (similar to linear regression). When the relationship is nonlinear, the identity function alone is not enough, but it can still serve as the activation of the final output node in regression tasks.

- Binary step function: if the input is above a given value (the threshold), the output is True (activated); if it is below the threshold, the output is False (not activated). This is useful in classifiers.

- S-shaped (sigmoidal) functions: the logistic and hyperbolic tangent functions are the most commonly used S-shaped functions. There are two types:

  - Sigmoid function: the logistic function, whose output lies between 0 and 1.

  - Tanh function: the hyperbolic tangent, whose output lies between -1 and 1.

- ReLU function (rectified linear unit): a piecewise linear function that outputs 0 for negative x and x otherwise. It mitigates the vanishing-gradient problem of the sigmoid and tanh functions and is the most commonly used activation function.
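The activation functions listed above can be written directly in base R. This is a sketch; the function names are illustrative, and the step function's threshold (default 0, with values equal to the threshold counted as activated) is an assumption.

```r
# Sketch of the activation functions listed above, in base R.
identity_act <- function(x) x                                  # output = input
binary_step  <- function(x, threshold = 0) as.numeric(x >= threshold)
sigmoid      <- function(x) 1 / (1 + exp(-x))                 # output in (0, 1)
tanh_act     <- function(x) tanh(x)                            # output in (-1, 1)
relu         <- function(x) pmax(0, x)                         # 0 for negative x

x <- c(-2, -0.5, 0, 0.5, 2)
print(round(rbind(identity = identity_act(x),
                  step     = binary_step(x),
                  sigmoid  = sigmoid(x),
                  tanh     = tanh_act(x),
                  relu     = relu(x)), 3))
```

Note that tanh is a rescaled logistic function, tanh(x) = 2 * sigmoid(2 * x) - 1, which is why both are grouped as S-shaped functions.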

Implementation of neural network in R

Create training dataset

We create the dataset. It needs two kinds of attributes (columns): features and labels. In the table shown above, you can see each student's professional-knowledge score, communication-skill score, and achievement. The first two columns (professional-knowledge score and communication-skill score) are therefore the features, and the third column (student achievement) is the binary label.

```r
# Create the training dataset.
# Assumed example scores for six students; substitute the values from the table.
professional_knowledge <- c(20, 10, 30, 20, 80, 30)
communication_score    <- c(90, 20, 40, 50, 50, 80)
achievement            <- c(1, 0, 0, 0, 1, 1)  # binary label
# Combine the features and the label into one data frame
df <- data.frame(professional_knowledge, communication_score, achievement)
```

Let's build a neural network classifier. First load the neuralnet library, then create the classifier by passing the formula (label and features), the dataset, the number of neurons in the hidden layer, and the settings for the activation function and the output neuron.

```r
# Load the neuralnet package (install it first with install.packages("neuralnet"))
library(neuralnet)

# Fit a network with one hidden layer of 3 neurons and logistic activation;
# linear.output = FALSE also applies the activation to the output (classification)
nn <- neuralnet(achievement ~ professional_knowledge + communication_score,
                data = df, hidden = 3,
                act.fct = "logistic", linear.output = FALSE)
```

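The fitted object contains the dependent and independent variables, the loss function (err.fct), the activation function (act.fct), the fitted probabilities (net.result), the weights, the start weights, and the result matrix (the error, the reached threshold, the number of steps, AIC and BIC if computed, and the weights of each repetition), among other information. Because the start weights are random, the numbers will differ from run to run: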

```
$model.list
$model.list$response
[1] "achievement"

$model.list$variables
[1] "professional knowledge"    "Communication skill score"

$err.fct
function (x, y)
{
    1/2 * (y - x)^2
}

$act.fct
function (x)
{
    1/(1 + exp(-x))
}

$net.result
$net.result[[1]]
            [,1]
[1,] 0.980052980
[2,] 0.001292503
[3,] 0.032268860
[4,] 0.032437961
[5,] 0.963346989
[6,] 0.977629865

$weights
$weights[[1]]
$weights[[1]][[1]]
           [,1]        [,2]       [,3]
[1,]  3.0583343  3.80801996 -0.9962571
[2,]  1.2436662 -0.05886708  1.7870905
[3,] -0.5240347 -0.03676600  1.8098647

$weights[[1]][[2]]
           [,1]
[1,]   4.084756
[2,]  -3.807969
[3,] -11.531322
[4,]   3.691784

$generalized.weights
$generalized.weights[[1]]
            [,1]       [,2]
[1,]  0.15159066 0.09467744
[2,]  0.01719274 0.04320642
[3,]  0.15657354 0.09778953
[4,] -0.46017408 0.34621212
[5,]  0.03868753 0.02416267
[6,] -0.54248384 0.37453006

$startweights
$startweights[[1]]
$startweights[[1]][[1]]
           [,1]        [,2]       [,3]
[1,]  0.1013318 -1.11757311 -0.9962571
[2,]  0.8583704 -0.15529112  1.7870905
[3,] -0.8789741  0.05536849  1.8098647

$startweights[[1]][[2]]
           [,1]
[1,] -0.1283200
[2,] -1.0932526
[3,] -1.0077311
[4,] -0.5212917

$result.matrix
                                                [,1]
error                                    0.002168460
reached.threshold                        0.007872764
steps                                  145.000000000
Intercept.to.1layhid1                    3.058334288
professional knowledge.to.1layhid1       1.243666180
Communication skill score.to.1layhid1   -0.524034687
Intercept.to.1layhid2                    3.808019964
professional knowledge.to.1layhid2      -0.058867076
Communication skill score.to.1layhid2   -0.036766001
Intercept.to.1layhid3                   -0.996257068
professional knowledge.to.1layhid3       1.787090472
Communication skill score.to.1layhid3    1.809864672
Intercept.to.achievement                 4.084755522
1layhid1.to.achievement                 -3.807969087
1layhid2.to.achievement                -11.531321534
1layhid3.to.achievement                  3.691783805
```

Rendering the neural network

Let's plot the neural network model.

```r
# Plot the fitted network: neurons, connections and their weights
plot(nn)
```

Create test dataset

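Create a test dataset of professional-knowledge and communication-skill scores:

```r
# Create the test set (illustrative scores, assumed; use your own values)
professional_knowledge <- c(30, 40, 85)
communication_score    <- c(85, 50, 40)
test <- data.frame(professional_knowledge, communication_score)
```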

Predict the results of the test set

The compute function is used to predict probability scores for the test data.

```r
## Predict with the neural network
Pred <- compute(nn, test)
Pred$net.result
```

```
0.9928202080 0.3335543925 0.9775153014
```
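compute() obtains these probabilities by running each row forward through the fitted weights. As a hedged illustration of what that involves, the sketch below repeats the calculation by hand with the weights printed in `$weights`, for the six training rows (whose fitted probabilities appear in `$net.result`) rather than the test rows. The training scores are an assumption: (20, 90), (10, 20), (30, 40), (20, 50), (80, 50), (30, 80) for professional knowledge and communication skill. With these values the hand-computed probabilities agree with the printed `$net.result` to about three decimal places.

```r
# Hand-computed forward pass using the weights printed in $weights.
# Assumption: the six training rows hold the scores listed in X below.
sigmoid <- function(z) 1 / (1 + exp(-z))

# Row 1 of each matrix is the intercept (bias), matching the result matrix labels
W1 <- matrix(c( 3.0583343,  3.80801996, -0.9962571,
                1.2436662, -0.05886708,  1.7870905,
               -0.5240347, -0.03676600,  1.8098647), nrow = 3, byrow = TRUE)
W2 <- matrix(c(4.084756, -3.807969, -11.531322, 3.691784), ncol = 1)

X <- cbind(c(20, 10, 30, 20, 80, 30),    # professional knowledge (assumed)
           c(90, 20, 40, 50, 50, 80))    # communication skill (assumed)

hidden <- sigmoid(cbind(1, X) %*% W1)    # 6 x 3 hidden-layer activations
prob   <- sigmoid(cbind(1, hidden) %*% W2)
print(round(prob, 4))
```

The same two matrix products, applied to the test rows, are what compute() returns in `net.result`.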

Now convert the probabilities into binary classes.

```r
# Convert the probabilities to binary classes using a threshold of 0.5
prob <- Pred$net.result
pred <- ifelse(prob > 0.5, 1, 0)
pred
```

```
1 0 1
```

The predicted classes are 1, 0 and 1.
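The `error` entry of the result matrix printed earlier can also be checked by hand: with the default error function ("sse"), it is the printed `err.fct`, 1/2 * (y - x)^2, summed over the training rows. This sketch takes the six fitted probabilities from the printed `$net.result` and assumes the training labels are 1, 0, 0, 0, 1, 1; with those labels the sum matches the reported 0.002168460.

```r
# Recompute the "error" entry of $result.matrix from the printed $net.result.
# Assumption: the six training labels are 1, 0, 0, 0, 1, 1.
err_fct <- function(x, y) 1/2 * (y - x)^2   # same form as the printed err.fct

net_result  <- c(0.980052980, 0.001292503, 0.032268860,
                 0.032437961, 0.963346989, 0.977629865)
achievement <- c(1, 0, 0, 0, 1, 1)

total_error <- sum(err_fct(net_result, achievement))
print(total_error)
```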

Advantages and disadvantages

Neural networks are flexible and can be used for both regression and classification problems. They are well suited to nonlinear datasets with a large number of inputs, such as images. They can use any number of inputs and layers and can perform computations in parallel.

Alternative algorithms exist, such as SVMs, decision trees, and regression models. These are simpler, faster, and easier to train, and they often perform better. Neural networks are more of a black box, and they need more development time and more computing power. Compared with other machine learning algorithms, neural networks also need more data. They accept only numeric inputs and datasets without missing values. A well-known neural network researcher said: "A neural network is the second best way to solve any problem. The best way is to actually understand the problem."

Applications of neural networks

These characteristics of neural networks make them useful in many applications, such as:

- Pattern recognition: neural networks are well suited to pattern recognition problems such as face recognition, object detection, and fingerprint recognition.

- Anomaly detection: neural networks are good at anomaly detection. They can readily detect unusual patterns that do not fit the normal ones.

- Time series prediction: neural networks can be used to forecast time series, such as stock prices and the weather.

- Natural language processing: neural networks are widely used in natural language processing tasks such as text classification, named entity recognition (NER), part-of-speech tagging, speech recognition, and spell checking.
