Compiling ORBSLAM-2 in ROS environment

ROS installation (Ubuntu 18.04)

ROS environment ready for installation

Construction of ORB-SLAM2 algorithm environment

- Create ROS workspace:

mkdir -p ~/catkin_ws/src cd ~/catkin_ws/ catkin_make mkdir ORB-SLAM2

- Add environment variable:

echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc source ~/.bashrc

- Install dependent libraries:

sudo apt-get install libblas-dev liblapack-dev

Compiling Pangolin:

sudo apt-get install libglew-dev sudo apt-get install libboost-dev libboost-thread-dev libboost-filesystem-dev sudo apt-get install libpython2.7-dev sudo apt-get install build-essential cd ~/catkin_ws/ORB-SLAM2 git clone https://github.com/stevenlovegrove/Pangolin.git cd Pangolin mkdir build cd build cmake -DCPP11_NO_BOOST = 1.. make

Download Eigen. Note that version 3.2.10:

mkdir build cd build cmake .. make sudo make install

Install OpenCV:

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev mkdir release cd release cmake -D CMAKE_BUILD_TYPE=RELEASE -D CMAKE_INSTALL_PREFIX=/usr/local .. make sudo make install

- Compile and install ORB_SLAM2:

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/(user)/catkin_ws/ORB-SLAM2/Examples/ROS

cd ORB_SLAM2 chmod +x build_ros.sh ./build_ros.sh

Error: for the undefined error of uspleep() function - > solution, add:

#include <unistd.h> #include <stdio.h> #include <stdlib.h>

- Using usb camera in ROS:

cd catkin_ws/src git clone https://github.com/bosch-ros-pkg/usb_cam.git cd .. catkin_make mkdir build cd build cmake .. make Find a replacement camera: ls /dev/video* cd /home/pan/catkin_ws/src/usb_cam cd launch gedit usb_cam-test.launch

Modify / dev/videoX to support cameras

8. Operation instruction:

roscore roslaunch usb_cam usb_cam-test.launch rosrun ORB_SLAM2 Mono /home/(user)/catkin_ws/src/ORB_SLAM2/Vocabulary/ORBvoc.txt /home/(user)/catkin_ws/src/ORB_SLAM2/Examples/Monocular/MyCam.yaml

Runtong Android client

Android client open source code

Android client source code: Github

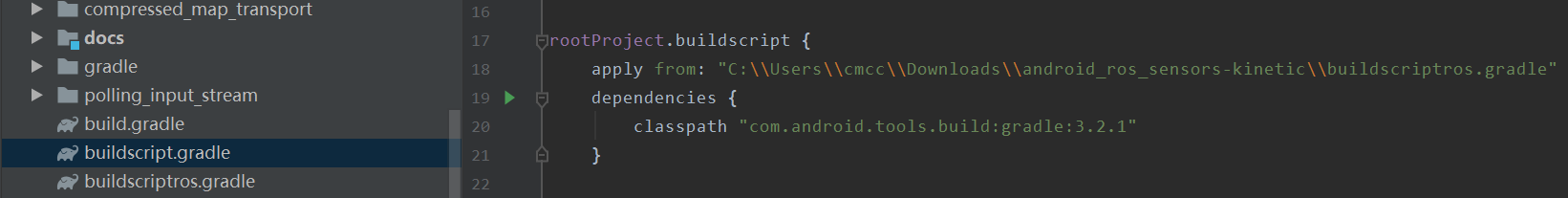

Modify the address of build

PC receiving side code: Github

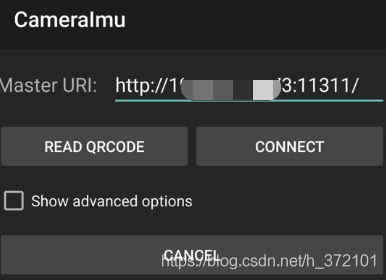

Ensure that the client and PC are in the same LAN, and modify the client connection ip address

How does RVIZ receive IMU and image data

- Receive IMU data:

Ubuntu installs IMU-TOOLS with the same version as ROS

sudo apt-get install ros-melodic-imu-tools perhaps sudo apt-get install ros-kinetic-imu-tools

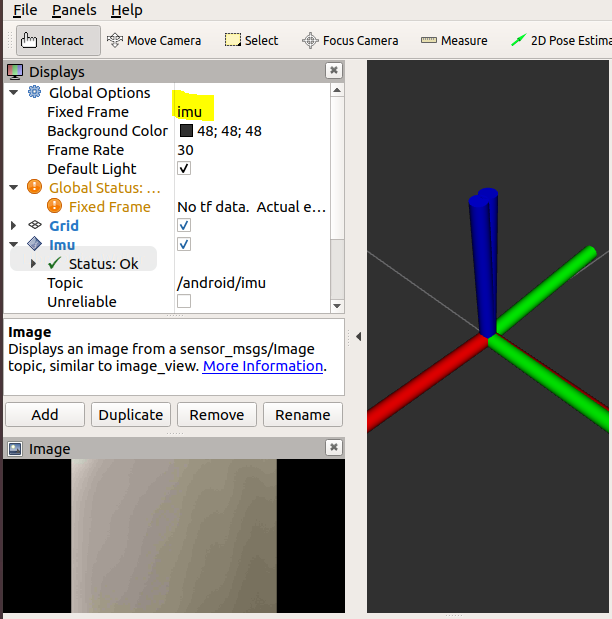

Command on RIVZ Select receive after Add - By topic - add to imu take topic be elected/android/imu And in Global Options -> Fix Frame Lieutenant general map Change to imu.

Reference operation:

Debug rviz and solve the problem "For frame [laser]: Fixed Frame [map] does not exist"!

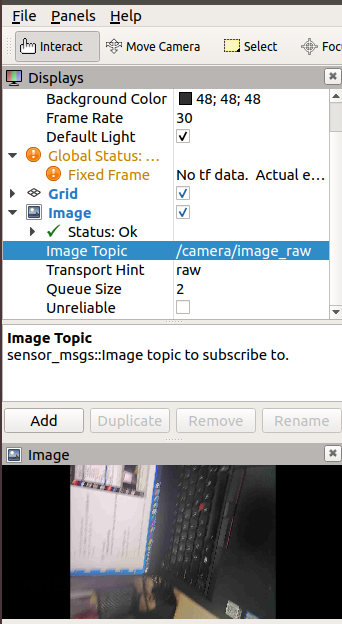

- Receive image data

First install the corresponding version of ROS accessories:

sudo apt-get install ros-indigo-image-view ros-indigo-rqt-image-view ros-indigo-image-transport-plugins perhaps sudo apt-get install ros-melodic-image-view ros-melodic-rqt-image-view ros-melodic-image-transport-plugins

Start roscore at Terminal and view the existing subscription topics through the rostopic list

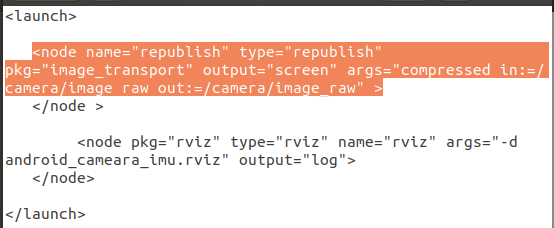

The default image transmission is compressed, so you need to convert the image to raw format

cd to Android_ Find Android in the camera IMU master folder_ Cam-imu.launch file, modify the subscription node as follows

Input instruction in Terminal: roslaunch android_cam-imu.launch

Add topic - > Image - > Image topic is set to / camera/image_raw

Camera calibration of Android camera

github source code

Instructions for camera calibration module

1. Input cheese in DASH, turn on the camera, shoot 10 ~ 20 calibrated chessboards from all angles, and be sure to change the camera attitude to reduce errors.

2. Copy the pictures taken by ccheese to camera_ In the calibration directory, be careful not to appear (. jpg named, which is a natural number, otherwise the picture may be overwritten).

3. Open the terminal and execute. / rename.sh to uniformly number the picture names into natural number sequences.

4. Execute. / camera at the terminal_ calibration boardWidth boardHeight squareSize frameNumber

boardWidth: the number of horizontal corners of the chessboard, such as 9

boardHeight: the number of vertical corner points of chessboard, such as 6

squareSize: the actual side length of each grid (required to be square) in the chessboard, unit: mm, such as 25

frameNumber: the number of pictures to be calculated, for example: 17

Sending and receiving data based on ROS protocol

Reference article: Know

On Android_ tutorial_ camera_ Talker and Listener Class are added under IMU project:

Listener.java listens for rmytopic

package org.ros.android.android_tutorial_camera_imu;

//import org.apache.commons.logging.Log;

import android.util.Log;

import org.ros.message.MessageListener;

import org.ros.namespace.GraphName;

import org.ros.node.AbstractNodeMain;

import org.ros.node.ConnectedNode;

import org.ros.node.NodeMain;

import org.ros.node.topic.Subscriber;

public class Listener extends AbstractNodeMain {

@Override

public GraphName getDefaultNodeName() {

return GraphName.of("rosjava_tutorial_pubsub/listener");

}

public void onStart(ConnectedNode connectedNode)

{

//final Log log = connectedNode.getLog();

Log.d("listener", "Node Log "+ connectedNode.getLog() + " ");

Subscriber<std_msgs.String> subscriber = connectedNode.newSubscriber("rmytopic", std_msgs.String._TYPE);

subscriber.addMessageListener(new MessageListener<std_msgs.String>() {

@Override

public void onNewMessage(std_msgs.String message) {

Log.d("listener", "I heard: \"" + message.getData() + "\"");

}

});

}

}

Talker.java to chatter

package org.ros.android.android_tutorial_camera_imu;

import org.ros.concurrent.CancellableLoop;

import org.ros.namespace.GraphName;

import org.ros.node.AbstractNodeMain;

import org.ros.node.ConnectedNode;

import org.ros.node.NodeMain;

import org.ros.node.topic.Publisher;

/**

* A simple {@link Publisher} {@link NodeMain}.

*

* @author damonkohler@google.com (Damon Kohler)

*/

public class Talker extends AbstractNodeMain {

@Override

public GraphName getDefaultNodeName() {

return GraphName.of("rosjava_tutorial_pubsub/talker");

}

@Override

public void onStart(final ConnectedNode connectedNode) {

final Publisher<std_msgs.String> publisher =

connectedNode.newPublisher("chatter", std_msgs.String._TYPE);

// This CancellableLoop will be canceled automatically when the node shuts

// down.

connectedNode.executeCancellableLoop(new CancellableLoop() {

@Override

protected void setup() {

}

@Override

public void loop() throws InterruptedException {

std_msgs.String str = publisher.newMessage();

str.setData("Hello ROS from client");

publisher.publish(str);

Thread.sleep(25000);

}

});

}

}

Modify MainActivity.java

/*

* Copyright (C) 2011 Google Inc.

*

* Licensed under the Apache License, Version 2.0 (the "License"); you may not

* use this file except in compliance with the License. You may obtain a copy of

* the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

* WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the

* License for the specific language governing permissions and limitations under

* the License.

*/

package org.ros.android.android_tutorial_camera_imu;

import android.Manifest;

import android.annotation.TargetApi;

import android.content.Context;

import android.content.pm.PackageManager;

import android.hardware.Camera;

import android.hardware.SensorManager;

import android.location.LocationManager;

import android.os.Build;

import android.os.Bundle;

import android.support.v4.app.ActivityCompat;

import android.util.Log;

import android.view.MotionEvent;

import android.view.Window;

import android.view.WindowManager;

import android.widget.Toast;

import org.ros.address.InetAddressFactory;

import org.ros.android.RosActivity;

import org.ros.android.view.camera.RosCameraPreviewView;

import org.ros.node.NodeConfiguration;

import org.ros.node.NodeMainExecutor;

import java.util.List;

import org.ros.android.MessageCallable;

import org.ros.android.view.RosTextView;

import org.ros.android.android_tutorial_camera_imu.Talker;

/**

* @author ethan.rublee@gmail.com (Ethan Rublee)

* @author damonkohler@google.com (Damon Kohler)

* @author huaibovip@gmail.com (Charles)

*/

public class MainActivity extends RosActivity {

private int cameraId = 0;

private RosCameraPreviewView rosCameraPreviewView;

private NavSatFixPublisher fix_pub;

private ImuPublisher imu_pub;

private NodeMainExecutor nodeMainExecutor;

private LocationManager mLocationManager;

private SensorManager mSensorManager;

private RosTextView<std_msgs.String> rosTextView;

private Talker talker;

private Listener listener;

public MainActivity() {

super("ROS", "Camera & Imu");

}

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

requestWindowFeature(Window.FEATURE_NO_TITLE);

getWindow().addFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN);

setContentView(R.layout.activity_main);

rosCameraPreviewView = findViewById(R.id.ros_camera_preview_view);

mLocationManager = (LocationManager) this.getSystemService(Context.LOCATION_SERVICE);

mSensorManager = (SensorManager) this.getSystemService(SENSOR_SERVICE);

rosTextView = (RosTextView<std_msgs.String>)findViewById(R.id.text);

rosTextView.setTopicName("rmytopic");

rosTextView.setMessageType(std_msgs.String._TYPE);

rosTextView.setMessageToStringCallable(new MessageCallable<String, std_msgs.String>() {

@Override

public String call(std_msgs.String message) {

return message.getData();

}

});

}

@Override

public boolean onTouchEvent(MotionEvent event) {

if (Build.VERSION.SDK_INT < Build.VERSION_CODES.P) {

if (event.getAction() == MotionEvent.ACTION_UP) {

int numberOfCameras = Camera.getNumberOfCameras();

final Toast toast;

if (numberOfCameras > 1) {

cameraId = (cameraId + 1) % numberOfCameras;

rosCameraPreviewView.releaseCamera();

rosCameraPreviewView.setCamera(getCamera());

toast = Toast.makeText(this, "Switching cameras.", Toast.LENGTH_SHORT);

} else {

toast = Toast.makeText(this, "No alternative cameras to switch to.", Toast.LENGTH_SHORT);

}

runOnUiThread(new Runnable() {

@Override

public void run() {

toast.show();

}

});

}

}

return true;

}

@Override @TargetApi(Build.VERSION_CODES.ICE_CREAM_SANDWICH_MR1) //API = 15

protected void init(NodeMainExecutor nodeMainExecutor) {

this.nodeMainExecutor = nodeMainExecutor;

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.M) {

String[] PERMISSIONS = {"", "", "", ""};

PERMISSIONS[0] = Manifest.permission.ACCESS_FINE_LOCATION;

PERMISSIONS[1] = Manifest.permission.CAMERA;

PERMISSIONS[2] = Manifest.permission.READ_EXTERNAL_STORAGE;

PERMISSIONS[3] = Manifest.permission.WRITE_EXTERNAL_STORAGE;

ActivityCompat.requestPermissions(this, PERMISSIONS, 0);

}else {

NodeConfiguration nodeConfiguration1 = NodeConfiguration.newPublic(InetAddressFactory.newNonLoopback().getHostAddress());

nodeConfiguration1.setMasterUri(getMasterUri());

nodeConfiguration1.setNodeName("android_sensors_driver_nav_sat_fix");

this.fix_pub = new NavSatFixPublisher(mLocationManager);

nodeMainExecutor.execute(this.fix_pub, nodeConfiguration1);

rosCameraPreviewView.setCamera(getCamera());

NodeConfiguration nodeConfiguration2 = NodeConfiguration.newPublic(InetAddressFactory.newNonLoopback().getHostAddress());

nodeConfiguration2.setMasterUri(getMasterUri());

nodeConfiguration2.setNodeName("android_sensors_driver_camera");

nodeMainExecutor.execute(this.rosCameraPreviewView, nodeConfiguration2);

}

NodeConfiguration nodeConfiguration3 = NodeConfiguration.newPublic(InetAddressFactory.newNonLoopback().getHostAddress());

nodeConfiguration3.setMasterUri(getMasterUri());

nodeConfiguration3.setNodeName("android_sensors_driver_imu");

this.imu_pub = new ImuPublisher(mSensorManager);

nodeMainExecutor.execute(this.imu_pub, nodeConfiguration3);

talker = new Talker();

listener = new Listener();

NodeConfiguration nodeConfiguration4 = NodeConfiguration.newPublic(InetAddressFactory.newNonLoopback().getHostAddress());

nodeConfiguration4.setMasterUri(getMasterUri());

NodeConfiguration nodeConfiguration5 = NodeConfiguration.newPublic(InetAddressFactory.newNonLoopback().getHostAddress());

nodeConfiguration5.setMasterUri(getMasterUri());

nodeMainExecutor.execute(talker, nodeConfiguration4);

nodeMainExecutor.execute(listener,nodeConfiguration5);

nodeMainExecutor.execute(rosTextView,nodeConfiguration4);

}

private void executeGPS() {

NodeConfiguration nodeConfiguration1 = NodeConfiguration.newPublic(InetAddressFactory.newNonLoopback().getHostAddress());

nodeConfiguration1.setMasterUri(getMasterUri());

nodeConfiguration1.setNodeName("android_sensors_driver_nav_sat_fix");

this.fix_pub = new NavSatFixPublisher(mLocationManager);

nodeMainExecutor.execute(this.fix_pub, nodeConfiguration1);

}

private void executeCamera() {

rosCameraPreviewView.setCamera(getCamera());

NodeConfiguration nodeConfiguration2 = NodeConfiguration.newPublic(InetAddressFactory.newNonLoopback().getHostAddress());

nodeConfiguration2.setMasterUri(getMasterUri());

nodeConfiguration2.setNodeName("android_sensors_driver_camera");

nodeMainExecutor.execute(this.rosCameraPreviewView, nodeConfiguration2);

}

private Camera getCamera() {

Camera cam = Camera.open(cameraId);

cam.stopPreview();

Camera.Parameters camParams = cam.getParameters();

camParams.setPreviewSize(720,480);

camParams.setPictureSize(720,480);

List<Camera.Size> supportedPreviewSizes = cam.getParameters().getSupportedPreviewSizes();

List<Camera.Size> pictureSizes = cam.getParameters().getSupportedPictureSizes();

int length = pictureSizes.size();

for (int i = 0; i < length; i++) {

Log.d("SupportedSizes","SupportedPictureSizes : " + pictureSizes.get(i).width + "x" + pictureSizes.get(i).height);

}

length = supportedPreviewSizes.size();

for (int i = 0; i < length; i++) {

Log.d("SupportedSizes","SupportedPreviewSizes : " + supportedPreviewSizes.get(i).width + "x" + supportedPreviewSizes.get(i).height);

}

//Set pixel

//camParams.setPreviewSize(1280,720);

//camParams.setPictureSize(1280,720);

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.ICE_CREAM_SANDWICH) {

if (camParams.getSupportedFocusModes().contains(

Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO)) {

camParams.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO);

} else {

camParams.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE);

}

}

cam.setParameters(camParams);

cam.startPreview();

return cam;

}

@Override

public void onRequestPermissionsResult(int requestCode, String[] permissions, int[] grantResults) {

// If request is cancelled, the result arrays are empty.

if (requestCode == 0) {

if (grantResults.length > 0 && grantResults[0] == PackageManager.PERMISSION_GRANTED) {

// permission was granted, yay! Do the

executeGPS();

}

if (grantResults.length > 1 && grantResults[1] == PackageManager.PERMISSION_GRANTED) {

// permission was granted, yay! Do the

executeCamera();

}

if (grantResults.length > 2 && grantResults[2] == PackageManager.PERMISSION_GRANTED &&

grantResults[3] == PackageManager.PERMISSION_GRANTED) {

// permission was granted, yay! Do the

}

}

}

}

PC terminal side

Instruction review: ROSwiki

Check information: rostopic echo /chatter[topicName] Check the sending rate: rostopic hz /topic_name Send data: rostopic pub my_topic std_msgs/String "hello there"

Problems encountered:

When viewing the rosgraph on the pc side, all connections are normal. When viewing the topics published by android ros node, messages are also output. However, when publishing messages to the topics subscribed by android ros node through the rostopic pub command on the pc side, debugging shows that the android side cannot receive this message. The problem is that ROS is not set on the pc side_ IP environment variable

That is, ROS needs to be set in advance in each terminal running node on the pc side_ The IP environment variable is the IP address of the pc

export ROS_IP=[your pc ip]

How to Reszie receive image size

- Add node to orb_ In slam2

The orb in the workspace_ SLAM2/src/ORB_ SLAM2/Examples/ROS/ORB_ ROS in slam2 / SRC_ mono.cc

ros::Subscriber sub = nodeHandler.subscribe("/camera/image_raw", 1, &ImageGrabber::GrabImage,&igb);

The image called here is the original size of the camera, and the image size needs to be resized(),

Recompile ORBSLAM2, modify the internal parameters of Android camera to yaml file, and run the command:

Enter ROS_ mono.cc ->

- Add opencv domain: using namespace cv;

- void ImageGrabber::GrabImage() function, first convert the ROS image to CV format, and then use opencv Resize() function to modify the size again:

void ImageGrabber::GrabImage(const sensor_msgs::ImageConstPtr& msg)

{

// Copy the ros image message to cv::Mat.

cv_bridge::CvImageConstPtr cv_ptr;

cv::Mat img;

cv::Mat img_resize;

try

{

cv_ptr = cv_bridge::toCvShare(msg);

img = cv_ptr->image;

resize(img, img_resize, cv::Size(720, 480));

//circle(img,cvPoint(150,100),10,CV_RGB(0,255,255),2,8,0);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

//mpSLAM->TrackMonocular(cv_ptr->image,cv_ptr->header.stamp.toSec());

mpSLAM->TrackMonocular(img_resize,cv_ptr->header.stamp.toSec());

}

Finally, recompile the file and run the instruction again:

rosrun ORB_SLAM2 Mono '/home/xujiayi/Desktop/ORBvoc.bin' '/home/xujiayi/catkin_ws/ORB_SLAM2/ORB_SLAM2-master/Examples/ROS/ORB_SLAM2/Asus.yaml'