Source code for this article: GitHub || GitEE

1. Introduction to the Framework

1. Basic Introduction

Zookeeper is a coordination component designed around the observer pattern. In a distributed system it is used for unified naming services, unified configuration management, unified cluster management, bringing server nodes online and offline dynamically, soft load balancing, and similar scenarios.

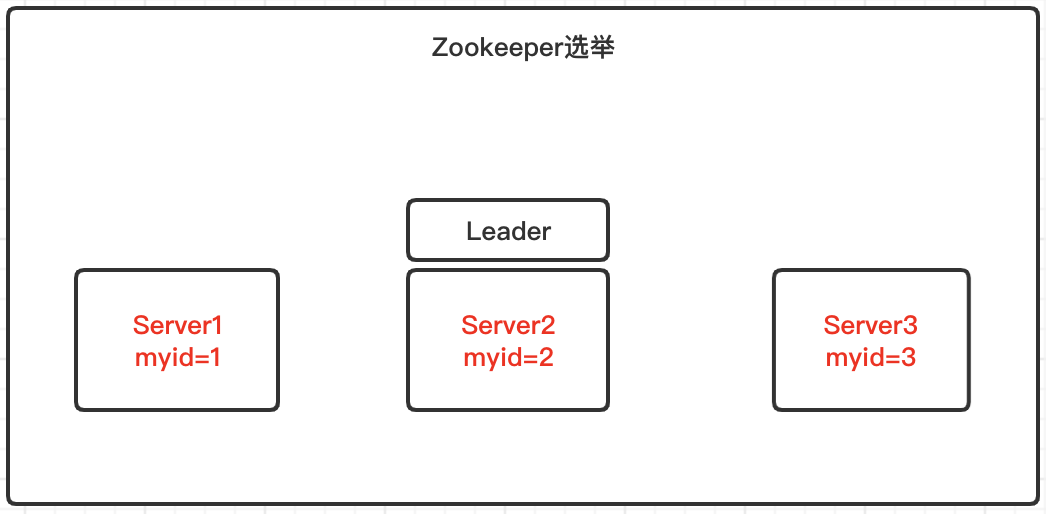

2. Cluster Elections

The Zookeeper cluster works on a majority (quorum) mechanism: the cluster is available as long as more than half of its machines are alive. For this reason, the cluster should be deployed on an odd number of servers. The configuration file does not designate a master or a slave. While Zookeeper runs, one node acts as Leader and the others as Followers, and these roles are assigned dynamically by an internal election.
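The majority rule explains the odd-number recommendation. The sketch below computes the quorum size and the number of tolerated failures for a few cluster sizes. `QuorumMath` is an illustrative name, not part of Zookeeper.

```java
public class QuorumMath {

    // Smallest number of servers that is strictly more than half of n
    static int quorum(int n) {
        return n / 2 + 1;
    }

    // Servers that may fail while the survivors still form a majority
    static int toleratedFailures(int n) {
        return n - quorum(n);
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 6; n++) {
            System.out.printf("servers=%d quorum=%d tolerated failures=%d%n",
                    n, quorum(n), toleratedFailures(n));
        }
    }
}
```

Clusters of 3 and 4 servers both survive only one failure, and 5 and 6 both survive two. An even-numbered server therefore adds cost without adding fault tolerance.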

Basic description

Suppose a Zookeeper cluster has three servers with myid 1 to 3, started one after another. Server 2 will be elected Leader, as follows:

Server 1 starts and runs an election, voting for itself. One vote is not more than half of the cluster (at least 2 votes are needed), so the election cannot complete and server 1 stays in the LOOKING state;

Server 2 starts and another election runs. Servers 1 and 2 each vote for themselves and exchange votes. On a fresh start both have the same data, so the vote with the larger myid wins, and server 1 changes its vote to server 2. Server 1 now has 0 votes and server 2 has 2 votes, which is more than half. The election completes: server 1 becomes a Follower, server 2 becomes the Leader, and the cluster is available. When server 3 starts, a Leader already exists, so it joins directly as a Follower.
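The walkthrough can be modeled in a few lines. This is a deliberately simplified sketch, not Zookeeper's FastLeaderElection code, and it rests on three assumptions:

- every running server sees every other server's vote immediately;
- the election epoch is ignored;
- votes are compared by zxid (how recent a server's data is) first and myid second.

`ElectionSketch` and `electOnSequentialStart` are illustrative names.

```java
import java.util.ArrayList;
import java.util.List;

public class ElectionSketch {

    // A proposal for who should lead; this model ignores the election epoch
    static final class Vote {
        final long zxid; // last transaction id seen by the proposed server
        final int myid;

        Vote(long zxid, int myid) {
            this.zxid = zxid;
            this.myid = myid;
        }

        // More recent data (higher zxid) wins; on a tie, the larger myid wins
        boolean beats(Vote other) {
            if (zxid != other.zxid) {
                return zxid > other.zxid;
            }
            return myid > other.myid;
        }
    }

    // Starts servers with myid 1..n in order and returns the first elected leader,
    // or -1 if no proposal ever gathers more than half of the configured servers
    static int electOnSequentialStart(long[] zxids) {
        int clusterSize = zxids.length;
        List<Vote> running = new ArrayList<>();
        for (int i = 0; i < clusterSize; i++) {
            // The newly started server first votes for itself
            running.add(new Vote(zxids[i], i + 1));
            // After exchanging votes, every running server adopts the best proposal
            Vote best = running.get(0);
            for (Vote v : running) {
                if (v.beats(best)) {
                    best = v;
                }
            }
            // All running servers now vote for best: is that a strict majority?
            if (running.size() > clusterSize / 2) {
                return best.myid;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        // Fresh three-node cluster: equal zxids, so myid decides
        System.out.println("leader: " + electOnSequentialStart(new long[] {0, 0, 0}));
    }
}
```

With three fresh servers this prints `leader: 2`, matching the walkthrough. With five fresh servers the model picks server 3, the first server whose start gives a majority.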

2. Cluster Configuration

1. Create a configuration directory

    # mkdir -p /data/zookeeper/data
    # mkdir -p /data/zookeeper/logs

2. Basic Configuration

    # vim /opt/zookeeper-3.4.14/conf/zoo.cfg

    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/data/zookeeper/data
    dataLogDir=/data/zookeeper/logs
    clientPort=2181
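tickTime is the base time unit in milliseconds. initLimit and syncLimit are counted in ticks. By default, the server also keeps client session timeouts between 2 and 20 ticks. The sketch below reads the configuration above and turns those values into milliseconds. `ZooCfgTimeouts` and its methods are hypothetical helpers, and the session bounds assume minSessionTimeout and maxSessionTimeout are not set.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class ZooCfgTimeouts {

    // zoo.cfg uses key=value lines, so java.util.Properties can read it
    static Properties load(String text) {
        Properties cfg = new Properties();
        try {
            cfg.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return cfg;
    }

    static long longValue(Properties cfg, String key) {
        return Long.parseLong(cfg.getProperty(key).trim());
    }

    // initLimit: time a Follower may take to connect to and sync with the Leader
    static long initLimitMs(Properties cfg) {
        return longValue(cfg, "tickTime") * longValue(cfg, "initLimit");
    }

    // syncLimit: how far a Follower may fall behind the Leader before it is dropped
    static long syncLimitMs(Properties cfg) {
        return longValue(cfg, "tickTime") * longValue(cfg, "syncLimit");
    }

    // Default bounds: a requested session timeout is clamped to [2, 20] x tickTime
    static long negotiatedSessionTimeoutMs(Properties cfg, long requestedMs) {
        long tick = longValue(cfg, "tickTime");
        return Math.max(2 * tick, Math.min(20 * tick, requestedMs));
    }

    public static void main(String[] args) {
        Properties cfg = load(String.join("\n",
                "tickTime=2000", "initLimit=10", "syncLimit=5",
                "dataDir=/data/zookeeper/data", "dataLogDir=/data/zookeeper/logs",
                "clientPort=2181"));
        System.out.println("initLimit ms: " + initLimitMs(cfg));
        System.out.println("syncLimit ms: " + syncLimitMs(cfg));
        System.out.println("session timeout for a 3000 ms request: "
                + negotiatedSessionTimeoutMs(cfg, 3000));
    }
}
```

With these values, Followers get 20 seconds to sync at startup and may lag by up to 10 seconds. The 3000 ms sessionTimeout requested by the Java clients in section 3 would be raised to 4000 ms.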

3. Node ID configuration (myid)

# vim /data/zookeeper/data/myid

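Create this file on each of the three nodes and write that node's ID into it: 1, 2 and 3 respectively. The number must match the N of that node's server.N entry in zoo.cfg.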

4. Cluster Services

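Add the following entries to the zoo.cfg file on every node. In server.N=host:2888:3888, N is the node's myid. Port 2888 is used by Followers to communicate with the Leader, and port 3888 is used for leader election:

    server.1=192.168.72.133:2888:3888
    server.2=192.168.72.136:2888:3888
    server.3=192.168.72.137:2888:3888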
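Each node uses the number in its myid file to find its own server.N entry, and the other entries to reach its peers. The sketch below models that lookup. `ServerEntries`, `parse` and `self` are hypothetical names, and only the three-field host:port:port form used in this article is handled.

```java
import java.util.Map;
import java.util.TreeMap;

public class ServerEntries {

    // One "server.N=host:peerPort:electionPort" line from zoo.cfg
    static final class ServerEntry {
        final int myid;
        final String host;
        final int peerPort;     // Followers connect to the Leader here (2888 above)
        final int electionPort; // leader election traffic (3888 above)

        ServerEntry(int myid, String host, int peerPort, int electionPort) {
            this.myid = myid;
            this.host = host;
            this.peerPort = peerPort;
            this.electionPort = electionPort;
        }
    }

    // Collect every server.N entry; all other zoo.cfg keys are ignored
    static Map<Integer, ServerEntry> parse(String cfgText) {
        Map<Integer, ServerEntry> servers = new TreeMap<>();
        for (String raw : cfgText.split("\\R")) {
            String line = raw.trim();
            if (!line.startsWith("server.")) {
                continue;
            }
            int eq = line.indexOf('=');
            int myid = Integer.parseInt(line.substring("server.".length(), eq).trim());
            String[] parts = line.substring(eq + 1).trim().split(":");
            servers.put(myid, new ServerEntry(myid, parts[0],
                    Integer.parseInt(parts[1]), Integer.parseInt(parts[2])));
        }
        return servers;
    }

    // Find this node's own entry using the content of its myid file
    static ServerEntry self(Map<Integer, ServerEntry> servers, String myidFileContent) {
        ServerEntry entry = servers.get(Integer.parseInt(myidFileContent.trim()));
        if (entry == null) {
            throw new IllegalStateException("no server." + myidFileContent.trim() + " entry");
        }
        return entry;
    }

    public static void main(String[] args) {
        Map<Integer, ServerEntry> servers = parse(String.join("\n",
                "server.1=192.168.72.133:2888:3888",
                "server.2=192.168.72.136:2888:3888",
                "server.3=192.168.72.137:2888:3888"));
        ServerEntry me = self(servers, "2\n");
        System.out.println("servers: " + servers.size());
        System.out.println("myid 2 -> " + me.host + ", peer port " + me.peerPort
                + ", election port " + me.electionPort);
    }
}
```

A myid that has no matching server.N line is rejected here. This is why the myid files and the server list have to be kept consistent.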

5. Start Cluster

Start the Zookeeper service on each of the three nodes:

    [zookeeper-3.4.14]# bin/zkServer.sh start
    Starting zookeeper ... STARTED

6. View cluster status

Mode: leader marks the Leader (master) node.

Mode: follower marks a Follower (slave) node.

    [zookeeper-3.4.14]# bin/zkServer.sh status
    Mode: leader

7. Cluster State Test

Connect a client to any one node, create a test node, and then read it from another node:

    [zookeeper-3.4.14 bin]# ./zkCli.sh
    [zk: 0] create /node-test01 node-test01
    Created /node-test01
    [zk: 1] get /node-test01

Alternatively, stop the Leader node:

[zookeeper-3.4.14 bin]# ./zkServer.sh stop

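The remaining two nodes still form a majority, so they elect a new Leader. Run zkServer.sh status on them to see which node took over.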

8. Unified access through Nginx

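Nginx's stream module can proxy plain TCP, so clients can use a single address that forwards to the three client ports. The third upstream entry must point at 192.168.72.137; listing 192.168.72.136 twice would leave one node unused:

    [nginx-1.15.2 conf]# vim nginx.conf

    # Requires nginx built with the stream module (--with-stream)
    stream {
        upstream zkcluster {
            server 192.168.72.133:2181;
            server 192.168.72.136:2181;
            server 192.168.72.137:2181;
        }
        server {
            listen 2181;
            proxy_pass zkcluster;
        }
    }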
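With no balancing directive, nginx distributes connections across an upstream group with (weighted) round-robin. With equal weights, as here, each new TCP connection goes to the next server in turn. The sketch below models only that plain rotation: weights, max_fails and health tracking are left out. `RoundRobinUpstream` is an illustrative name.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

public class RoundRobinUpstream {

    private final List<String> servers;
    private final AtomicInteger next = new AtomicInteger();

    RoundRobinUpstream(List<String> servers) {
        this.servers = List.copyOf(servers);
    }

    // Choose the server for a new connection; floorMod keeps the index valid
    // even after the counter overflows
    String pick() {
        return servers.get(Math.floorMod(next.getAndIncrement(), servers.size()));
    }

    public static void main(String[] args) {
        RoundRobinUpstream zkcluster = new RoundRobinUpstream(List.of(
                "192.168.72.133:2181", "192.168.72.136:2181", "192.168.72.137:2181"));
        for (int i = 0; i < 4; i++) {
            System.out.println("connection " + i + " -> " + zkcluster.pick());
        }
    }
}
```

A Zookeeper client keeps one long-lived TCP connection per session, so balancing happens once per session, not once per request.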

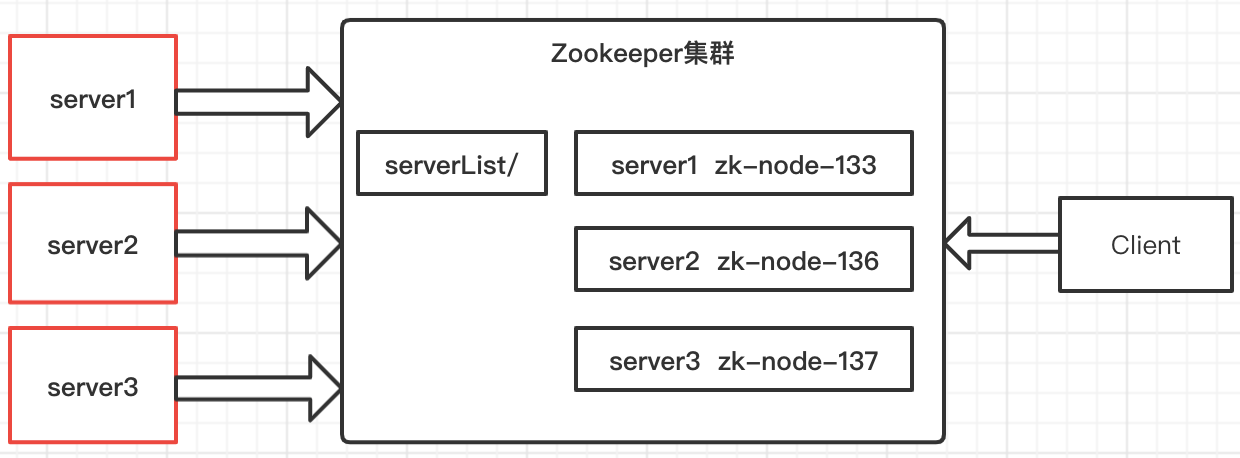

3. Service Node Monitoring

1. Basic Principles

In a distributed system there can be several master nodes that go online and offline dynamically. Every client should be able to detect, in real time, when a master server comes online or goes offline.

Process description:

- Start the Zookeeper cluster;

- RegisterServer simulates server-side registration;

- ClientServer simulates a client listening for changes;

- Run the server registration three times, registering a different zk-node service each time;

- Shut down the registered servers one by one to simulate services going offline;

- Watch the client log to see how the list of service nodes changes.

First, create a persistent node /serverList to hold the list of servers:

[zk: 0] create /serverList "serverList"

2. Service-side Registration

    package com.zkper.cluster.monitor;

    import java.io.IOException;
    import org.apache.zookeeper.CreateMode;
    import org.apache.zookeeper.WatchedEvent;
    import org.apache.zookeeper.Watcher;
    import org.apache.zookeeper.ZooDefs.Ids;
    import org.apache.zookeeper.ZooKeeper;

    public class RegisterServer {

        private ZooKeeper zk;
        // Client ports of the three cluster nodes configured above
        private static final String connectString =
                "192.168.72.133:2181,192.168.72.136:2181,192.168.72.137:2181";
        private static final int sessionTimeout = 3000;
        private static final String parentNode = "/serverList";

        // Open a session; the registering server does not need to react to events
        private void getConnect() throws IOException {
            zk = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
                @Override
                public void process(WatchedEvent event) {
                }
            });
        }

        // Register as an ephemeral sequential child of /serverList. The node is
        // removed when this session ends, which is how clients see the server go offline.
        private void registerServer(String nodeName) throws Exception {
            String create = zk.create(parentNode + "/server", nodeName.getBytes(),
                    Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);
            System.out.println(nodeName + " is online: " + create);
        }

        // Keep the process, and therefore the session, alive
        private void working() throws Exception {
            Thread.sleep(Long.MAX_VALUE);
        }

        public static void main(String[] args) throws Exception {
            RegisterServer server = new RegisterServer();
            server.getConnect();
            // Run this class three times, enabling a different node name each time,
            // then stop the processes one by one and watch the client output
            // server.registerServer("zk-node-133");
            // server.registerServer("zk-node-136");
            server.registerServer("zk-node-137");
            server.working();
        }
    }
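With CreateMode.EPHEMERAL_SEQUENTIAL, Zookeeper appends a 10-digit, zero-padded sequence number to the requested path, so the registrations appear under /serverList as server0000000000 and so on. An ephemeral node is deleted when its owning session ends. The in-memory model below illustrates both rules and makes two simplifying assumptions:

- It uses a plain per-parent counter. The real server derives the number from the parent node's state, so the actual values may differ.
- It treats the end of a session as instant. A killed process's session only expires after the session timeout.

`EphemeralSequentialModel` is a hypothetical class, not part of the Zookeeper API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class EphemeralSequentialModel {

    // node path -> id of the session that created it
    private final Map<String, Long> nodes = new TreeMap<>();
    // next sequence number for each parent path (simplified counter)
    private final Map<String, Integer> counters = new HashMap<>();

    // Append a 10-digit, zero-padded sequence number to the requested path
    String createEphemeralSequential(String pathPrefix, long sessionId) {
        String parent = pathPrefix.substring(0, pathPrefix.lastIndexOf('/'));
        int seq = counters.merge(parent, 1, Integer::sum) - 1;
        String path = pathPrefix + String.format("%010d", seq);
        nodes.put(path, sessionId);
        return path;
    }

    // Ending a session removes every ephemeral node it created
    void closeSession(long sessionId) {
        nodes.values().removeIf(owner -> owner == sessionId);
    }

    List<String> paths() {
        return new ArrayList<>(nodes.keySet());
    }

    public static void main(String[] args) {
        EphemeralSequentialModel zk = new EphemeralSequentialModel();
        // Three RegisterServer runs, each with its own session
        for (long session = 1; session <= 3; session++) {
            System.out.println("online: "
                    + zk.createEphemeralSequential("/serverList/server", session));
        }
        zk.closeSession(2); // stop the second RegisterServer process
        System.out.println("remaining: " + zk.paths());
    }
}
```

After the second session closes, only /serverList/server0000000000 and /serverList/server0000000002 remain.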

3. Client Monitoring

    package com.zkper.cluster.monitor;

    import org.apache.zookeeper.*;
    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;

    public class ClientServer {

        private ZooKeeper zk;
        // Client ports of the three cluster nodes configured above
        private static final String connectString =
                "192.168.72.133:2181,192.168.72.136:2181,192.168.72.137:2181";
        private static final int sessionTimeout = 3000;
        private static final String parentNode = "/serverList";

        private void getConnect() throws IOException {
            zk = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
                @Override
                public void process(WatchedEvent event) {
                    try {
                        // Called for connection events and when the child watch fires;
                        // reloading the list also re-registers the one-time watch
                        getServerList();
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }
            });
        }

        // Read the children of /serverList and the server name stored in each.
        // Passing true sets a child watch that uses the default watcher above.
        private void getServerList() throws Exception {
            List<String> children = zk.getChildren(parentNode, true);
            List<String> servers = new ArrayList<>();
            for (String child : children) {
                try {
                    byte[] data = zk.getData(parentNode + "/" + child, false, null);
                    servers.add(new String(data));
                } catch (KeeperException.NoNodeException e) {
                    // The server went offline between getChildren and getData; skip it
                }
            }
            System.out.println("Current service list: " + servers);
        }

        private void working() throws Exception {
            Thread.sleep(Long.MAX_VALUE);
        }

        public static void main(String[] args) throws Exception {
            ClientServer client = new ClientServer();
            client.getConnect();
            client.getServerList();
            client.working();
        }
    }
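In Zookeeper 3.4, a watch set by getChildren fires once and is then removed. This is why getServerList passes true on every call: each reload arms the watch again for the next change.

The in-memory model below compares a client that re-arms its watch with one that does not. `OneShotWatchModel` and `ListingClient` are hypothetical classes. To keep the model short, children are named by server name rather than by the sequential node names Zookeeper actually creates.

```java
import java.util.ArrayList;
import java.util.List;

public class OneShotWatchModel {

    private final List<String> children = new ArrayList<>();
    private final List<Runnable> childWatches = new ArrayList<>();

    // Stand-in for getChildren(path, watch): return the children and, if a
    // watcher is given, arm it for the next change only
    List<String> getChildren(Runnable watcher) {
        if (watcher != null) {
            childWatches.add(watcher);
        }
        return new ArrayList<>(children);
    }

    void addChild(String name) {
        children.add(name);
        fireChildWatches();
    }

    void removeChild(String name) {
        children.remove(name);
        fireChildWatches();
    }

    // A watch fires at most once: unregister all armed watchers, then notify them
    private void fireChildWatches() {
        List<Runnable> fired = new ArrayList<>(childWatches);
        childWatches.clear();
        fired.forEach(Runnable::run);
    }

    // rearm=true mirrors ClientServer: every notification re-reads with a new watch
    static final class ListingClient {
        private final OneShotWatchModel registry;
        private final boolean rearm;
        List<String> lastSeen = new ArrayList<>();
        int notifications = 0;

        ListingClient(OneShotWatchModel registry, boolean rearm) {
            this.registry = registry;
            this.rearm = rearm;
        }

        void start() {
            lastSeen = registry.getChildren(this::onChange);
        }

        private void onChange() {
            notifications++;
            Runnable next = rearm ? this::onChange : null;
            lastSeen = registry.getChildren(next);
        }
    }

    public static void main(String[] args) {
        OneShotWatchModel serverList = new OneShotWatchModel();
        ListingClient rearming = new ListingClient(serverList, true);
        ListingClient oneShot = new ListingClient(serverList, false);
        rearming.start();
        oneShot.start();

        serverList.addChild("zk-node-133");
        serverList.addChild("zk-node-136");
        serverList.removeChild("zk-node-133");

        System.out.println("re-arming client sees " + rearming.lastSeen);
        System.out.println("one-shot client sees " + oneShot.lastSeen);
    }
}
```

The re-arming client ends with [zk-node-136] after three notifications. The one-shot client is notified only once and remains stuck at [zk-node-133].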

4. Source code address

GitHub address: https://github.com/cicadasmile/data-manage-parent

GitEE address: https://gitee.com/cicadasmile/data-manage-parent