Asio source code analysis (2): C++ techniques and optimizations used by Asio

Asio uses a number of C++ techniques and optimizations that can be confusing when you read the source code for the first time. This article briefly introduces a few of them as preparation for later analysis.

Use destructors to perform cleanup operations

Asio uses an object's destructor to perform cleanup at the end of a scope (the RAII idiom), which is somewhat similar to defer in Go.

For example, Asio maintains one operation queue shared between threads and one private operation queue per thread. While running the task, a thread first puts completed operations into its private queue, and at the end transfers them from the private queue to the shared queue:

    struct scheduler::task_cleanup {
      ~task_cleanup() {
        if (this_thread_->private_outstanding_work > 0) {
          asio::detail::increment(
              scheduler_->outstanding_work_,
              this_thread_->private_outstanding_work);
        }
        this_thread_->private_outstanding_work = 0;

        // Enqueue the completed operations and reinsert the task at the end of
        // the operation queue.
        lock_->lock();
        scheduler_->task_interrupted_ = true;
        scheduler_->op_queue_.push(this_thread_->private_op_queue);
        scheduler_->op_queue_.push(&scheduler_->task_operation_);
      }

      scheduler* scheduler_;
      mutex::scoped_lock* lock_;
      thread_info* this_thread_;
    };

This struct is used as follows (irrelevant code is omitted):

    std::size_t scheduler::do_run_one(mutex::scoped_lock& lock,
        scheduler::thread_info& this_thread,
        const asio::error_code& ec) {
      while (!stopped_) {
        if (!op_queue_.empty()) {
          // Prepare to execute first handler from queue.
          operation* o = op_queue_.front();
          op_queue_.pop();
          // ...
          if (o == &task_operation_) {
            // ...
            task_cleanup on_exit = {this, &lock, &this_thread};
            (void)on_exit;

            // Run the task. May throw an exception. Only block if the operation
            // queue is empty and we're not polling, otherwise we want to return
            // as soon as possible.
            task_->run(more_handlers ? 0 : -1, this_thread.private_op_queue);
          } else {
            // ...
          }
        } else {
          // ...
        }
      }
      return 0;
    }

The same behavior could be achieved without a destructor, but the destructor also runs when the task throws an exception, and Asio uses this pattern widely for cleanup.
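To make the role of the destructor concrete, here is a minimal sketch. It is not Asio's code: `flush_on_exit`, `run_task`, and `flushed_count` are hypothetical names, and plain `std::vector<int>` objects stand in for the shared and private operation queues. It shows the private queue being flushed whether the scope exits normally or through an exception.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical guard: moves everything from a private queue into a shared
// queue when it goes out of scope.
struct flush_on_exit {
  std::vector<int>* shared_queue;
  std::vector<int>* private_queue;

  // Runs on normal scope exit and during stack unwinding after a throw.
  ~flush_on_exit() {
    assert(shared_queue && private_queue);
    shared_queue->insert(shared_queue->end(),
                         private_queue->begin(), private_queue->end());
    private_queue->clear();
  }
};

// Simulates a task that completes some operations and may then throw.
void run_task(std::vector<int>& shared_queue, bool fail) {
  std::vector<int> private_queue;
  // Declared after private_queue, so it is destroyed first.
  flush_on_exit on_exit = {&shared_queue, &private_queue};
  (void)on_exit;

  private_queue.push_back(1);
  private_queue.push_back(2);
  if (fail) throw std::runtime_error("task failed");
  private_queue.push_back(3);
}

// Returns how many operations reached the shared queue.
std::size_t flushed_count(bool fail) {
  std::vector<int> shared_queue;
  try {
    run_task(shared_queue, fail);
  } catch (const std::runtime_error&) {
    // The guard has already flushed the private queue at this point.
  }
  return shared_queue.size();
}
```

Calling `flushed_count(false)` yields 3 and `flushed_count(true)` yields 2: in the failing case the two operations completed before the throw are still transferred, which a cleanup call written by hand at the end of `run_task` would have skipped.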

Using template metaprogramming to simulate concepts

C++20 lets us constrain template arguments with concepts. Before C++20 this feature was not available, so template metaprogramming (typically enable_if) was used to emulate it:

    template<typename Iter>
        /*requires*/ enable_if<random_access_iterator<Iter>, void>
    advance(Iter p, int n) { p += n; }

    template<typename Iter>
        /*requires*/ enable_if<forward_iterator<Iter>, void>
    advance(Iter p, int n) { assert(n >= 0); while (n--) ++p; }
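The snippet above is pseudocode. A compilable version might look like the sketch below, which makes some assumptions of its own: it uses `std::enable_if_t` (C++14) with the standard iterator category tags, the names `is_random_access_v` and `advance_by` are hypothetical, the iterator is taken by reference, and the functions return the number of steps so the chosen overload is observable. Unlike real concepts, enable_if does not rank overlapping constraints, so the second overload is explicitly negated to keep the two mutually exclusive.

```cpp
#include <cassert>
#include <forward_list>
#include <iterator>
#include <type_traits>
#include <vector>

// True when Iter's category is (or derives from) random_access_iterator_tag.
template <typename Iter>
constexpr bool is_random_access_v = std::is_base_of<
    std::random_access_iterator_tag,
    typename std::iterator_traits<Iter>::iterator_category>::value;

// Selected for random access iterators: one O(1) jump.
template <typename Iter>
std::enable_if_t<is_random_access_v<Iter>, int>
advance_by(Iter& p, int n) {
  p += n;
  return 1;  // number of steps performed
}

// Selected for every other iterator: n single increments.
template <typename Iter>
std::enable_if_t<!is_random_access_v<Iter>, int>
advance_by(Iter& p, int n) {
  assert(n >= 0);
  int steps = 0;
  while (n--) { ++p; ++steps; }
  return steps;
}
```

With a `std::vector<int>::iterator` the first overload is chosen, and with a `std::forward_list<int>::iterator` the second one is.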

Compile-time evaluation through template metaprogramming has largely been replaced by constexpr functions. The main remaining use of template metaprogramming is manipulating types at compile time.
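The difference can be seen in a small sketch. All names here (`factorial_tmp`, `factorial`, `factorial_at_runtime`, `pointee`) are hypothetical and chosen only for illustration: the first two compute the same value the old way and the constexpr way, and the last one shows the kind of type computation that still needs templates.

```cpp
#include <cassert>
#include <type_traits>

// Old style: compile-time evaluation through recursive instantiation.
template <unsigned N>
struct factorial_tmp {
  static const unsigned long long value = N * factorial_tmp<N - 1>::value;
};
template <>
struct factorial_tmp<0> {
  static const unsigned long long value = 1;
};

// Modern style: an ordinary-looking function usable at compile time.
constexpr unsigned long long factorial(unsigned n) {
  return n <= 1 ? 1 : n * factorial(n - 1);
}

static_assert(factorial_tmp<5>::value == 120, "template version");
static_assert(factorial(5) == 120, "constexpr version");

// The same constexpr function also accepts run-time values.
unsigned long long factorial_at_runtime(unsigned n) {
  assert(n <= 20);  // 21! no longer fits in 64 bits
  return factorial(n);
}

// Still a job for templates: computing a type from a type.
// Strips one level of pointer from T.
template <typename T> struct pointee { typedef T type; };
template <typename T> struct pointee<T*> { typedef T type; };
static_assert(std::is_same<pointee<int*>::type, int>::value, "type-level");
```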

Use object pools to avoid frequent object creation and destruction

When using epoll on Linux, each fd has an associated descriptor_state, and a pointer to it is stored in the ptr field of epoll_data:

    // Per-descriptor queues.
    class descriptor_state : operation {
      friend class epoll_reactor;
      friend class object_pool_access;

      descriptor_state* next_;
      descriptor_state* prev_;

      mutex mutex_;
      epoll_reactor* reactor_;
      int descriptor_;
      uint32_t registered_events_;
      op_queue<reactor_op> op_queue_[max_ops];
      bool try_speculative_[max_ops];
      bool shutdown_;

      ASIO_DECL descriptor_state(bool locking);
      void set_ready_events(uint32_t events) { task_result_ = events; }
      void add_ready_events(uint32_t events) { task_result_ |= events; }
      ASIO_DECL operation* perform_io(uint32_t events);
      ASIO_DECL static void do_complete(
          void* owner, operation* base,
          const asio::error_code& ec, std::size_t bytes_transferred);
    };
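How a per-descriptor object travels through epoll can be sketched as follows. This is a Linux-only illustration under stated assumptions, not Asio's reactor: `fd_state` and `roundtrip_through_epoll` are hypothetical names, and a pipe stands in for a socket. Only the standard `pipe`, `epoll_create1`, `epoll_ctl`, and `epoll_wait` calls are used.

```cpp
#include <cassert>
#include <sys/epoll.h>
#include <unistd.h>

// Hypothetical per-descriptor state; descriptor_state plays this role in Asio.
struct fd_state {
  int descriptor;
};

// Registers the read end of a pipe with epoll, makes it readable, and
// returns the state pointer that epoll_wait hands back (nullptr on error).
fd_state* roundtrip_through_epoll(fd_state& state) {
  int fds[2];
  if (pipe(fds) != 0) return nullptr;
  state.descriptor = fds[0];

  fd_state* result = nullptr;
  int epfd = epoll_create1(0);
  if (epfd >= 0) {
    epoll_event ev = {};
    ev.events = EPOLLIN;
    ev.data.ptr = &state;  // attach the state to this registration
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, fds[0], &ev) == 0 &&
        write(fds[1], "x", 1) == 1) {
      epoll_event ready = {};
      if (epoll_wait(epfd, &ready, 1, 1000) == 1) {
        assert(ready.events & EPOLLIN);
        // The kernel returns the same pointer that was registered.
        result = static_cast<fd_state*>(ready.data.ptr);
      }
    }
    close(epfd);
  }
  close(fds[0]);
  close(fds[1]);
  return result;
}
```

Because the event carries the pointer itself, the reactor can go from a ready event straight to the state object without looking the fd up in a table.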

Whenever a socket is created, Asio creates an associated descriptor_state. An object pool avoids frequent creation and destruction of objects because it does not release the memory of dead objects but reuses it.

Asio has an object_pool<Object> class that manages both the objects in use and the released ones. Its implementation is not complicated: it maintains two linked lists of objects, live_list_ for the objects in use and free_list_ for released objects that can be used again.

When an Object needs to be created, the pool first checks whether free_list_ has one available. If so, it returns that object and adds it to live_list_; if not, it creates a new one and adds it to live_list_.

When an Object is destroyed, it is removed from live_list_ and added to free_list_.

Here is the complete code of object_pool:

    namespace asio {
    namespace detail {

    template <typename Object>
    class object_pool;

    class object_pool_access {
    public:
      template <typename Object>
      static Object* create() {
        return new Object;
      }

      template <typename Object, typename Arg>
      static Object* create(Arg arg) {
        return new Object(arg);
      }

      template <typename Object>
      static void destroy(Object* o) {
        delete o;
      }

      template <typename Object>
      static Object*& next(Object* o) {
        return o->next_;
      }

      template <typename Object>
      static Object*& prev(Object* o) {
        return o->prev_;
      }
    };

    template <typename Object>
    class object_pool : private noncopyable {
    public:
      // Constructor.
      object_pool()
        : live_list_(0),
          free_list_(0) {
      }

      // Destructor destroys all objects.
      ~object_pool() {
        destroy_list(live_list_);
        destroy_list(free_list_);
      }

      // Get the object at the start of the live list.
      Object* first() {
        return live_list_;
      }

      // Allocate a new object.
      Object* alloc() {
        Object* o = free_list_;
        if (o)
          free_list_ = object_pool_access::next(free_list_);
        else
          o = object_pool_access::create<Object>();

        object_pool_access::next(o) = live_list_;
        object_pool_access::prev(o) = 0;
        if (live_list_)
          object_pool_access::prev(live_list_) = o;
        live_list_ = o;

        return o;
      }

      // Allocate a new object with an argument.
      template <typename Arg>
      Object* alloc(Arg arg) {
        Object* o = free_list_;
        if (o)
          free_list_ = object_pool_access::next(free_list_);
        else
          o = object_pool_access::create<Object>(arg);

        object_pool_access::next(o) = live_list_;
        object_pool_access::prev(o) = 0;
        if (live_list_)
          object_pool_access::prev(live_list_) = o;
        live_list_ = o;

        return o;
      }

      // Free an object. Moves it to the free list. No destructors are run.
      void free(Object* o) {
        if (live_list_ == o)
          live_list_ = object_pool_access::next(o);

        if (object_pool_access::prev(o)) {
          object_pool_access::next(object_pool_access::prev(o))
            = object_pool_access::next(o);
        }

        if (object_pool_access::next(o)) {
          object_pool_access::prev(object_pool_access::next(o))
            = object_pool_access::prev(o);
        }

        object_pool_access::next(o) = free_list_;
        object_pool_access::prev(o) = 0;
        free_list_ = o;
      }

    private:
      // Helper function to destroy all elements in a list.
      void destroy_list(Object* list) {
        while (list) {
          Object* o = list;
          list = object_pool_access::next(o);
          object_pool_access::destroy(o);
        }
      }

      // The list of live objects.
      Object* live_list_;

      // The free list.
      Object* free_list_;
    };

    } // namespace detail
    } // namespace asio
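The reuse behaviour is easier to observe with a much smaller pool. The following is a minimal sketch under simplifying assumptions, not Asio's class: `tiny_pool` and `node` are hypothetical, only the free list is tracked (there is no live list), and a `created()` counter is added purely so that reuse can be checked.

```cpp
#include <cassert>

// Hypothetical pooled type; like descriptor_state it carries its own link.
struct node {
  node* next_ = nullptr;
  int value = 0;
};

// Simplified pool: tracks only freed objects. Objects handed out by
// alloc() must be returned with free() before the pool is destroyed.
class tiny_pool {
 public:
  tiny_pool() = default;
  tiny_pool(const tiny_pool&) = delete;
  tiny_pool& operator=(const tiny_pool&) = delete;

  ~tiny_pool() {
    while (free_list_) {
      node* o = free_list_;
      free_list_ = o->next_;
      delete o;
    }
  }

  // Reuse a freed object if one exists, otherwise allocate a new one.
  node* alloc() {
    node* o = free_list_;
    if (o) {
      free_list_ = o->next_;
      o->next_ = nullptr;
    } else {
      o = new node;
      ++created_;
    }
    return o;
  }

  // Return an object to the pool. No destructor runs; the memory is kept.
  void free(node* o) {
    assert(o != nullptr);
    o->next_ = free_list_;
    free_list_ = o;
  }

  // Number of objects actually allocated with new so far.
  int created() const { return created_; }

 private:
  node* free_list_ = nullptr;
  int created_ = 0;
};
```

Allocating, freeing, and allocating again returns the same address, and `created()` stays at 1. The recycled object also keeps its old field values, because no destructor or constructor runs in between, so the caller has to reinitialize whatever state it needs.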

Polymorphism using function pointers and inheritance

Polymorphism implemented with virtual functions has runtime overhead, and the cost is not only the virtual table lookup: a virtual call also usually prevents inlining. operation, as mentioned earlier, is used very frequently in the program, and it is an interface class: it has a complete() method, and each derived operation implements it differently.

Asio stores a function pointer in operation. A derived class passes its own completion function to the operation constructor, and operation's complete() method then calls that function through the pointer:

    namespace asio {
    namespace detail {

    #if defined(ASIO_HAS_IOCP)
    typedef win_iocp_operation operation;
    #else
    typedef scheduler_operation operation;
    #endif

    } // namespace detail
    } // namespace asio

    // Base class for all operations. A function pointer is used instead of virtual
    // functions to avoid the associated overhead.
    class scheduler_operation ASIO_INHERIT_TRACKED_HANDLER {
    public:
      typedef scheduler_operation operation_type;

      void complete(void* owner, const asio::error_code& ec,
          std::size_t bytes_transferred) {
        func_(owner, this, ec, bytes_transferred);
      }

      void destroy() {
        func_(0, this, asio::error_code(), 0);
      }

    protected:
      typedef void (*func_type)(void*,
          scheduler_operation*,
          const asio::error_code&, std::size_t);

      scheduler_operation(func_type func)
        : next_(0),
          func_(func),
          task_result_(0) {}

      // Prevents deletion through this type.
      ~scheduler_operation() {}

    private:
      friend class op_queue_access;
      scheduler_operation* next_;
      func_type func_;

    protected:
      friend class scheduler;
      unsigned int task_result_; // Passed into bytes transferred.
    };
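The base class alone does not show how a derived operation plugs in. The sketch below illustrates the pattern with hypothetical names (`op_base`, `read_op`, `timer_op`, `run_all`) and a simplified signature that appends to a `std::string` log instead of taking an owner and an error code. It is not Asio's code.

```cpp
#include <cassert>
#include <string>

// Base class: stores the "virtual" function as a plain function pointer.
class op_base {
 public:
  void complete(std::string& log) { func_(this, log); }

 protected:
  typedef void (*func_type)(op_base*, std::string&);
  explicit op_base(func_type func) : func_(func) {}
  ~op_base() {}  // protected, non-virtual: no deletion through op_base*

 private:
  func_type func_;
};

// Each derived class hands its own static function to the base constructor.
class read_op : public op_base {
 public:
  explicit read_op(int bytes)
      : op_base(&read_op::do_complete), bytes_(bytes) {}

 private:
  static void do_complete(op_base* base, std::string& log) {
    // Safe: this function is only ever installed by read_op itself.
    read_op* self = static_cast<read_op*>(base);
    log += "read " + std::to_string(self->bytes_) + ";";
  }
  int bytes_;
};

class timer_op : public op_base {
 public:
  timer_op() : op_base(&timer_op::do_complete) {}

 private:
  static void do_complete(op_base*, std::string& log) { log += "timer;"; }
};

// Dispatches through the base type only, as a scheduler would.
std::string run_all(op_base* const* ops, int count) {
  std::string log;
  for (int i = 0; i < count; ++i) {
    assert(ops[i] != nullptr);
    ops[i]->complete(log);
  }
  return log;
}
```

Running a `read_op(42)` followed by a `timer_op` through `run_all` produces the log `read 42;timer;`. Each object stores one function pointer directly, so dispatch needs no vtable, and none of the classes is polymorphic in the C++ sense.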

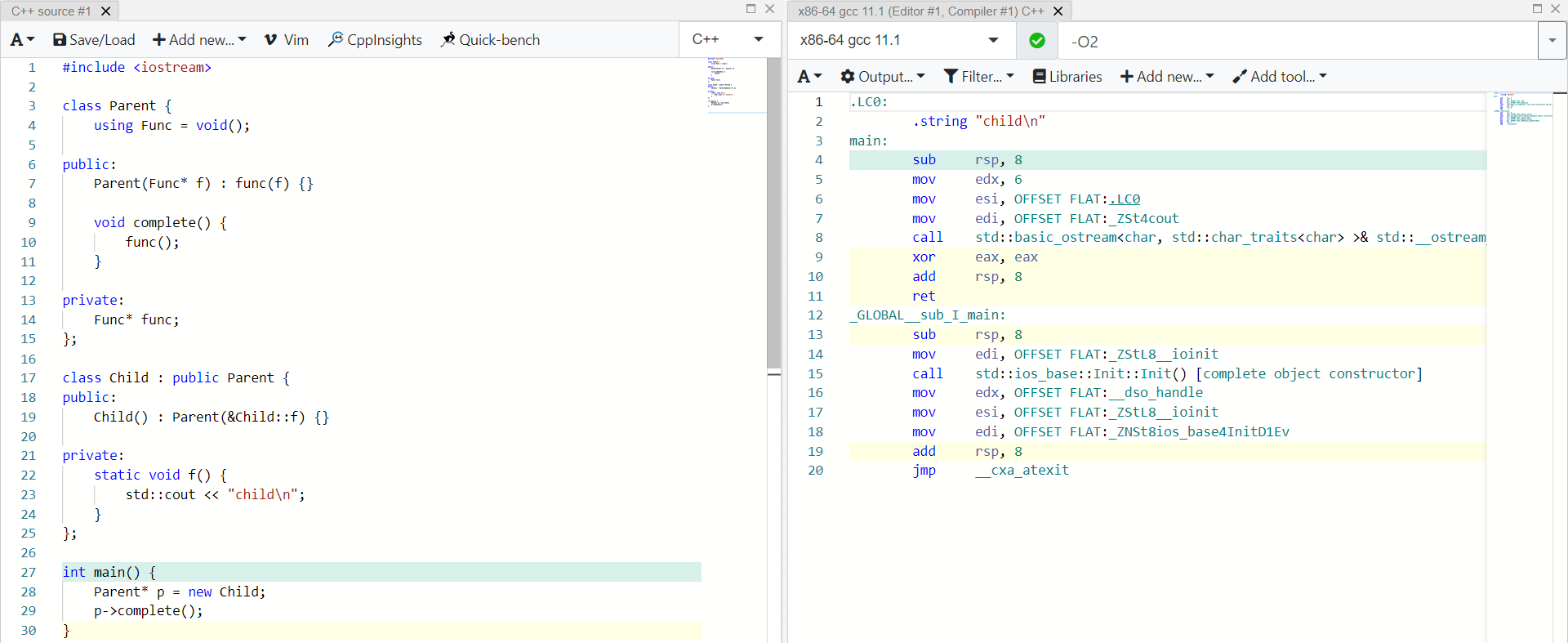

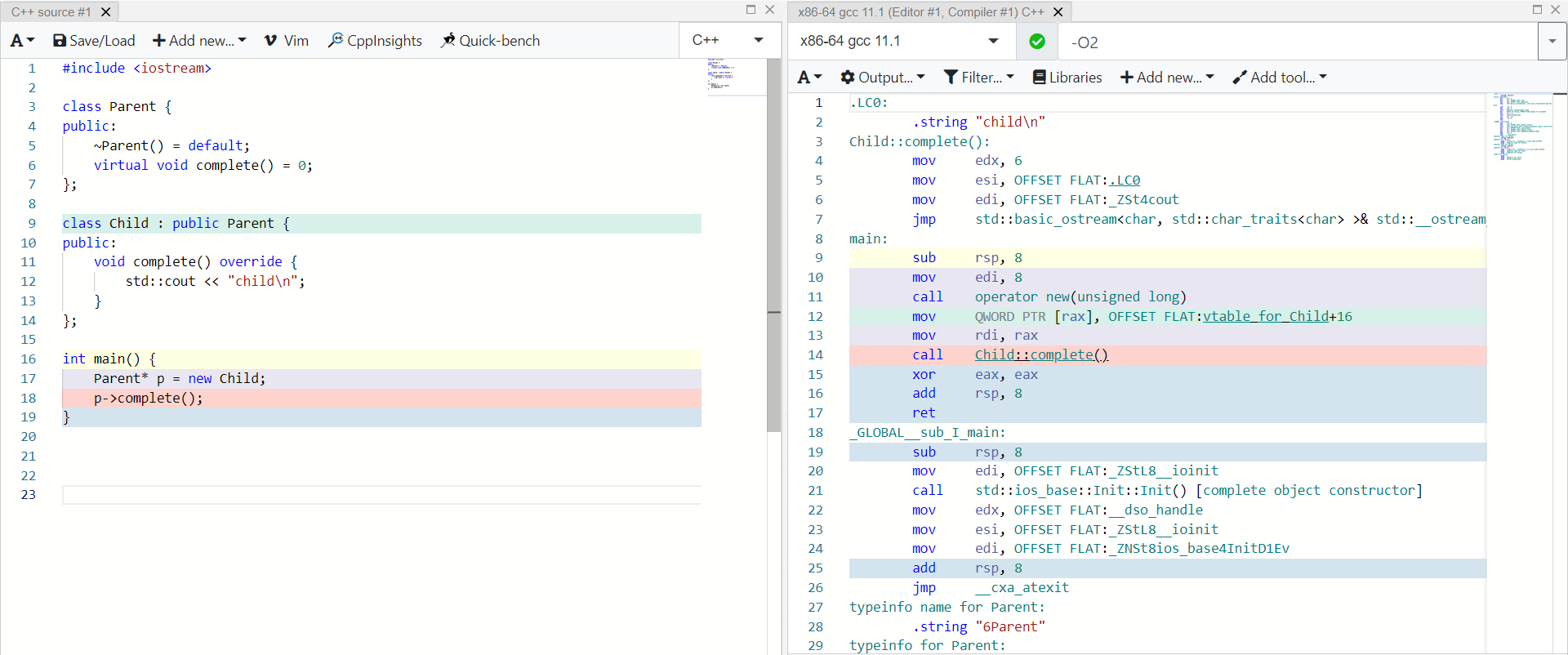

We can verify whether this is better than the virtual function (turn on O2 optimization):

It can be seen that the method of using function pointers can be expanded inline and optimized to only one std::cout statement. The compiler cannot expand inline by using virtual functions.

Use inheritance to share data instead of implementing polymorphism (virtual functions)

Inheritance serves two main purposes: sharing data and implementing polymorphism. In Asio's operation classes, inheritance is used only to share data. As mentioned earlier, virtual functions add overhead, so they are best avoided on hot paths in high-performance code.
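As a small illustration of inheritance used purely for data sharing, consider the sketch below. The names `op_fields`, `recv_fields`, `push_front`, and `chain_length` are hypothetical. The derived type reuses the base's link field so that generic list code can handle it, and the static assertions confirm that no virtual machinery is involved.

```cpp
#include <cassert>
#include <type_traits>

// Data shared by every operation kind; no virtual functions anywhere.
struct op_fields {
  op_fields* next = nullptr;
  unsigned task_result = 0;
};

// A derived type inherits the shared fields and adds its own.
struct recv_fields : op_fields {
  int socket = -1;
};

// Neither type is polymorphic, so no vtable pointer is stored in them.
static_assert(!std::is_polymorphic<op_fields>::value, "base has no vptr");
static_assert(!std::is_polymorphic<recv_fields>::value, "derived has no vptr");

// List code written once against the base works for any derived object.
void push_front(op_fields*& head, op_fields* o) {
  assert(o != nullptr);
  o->next = head;
  head = o;
}

unsigned chain_length(const op_fields* head) {
  unsigned n = 0;
  for (; head; head = head->next) ++n;
  return n;
}
```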

Inheritance is risky because it can share more than is necessary. Some modern programming languages, such as Rust, do not have inheritance at all. As The Rust Programming Language book puts it:

Inheritance has recently fallen out of favor as a programming design solution in many programming languages because it's often at risk of sharing more code than necessary. Subclasses shouldn't always share all characteristics of their parent class but will do so with inheritance. This can make a program's design less flexible. It also introduces the possibility of calling methods on subclasses that don't make sense or that cause errors because the methods don't apply to the subclass. In addition, some languages will only allow a subclass to inherit from one class, further restricting the flexibility of a program's design.

For these reasons, Rust takes a different approach, using trait objects instead of inheritance.

References

https://en.cppreference.com/w/cpp/language/constraints

http://isocpp.github.io/CppCoreGuidelines/CppCoreGuidelines#Rt-emulate

https://docs.cocos.com/creator/manual/zh/scripting/pooling.html