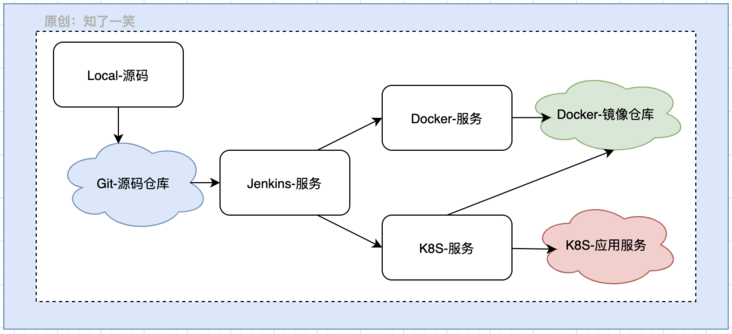

This series of articles focuses on continuous integration: using Jenkins, Docker, K8S, and related components to automate source code compilation, packaging, image building, deployment, and other operations. This article mainly describes how to use Pipeline.

1, Webhook principle

Pipeline tasks are usually triggered automatically: in the Git repository, you configure an address that is notified whenever the source code changes.

For example, in a Gitee repository with a WebHook configured, pushing code to the repository automatically calls back the preset request address, which triggers the packaging action after the code update. The basic process is as follows:

Two core configurations are involved:

- Gitee callback: the address that the repository notifies after receiving a push request; it is set under the WebHooks option of repository management;

- Jenkins process: write the pipeline task that handles the automated process after code is committed; the Jenkins address must be reachable from the Internet here. Many components for this are available online, and you can choose one and set it up yourself;

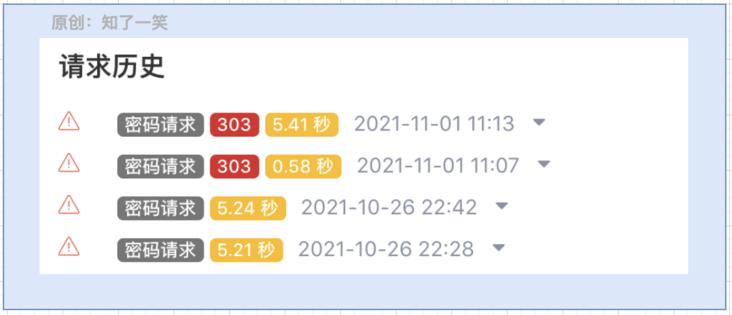

Note: you can set the callback address to anything at first, copy the request parameters directly from the request log, and then trigger the Jenkins task from Postman, which is much more convenient during testing.

With reference to Gitee's help documentation, the parameters of the different push actions can be analyzed to distinguish branch creation, push, deletion, and other operations, for example:

"after": "1c50471k92owjuh37dsadfs76ae06b79b6b66c57", "before": "0000000000000000000000000000000000000000",

Create branch: the before value is all 0s; delete branch: the after value is all 0s.
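The before/after rule can be sketched as a small Groovy helper. This is a minimal sketch, not part of the original pipeline: the function name classifyPush and the returned labels are hypothetical, and it assumes that an all-zero hash is the only marker for a missing commit.

```groovy
// Hypothetical helper: classify a push event from the "before" and "after" hashes.
// Assumption: a hash made up only of '0' characters marks a missing commit.
String classifyPush(String before, String after) {
    // True when the whole hash consists of the character '0'
    def isZero = { String hash -> hash ==~ /0+/ }
    if (isZero(before)) {
        return 'create'   // no previous commit: the branch was just created
    }
    if (isZero(after)) {
        return 'delete'   // no resulting commit: the branch was deleted
    }
    return 'push'         // both hashes present: an ordinary push
}

println classifyPush('0' * 40, '1c50471k92owjuh37dsadfs76ae06b79b6b66c57')
```

The check order matters only in the unlikely case that both hashes are all zeros, which this sketch reports as a creation.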

2, Pipeline configuration

1. Plugin installation

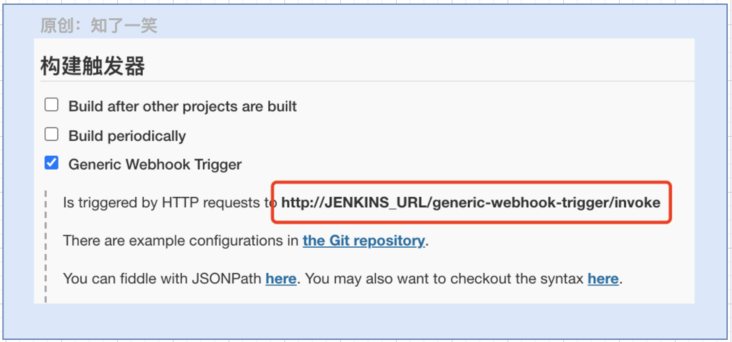

In Jenkins plugin management, install the Generic Webhook Trigger plugin. The pipeline-related components were already installed during Jenkins initialization.

2. Create pipeline

Create a new Item, enter the task name, and select the Pipeline option:

Select the Generic Webhook Trigger option; the page then shows how to trigger the task.

3. Trigger pipeline

http://USERNAME:PASSWORD@JENKINS_URL/generic-webhook-trigger/invoke

Calling the address with authentication as shown above triggers the pipeline; if a task log is generated, the process works end to end.
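The same call can be sketched in Groovy, which may help when scripting tests instead of clicking through Postman. This is a sketch under assumptions: the helper names, the example host, and the credentials are placeholders, and it assumes that sending the credentials as an HTTP Basic Authorization header is equivalent to embedding them in the URL.

```groovy
import java.nio.charset.StandardCharsets

// Build the value of an HTTP Basic "Authorization" header.
String basicAuthHeader(String user, String password) {
    byte[] raw = (user + ':' + password).getBytes(StandardCharsets.UTF_8)
    return 'Basic ' + Base64.getEncoder().encodeToString(raw)
}

// POST a JSON body to the invoke endpoint and return the HTTP status code.
// jenkinsUrl is a placeholder such as 'http://jenkins.example.com:8080'.
int triggerPipeline(String jenkinsUrl, String user, String password, String jsonBody) {
    def url = new URL(jenkinsUrl + '/generic-webhook-trigger/invoke')
    def conn = (HttpURLConnection) url.openConnection()
    conn.requestMethod = 'POST'
    conn.doOutput = true
    conn.setRequestProperty('Content-Type', 'application/json')
    conn.setRequestProperty('Authorization', basicAuthHeader(user, password))
    // Write the payload; withStream closes the stream afterwards
    conn.outputStream.withStream { it.write(jsonBody.getBytes(StandardCharsets.UTF_8)) }
    return conn.responseCode
}

// Safe to run offline: only builds the header, sends nothing
println basicAuthHeader('user', 'pass')

// Example call against a real server (placeholder values, not executed here):
// triggerPipeline('http://jenkins.example.com:8080', 'user', 'pass', '{"ref":"refs/heads/master"}')
```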

3, Pipeline syntax

1. Structure syntax

- triggers: trigger the pipeline task through a hook;

- environment: declare global environment variables;

- stages: define the task steps, i.e. split the process into segments;

- post.always: the final action, executed at the end of the run;

The overall structure of a typical pipeline is as follows:

    pipeline {
        agent any
        triggers {}
        environment {}
        stages {}
        post { always {} }
    }

Configuring the script under each node produces an automated pipeline task. Note that the Use Groovy Sandbox option is left unchecked here.

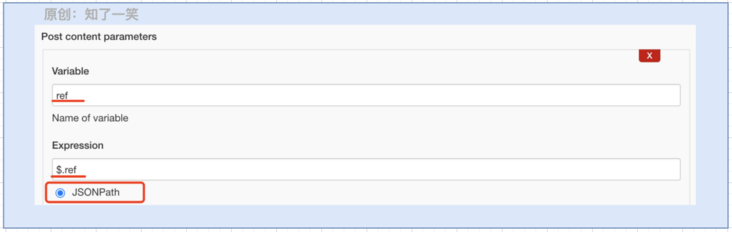

2. Parameter parsing

Parameter parsing here means parsing the parameters carried in the request that Gitee sends to the Jenkins service through the hook mechanism. Mainly the post content parameters are parsed; see the description on the page for the parsing syntax:

Several parameters used in the process are selected from the hook callback parameters; the specific parsing expressions are shown further below. Click Add in the figure above:

    {
        "ref": "refs/heads/master",
        "repository": {
            "name": "butte-auto-parent",
            "git_http_url": "Warehouse address-URL"
        },
        "head_commit": {
            "committer": {
                "user_name": "Name of submitter"
            }
        },
        "before": "277bf91ba85996da6c",
        "after": "178d56ae06b79b6b66c"
    }

Configure the parameters above one by one so that they can be used in the workflow.
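To make the mapping concrete, the following sketch reads the same fields from a sample payload with Groovy's JsonSlurper. It only illustrates which value each JSONPath expression used in the trigger configuration (such as $.repository.name) selects; it is not how the plugin itself is implemented, and the local variable names are illustrative.

```groovy
import groovy.json.JsonSlurper

// Sample payload with the same shape as the hook body above
def payload = '''
{
  "ref": "refs/heads/master",
  "repository": {
    "name": "butte-auto-parent",
    "git_http_url": "Warehouse address-URL"
  },
  "head_commit": {
    "committer": { "user_name": "Name of submitter" }
  },
  "before": "277bf91ba85996da6c",
  "after": "178d56ae06b79b6b66c"
}
'''

def body = new JsonSlurper().parseText(payload)

// '$' is the document root and each '.' descends one level
def variables = [
    ref               : body.ref,                              // $.ref
    repository_name   : body.repository.name,                  // $.repository.name
    repository_git_url: body.repository.git_http_url,          // $.repository.git_http_url
    committer_name    : body.head_commit.committer.user_name,  // $.head_commit.committer.user_name
    before            : body.before,                           // $.before
    after             : body.after                             // $.after
]

variables.each { key, value -> println "${key} = ${value}" }
```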

3. Trigger node

This is the triggers block configuration. Its core function is to load the parameters of the triggering request, which are used later in the script; other related options can be enabled as needed. Note that these are the parameters configured in the previous step:

    triggers {
        GenericTrigger(
            // Map hook payload fields to pipeline variables
            genericVariables: [
                [key: 'ref', value: '$.ref'],
                [key: 'repository_name', value: '$.repository.name'],
                [key: 'repository_git_url', value: '$.repository.git_http_url'],
                [key: 'committer_name', value: '$.head_commit.committer.user_name'],
                [key: 'before', value: '$.before'],
                [key: 'after', value: '$.after']
            ],
            // Optional settings, enable as needed:
            // causeString: ' Triggered on $ref' ,
            // printContributedVariables: true,
            // Print request parameters
            // printPostContent: true
        )
    }

4. Environment variables

Declare some global environment variables here; variables can also be defined directly and referenced in the process in the form ${variable}:

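    environment {
        // Branch name taken from a ref such as refs/heads/master
        branch = env.ref.split("/")[2].trim()
        // Whether this is the trunk (master) branch
        is_master_branch = "master".equals(branch)
        // An all-zero "before" value means the branch was created
        is_create_branch = env.before.replace('0','').trim().equals("")
        // An all-zero "after" value means the branch was deleted
        is_delete_branch = env.after.replace('0','').trim().equals("")
        // Flag recording whether the process succeeded
        is_success = false
    }

Here the branch operation type is resolved from the hook request parameters (create, delete, or trunk branch), and an is_success flag is defined to record whether the process succeeded.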
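One detail worth checking is the branch expression: split("/")[2] keeps only the third path segment, so a ref such as refs/heads/feature/login would yield feature. The following is a minimal sketch of an alternative, assuming branch names may contain slashes; the helper name branchOf is hypothetical and not part of the original script. Note also that environment values are strings, which is why the later stages compare against "true" and "false".

```groovy
// Hypothetical helper: derive the branch name from a Git ref.
// Assumption: branch refs start with 'refs/heads/'; anything else is returned unchanged.
String branchOf(String ref) {
    def prefix = 'refs/heads/'
    return ref.startsWith(prefix) ? ref.substring(prefix.length()).trim() : ref.trim()
}

println branchOf('refs/heads/master')               // master
println branchOf('refs/heads/feature/login')        // feature/login

// For comparison, the split-based expression keeps a single segment:
println 'refs/heads/feature/login'.split('/')[2]    // feature
```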

5. Segmented process

The process is divided into five steps: parsing data, pulling the branch, processing the Pom file, pushing the branch, and packaging the project:

stages {

// Analyze warehouse information

stage('Parse') {

steps {

echo "Warehouse branch : ${branch} \n Warehouse name : ${repository_name} \n Warehouse address : ${repository_git_url} \n Submit user : ${committer_name}"

script {

if ("true".equals(is_master_branch)) {

echo "Protection branch : ${branch}"

}

if ("true".equals(is_create_branch)) {

echo "Create branch : ${branch}"

}

if ("true".equals(is_delete_branch)) {

echo "Delete branch : ${branch}"

}

}

}

}

// Pull warehouse branch

stage('GitPull') {

steps {

script {

if ("false".equals(is_delete_branch)) {

echo "Pull branch : ${branch}"

git branch: "${branch}",url: "${repository_git_url}"

}

}

}

}

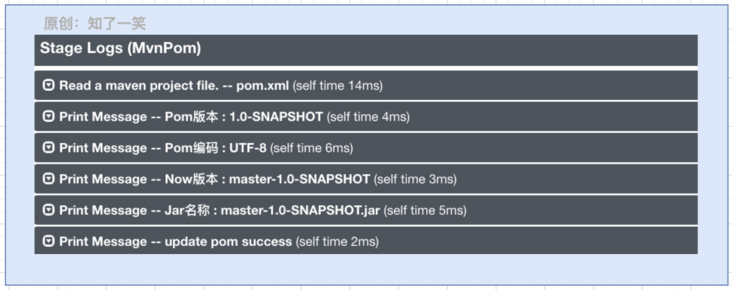

// Parse warehouse Pom file

stage('MvnPom') {

steps {

script {

// Parsing Pom file content

def pom = readMavenPom file: 'pom.xml'

def version = "${pom.version}"

def encode = pom.getProperties().get("project.build.sourceEncoding")

echo "Pom edition : "+ version

echo "Pom code : "+ encode

def devVersion = "${branch}-"+version

def jarName = "${branch}-"+version+".jar"

echo "Now edition : "+ devVersion

echo "Jar name : "+ jarName

// Modify Pom file content

// pom.getProperties().put("dev.version","${devVersion}".trim().toString())

// writeMavenPom file: 'pom.xml', model: pom

echo "update pom success"

}

}

}

// Push warehouse branch

stage('GitPush') {

steps {

script {

echo "git push success"

}

}

}

// Local packaging process

stage('Package') {

steps {

script {

sh 'mvn clean package -Dmaven.test.skip=true'

is_success = true

}

}

}

}- Analyze data: analyze and output some parameter information;

- Pull branch: pull branch code in combination with Git command;

- Processing POM files: reading and modifying POM files;

- Branch push: push branch code in combination with Git command;

- Project packaging: complete project packaging in combination with Mvn command;

Note: no code is pushed when testing the process locally. After the project is packaged, a shell script can be used to start and publish the service.
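The if checks inside the script blocks above could alternatively be expressed with the declarative when directive, which skips a whole stage when its condition is false. The following is a minimal sketch rather than the article's script: it hard-codes is_delete_branch as a placeholder, whereas the original derives it from the hook's after value.

```groovy
pipeline {
    agent any
    environment {
        // Placeholder value; the original script computes this from the hook payload
        is_delete_branch = 'false'
    }
    stages {
        stage('GitPull') {
            // Run this stage only when the branch was not deleted
            when {
                expression { env.is_delete_branch == 'false' }
            }
            steps {
                echo 'Branch still exists, pulling code'
            }
        }
    }
}
```

With this form, a skipped stage is marked as skipped in the stage view instead of appearing as a successful but empty step.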

6. Message notification

At the end of the process, the is_success flag identifies the execution result of the task and is used to notify the relevant personnel whether packaging succeeded. The notification can be sent by email or pushed through another API; this is not described in detail here:

post {

always {

script {

echo "notify : ${committer_name} , pipeline is success : ${is_success}"

}

}

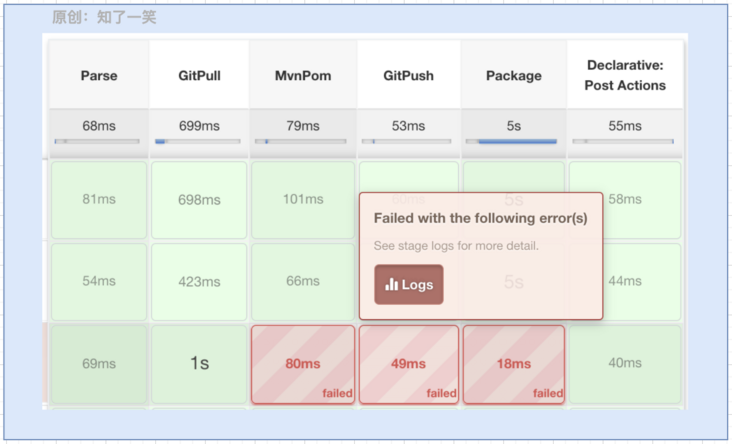

}7. Execution log

After finishing the pipeline script above, send requests repeatedly with Postman to debug the script:

You can also click the different stages of the run to view the log output of each stage:

Note: the complete pipeline script is available in the Gitee open source repository listed at the end of this article.


4, Source code address

GitEE address: https://gitee.com/cicadasmile/butte-auto-parent

Wiki address: https://gitee.com/cicadasmile/butte-java-note