Docker

- Official documentation: https://www.docker.com/get-started
- Chinese reference manual: https://docker_practice.gitee.io/zh-cn/

What is Docker

Official definition

Official introduction: "We have a complete container solution for you - no matter who you are and where you are on your containerization journey." In other words, Docker offers a complete container solution for anyone, at any stage of their containerization journey.

Official definition: Docker is a container technology.

Origin of Docker

Docker was originally an internal project started by Solomon Hykes, founder of dotCloud, while he was in France. It grew out of dotCloud's years of work on cloud-service technology. Docker was open-sourced under the Apache 2.0 license in March 2013, and the main project code is maintained on GitHub. The project later joined the Linux Foundation and helped establish the Open Container Initiative (OCI).

Docker has attracted wide attention and discussion since it was open-sourced. At the time of writing, its GitHub project had more than 57,000 stars and more than 10,000 forks. Because of the project's popularity, dotCloud even renamed itself Docker at the end of 2013. Docker was originally developed on Ubuntu 12.04; Red Hat has supported Docker since RHEL 6.5, and Google also uses Docker widely in its PaaS products.

Docker is written in Go, the programming language introduced by Google. It builds on Linux kernel features, namely cgroups and namespaces, and on union file systems such as OverlayFS to encapsulate and isolate processes, which makes it an operating-system-level virtualization technology. Because an isolated process is independent of the host and of other isolated processes, it is called a container.
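On a Linux host you can look at these namespaces directly: the kernel exposes each process's namespace memberships as links under /proc/<pid>/ns. The minimal sketch below (the function name list_namespaces is our own, hypothetical) prints them for the current process; two processes that show the same link target share that namespace.

```shell
# List the kernel namespaces of the current process (Linux only).
# Each link target, e.g. "pid:[4026531836]", identifies one namespace;
# processes that show the same target share that namespace.
list_namespaces() {
  for ns in /proc/self/ns/*; do
    # ${ns##*/} strips the directory part, leaving the namespace type.
    printf '%s -> %s\n' "${ns##*/}" "$(readlink "$ns")"
  done
}

list_namespaces
```

Running a similar listing inside a container, for example with docker run --rm alpine ls -l /proc/self/ns, should show different IDs for namespaces such as pid, mnt, net and uts; that difference is what isolates the container's processes from the host.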

Why Docker

An application runs in the local development and test environment but not in production

Take a Java web application as an example. It involves a whole software environment: the JDK, Tomcat, MySQL and so on. If the version of any one of them differs, the application may fail to run. Docker packages the program together with its software environment, ensuring the environment is the same on every machine.

Advantage 1: a consistent runtime environment and easier migration

A program on the server crashes, and it turns out that someone else's program misbehaved and used up the memory, leaving yours without enough memory to run

This is also common. Unless your program is particularly important, the company is unlikely to give it a dedicated server, so it shares a server with the company's other programs and is inevitably disturbed by them. Docker isolates each program's environment, and per-container resource limits keep one program from exhausting the resources the others need.

Advantage 2: processes are encapsulated and isolated, so containers do not affect each other and system resources are used more efficiently

When the company runs a promotion, a surge of traffic may arrive, and dozens of additional servers need to be deployed

Without Docker, operations staff must set up dozens of servers within a few days, which is painful. Moreover, the environments on those servers are not necessarily identical, which causes all kinds of problems and makes the whole deployment nerve-racking. With Docker, you only need to package the program into an image once, and then you can run as many containers from it as you need, which greatly improves deployment efficiency.

Advantage 3: an image can be used to create any number of containers with identical environments
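As a rough illustration of "one image, many identical containers", the sketch below only prints the docker run commands for N containers started from the same image, each published on a different host port. The function name plan_replicas, the container-naming scheme and the port range are hypothetical; 8080 is the port Tomcat listens on in the official tomcat image.

```shell
# Dry run: print the docker run commands that would start COUNT containers
# from one IMAGE, publishing container port 8080 on consecutive host ports
# starting at BASE_PORT. Names and ports are illustrative assumptions.
plan_replicas() {
  image=$1 count=$2 base_port=$3
  i=1
  while [ "$i" -le "$count" ]; do
    echo "docker run -d --name ${image}-${i} -p $((base_port + i - 1)):8080 ${image}"
    i=$((i + 1))
  done
}

plan_replicas tomcat 3 8881
# docker run -d --name tomcat-1 -p 8881:8080 tomcat
# docker run -d --name tomcat-2 -p 8882:8080 tomcat
# docker run -d --name tomcat-3 -p 8883:8080 tomcat
```

Because this is a dry run, nothing is started; piping the output to sh would start the containers for real, all with the same environment taken from the one image.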

Differences between Docker and virtual machines

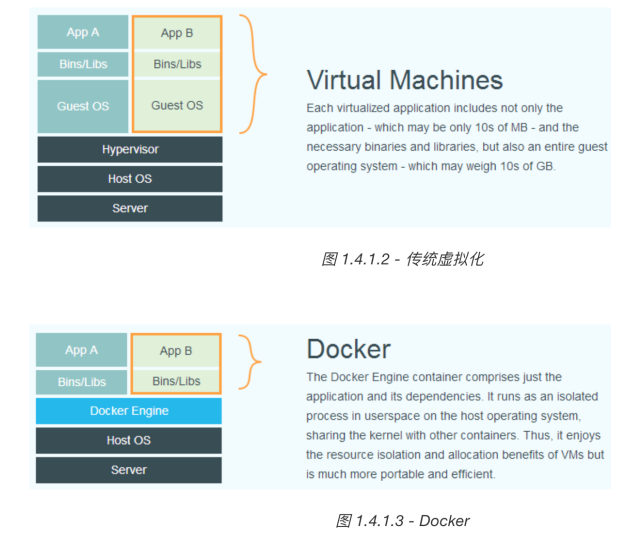

The figure above, found online, shows the difference between Docker and a virtual machine intuitively.

Comparing the two sides of the figure: a virtual machine carries a full guest operating system, so even a small application becomes large and cumbersome. Docker does not carry an operating system, so Docker applications are very lightweight. Access to the host's CPU, disk and other resources also differs. Take memory as an example: a virtual machine virtualizes memory through the hypervisor, so an access goes virtual memory -> virtual physical memory -> real physical memory. A Docker container's processes run directly on the host kernel (the Docker Engine only sets up their isolation), so the path is virtual memory -> real physical memory.

| | Traditional virtual machine | Docker container |
|---|---|---|
| Disk usage | A few GB to dozens of GB | Tens of MB to hundreds of MB |
| CPU and memory usage | The virtualized guest OS consumes a lot of CPU and memory | The Docker engine's overhead is very low |
| Startup speed | Minutes (from boot to running the project) | Seconds (from starting the container to running the project) |
| Installation and management | Requires specialized operations skills | Easy to install and manage |
| Application deployment | Every deployment takes time and effort | Easy and simple from the second deployment on |
| Coupling | Multiple services installed together can easily affect each other | Each service runs in its own container, isolated from the others |
| System dependencies | None | Requires the same or a similar kernel; Linux is currently recommended |

Installation of Docker

Install Docker (CentOS 7.x)

Uninstall any old versions of Docker

$ sudo yum remove docker \
                  docker-client \
                  docker-client-latest \
                  docker-common \
                  docker-latest \
                  docker-latest-logrotate \
                  docker-logrotate \
                  docker-engine

Install the packages Docker depends on

$ sudo yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2

Add the Docker yum repository

$ sudo yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo

Install the latest version of Docker

$ sudo yum install docker-ce docker-ce-cli containerd.io

Install a specific version of Docker

$ yum list docker-ce --showduplicates | sort -r
$ sudo yum install docker-ce-<VERSION_STRING> docker-ce-cli-<VERSION_STRING> containerd.io
$ sudo yum install docker-ce-18.09.5-3.el7 docker-ce-cli-18.09.5-3.el7 containerd.io

Start Docker (and enable it at boot)

$ sudo systemctl enable docker
$ sudo systemctl start docker

Stop Docker

$ sudo systemctl stop docker

Test the installation

$ sudo docker run hello-world

Installation with the convenience script (works on the major Linux distributions)

In test or development environments, Docker provides a convenience installation script that simplifies the installation process, and it can be used on CentOS. With the --mirror option it installs from a domestic (Chinese) mirror. After you run it, the script makes all the preparations automatically and installs the stable version of Docker.

$ curl -fsSL get.docker.com -o get-docker.sh
$ sudo sh get-docker.sh --mirror Aliyun

Start Docker

$ sudo systemctl enable docker
$ sudo systemctl start docker

Create the docker user group (it may already exist)

$ sudo groupadd docker

Add the current user to the docker group (log out and back in for the new group membership to take effect)

$ sudo usermod -aG docker $USER

Test whether Docker is installed correctly (no sudo needed once the group change is active)

$ docker run hello-world

The core architecture of Docker

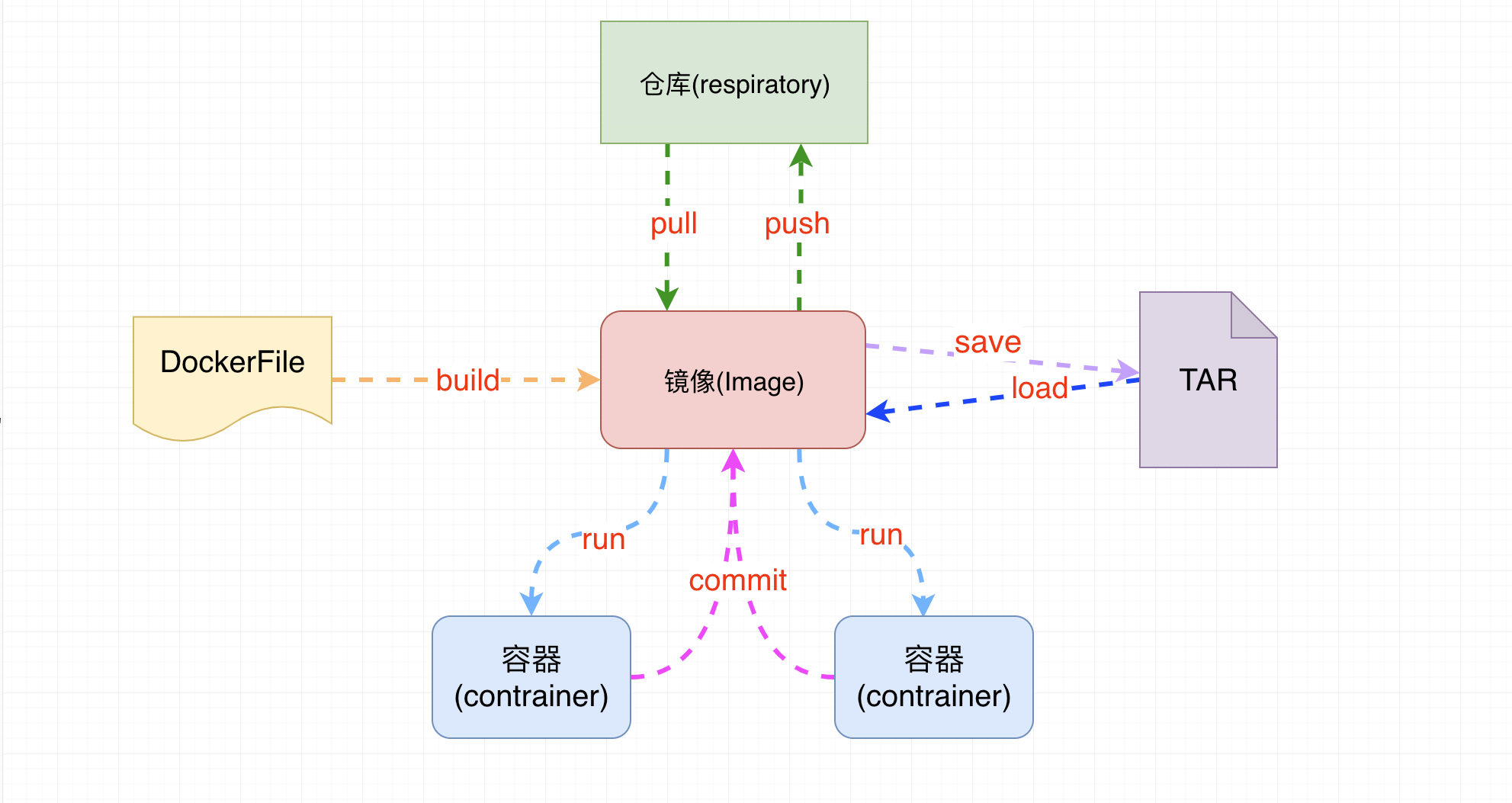

- Image: an image represents an application together with its environment, for example the mysql, tomcat or nginx image. An image is a piece of software; it is read-only and cannot be written to.

- Container: each run of an image produces a container, i.e. a running instance of the image, which is readable and writable. A container represents a running software service.

- Repository (registry): where images are stored. Like a Maven repository, it is where images are downloaded from and uploaded to.

Remote repository: similar to Maven Central.

Local repository: images downloaded from a remote repository are saved locally. The default local path is /var/lib/docker.

- Dockerfile: the configuration file from which Docker builds an image; you write the configuration of a custom image in it.

- tar: an archive file of an image, which can be loaded back into an image later.

Docker configures Alibaba image acceleration service

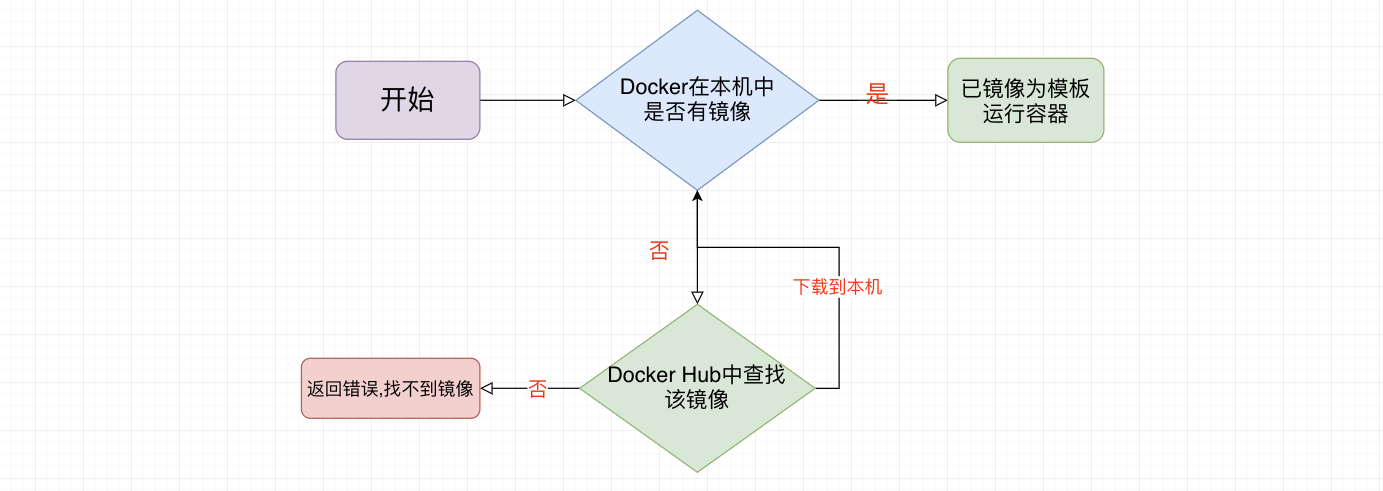

docker running process

docker configuring alicloud image acceleration

- Visit alicloud to log in to your account and view the docker image acceleration service

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://lz2nib3q.mirror.aliyuncs.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker

- Verify that the image acceleration is in effect

[root@localhost ~]# docker info
..........
 127.0.0.0/8
Registry Mirrors:
 https://lz2nib3q.mirror.aliyuncs.com/
Live Restore Enabled: false
Product License: Community Engine

Common commands

Auxiliary commands

# 1. Auxiliary commands available after installation
docker version -------------------------- show Docker version information
docker info -------------------------- show more detailed system-wide information
docker --help -------------------------- help

Image commands

# 1. List the images on this machine
docker images -------------------------- list all local images
    -a   list all images (including intermediate image layers)
    -q   show only image IDs
docker images <image name> ---> list only the images matching the given name

# 2. Search for an image
docker search [options] <image name> ------------------- search Docker Hub for the image
    -s <N>   list only images with at least N stars (deprecated; newer versions use --filter stars=N)
    --no-trunc   show the full image information

# 3. Pull an image from a registry
docker pull <image name>[:TAG|@DIGEST] ----------------- download an image

# 4. Delete an image
docker rmi <image name> -------------------------- delete an image
    -f   force deletion
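Image names passed to docker pull follow the form name[:TAG|@DIGEST], and when neither a tag nor a digest is given, Docker uses the latest tag. The sketch below is a simplified, hypothetical helper (split_image_ref) that splits a reference along those lines; it is not Docker's full reference grammar. The one subtle case is a registry with a port, such as localhost:5000/myapp, where the colon belongs to the registry host rather than to a tag.

```shell
# Simplified sketch of splitting an image reference into repository, tag
# and digest. Only a ":" after the last "/" introduces a tag; without a
# tag or digest, the tag defaults to "latest".
split_image_ref() {
  ref=$1
  digest=""
  case "$ref" in
    *@*) digest=${ref#*@}; ref=${ref%%@*} ;;
  esac
  last=${ref##*/}              # path component after the last "/"
  case "$last" in
    *:*) tag=${last#*:}; repo=${ref%:*} ;;
    *)   tag=""; repo=$ref ;;
  esac
  if [ -z "$tag" ] && [ -z "$digest" ]; then
    tag=latest
  fi
  printf 'repo=%s tag=%s digest=%s\n' "$repo" "$tag" "$digest"
}

split_image_ref mysql:5.7              # repo=mysql tag=5.7 digest=
split_image_ref localhost:5000/myapp   # repo=localhost:5000/myapp tag=latest digest=
```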

Container commands

# 1. Run a container

docker run <image name> -------------------------- create and start a new container from the image

--name <alias>   give the container a name

-d   start the container in the background (detached/daemon mode)

-p <host port>:<container port>   map a host port to a port inside the container

-P   publish all of the container's exposed ports on random host ports

Example: docker run -it --name myTomcat -p 8888:8080 tomcat

docker run -d --name myTomcat -P tomcat

# 2. View running containers

docker ps -------------------------- List all running containers

-a   list running and previously run (stopped) containers

-q   quiet mode: show only container IDs

# 3. Start | stop | restart containers

docker start <container name or id> --------------- start a container

docker restart <container name or id> --------------- restart a container

docker stop <container name or id> ------------------ stop the container gracefully

docker kill <container name or id> ------------------ stop the container immediately

docker pause <container name or id> ------------------ pause all processes in the container

docker unpause <container name or id> ------------------ resume a paused container

# 4. Delete containers

docker rm -f <container id or name> -------------------------- delete a container (-f also removes a running one)

docker rm -f $(docker ps -aq) -------------------------- delete all containers

# 5. View the processes in a container

docker top <container id or name> ------------------ view the processes running in the container

# 6. Inspect the container's internal details

docker inspect <container id> ------------------ view the container's low-level details

# 7. View container logs

docker logs [OPTIONS] <container id or name> ------------------ view the container's logs

-t   add timestamps

-f   follow the log output as new lines are printed

--tail <number>   show only the last <number> lines

# 8. Enter a container

docker exec [options] <container id> <command> ------------------ run a command inside the container

-i   keep STDIN open (interactive mode); usually used together with -t

-t   allocate a pseudo-terminal, e.g. for a bash shell

# 9. Copy files between a container and the host

docker cp <file|directory> <container id>:<path in container> ----------------- copy from the host into the container

docker cp <container id>:<path in container> <host directory> ----------------- copy from the container to the host

# 10. Data volumes: share a directory between the host and a container

docker run -v <host path|volume name>:<path in container> <image name>

Note:

1. If you give a host path, it must be absolute. The host directory is mounted over the directory in the container, so the container sees the host's contents, and the files originally at that path in the image are hidden while the mount is in place.

2. If you give a name (an alias), Docker treats it as a named volume: it creates the volume's directory on the host when the container starts and, if the volume is empty, copies the files from the container directory into it.

A named volume therefore preserves the original contents of the path in the container, provided the volume does not already contain files.

Note: if the named volume already exists, Docker uses it directly; otherwise it is created automatically, by default under /var/lib/docker/volumes.

docker run -v <host path|volume name>:<path in container>:ro <image name>

Here ro (read-only) means that only the host can write to the volume; inside the container the volume can be read, but files cannot be added, deleted or modified.
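The rules above can be summarized in a small, hypothetical helper (describe_volume_flag) that classifies the -v argument the way this section describes: a source starting with / is treated as a bind mount of a host directory, anything else as the name of a named volume, and a trailing :ro marks the mount read-only. It is a simplified model that handles only the SOURCE:TARGET and SOURCE:TARGET:ro forms, not Docker's complete parsing; the paths in the usage lines are illustrative.

```shell
# Simplified model of how "docker run -v SOURCE:TARGET[:ro]" is interpreted.
describe_volume_flag() {
  spec=$1
  src=${spec%%:*}          # text before the first ":"
  rest=${spec#*:}          # text after the first ":"
  target=${rest%%:*}
  mode=rw
  case "$rest" in
    *:ro) mode=ro ;;       # container may only read the volume
  esac
  case "$src" in
    /*) kind="bind mount" ;;     # absolute host path
    *)  kind="named volume" ;;   # alias, managed under /var/lib/docker/volumes
  esac
  printf '%s %s -> %s (%s)\n' "$kind" "$src" "$target" "$mode"
}

describe_volume_flag /root/apps:/usr/local/tomcat/webapps
# bind mount /root/apps -> /usr/local/tomcat/webapps (rw)
describe_volume_flag apps:/usr/local/tomcat/webapps:ro
# named volume apps -> /usr/local/tomcat/webapps (ro)
```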

# 11. Save an image to a file

docker save <image name> -o name-tag.tar

tag is the version; it is best to include it in the file name.

# 12. Load an image

docker load -i name-tag.tar

# 13. Package a container into a new image

docker commit -m "description" -a "author" <container id or name> <new image name>:<tag>

How Docker images work

What is an image?

An image is a lightweight, standalone, executable software package that bundles a piece of software together with the runtime environment it needs. It contains everything required to run the software, including code, runtime libraries, environment variables and configuration files.

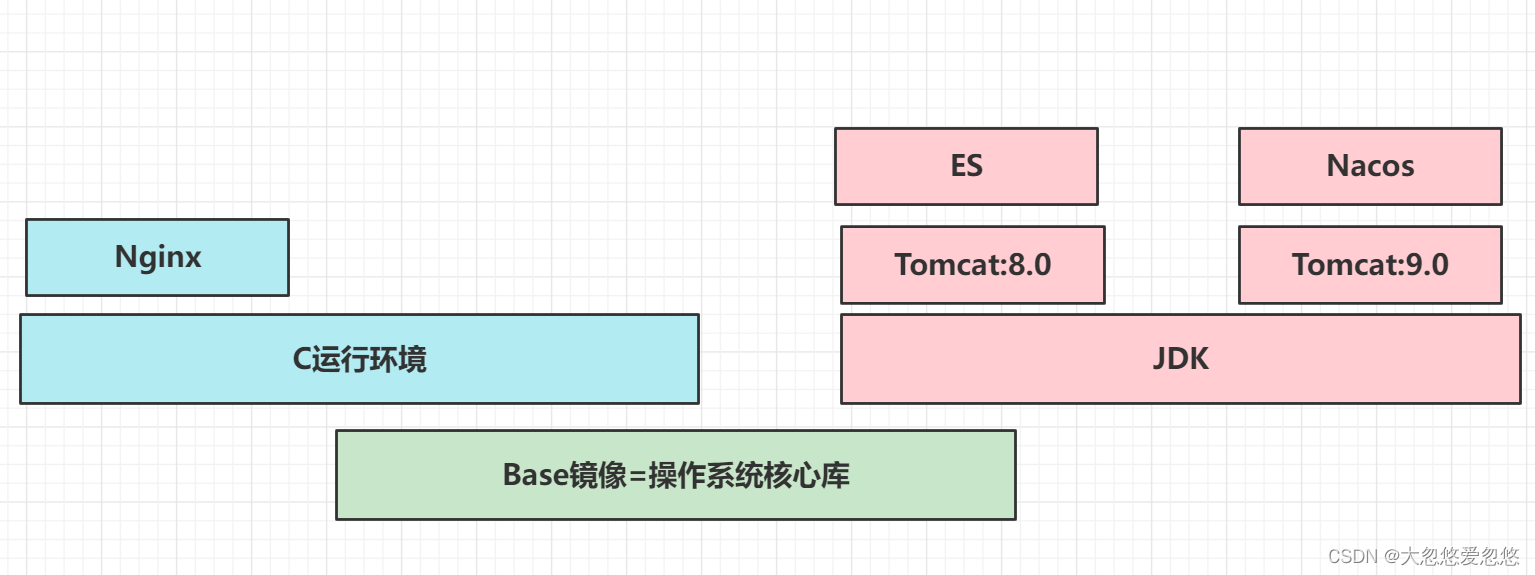

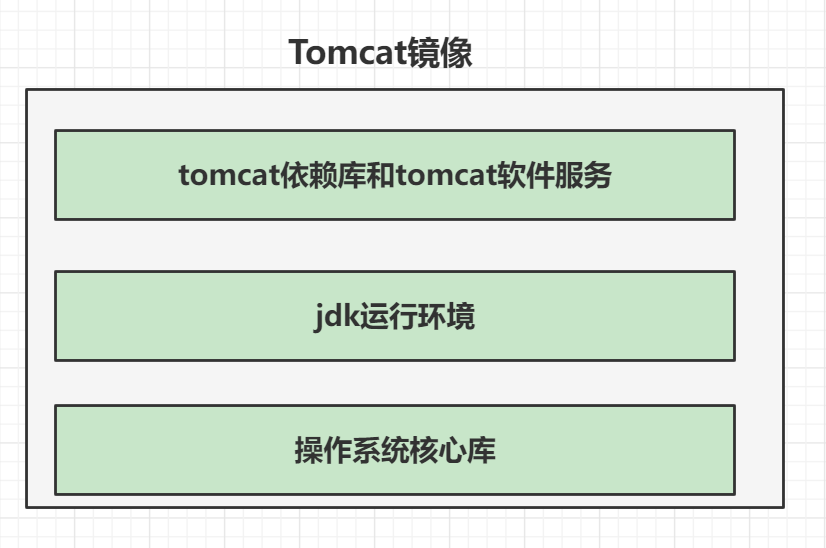

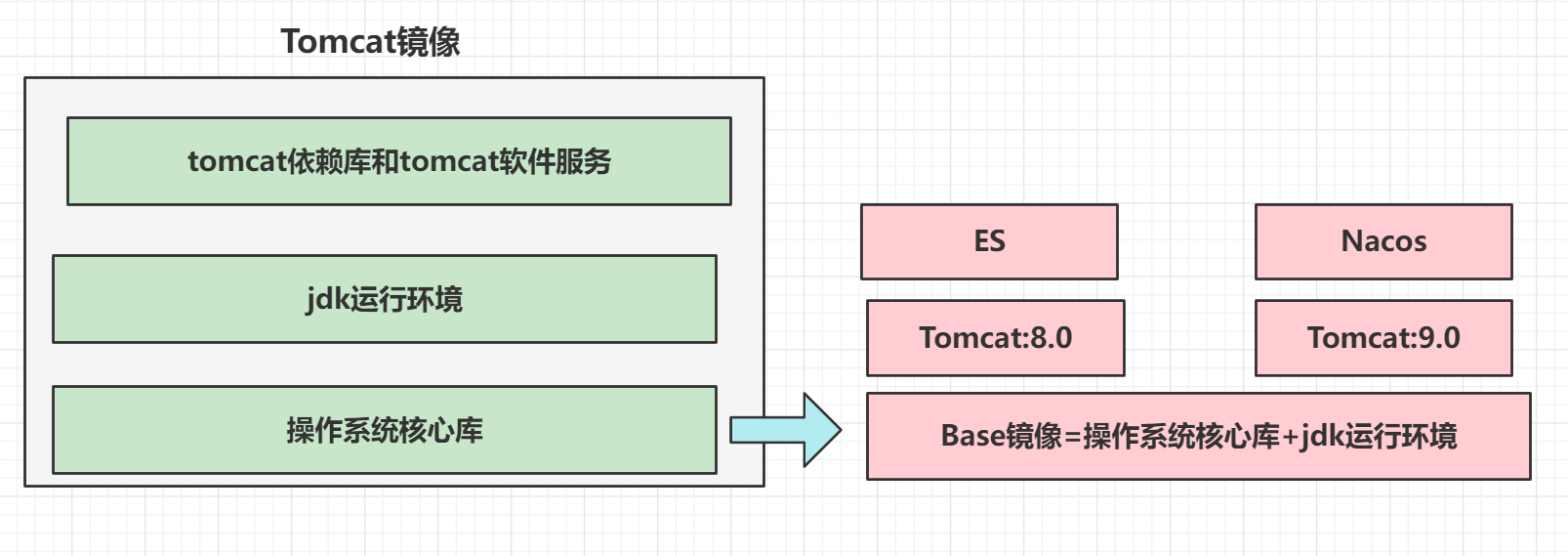

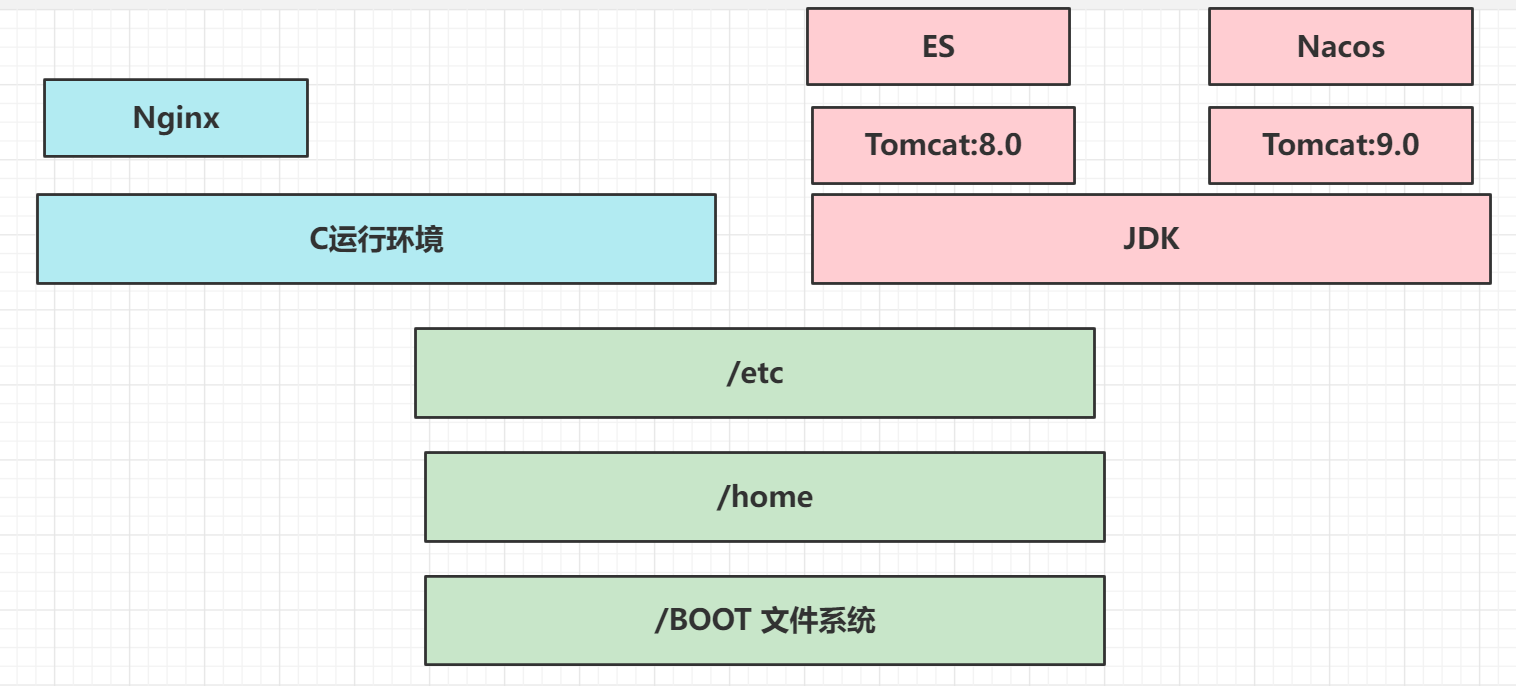

However, this image structure can be optimized by extracting the parts that can be reused. For example, every software service ultimately depends on the operating system's core libraries, so those libraries can be extracted and reused; every Java service needs the JDK, so the JDK can be extracted and reused as well. The reuse works as follows:

Why is an image so big?

An image is a layer cake

UnionFS (union file system): a file system built from stacked layers

A union file system is a layered, lightweight, high-performance file system. It records each modification to the file system as a new layer stacked on the previous ones, and it can mount several different directories into a single virtual file system. The union file system is the foundation of Docker images. Its key property is that several file systems are loaded at the same time, but from the outside only one file system is visible: the union mount stacks all the layers, so the final file system contains every file and directory from the underlying layers.
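The lookup rule of a union mount can be modeled with ordinary directories. The sketch below is a toy model, not how OverlayFS is implemented: it assumes each layer is a plain directory, checks the layers from top to bottom, and returns the first match. It also borrows the whiteout naming convention of OCI image layers, where a file named .wh.<name> in an upper layer marks <name> as deleted. The function name union_lookup and the directory layout are hypothetical.

```shell
# Toy model of a union-mount lookup. Assumptions: each layer is a plain
# directory, layers are passed top-most first, and a file ".wh.<name>"
# hides <name> in all lower layers.
union_lookup() {
  rel=$1; shift
  dir=$(dirname "$rel"); base=$(basename "$rel")
  for layer in "$@"; do
    if [ -e "$layer/$dir/.wh.$base" ]; then
      return 1                # whiteout: hidden from the merged view
    fi
    if [ -e "$layer/$rel" ]; then
      echo "$layer/$rel"      # the highest layer containing the path wins
      return 0
    fi
  done
  return 1                    # not present in any layer
}

# Build three hypothetical layers: OS core files, a JDK, and an application.
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
mkdir -p "$tmp/os/etc" "$tmp/jdk/opt/jdk" "$tmp/app/etc"
echo "os release" > "$tmp/os/etc/os-release"
echo "welcome" > "$tmp/os/etc/motd"
echo "jdk" > "$tmp/jdk/opt/jdk/release"
: > "$tmp/app/etc/.wh.motd"   # the app layer deletes etc/motd

union_lookup etc/os-release "$tmp/app" "$tmp/jdk" "$tmp/os"
union_lookup etc/motd "$tmp/app" "$tmp/jdk" "$tmp/os" || echo "etc/motd is hidden by a whiteout"
```

The merged view sees etc/os-release from the OS layer and opt/jdk/release from the JDK layer as if they lived in one file system, while the lower layers themselves stay untouched.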

Reusing the lower layers: the operating system's core libraries and the JDK runtime can be packaged into a base image for Java programs, and the images of other Java programs can share this base image as their lower layers. When several Java-based images are pulled into the local repository, the base image only needs to be downloaded once, which saves disk space.

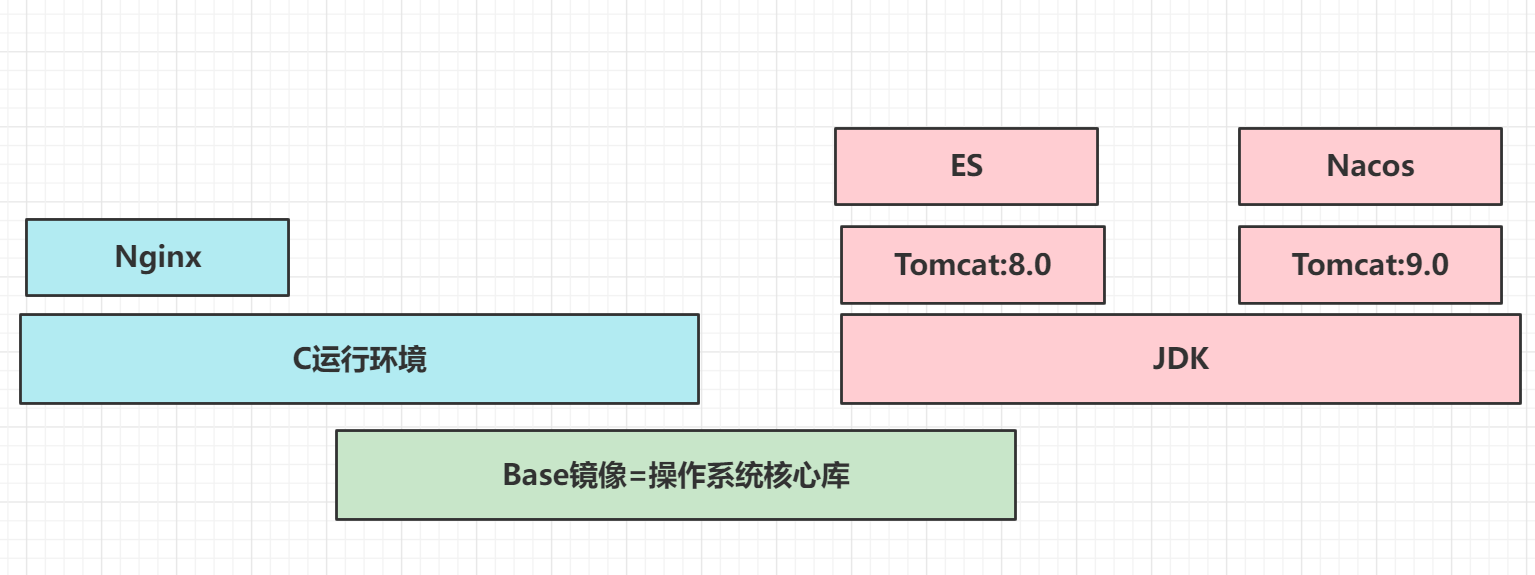

However, not every software service is written in Java, so the JDK layer is not always needed, and the layers can be subdivided further.

In fact, they can be subdivided even more finely: some software runs on several Linux distributions, so the layer holding the operating system's core libraries can be split further as well.

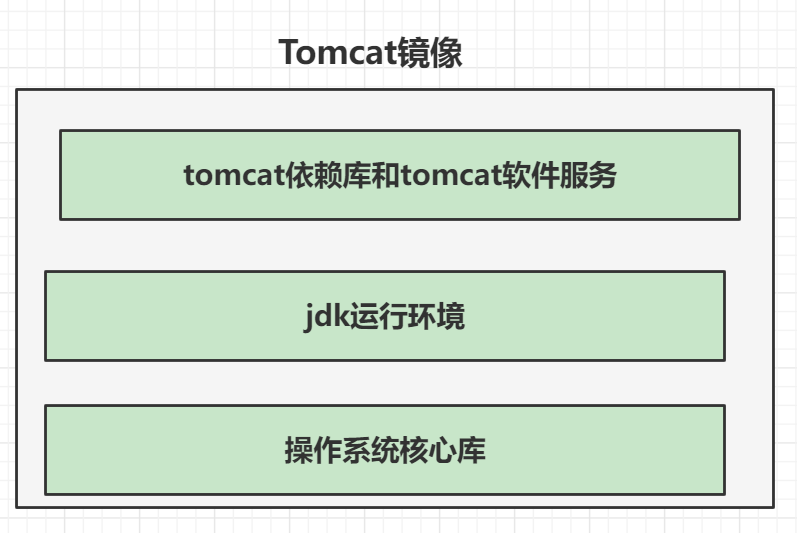

Although the layers are split finely, several of them are stacked when an image is used. For example, stacking the operating system core library file system, the JDK environment, Tomcat's dependency libraries and the service's own files yields what looks from the outside like a single union file system: the tomcat image.

Docker image principle

The image of docker is actually composed of file systems layer by layer.


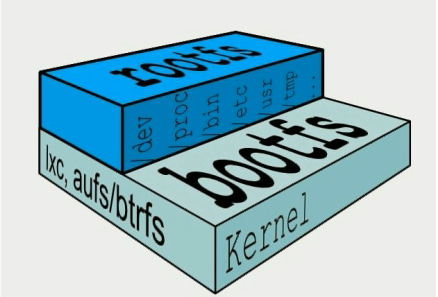

bootfs (boot file system) mainly contains the bootloader and the kernel. The bootloader boots and loads the kernel, and Linux loads bootfs when it starts. The bottom layer of a Docker image is bootfs, which is the same as in a typical Linux/Unix system, containing the boot loader and the kernel. Once booting finishes, the whole kernel is in memory, control of memory passes from bootfs to the kernel, and bootfs is unmounted.

rootfs (root file system) sits on top of bootfs and contains the standard directories and files of a typical Linux system, such as /dev, /proc, /bin and /etc. rootfs is what differs between operating system distributions such as Ubuntu and CentOS.

A CentOS installation in a virtual machine usually takes one to several GB, so why is the Docker centos image only about 200 MB? For a stripped-down OS, rootfs can be very small: it only needs the most basic commands, tools and libraries. Because the container uses the host's kernel directly, the image only has to provide rootfs. Different Linux distributions therefore have essentially the same bootfs and differ in rootfs, so they can share bootfs.
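Because containers use the host's kernel rather than booting their own, a container reports the same kernel release as the host. The sketch below checks this; it assumes Docker is installed and can run the small alpine image (an assumption, not something this section requires), and otherwise prints only the host value. On a Linux host the two lines should show the same release; on Docker Desktop for Mac or Windows, the container reports the kernel of Docker's Linux VM instead.

```shell
# Compare the kernel release seen on the host with the one seen inside a
# container. Assumes Docker and the "alpine" image are available; if the
# docker command fails, only the host value is reported.
host_kernel=$(uname -r)
echo "host:      $host_kernel"
if container_kernel=$(docker run --rm alpine uname -r 2>/dev/null); then
  echo "container: $container_kernel"
else
  echo "container: (docker not available, skipped)"
fi
```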

Why do Docker images use this layered structure?

One of the biggest benefits is resource sharing.

- For example, if multiple images are built from the same base image, the host only needs to keep one copy of the base image on disk, and only one copy needs to be loaded into memory to serve all containers. Every layer of an image can be shared in this way. Docker images are read-only: when a container starts, a new writable layer is added on top of the image. This layer is called the container layer, and everything below it is called the image layer.
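The writable container layer works by copy-on-write. The toy model below (hypothetical function and directory names, with plain directories standing in for layers) shows the idea: the first time a container modifies a file that exists only in a read-only image layer, the file is copied up into the container layer and changed there, so the image layer itself never changes. On a real system, docker diff <container> lists the files a container has added, changed or deleted relative to its image.

```shell
# Toy copy-on-write model: append TEXT to REL in the writable UPPER
# (container) layer, copying the file up from the first read-only lower
# (image) layer that has it, if the container layer lacks it.
cow_append() {
  rel=$1 text=$2 upper=$3
  shift 3
  mkdir -p "$upper/$(dirname "$rel")"
  if [ ! -e "$upper/$rel" ]; then
    for lower in "$@"; do
      if [ -e "$lower/$rel" ]; then
        cp "$lower/$rel" "$upper/$rel"   # copy up on first write
        break
      fi
    done
  fi
  printf '%s\n' "$text" >> "$upper/$rel"
}

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
mkdir -p "$tmp/image/conf" "$tmp/container"
echo "port=8080" > "$tmp/image/conf/server.conf"

cow_append conf/server.conf "port=9090" "$tmp/container" "$tmp/image"
cat "$tmp/image/conf/server.conf"       # port=8080 (image layer unchanged)
cat "$tmp/container/conf/server.conf"   # port=8080, then port=9090
```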