1. Parse robots Txt file

There are some sites in robots Txt file is set to prohibit the proxy user from browsing the site. Since the target site has such a rule, we should follow it.

Use urllib's robot parser module to parse robots Txt file to avoid downloading the url prohibited from crawling, and then through can_fetch() function to determine whether the specified user agent conforms to the parsed robots Txt rule.

urllib contains four modules: request, error, parse, and robot parser.

Use steps:

1. Create a parser {RP = robot parser RobotFileParser()

#Returns a parser object < class' urllib robotparser. Robotfileparser '>, the content is empty

2. Set robots Txt URL: rp.set_url('http://example.python-scraping.com/robots.txt ')

3. Get robots Txt parsing (rp.read)

#Read out the parsing content and save it to the parsing object

4. Match resolved rules

allow = rp.can_fetch('BadCrawler','http://example.python-scraping.com/robots.txt')

#If useragent is allowed to resolve robots according to Txt file to get the url, returns True. To user_ Double qualification of agent and url to be accessed.

from urllib import robotparser

#Encapsulate the created RobotFileParser object into a function, pass in the link of the file, and return the parser object

def get_robots_parser(robots_url):

rp = robotparser.RobotFileParser()

rp.set_url(robots_url)

rp.read()

return rp2. Support server proxy

Different from the user agent (proxy browser ID), the agent here refers to the server agent. For example, when the local ip is blocked and the server cannot access the web page, you can use the agent to escape the shielding.

Compared with urllib library, python has a more http friendly library, requests library. Urllib is the python standard library. After you install python, this library can be used directly.

Requests is a third-party library that needs to be installed independently: pip install requests. It is generally recommended to use requests, which is the re encapsulation of urllib, and various operations will be easier. Here we also use urllib to familiarize ourselves with the underlying operations.

The operation is very simple. The code is as follows

from urllib import request

proxy = '60.7.208.137:9999'

proxy_support = urllib.request.ProxyHandler({'http':proxy})

opener = urllib.request.build_opener(proxy_support)

urllib.request.install_opener(opener)

3. Download speed limit

If the crawling speed is too fast, sometimes the ip will be blocked or cause the risk of server overload, so we need to add a delay between crawls

Here we will use the urlparse module to parse the url

In Python 3, urlparse module and urllib module are merged, and urlparse() is in urllib Called in parse. urlparse() divides the url into six parts, scheme (Protocol), netloc (domain name), path (path), params (optional parameters), query (connection key value pair), fragment (special anchor), and returns it in tuple form.

Create a Throttle class to record the last access time of each domain name (the domain name is resolved by urlparse). If the two access times are less than the given delay, perform sleep operation and pause crawling for a while

from urllib.parse import urlparse

import time

class Throttle:

def __init__(self, delay):

self.delay = delay #Save the set interval between downloads

self.domains = {} #Save the timestamp of the latest domain name download

def wait(self, url):

domain = urlparse(url).netloc #netloc get domain name

last_accessed = self.domains.get(domain) #Gets the time of the last download

if self.delay > 0 and last_accessed is not None: #The delay is greater than zero and has the timestamp of the last download

sleep_sec = self.delay - (time.time() - last_accessed) #This time should sleep = set delay - according to the time spent in the last download

if sleep_sec > 0:

time.sleep(sleep_sec)

self.domains[domain] = time.time() #Record the time stamp of this download

Call:

throttle = Throttle(delay)

......

throttle.wait(url)

html = GetData(link)

4. Limited climbing depth

For some web pages that dynamically generate page content. For example, an online calendar website provides links to visit the next month and the next year, and the next month contains the pages of the next month. In this way, it will always crawl and fall into the crawler trap.

A simple way to avoid the crawler trap is to record how many links have passed to the current page, that is, the depth. Set a threshold. When the maximum depth is reached, the crawler will no longer add the links in the web page to the queue.

Method:

Change see to a dictionary and save the depth record of the found link.

The dictionary get(key, parameter) function is used. When the value matching the key can be queried, the value corresponding to the corresponding key will be returned. If not, the following parameter will be returned.

Setting parameter to 0 initializes the depth of the domain name crawled for the first time to 0.

Take the previous crawling link tracking as an example and modify it to

def scrap_link(start_url, link_regex, robots_url = None, user_agent = 'wswp', max_depth =5):

if not robots_url:

robots_url = '{}/robots.txt'.format(start_url)

rp = get_robots_parser(robots_url)

crawl_queue = [start_url]

#seen = [start_url] # See = set (crawl_queue) the set function returns the 'set' object. You can use see Add() add element

seen = {}

while crawl_queue :

url = crawl_queue.pop()

if rp.can_fetch(user_agent, url):

depth = seen.get(url, 0)

if depth == max_depth:

print('The depth exceeds the maximum depth. Skip this page')

continue

html = GetData(url, user_agent = user_agent)

if html is None:

continue

for link in getlinks(html):

if re.match(link_regex,link):

abs_link = urljoin(start_url, link)

if abs_link not in seen:

crawl_queue.append(abs_link)

seen[abs_link] = depth + 1 #In fact, depth here reflects the number of layers of the loop, which is similar to the traversal of the tree by layer, adding one for each additional layer

#Returns links to all a tags of a page

def getlinks(html):

url_regex = re.compile("""<a[^>]+href=["'](.*?)["']""", re.IGNORECASE)

return url_regex.findall(html) #Or re findall(url_regex, html, re.IGNORECASE)The key modifications are

def scrap_link(start_url, link_regex, robots_url = None, user_agent = 'wswp', max_depth =5):

.........

seen = {}

........

if rp.can_fetch(user_agent, url):

depth = seen.get(url, 0)

if depth == max_depth:

print('The depth exceeds the maximum depth. Skip this page')

continue

.....

for link in getlinks(html):

if re.match(link_regex,link):

abs_link = urljoin(start_url, link)

if abs_link not in seen:

crawl_queue.append(abs_link)

seen[abs_link] = depth + 1 #In fact, depth here reflects the number of layers of the cycle,5. Use requests

Requests is the re encapsulation of urllib, and various operations will be easier. It is recommended that you first learn the urllib library and get familiar with the mechanism, and then you can mainly use the requests library.

In the GetData function I wrote, some functions can be replaced with requests, for example:

1. # Add user agent

request = urllib.request.Request(url)

request.add_header('User-Agent', user_agent)

response = urllib.request.urlopen(request)

# add server proxy

proxy_support = urllib.request.ProxyHandler({'http': proxy})

opener = urllib.request.build_opener(proxy_support)

urllib.request.install_opener(opener)

Replace with

response = requests.get(url, headers = header, proxies = proxy)

2.# Processing decoding

cs = response.headers.get_content_charset()

html = response.read().decode(cs)

Replace with

html = response.text

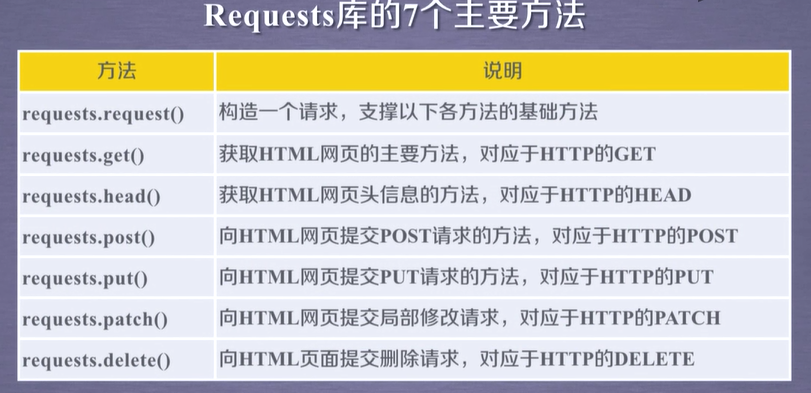

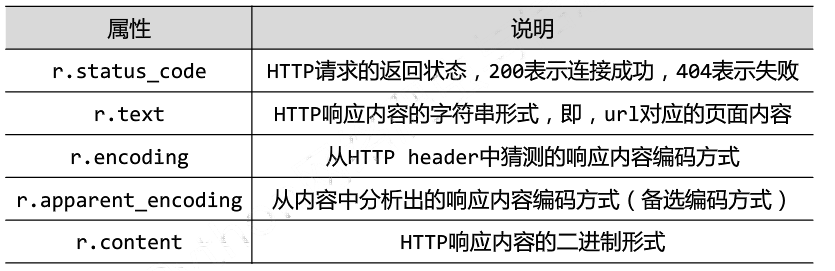

Here is an explanation of the functions of the requests functions

requests.get() requests an http page in get mode

. text attribute, string form of http response content, automatic test character encoding, and then decoding to string output

More detailed functions and attributes are shown in figure

The replaced code is

import requests

def GetData(url, user_agent = 'wswp', retry=2, proxy = None):

print('download : ' + url)

header = {'User-Agent', user_agent}

try:

response = requests.get(url, headers = header, proxies = proxy)

html = response.text

if response.status_code >= 400:

print('Download error', response.text)

html = None

if retry > 0 and 500 <= response.status_code <600:

return GetData(url, proxy_support,retry - 1)

except (URLError, HTTPError, ContentTooShortError) as e:

print('download error :', e.reason)

html = None

return htmlCompared with the original code, it is shorter and clearer in structure.