Xiaobai's big data journey (57)

Hadoop compression

Last review

After introducing zookeeper, the next step is Hadoop's extended knowledge point, compression and ha. Because HA is based on zookeeper, I bring this knowledge point now

Hadoop compression

Compression overview

- First of all, we should know that compression is an optimization method for data

- Using compression can effectively reduce the number of read and write sections of HDFS stored data and improve the efficiency of network bandwidth and disk space

- Because Shuffle and Merge take a lot of time when running MR operation, using compression can improve the efficiency of our MR program

Advantages and disadvantages of compression

- Although the disk IO can be reduced by compressing the data in the MR process to improve the running speed of the MR program, the compression also increases the computational burden of the CPU

- Because the compressed data needs to be decompressed before it can be used, the proper use of compression effects can improve the performance, and improper use may also reduce the performance

Scenes using compression

After knowing the advantages and disadvantages of compression, we can naturally guess the application scenario of compression?

- For computationally intensive jobs, we try to use less compression

- For IO intensive jobs, we need to use compression

Compression coding of MR

- Compression is also divided into many formats because their underlying algorithms are different

- Through different compression technologies, we can use compression for different scenarios

- Next, let's take a look at several compression technologies supported by MR

MR supported compression coding

| Compression format | hadoop comes with? | algorithm | File extension | Whether it can be segmented | After changing to compressed format, does the original program need to be modified |

|---|---|---|---|---|---|

| DEFLATE | Yes, direct use | DEFLATE | .deflate | no | Like text processing, it does not need to be modified |

| Gzip | Yes, direct use | DEFLATE | .gz | no | Like text processing, it does not need to be modified |

| bzip2 | Yes, direct use | bzip2 | .bz2 | yes | Like text processing, it does not need to be modified |

| LZO | No, need to install | LZO | .lzo | yes | You need to build an index and specify the input format |

| Snappy | No, need to install | Snappy | .snappy | no | Like text processing, it does not need to be modified |

Hadoop encoding / decoding API

| Compression format | Corresponding encoder / decoder |

|---|---|

| DEFLATE | org.apache.hadoop.io.compress.DefaultCodec |

| gzip | org.apache.hadoop.io.compress.GzipCodec |

| bzip2 | org.apache.hadoop.io.compress.BZip2Codec |

| LZO | com.hadoop.compression.lzo.LzopCodec |

| Snappy | org.apache.hadoop.io.compress.SnappyCodec |

Compression performance comparison

| compression algorithm | Original file size | Compressed file size | Compression speed | Decompression speed |

|---|---|---|---|---|

| gzip | 8.3GB | 1.8GB | 17.5MB/s | 58MB/s |

| bzip2 | 8.3GB | 1.1GB | 2.4MB/s | 9.5MB/s |

| LZO | 8.3GB | 2.9GB | 49.3MB/s | 74.6MB/s |

| snappy | 8.3GB | 4GB | 250MB/s | 500MB/s |

Snappy's compression speed is the fastest, but its compression effect is the lowest. Basically, it can only compress the file to about half the size of the source file. If you want to know about snappy's partners, please refer to the instructions of GitHub: http://google.github.io/snappy

Compression parameter configuration

| parameter | Default value | stage | proposal |

|---|---|---|---|

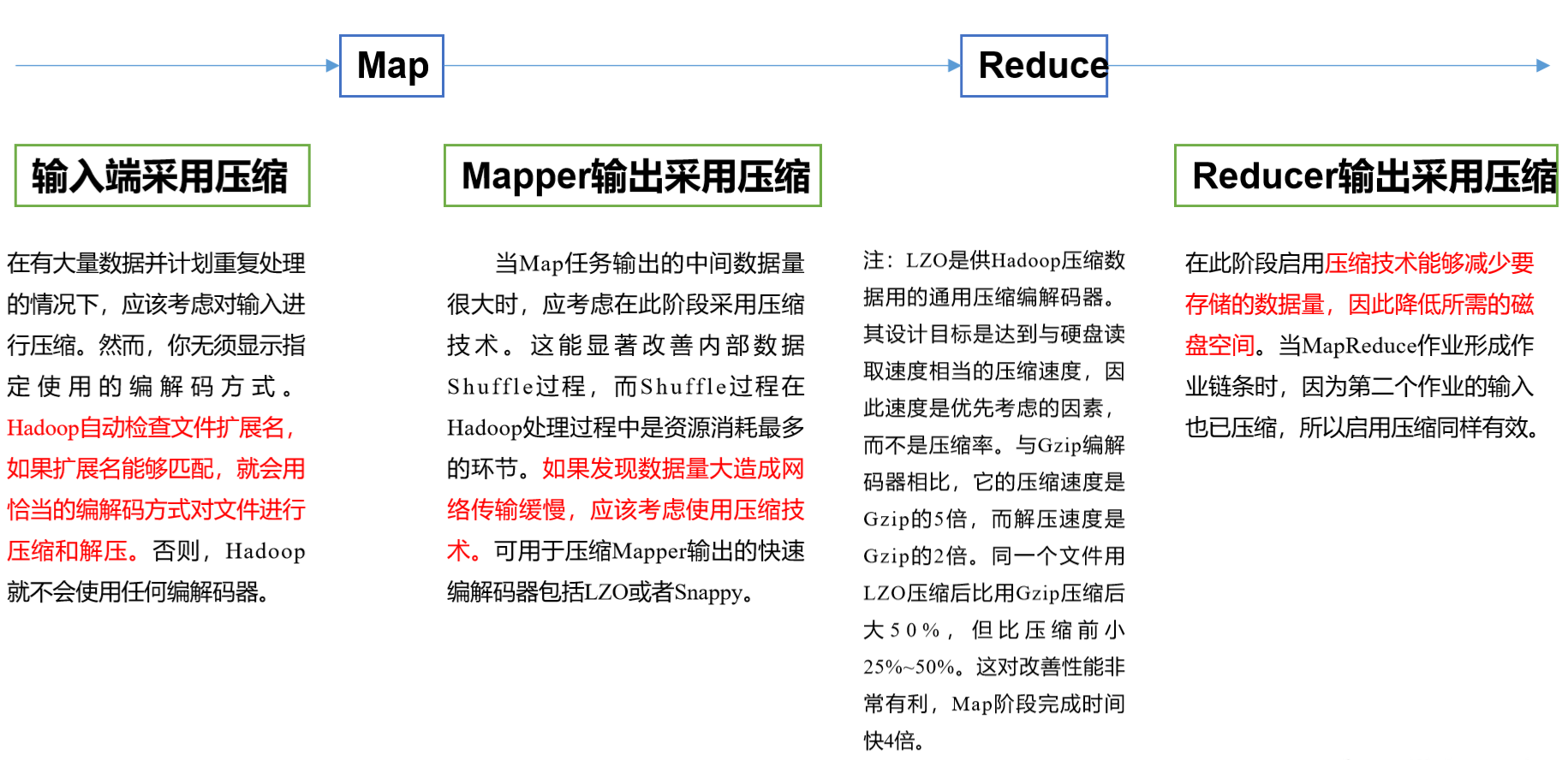

| io.compression.codecs (configured in core-site.xml) | org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec | Input compression | Hadoop uses file extensions to determine whether a codec is supported |

| mapreduce.map.output.compress (configured in mapred-site.xml) | false | mapper output | Set this parameter to true to enable compression |

| mapreduce.map.output.compress.codec (configured in mapred-site.xml) | org.apache.hadoop.io.compress.DefaultCodec | mapper output | Enterprises often use LZO or Snappy codec to compress data at this stage |

| mapreduce.output.fileoutputformat.compress (configured in mapred-site.xml) | false | reducer output | Set this parameter to true to enable compression |

| mapreduce.output.fileoutputformat.compress.codec (configured in mapred-site.xml) | org.apache.hadoop.io.compress.DefaultCodec | reducer output | Use standard tools or codecs, such as gzip and bzip2 |

The table is for your convenience. Let's summarize the compression application scenario

- Gzip

- Hadoop itself supports and is easy to use, because processing files in Gzip format in the program is the same as directly processing text, and most linux systems have Gzip commands

- Because Gzip does not support slicing, we can use Gzip when the compressed size of a single file is about equal to less than one block (about 130M)

- Bzip2

- Bzip2 is also a compression format of Hadoop. Its feature is that it supports the compression of a single large text file and wants to slice it. At this time, we choose to use bZIP

- However, the compression and decompression speed of Bzip2 is relatively slow

- Lzo

- Compressing and decompressing speed blocks and supporting slicing is one of the most commonly used compression formats. We can install lzop command in Linux to use it

- When using Lzo, we need to do some special processing for Lzo format files. For example, in order to support slicing, we need to establish an index and specify InputFormat as Lzo format

- Lzo is used when the compressed data size is greater than 200M

- The biggest advantage of Lzo is that the larger a single file is, the more obvious its compression efficiency advantage is

- Snappy

- Its characteristic is one word, fast, high-speed compression and decompression efficiency, which is beyond the reach of other compression

- However, it does not support slicing, and the compressed size is half the size of the source file

- Because it is very fast, we usually use it as the intermediate format from Map to Reduce when the data in MR Map stage is relatively large, or as the output of one MR job to the input of another MR job

Compression and decompression of data stream

- After understanding the compression and decompression and the formats supported by MR, let's demonstrate how to create and use specific compression through code

- In Hadoop, we can use the built-in CompressionCodec object to create a compressed stream to complete the compression and decompression of files

package com.compress; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.IOUtils; import org.apache.hadoop.io.compress.*; import org.apache.hadoop.util.ReflectionUtils; import org.junit.Test; import java.io.FileInputStream; import java.io.FileNotFoundException; import java.io.FileOutputStream; public class CompressDemo { /** * compress */ @Test public void test() throws Exception { //1. Input stream -- ordinary file stream FileInputStream fis = new FileInputStream("D:\\io\\compress\\aaa.txt"); //2. Output stream -- compressed stream //2.1 create the object of the corresponding codec CompressionCodec gzipCodec = ReflectionUtils.newInstance(GzipCodec.class, new Configuration()); //2.2 create flow //gzipCodec.getDefaultExtension(): get the extension of compression type CompressionOutputStream cos = gzipCodec.createOutputStream( new FileOutputStream("D:\\io\\decompress\\aaa.txt" + gzipCodec.getDefaultExtension())); //3. Document copying IOUtils.copyBytes(fis,cos,1024,true); } /** * decompression */ @Test public void test2() throws Exception { //Input stream --- compressed stream CompressionCodec gzipCodec = ReflectionUtils.newInstance(GzipCodec.class, new Configuration()); CompressionInputStream cis = gzipCodec.createInputStream( new FileInputStream("D:\\io\\decompress\\aaa.txt.gz")); //Output stream --- ordinary file stream FileOutputStream fos = new FileOutputStream("D:\\io\\compress\\aaa.txt"); //3. Document copying IOUtils.copyBytes(cis,fos,1024,true); } /** * decompression */ @Test public void test3() throws Exception { //Create a compressed factory class CompressionCodecFactory factory = new CompressionCodecFactory(new Configuration()); //Input stream --- compressed stream //Create the object of the corresponding codec class according to the extension of the file (SMART) CompressionCodec gzipCodec = factory.getCodec(new Path("D:\\io\\decompress\\aaa.txt.gz")); if (gzipCodec != null){//Object without corresponding codec CompressionInputStream cis = gzipCodec.createInputStream( new FileInputStream("D:\\io\\decompress\\aaa.txt.gz")); //Output stream --- ordinary file stream FileOutputStream fos = new FileOutputStream("D:\\io\\compress\\aaa.txt"); //3. Document copying IOUtils.copyBytes(cis,fos,1024,true); } } }

summary

This chapter shares the knowledge points of compression. Understanding and using compression is one of the essential knowledge in our daily work. Reasonable use of compression can greatly improve the execution efficiency of our programs. Well, this chapter is all about these. The next chapter brings you the HA of Hadoop