Author: Hangzhou meichuang Technology Co., Ltd

Thanks to the open source feature of PostgreSQL, more and more third-party cluster management software fills the ease of use and reliability of PostgreSQL in cluster. patroni+etcd provides a series of cluster management schemes. Etcd is responsible for storing cluster status information to contact each node. patroni is responsible for providing high availability services for the cluster. The collection of the two provides high availability services for failover for PostgreSQL cluster. It is not only simple in configuration, but also rich in functions:

- Support manual and automatic failover

- Support one master and multiple slaves, cascade replication

- Support synchronous and asynchronous modes

- Support the use of watchdog to prevent brain crack

preparation in advance

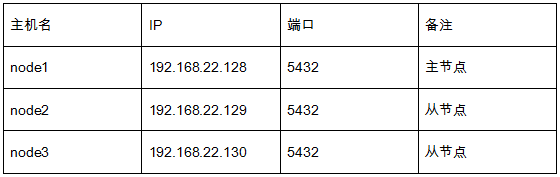

Node planning. In the experimental process, we use one master and two slaves to build a high availability environment.

Turn off host firewall

# systemctl stop firewalld.service # systemctl disable firewalld.service

Install postgresql and build a stream replication environment (this step is omitted)

Deploy etcd on each node

Install the necessary dependent packages and etcd software

# yum install -y gcc python-devel epel-release # yum install -y etcd

Edit the configuration file (the parameters to be modified are listed below, taking the master node as an example)

# vim /etc/etcd/etcd.conf #[Member] ETCD_DATA_DIR="/var/lib/etcd/node1.etcd" ETCD_LISTEN_PEER_URLS="http://192.168.22.128:2380" ETCD_LISTEN_CLIENT_URLS="http://192.168.22.128:2379,http://127.0.0.1:2379" #[Clustering] ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.22.128:2380" ETCD_ADVERTISE_CLIENT_URLS="http://192.168.22.128:2379" ETCD_INITIAL_CLUSTER="node1=http://192.168.22.128:2380,node2=http://192.168.22.129:2380, node3=http://192.168.22.130:2380"

Start the etcd cluster and set the boot self boot

# systemctl start etcd # systemctl enable etcd

Deploy python3 on each node

We need to use a higher version of Python to use the patroni service. The general linux environment has a built-in Python environment of version 2.7. Therefore, we need to upgrade python. Here, we use the source code compilation and installation method

# wget -c https://www.python.org/ftp/python/3.8.2/Python-3.8.2.tar.xz # ./configure # make # make install

Delete the original 2.7 version of soft link and add a new soft link to use Python 3

# rm -f /usr/bin/python # ln -s /usr/local/bin/python3 /usr/bin/python

Deploy patroni on each node

Install the necessary dependency packages and patroni software

# pip3 install psycopg2-binary -i https://mirrors.aliyun.com/pypi/simple/ # pip3 install patroni -i https://mirrors.aliyun.com/pypi/simple/

Modify the patroni configuration file (take the master node as an example)

# vim /etc/patroni.yml

scope: pgsql

namespace: /pgsql/

name: pgsql_node2

restapi:

listen: 192.168.22.128:8008

connect_address: 192.168.22.128:8008

etcd:

host: 192.168.22.128:2379

bootstrap:

# this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster

# and all other cluster members will use it as a `global configuration`

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

master_start_timeout: 300

synchronous_mode: false

postgresql:

use_pg_rewind: true

use_slots: true

parameters:

listen_addresses: "0.0.0.0"

port: 5432

wal_level: logical

hot_standby: "on"

wal_keep_segments: 100

max_wal_senders: 10

max_replication_slots: 10

wal_log_hints: "on"

# archive_mode: "on"

# archive_timeout: 1800s

# archive_command: gzip < %p > /data/backup/pgwalarchive/%f.gz

# recovery_conf:

# restore_command: gunzip < /data/backup/pgwalarchive/%f.gz > %p

postgresql:

listen: 0.0.0.0:5432

connect_address: 192.168.22.128:5432

data_dir: /pgdata/patr2

bin_dir: /usr/pgsql-12/bin

# config_dir: /etc/postgresql/9.6/main

authentication:

replication:

username: repl

password: repl

superuser:

username: postgres

password: postgres

#watchdog:

# mode: automatic # Allowed values: off, automatic, required

# device: /dev/watchdog

# safety_margin: 5

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

Configuring the patroni service unit

# vim /etc/systemd/system/patroni.service [Unit] Description=Runners to orchestrate a high-availability PostgreSQL After=syslog.target network.target [Service] Type=simple User=postgres Group=postgres #StandardOutput=syslog ExecStart=/usr/local/bin/patroni /etc/patroni.yml ExecReload=/bin/kill -s HUP $MAINPID KillMode=process TimeoutSec=30 Restart=no [Install] WantedBy=multi-user.target

Start the patroni service

# systemctl start patroni

Of course, we can also directly use the patroni command to start the patroni service. The service unit is configured for easier use.

#/usr/local/bin/patroni /etc/patroni.yml > patroni.log 2>&1 &

Cluster environment usage

View node information

# patronictl -c /etc/patroni.yml list + Cluster: pgsql (6972099274779350082)+------+---------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +-------------+---------------------+--------+---------+----+-----------+ | pgsql_node1 | 192.168.22.128:5432 | Leader | running | 3 | | | pgsql_node2 | 192.168.22.129:5432 | | running | 3 | 0 | | pgsql_node3 | 192.168.22.130:5432 | | running | 3 | 0 | +-------------+---------------------+--------+---------+----+-----------+

Manually switch between master and slave, and select an available slave node to make it the master node role

# patronictl -c /etc/patroni.yml switchover Master [pgsql_node1]: pgsql_node1 Candidate ['pgsql_node2', 'pgsql_node3'] []: pgsql_node2 When should the switchover take place (e.g. 2021-06-20T11:42 ) [now]: now

View cluster status

# patronictl -c /etc/patroni.yml list + Cluster: pgsql (6972099274779350082)+------+---------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +-------------+---------------------+--------+---------+----+-----------+ | pgsql_node1 | 192.168.22.128:5432 | | running | 3 | 0 | | pgsql_node2 | 192.168.22.129:5432 | Leader | running | 3 | | | pgsql_node3 | 192.168.22.130:5432 | | running | 3 | 0 | +-------------+---------------------+--------+---------+----+-----------+

Automatically switch between master and slave. Restart the host of node1 node. Check the cluster status. node2 is automatically promoted to primary. If only the node instance is closed, patroni will automatically pull up the database service again.

# patronictl -c /etc/patroni.yml list + Cluster: pgsql (6972099274779350082)+------+---------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +-------------+---------------------+--------+---------+----+-----------+ | pgsql_node2 | 192.168.22.129:5432 | Leader | running | 3 | | | pgsql_node3 | 192.168.22.130:5432 | | running | 3 | 0 | +-------------+---------------------+--------+---------+----+-----------+

Initialize a node. When a node is out of sync with the master database or the node runs abnormally, you can use this method to initialize the node information to rejoin the cluster.

# patronictl -c /etc/patroni.yml reinit pgsql + Cluster: pgsql (6972099274779350082)+------+---------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +-------------+---------------------+--------+---------+----+-----------+ | pgsql_node1 | 192.168.22.128:5432 | | running | 3 | 0 | | pgsql_node2 | 192.168.22.129:5432 | Leader | running | 3 | | | pgsql_node3 | 192.168.22.130:5432 | | running | 3 | 0 | +-------------+---------------------+--------+---------+----+-----------+ Select the following node names to add: pgsql_node3 Are you sure you want to reinitialize members pgsql_node3?[y/N]: y Success: for member pgsql_node3 Perform initialization

patroni is a template developed based on python. etcd cluster is developed according to Raft algorithm and protocol. It is a highly consistent and distributed key value database. The combination of the two complements each other, making the use and maintenance of PostgreSQL cluster more convenient and transparent.