Build Ceph cluster

This article uses the Ceph Octopus release as an example.

Unless otherwise specified, run the following commands on all nodes.

1, System resource planning

| Node name | Hostname | CPU / memory | Disk | Network card | IP address | OS |
|---|---|---|---|---|---|---|
| Deploy | deploy.ceph.local | 2C/4G | 128G+3*20G | ens33 | 192.168.0.10 | CentOS7 |
| Node1 | node1.ceph.local | 2C/4G | 128G+3*20G | ens33 / ens37 | 192.168.0.11 / 10.0.0.11 | CentOS7 |
| Node2 | node2.ceph.local | 2C/4G | 128G+3*20G | ens33 / ens37 | 192.168.0.12 / 10.0.0.12 | CentOS7 |
| Node3 | node3.ceph.local | 2C/4G | 128G+3*20G | ens33 / ens37 | 192.168.0.13 / 10.0.0.13 | CentOS7 |
| Client | client.ceph.local | 2C/4G | 128G+3*20G | ens33 | 192.168.0.100 | CentOS7 |

2, Build Ceph storage cluster

1. Basic system configuration

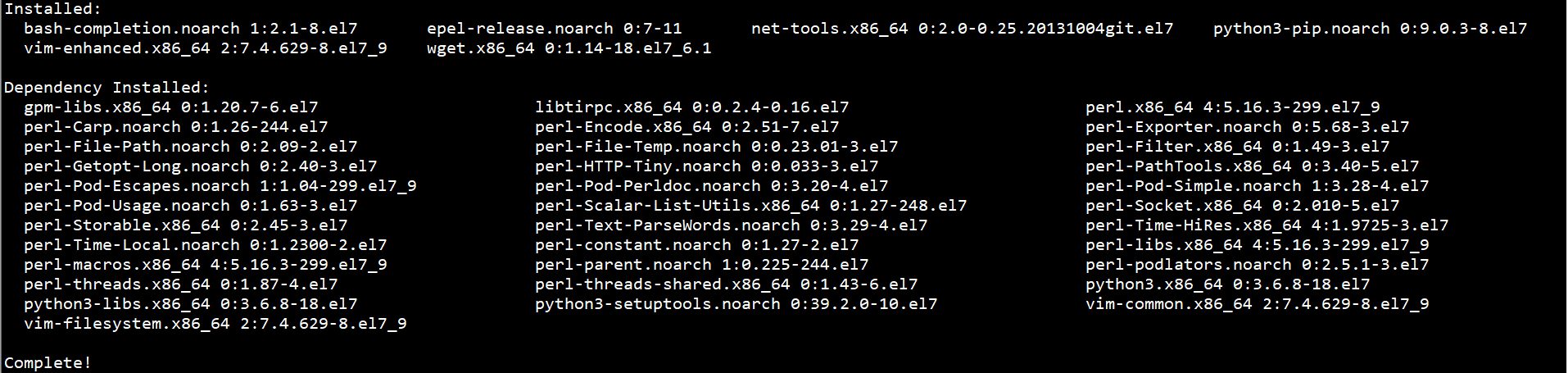

1. Install basic software

yum -y install vim wget net-tools python3-pip epel-release bash-completion

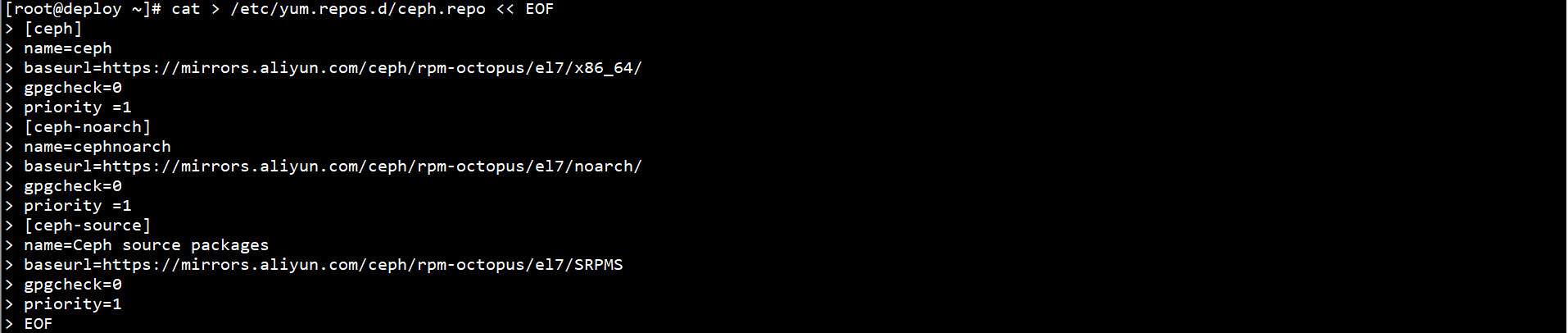

2. Set up the Ceph mirror repository

cat > /etc/yum.repos.d/ceph.repo << EOF
[ceph]
name=ceph
baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/x86_64/
gpgcheck=0
priority=1

[ceph-noarch]
name=cephnoarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/noarch/
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/SRPMS
gpgcheck=0
priority=1
EOF

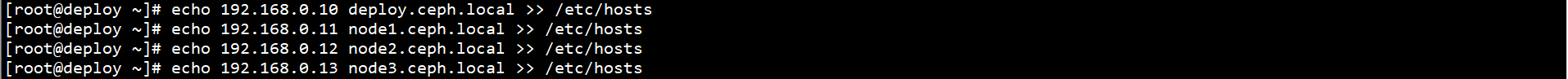
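If you later need a different release or mirror, the heredoc above can be wrapped in a small function. This is a sketch under assumptions: the function name write_ceph_repo and its three parameters (release, mirror root, output file) are hypothetical, and it assumes the mirror keeps the same rpm-<release>/el7 layout used above.

```shell
#!/usr/bin/env bash
# Sketch: generate the same ceph.repo as above with the release and mirror
# as parameters. write_ceph_repo and its defaults are illustrative only.
write_ceph_repo() {
  local release="${1:-octopus}"                          # Ceph release name
  local mirror="${2:-https://mirrors.aliyun.com/ceph}"   # mirror root URL
  local out="${3:-/etc/yum.repos.d/ceph.repo}"           # file to write
  local base="${mirror}/rpm-${release}/el7"
  # Unquoted EOF so that ${base} is expanded inside the heredoc.
  cat > "$out" << EOF
[ceph]
name=ceph
baseurl=${base}/x86_64/
gpgcheck=0
priority=1

[ceph-noarch]
name=cephnoarch
baseurl=${base}/noarch/
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=${base}/SRPMS
gpgcheck=0
priority=1
EOF
}

# Usage (as root): write_ceph_repo octopus && yum makecache
```

Called with no arguments it reproduces the file above; yum makecache then refreshes the repository metadata.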

3. Set name resolution

echo 192.168.0.10 deploy.ceph.local >> /etc/hosts
echo 192.168.0.11 node1.ceph.local >> /etc/hosts
echo 192.168.0.12 node2.ceph.local >> /etc/hosts
echo 192.168.0.13 node3.ceph.local >> /etc/hosts

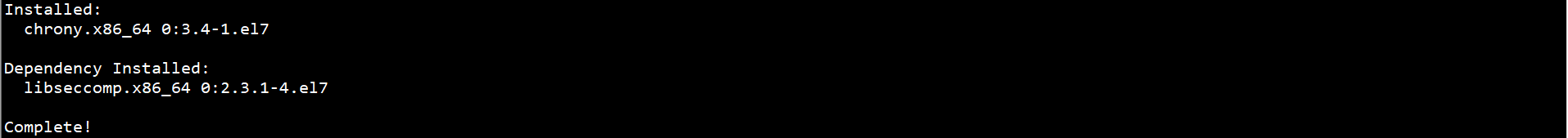
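Running the echo commands twice appends duplicate entries. A minimal idempotent variant, assuming entries are written as exact "IP hostname" lines, only appends a line that is not already present. The names add_host, add_cluster_hosts and the HOSTS_FILE override are hypothetical, for illustration.

```shell
#!/usr/bin/env bash
# Sketch: append "IP HOSTNAME" only if that exact line is missing, so this
# step can be re-run safely. HOSTS_FILE is an illustrative override.
add_host() {
  local ip="$1" name="$2" file="${HOSTS_FILE:-/etc/hosts}"
  # -x: whole-line match; -F: fixed string, so the dots in the IP are literal.
  grep -qxF "$ip $name" "$file" || echo "$ip $name" >> "$file"
}

add_cluster_hosts() {
  add_host 192.168.0.10 deploy.ceph.local
  add_host 192.168.0.11 node1.ceph.local
  add_host 192.168.0.12 node2.ceph.local
  add_host 192.168.0.13 node3.ceph.local
}

# Usage (as root, on every node): add_cluster_hosts
```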

4. Set NTP

yum -y install chrony

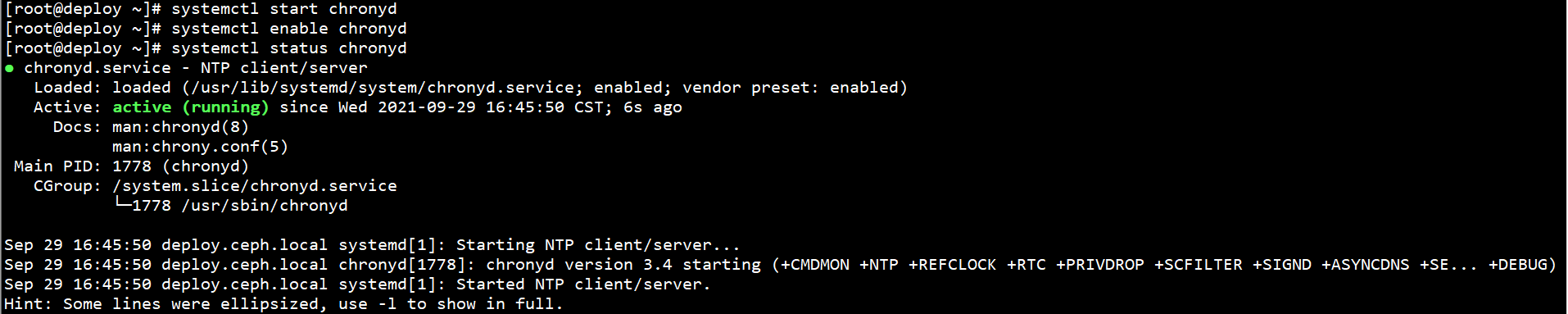

systemctl start chronyd
systemctl enable chronyd
systemctl status chronyd

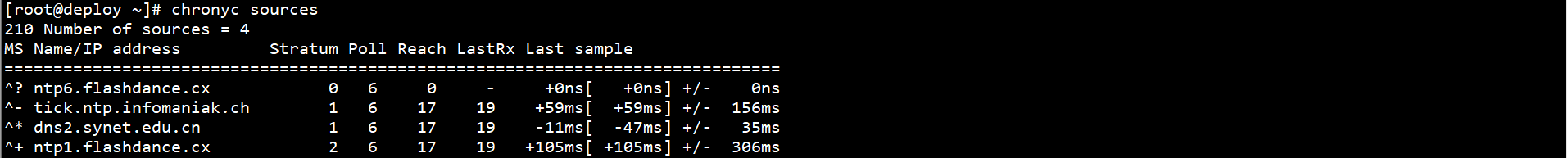

chronyc sources
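In the chronyc sources output, the second character of each source line is its state, and * marks the source chronyd is currently synchronized to. The sketch below turns that into a pass/fail check; has_synced_source is a hypothetical helper, and it assumes the default (non-verbose) output format.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if `chronyc sources` (read on stdin) lists a source
# whose state column is '*', i.e. the source chronyd is synchronised to.
has_synced_source() {
  # First char: mode (^ server, = peer, # local clock); second char: state.
  grep -Eq '^[=#^]\*'
}

# Usage: chronyc sources | has_synced_source && echo "time is synchronised"
```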

5. Disable the firewall and SELinux

systemctl stop firewalld
systemctl disable firewalld
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

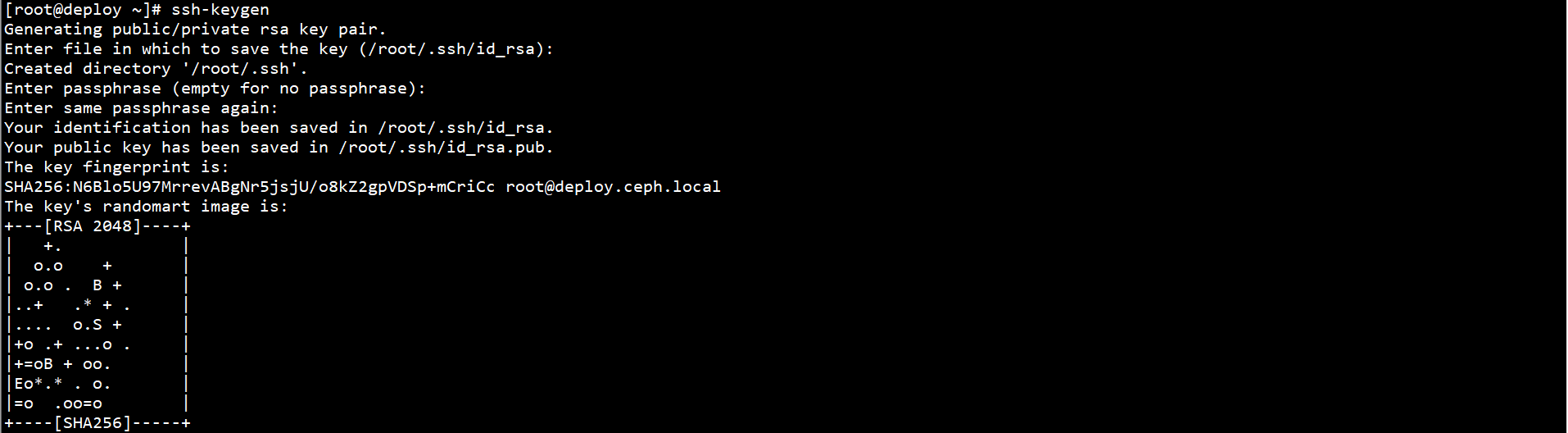

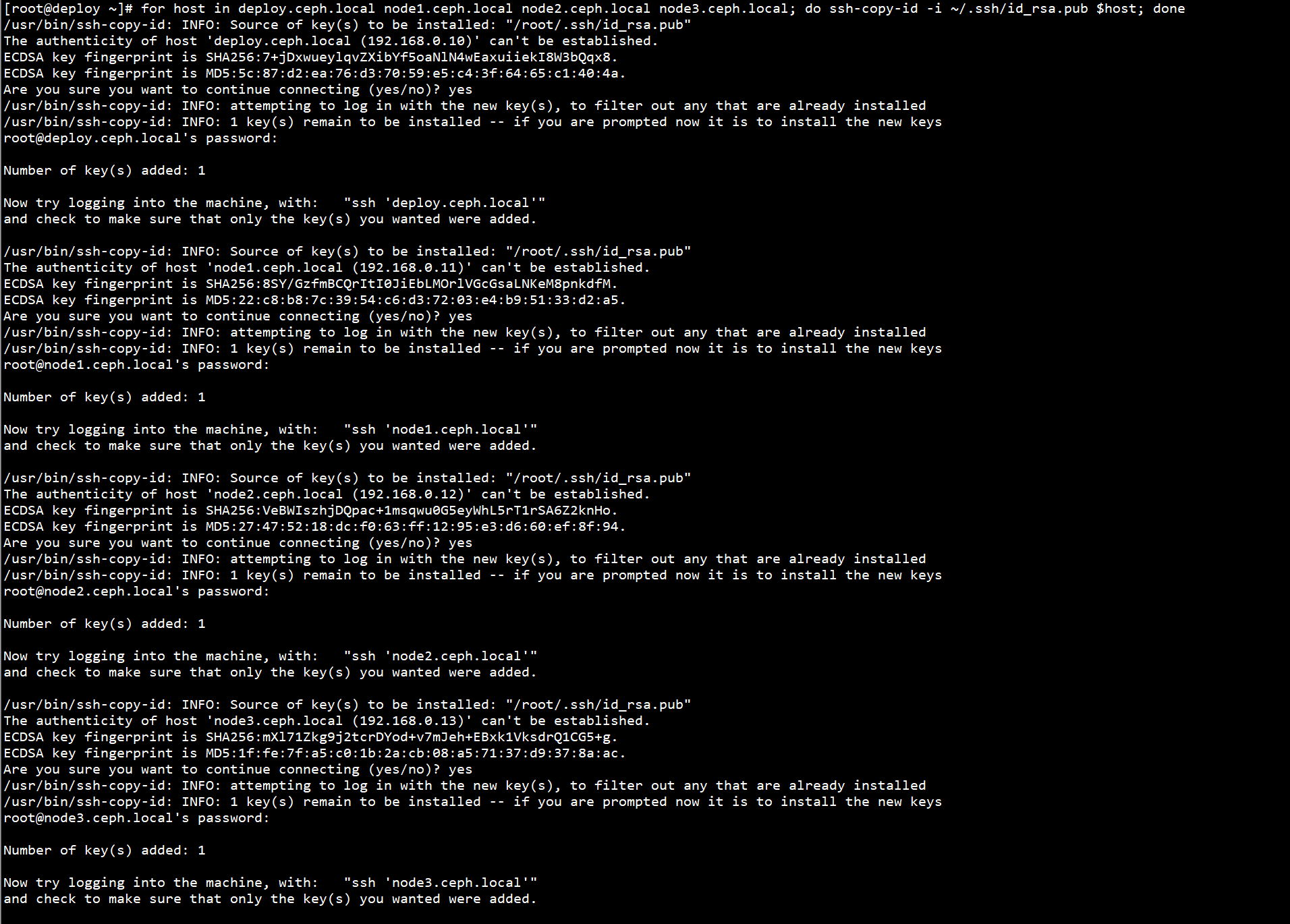
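setenforce 0 only switches SELinux to permissive mode until the next reboot; the sed edit makes it disabled from the next boot on. A sketch for checking both; selinux_boot_mode and the SELINUX_CONFIG override are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: print the SELinux mode that will apply after the next reboot,
# read from the config file. SELINUX_CONFIG is an illustrative override.
selinux_boot_mode() {
  local cfg="${SELINUX_CONFIG:-/etc/selinux/config}"
  # Value of the last uncommented SELINUX= line (SELINUXTYPE= is not matched).
  sed -n 's/^SELINUX=//p' "$cfg" | tail -n 1
}

# Usage: echo "next boot: $(selinux_boot_mode), now: $(getenforce)"
# After the commands above this should show "disabled" and "Permissive",
# and `systemctl is-enabled firewalld` should print "disabled".
```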

6. Set up password-free SSH login

On the Deploy node, configure password-free SSH to all nodes, including the Deploy node itself:

ssh-keygen

for host in deploy.ceph.local node1.ceph.local node2.ceph.local node3.ceph.local; do ssh-copy-id -i ~/.ssh/id_rsa.pub $host; done
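To confirm that key-based login works before running ceph-deploy, which reaches the nodes over SSH, you can run a non-interactive command on each host. This sketch assumes the key generated above and the current user; check_passwordless_ssh is a hypothetical helper. BatchMode=yes makes ssh fail instead of prompting for a password.

```shell
#!/usr/bin/env bash
# Sketch: report, per host, whether SSH works without a password prompt.
# Returns non-zero if any host fails.
check_passwordless_ssh() {
  local host failed=0
  for host in "$@"; do
    # BatchMode=yes: never prompt; ConnectTimeout avoids hanging on dead hosts.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "ok:   $host"
    else
      echo "FAIL: $host"
      failed=1
    fi
  done
  return "$failed"
}

# Usage (on the Deploy node):
# check_passwordless_ssh deploy.ceph.local node1.ceph.local node2.ceph.local node3.ceph.local
```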

2. Create Ceph storage cluster

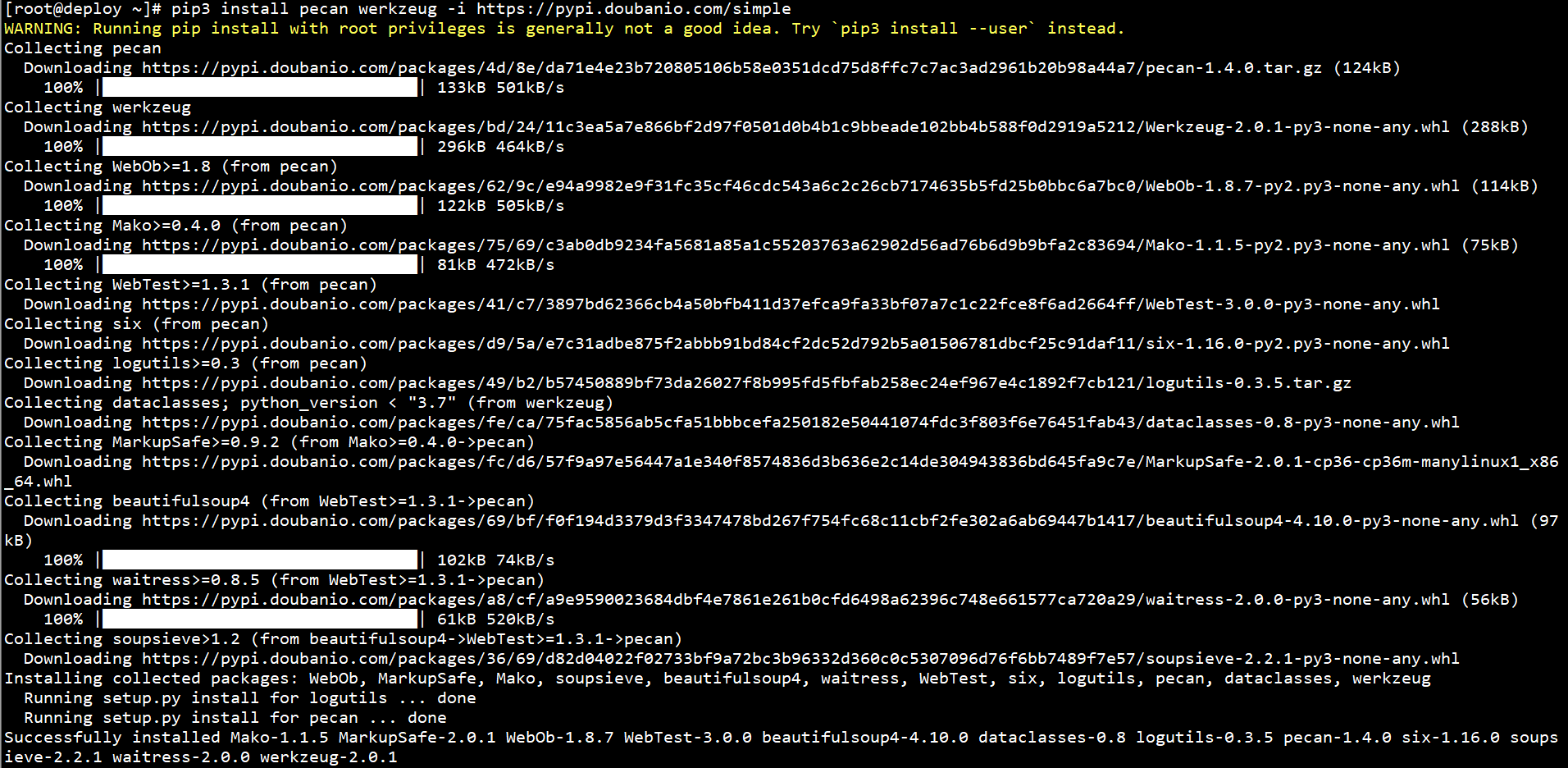

1. Install Python modules

Install the Python modules on the Deploy node and on all storage nodes:

pip3 install pecan werkzeug -i https://pypi.doubanio.com/simple

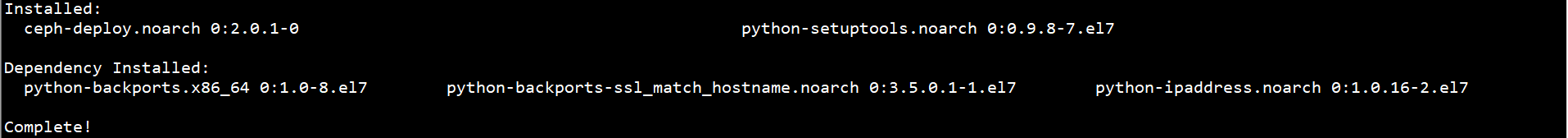

2. Install ceph-deploy

Install ceph-deploy on the Deploy node:

yum -y install ceph-deploy python-setuptools

3. Create directory

On the Deploy node, create a working directory to hold the configuration file and keyrings that ceph-deploy generates:

mkdir /root/cluster/

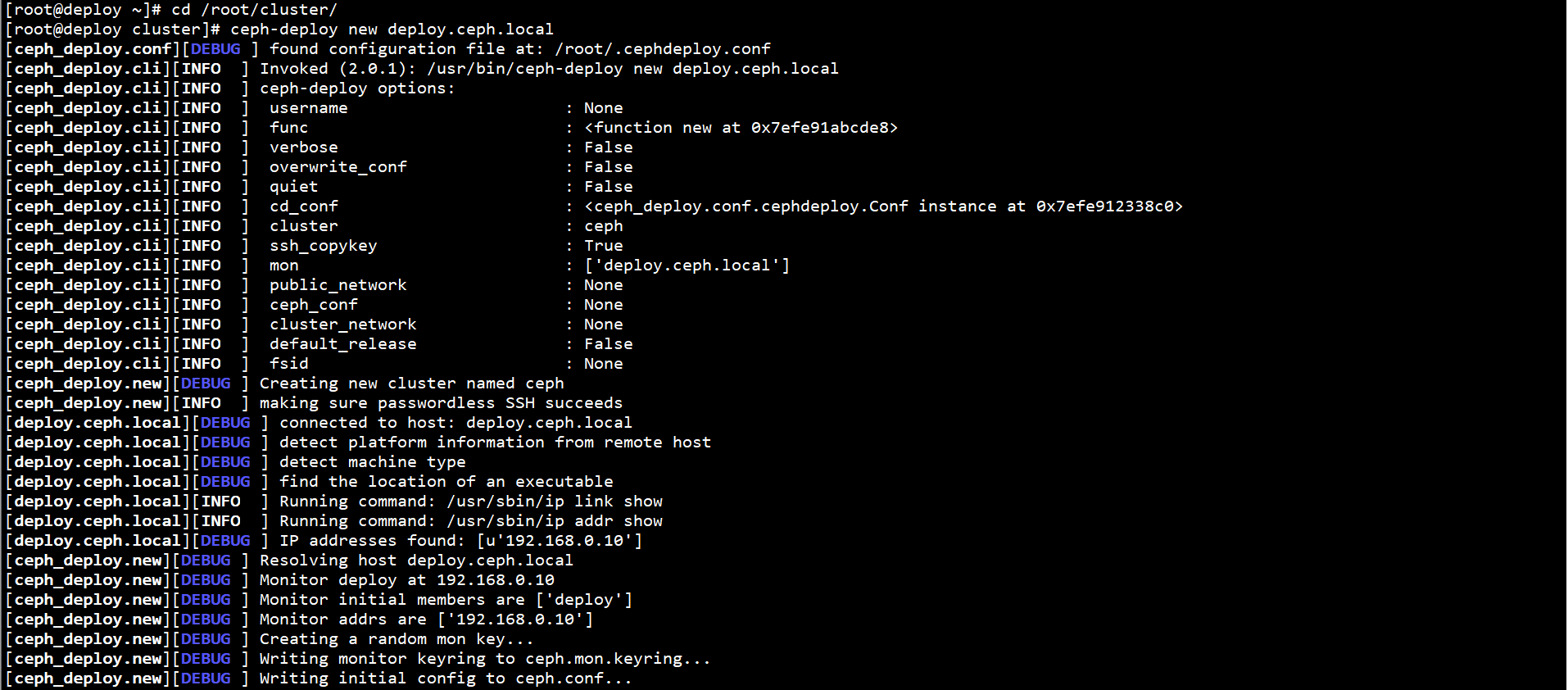

4. Create cluster

Create a cluster on the Deploy node:

cd /root/cluster/
ceph-deploy new deploy.ceph.local

5. Modify the configuration file

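On the Deploy node, edit /root/cluster/ceph.conf to specify the public (front-end) and cluster (back-end) networks and to allow pools to be deleted. The two network options belong in the [global] section generated by ceph-deploy new, so append them directly after it, followed by the [mon] section: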

public network = 192.168.0.0/24
cluster network = 10.0.0.0/24

[mon]
mon allow pool delete = true
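Each node needs an address inside every network it serves: per the planning table, the ens33 addresses (192.168.0.x) belong to the public network and the storage nodes' ens37 addresses (10.0.0.x) to the cluster network. A pure-bash sketch for checking that an address falls within a CIDR range; ip_to_int and in_subnet are hypothetical helpers, and only IPv4 is handled.

```shell
#!/usr/bin/env bash
# Sketch: IPv4 CIDR membership test using bash arithmetic only.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  # 10# forces base 10 so octets such as "08" are not parsed as octal.
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

in_subnet() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  # Netmask with the top $bits bits set, e.g. /24 -> 0xFFFFFF00.
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  # True when both addresses share the same network prefix.
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Usage: in_subnet 10.0.0.11 10.0.0.0/24 && echo "in cluster network"
```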

Whenever you change the configuration file after the daemons are running, push it to every node and restart the affected daemons:

ceph-deploy --overwrite-conf config push deploy.ceph.local
ceph-deploy --overwrite-conf config push node1.ceph.local
ceph-deploy --overwrite-conf config push node2.ceph.local
ceph-deploy --overwrite-conf config push node3.ceph.local
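ceph-deploy config push accepts several hosts at once, so the four commands above can be collapsed into one. The sketch below also performs the restart, assuming root SSH access to every node and that restarting ceph.target (the systemd target grouping all Ceph daemons on a host) is acceptable in your environment. NODES, CLUSTER_DIR, DRY_RUN, run and push_conf_and_restart are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: push ceph.conf to every node, then restart the Ceph daemons there.
# DRY_RUN=1 only prints the commands.
NODES=(deploy.ceph.local node1.ceph.local node2.ceph.local node3.ceph.local)

run() {
  # Print the command in dry-run mode, otherwise execute it.
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

push_conf_and_restart() {
  local host
  # ceph-deploy reads ceph.conf from the current directory.
  cd "${CLUSTER_DIR:-/root/cluster}" || return 1
  # --overwrite-conf lets it replace an existing, different remote ceph.conf.
  run ceph-deploy --overwrite-conf config push "${NODES[@]}" || return 1
  for host in "${NODES[@]}"; do
    # Restarts every Ceph daemon on the host. On a loaded cluster, restart
    # one host at a time and wait for HEALTH_OK in between.
    run ssh "$host" systemctl restart ceph.target || return 1
  done
}

# Usage (on the Deploy node): DRY_RUN=1 push_conf_and_restart
```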

6. Install Ceph

From the Deploy node, install Ceph on every node:

ceph-deploy install deploy.ceph.local
ceph-deploy install node1.ceph.local
ceph-deploy install node2.ceph.local
ceph-deploy install node3.ceph.local

7. Initialize MON node

Initialize the MON node from the Deploy node:

cd /root/cluster/
ceph-deploy mon create-initial

8. View node disks

From the Deploy node, list the disks on each storage node:

cd /root/cluster/
ceph-deploy disk list node1.ceph.local
ceph-deploy disk list node2.ceph.local
ceph-deploy disk list node3.ceph.local

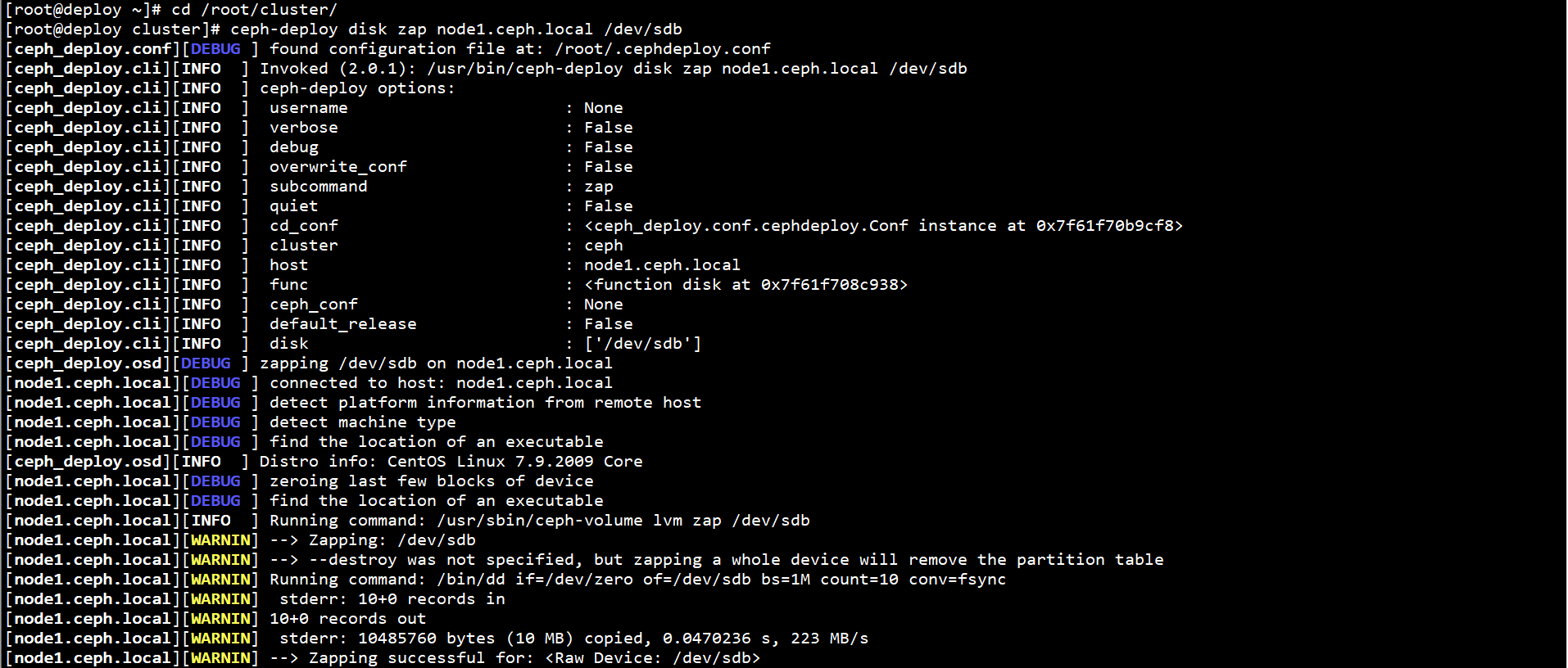

9. Wipe the node disks

From the Deploy node, wipe (zap) the data disks on each storage node. This destroys any existing data on them:

cd /root/cluster/
ceph-deploy disk zap node1.ceph.local /dev/sdb
ceph-deploy disk zap node1.ceph.local /dev/sdc
ceph-deploy disk zap node1.ceph.local /dev/sdd
ceph-deploy disk zap node2.ceph.local /dev/sdb
ceph-deploy disk zap node2.ceph.local /dev/sdc
ceph-deploy disk zap node2.ceph.local /dev/sdd
ceph-deploy disk zap node3.ceph.local /dev/sdb
ceph-deploy disk zap node3.ceph.local /dev/sdc
ceph-deploy disk zap node3.ceph.local /dev/sdd

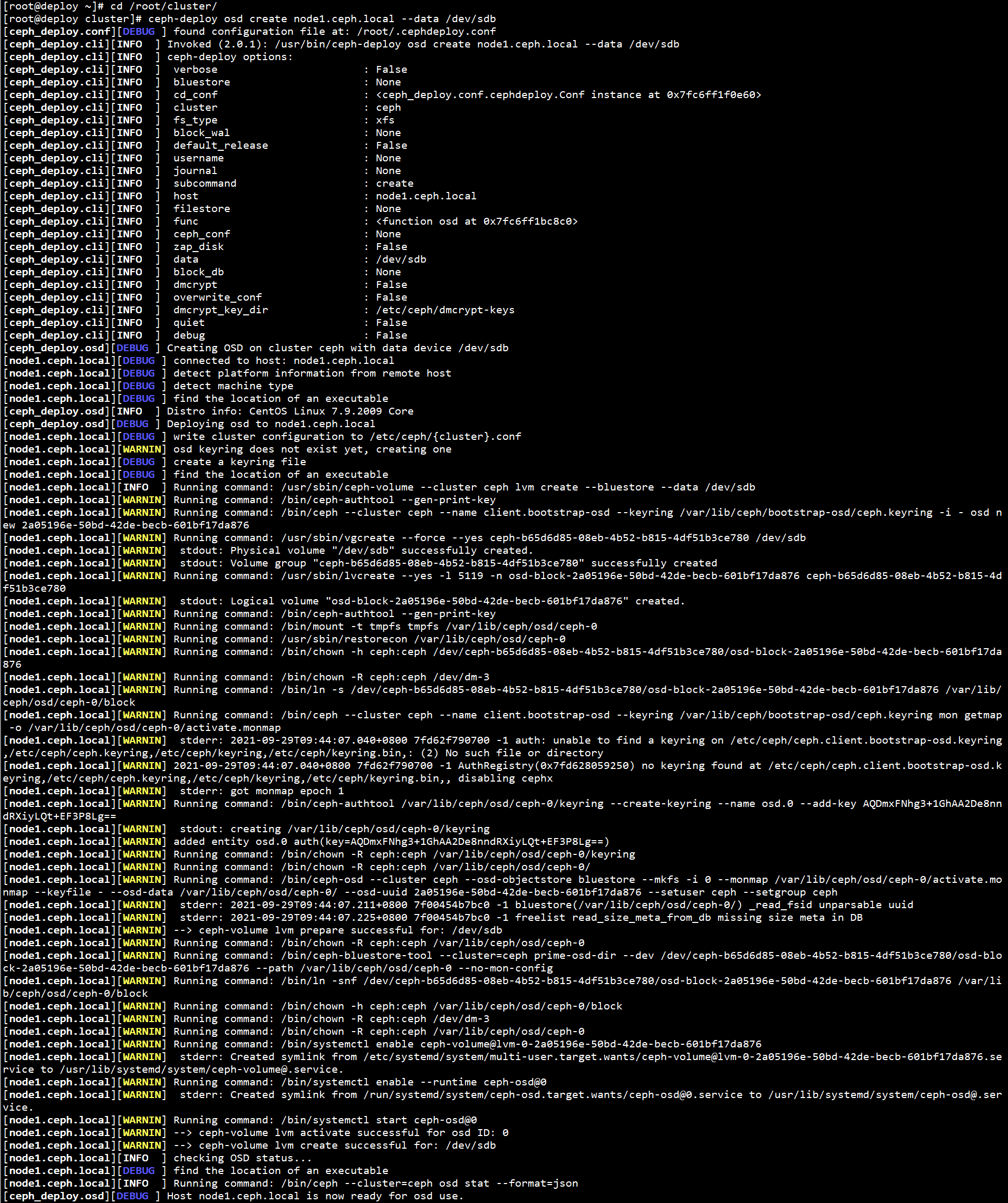
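The nine zap commands follow a fixed pattern (three storage nodes, three data disks each), so a nested loop can generate them. In this sketch the disk names /dev/sdb, /dev/sdc and /dev/sdd are taken from the commands above and should be confirmed with ceph-deploy disk list first; STORAGE_NODES, DATA_DISKS, DRY_RUN, run and zap_all_disks are hypothetical names. Running it with DRY_RUN=1 first only prints the commands, which is worth doing before anything destructive.

```shell
#!/usr/bin/env bash
# Sketch: zap every data disk on every storage node.
# WARNING: zap destroys all data on the listed disks. DRY_RUN=1 only prints.
STORAGE_NODES=(node1.ceph.local node2.ceph.local node3.ceph.local)
DATA_DISKS=(/dev/sdb /dev/sdc /dev/sdd)

run() {
  # Print the command in dry-run mode, otherwise execute it.
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

zap_all_disks() {
  local node disk
  for node in "${STORAGE_NODES[@]}"; do
    for disk in "${DATA_DISKS[@]}"; do
      run ceph-deploy disk zap "$node" "$disk"
    done
  done
}

# Usage (on the Deploy node, in /root/cluster/): DRY_RUN=1 zap_all_disks
```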

10. Create OSD

From the Deploy node, create one OSD on each data disk:

cd /root/cluster/
ceph-deploy osd create node1.ceph.local --data /dev/sdb
ceph-deploy osd create node1.ceph.local --data /dev/sdc
ceph-deploy osd create node1.ceph.local --data /dev/sdd
ceph-deploy osd create node2.ceph.local --data /dev/sdb
ceph-deploy osd create node2.ceph.local --data /dev/sdc
ceph-deploy osd create node2.ceph.local --data /dev/sdd
ceph-deploy osd create node3.ceph.local --data /dev/sdb
ceph-deploy osd create node3.ceph.local --data /dev/sdc
ceph-deploy osd create node3.ceph.local --data /dev/sdd

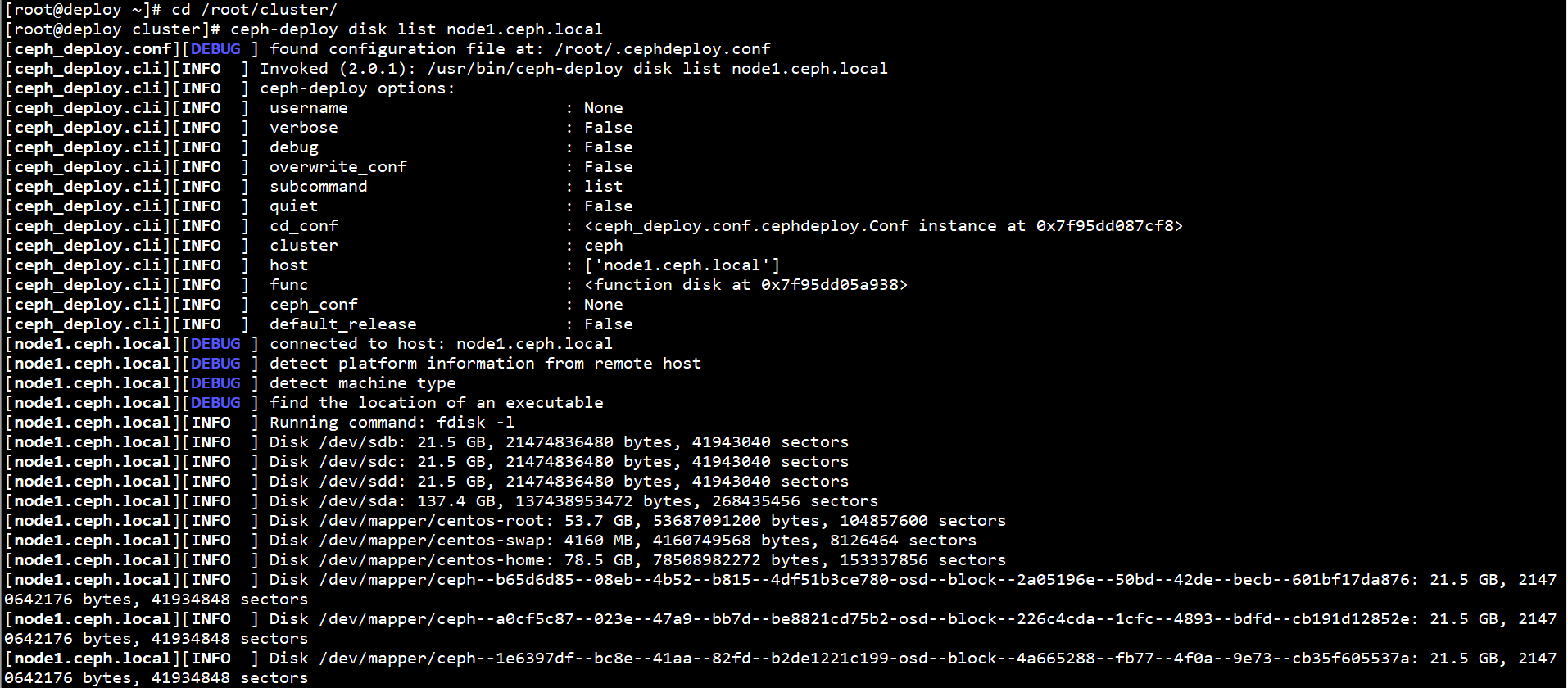
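The OSD creation commands follow the same node-by-disk pattern. This sketch stops at the first failing command and reports how many OSDs it requested (3 nodes x 3 disks = 9 in this plan). STORAGE_NODES, DATA_DISKS, DRY_RUN, run and create_all_osds are hypothetical names, and the disk list is assumed to match the zapped disks.

```shell
#!/usr/bin/env bash
# Sketch: create one OSD per data disk on each storage node, stopping at the
# first failure. DRY_RUN=1 only prints the commands.
STORAGE_NODES=(node1.ceph.local node2.ceph.local node3.ceph.local)
DATA_DISKS=(/dev/sdb /dev/sdc /dev/sdd)

run() {
  # Print the command in dry-run mode, otherwise execute it.
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

create_all_osds() {
  local node disk count=0
  for node in "${STORAGE_NODES[@]}"; do
    for disk in "${DATA_DISKS[@]}"; do
      run ceph-deploy osd create "$node" --data "$disk" || return 1
      count=$((count + 1))
    done
  done
  echo "requested $count OSDs"
}

# Usage (on the Deploy node, in /root/cluster/): create_all_osds
```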

11. View disk and partition information

View disk and partition information on the Deploy node:

cd /root/cluster/
ceph-deploy disk list node1.ceph.local
ceph-deploy disk list node2.ceph.local
ceph-deploy disk list node3.ceph.local

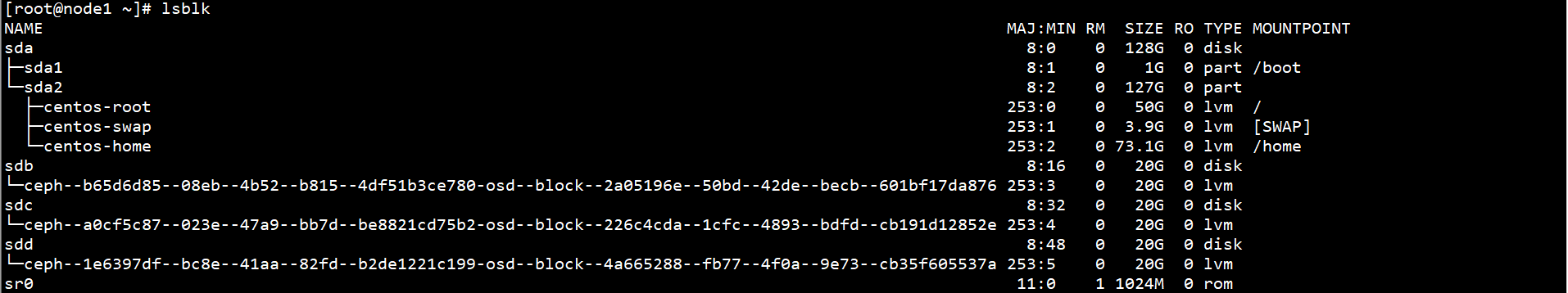

View disk and partition information on each storage node:

lsblk

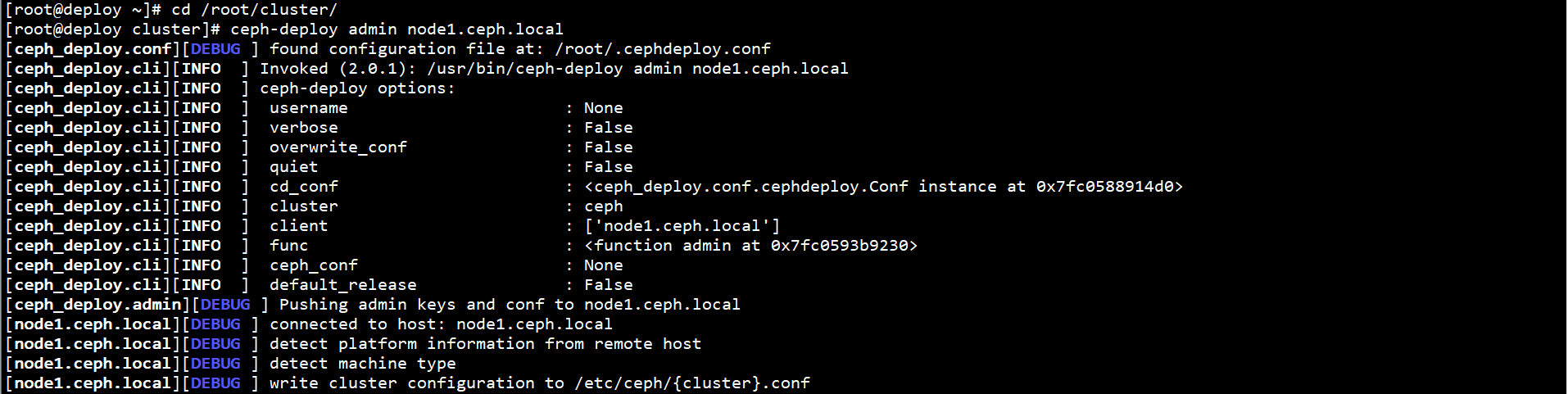

12. Copy configuration and key

From the Deploy node, copy the configuration file and the admin keyring to the storage nodes:

cd /root/cluster/
ceph-deploy admin node1.ceph.local
ceph-deploy admin node2.ceph.local
ceph-deploy admin node3.ceph.local

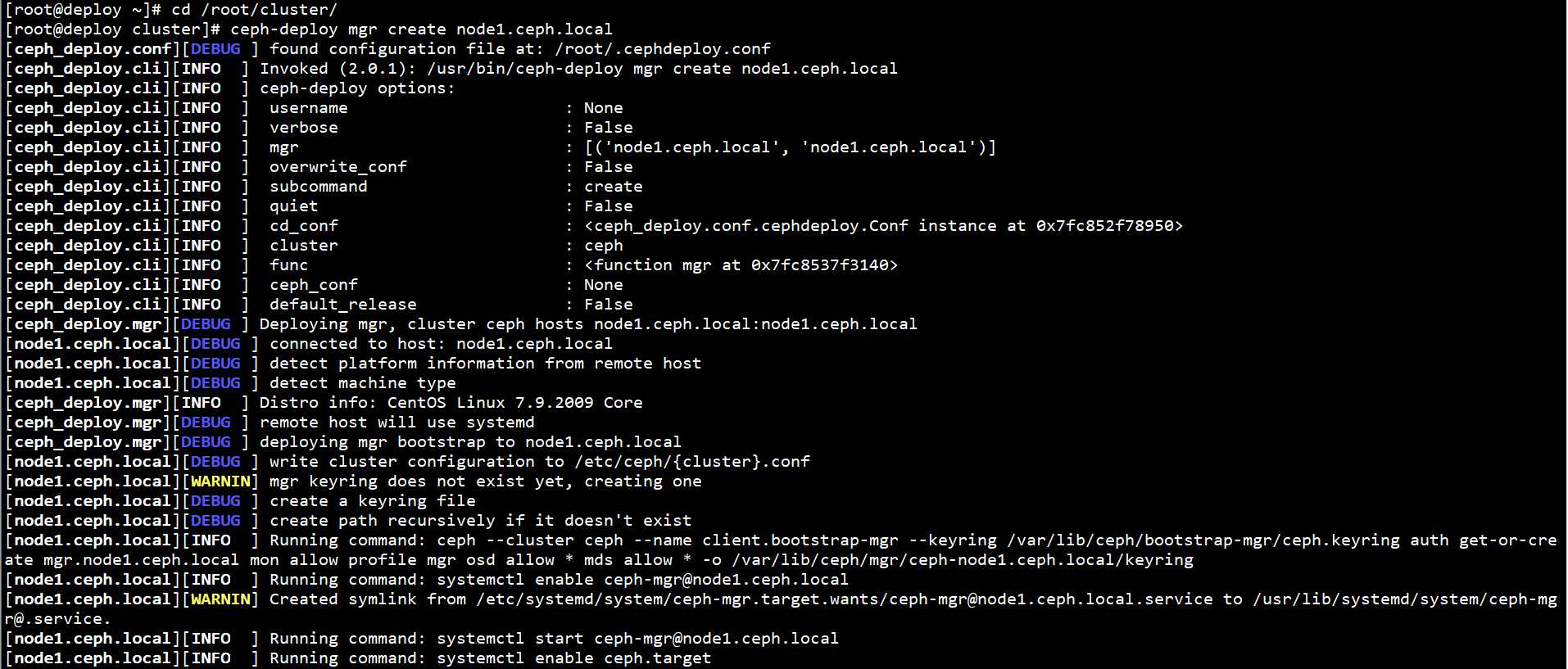

13. Initialize MGR node

From the Deploy node, create a MGR daemon on each storage node:

cd /root/cluster/
ceph-deploy mgr create node1.ceph.local
ceph-deploy mgr create node2.ceph.local
ceph-deploy mgr create node3.ceph.local

14. Copy keyring file

On the Deploy node, copy the keyring files to the Ceph configuration directory, /etc/ceph/:

cp /root/cluster/*.keyring /etc/ceph/

15. Disable insecure global_id reclaim

On the Deploy node, stop the monitors from allowing insecure global_id reclaim:

ceph config set mon auth_allow_insecure_global_id_reclaim false

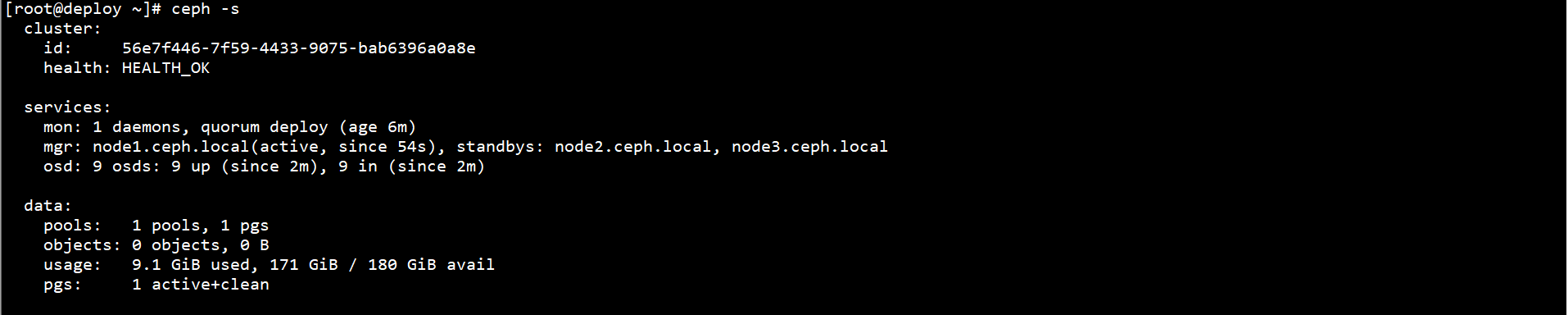

16. View cluster status

On the Deploy node, check the Ceph cluster status:

ceph -s

ceph health

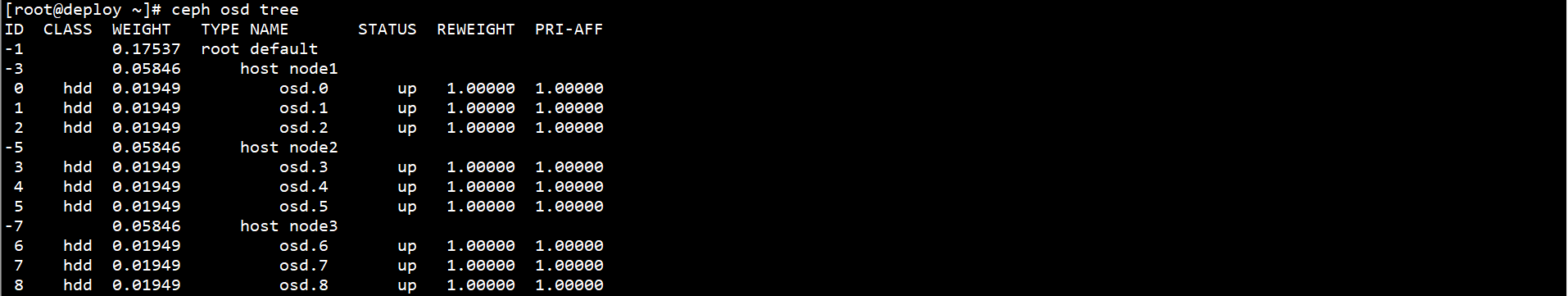
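Right after deployment the cluster may take a while to reach HEALTH_OK. A polling sketch, assuming ceph health prints a status word such as HEALTH_OK or HEALTH_WARN first; wait_for_health_ok is a hypothetical helper, and its default of 30 checks 10 seconds apart is arbitrary.

```shell
#!/usr/bin/env bash
# Sketch: poll `ceph health` until it reports HEALTH_OK or the retry limit
# is reached. Arguments: number of checks, seconds between checks.
wait_for_health_ok() {
  local attempts="${1:-30}" interval="${2:-10}" status i
  for (( i = 1; i <= attempts; i++ )); do
    status=$(ceph health 2>/dev/null)
    # Compare only the first word, e.g. "HEALTH_WARN 1 pool(s) ..." -> HEALTH_WARN.
    if [ "${status%% *}" = "HEALTH_OK" ]; then
      echo "HEALTH_OK after $i check(s)"
      return 0
    fi
    echo "check $i/$attempts: ${status:-no response}"
    sleep "$interval"
  done
  return 1
}

# Usage (on the Deploy node): wait_for_health_ok 30 10
```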

View OSD status on the Deploy node:

ceph osd stat
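With three storage nodes and three data disks each, this plan should end up with nine OSDs, all up and in. A sketch that parses the one-line summary of ceph osd stat, assuming Octopus-style text such as "9 osds: 9 up (since 5m), 9 in (since 5m); epoch: e57" (the exact wording may differ between releases); osds_all_up_in is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: succeed if every OSD in a `ceph osd stat` summary line is up and
# in. The optional second argument is the expected total OSD count.
osds_all_up_in() {
  local line="$1" expected="${2:-}" n_total n_up n_in
  # Extract "<total> <up> <in>"; prints nothing if the line does not match.
  read -r n_total n_up n_in <<< "$(sed -nE \
    's/^([0-9]+) osds: ([0-9]+) up.*, ([0-9]+) in.*/\1 \2 \3/p' <<< "$line")"
  [ -n "$n_total" ] && [ "$n_up" = "$n_total" ] && [ "$n_in" = "$n_total" ] &&
    { [ -z "$expected" ] || [ "$n_total" = "$expected" ]; }
}

# Usage: osds_all_up_in "$(ceph osd stat)" 9 && echo "all 9 OSDs are up and in"
```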

View the OSD directory tree on the Deploy node:

ceph osd tree