Build a Kubernetes cluster with kubeadm

kubeadm

kubeadm is a Kubernetes deployment tool that provides kubeadm init and kubeadm join for rapid deployment of Kubernetes clusters. A cluster can be deployed with two commands:

- Create a Master node using kubeadm init

- Use kubeadm join <Master node IP and port> to join a Node to the current cluster

Goals

- Install Docker, kubeadm, kubelet and kubectl on all nodes

- Deploy Kubernetes Master

- Deploy the container network plug-in

- Deploy the Kubernetes Node and join the node to the Kubernetes cluster

- Deploy the Dashboard Web page to visually view Kubernetes resources

Environment preparation

| Role | IP |
|---|---|
| k8s-master | 192.168.1.16 |
| k8s-node1 | 192.168.1.30 |
| k8s-node2 | 192.168.1.31 |

Installation

System initialization

Turn off the firewall

systemctl stop firewalld
systemctl disable firewalld

Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config && setenforce 0

Disable swap

# Disable temporarily
swapoff -a
# Disable permanently (takes effect after the next reboot)
sed -ri 's/.*swap.*/#&/' /etc/fstab
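The sed pattern above comments out every line containing the string swap, including lines that are already commented. A slightly more conservative variant can be sketched as follows. The function name comment_out_swap and the .bak backup suffix are illustrative choices, not part of the original procedure, and the demo runs against a temporary file with made-up entries rather than the real /etc/fstab.

```shell
#!/bin/sh
# Comment out active swap entries in an fstab-style file.
# Usage: comment_out_swap [file]  (defaults to /etc/fstab, which needs root)
comment_out_swap() {
    fstab_file="${1:-/etc/fstab}"
    # Only touch lines that are not already commented and that contain
    # "swap" as a whitespace-delimited field. A .bak copy is kept.
    sed -E -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab_file"
}

# Demo on a throwaway file with hypothetical entries.
demo_fstab=$(mktemp)
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
```

Running the function a second time leaves the file unchanged, because commented lines no longer match the address pattern.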

Set the hostname

hostnamectl set-hostname <hostname>

Add hosts entries on the master

cat >> /etc/hosts << EOF
192.168.1.16 k8s-master
192.168.1.30 k8s-node1
192.168.1.31 k8s-node2
EOF
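Because the heredoc above appends unconditionally, running it twice duplicates the entries. One way to make the step repeatable is sketched below. The helper add_host_entry is hypothetical, and the demo writes to a temporary file instead of /etc/hosts.

```shell
#!/bin/sh
# Append "ip name" to a hosts file only if the name is not already listed.
# Usage: add_host_entry <ip> <name> [file]  (file defaults to /etc/hosts)
add_host_entry() {
    host_ip="$1"
    host_name="$2"
    hosts_file="${3:-/etc/hosts}"
    # Match the name as a whole field: preceded by whitespace and
    # followed by whitespace or end of line.
    if ! grep -Eq "[[:space:]]${host_name}([[:space:]]|\$)" "$hosts_file"; then
        printf '%s %s\n' "$host_ip" "$host_name" >> "$hosts_file"
    fi
}

# Demo: apply the same entries twice to a throwaway file.
demo_hosts=$(mktemp)
for run in 1 2; do
    add_host_entry 192.168.1.16 k8s-master "$demo_hosts"
    add_host_entry 192.168.1.30 k8s-node1 "$demo_hosts"
    add_host_entry 192.168.1.31 k8s-node2 "$demo_hosts"
done
cat "$demo_hosts"
```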

Pass bridged IPv4 traffic to the iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
# Apply the settings
sysctl --system
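The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so on a fresh host it may be necessary to run modprobe br_netfilter before sysctl --system. The sketch below is one way to double-check what a sysctl file actually sets. The helper sysctl_conf_value is hypothetical, and the demo reads a temporary file rather than /etc/sysctl.d/k8s.conf.

```shell
#!/bin/sh
# Print the value assigned to a key in a sysctl-style file (empty if absent).
# Usage: sysctl_conf_value <file> <key>
sysctl_conf_value() {
    awk -F= -v key="$2" '
        { gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2) }
        $1 == key { value = $2 }
        END { print value }
    ' "$1"
}

# Demo file with the same content as the heredoc in this article.
demo_conf=$(mktemp)
cat > "$demo_conf" << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables; do
    if [ "$(sysctl_conf_value "$demo_conf" "$key")" = "1" ]; then
        echo "ok: $key"
    else
        echo "missing: $key"
    fi
done
```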

Time synchronization

yum install ntpdate -y
ntpdate time.windows.com

Install docker / kubeadm / kubelet / kubectl on all nodes

Install docker

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
yum -y install docker-ce-18.06.1.ce-3.el7
systemctl enable docker && systemctl start docker
docker --version

Add the Alibaba Cloud YUM software source

Set the Docker registry mirror address

cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF

Edit /etc/yum.repos.d/kubernetes.repo to add the Yum source

[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Install kubeadm, kubelet, kubectl

yum install -y kubelet kubeadm kubectl
systemctl enable kubelet

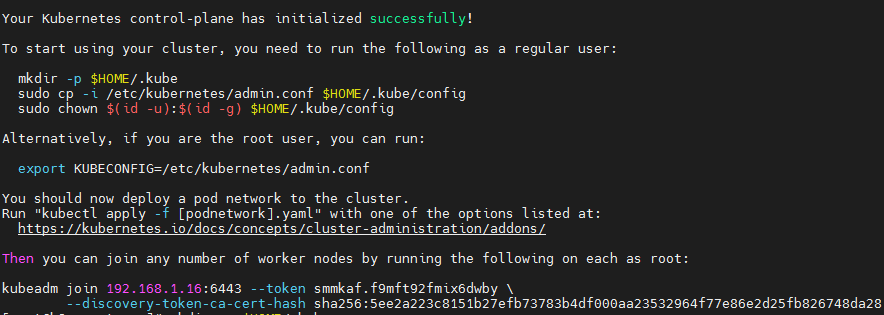
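The install command above pulls whatever version is newest in the repository, which may be newer than the v1.23.1 requested in the kubeadm init step. A hedged sketch of pinning the packages follows. The helper pinned_packages is hypothetical, it relies on yum accepting name-version package specs, and whether the mirror still carries 1.23.1 is an assumption. The script only prints the command so that it can be reviewed before it is run.

```shell
#!/bin/sh
# Build a version-pinned package list so the installed tools match the
# version passed to kubeadm init (v1.23.1 in this article).
# Usage: pinned_packages <version-without-leading-v>
pinned_packages() {
    echo "kubelet-$1 kubeadm-$1 kubectl-$1"
}

K8S_VERSION="1.23.1"
# Print the install command instead of executing it.
echo "yum install -y $(pinned_packages "$K8S_VERSION")"
```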

Deploy Kubernetes Master

kubeadm init \
  --apiserver-advertise-address=192.168.1.16 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.23.1 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16

Parameter Description:

--kubernetes-version v1.23.1 specifies the Kubernetes version to deploy.

--apiserver-advertise-address is the IP address advertised to the other components. Generally, it should be the IP address of the master node.

--service-cidr specifies the service network. It must not overlap with the node network.

--pod-network-cidr specifies the pod network. It must not overlap with the node network or the service network.

--image-repository registry.aliyuncs.com/google_containers specifies the image registry. The default registry, k8s.gcr.io, cannot be accessed from China, so the Alibaba Cloud mirror is used here.

If the Kubernetes version is very new, the Alibaba Cloud mirror may not yet have the corresponding images, in which case you need to obtain them from another source.
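The requirement that the service, pod and node networks must not overlap can be checked before running kubeadm init. The following is a minimal sketch using only shell arithmetic. The function names are hypothetical, and the /24 prefix for the node network is an assumption, since the article lists only individual node IPs.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    saved_ifs=$IFS
    IFS=.
    set -- $1          # split a.b.c.d into $1..$4
    IFS=$saved_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address (as integers) of a CIDR block.
cidr_bounds() {
    base=$(ip_to_int "${1%/*}")
    prefix_len=${1#*/}
    size=$(( 1 << (32 - prefix_len) ))
    first=$(( base / size * size ))      # align to the network boundary
    echo "$first $(( first + size - 1 ))"
}

# Exit 0 when the two CIDR blocks share at least one address.
cidrs_overlap() {
    set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

NODE_CIDR="192.168.1.0/24"     # assumption: prefix length not given in the article
SERVICE_CIDR="10.96.0.0/12"    # --service-cidr
POD_CIDR="10.244.0.0/16"       # --pod-network-cidr

for pair in "$NODE_CIDR $SERVICE_CIDR" "$NODE_CIDR $POD_CIDR" "$SERVICE_CIDR $POD_CIDR"; do
    set -- $pair
    if cidrs_overlap "$1" "$2"; then
        echo "CONFLICT: $1 and $2"
    else
        echo "ok: $1 and $2"
    fi
done
```

With the values used in this article, none of the three pairs overlap: 10.96.0.0/12 ends at 10.111.255.255, well below 10.244.0.0.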

Configure kubectl

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

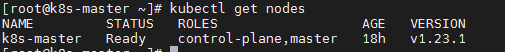

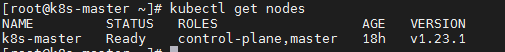

View node information

kubectl get nodes

Configure flannel

Create the manifest file:

vim kube-flannel.yml

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  # The "Network" value in net-conf.json must match the --pod-network-cidr passed to kubeadm init
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.14.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.14.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

Apply the YAML file:

kubectl apply -f kube-flannel.yml

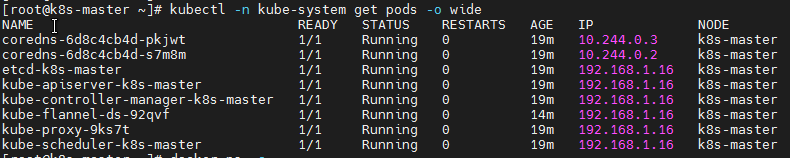

View flannel deployment results:

kubectl -n kube-system get pods -o wide

View node status:

kubectl get nodes

Deploy Kubernetes Node

On 192.168.1.30 and 192.168.1.31, add the nodes to the cluster by running the kubeadm join command printed by kubeadm init:

kubeadm join 192.168.1.16:6443 --token smmkaf.f9mft92fmix6dwby \
  --discovery-token-ca-cert-hash sha256:5ee2a223c8151b27efb73783b4df000aa23532964f77e86e2d25fb826748da28
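Bootstrap tokens expire (24 hours by default), and a join command copied by hand is easy to truncate. If the token is no longer valid, running kubeadm token create --print-join-command on the master prints a fresh join command. As a small pre-flight check, the shape of the two arguments can be validated before joining. The helper names below are hypothetical; the patterns follow the documented token format and the sha256 hash length.

```shell
#!/bin/sh
# Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
is_valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# The CA certificate hash is "sha256:" followed by 64 hex characters.
is_valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Values taken from the join command shown in this article.
token="smmkaf.f9mft92fmix6dwby"
ca_hash="sha256:5ee2a223c8151b27efb73783b4df000aa23532964f77e86e2d25fb826748da28"

if is_valid_token "$token" && is_valid_ca_hash "$ca_hash"; then
    echo "join arguments look well-formed"
else
    echo "malformed token or hash; regenerate with: kubeadm token create --print-join-command"
fi
```

This only checks the format. Whether the token is still accepted by the cluster can be confirmed on the master with kubeadm token list.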

Test Kubernetes cluster

Create a Pod in Kubernetes and verify that it works properly:

kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --port 80 --type NodePort
kubectl get pod,svc

Access address: http://NodeIP:Port

Problems during installation

Upgrade system kernel

The 3.10.x kernel that ships with CentOS 7.x has some bugs that make Docker and Kubernetes unstable.

CentOS allows the use of ELRepo, a third-party repository that can upgrade the kernel to the latest version.

To enable the ELRepo repository on CentOS 7, run:

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

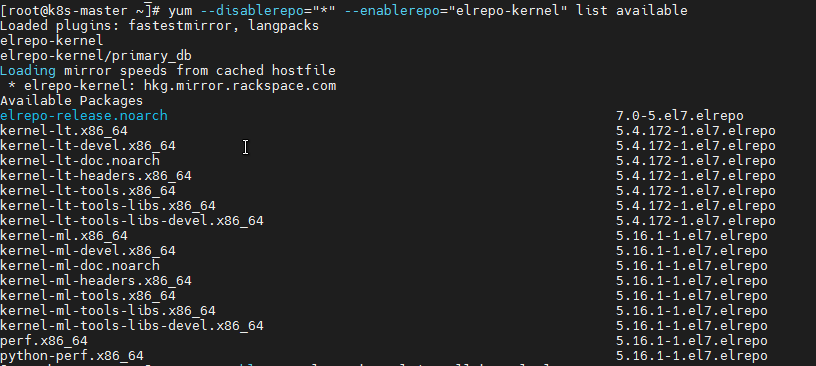

After the repository is enabled, you can use the following command to list the available kernel-related packages:

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

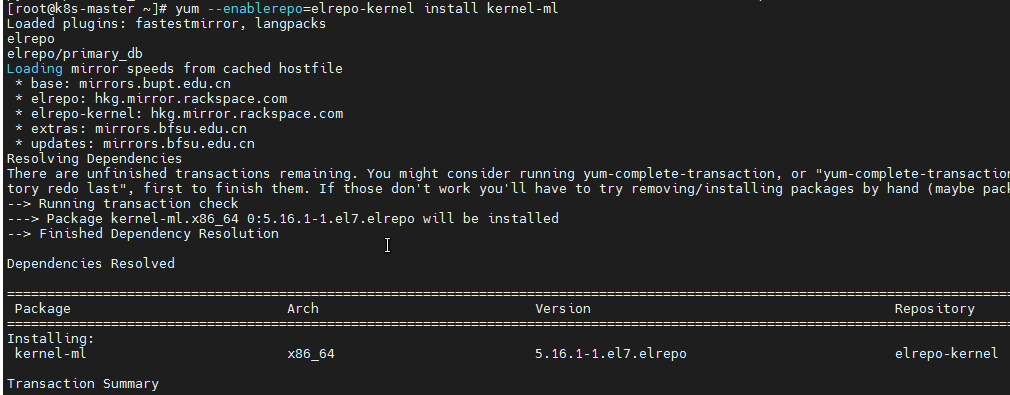

Install the latest mainline stable kernel:

yum --enablerepo=elrepo-kernel install kernel-ml

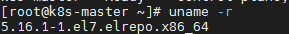

Finally, restart the machine so that it boots the new kernel, then run uname -r to check the running kernel version:

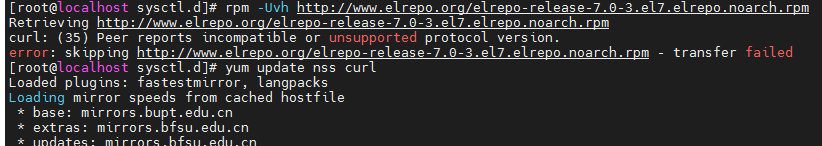

curl: Peer reports incompatible or unsupported protocol version

The cause is outdated versions of curl and NSS. The solution is to update both:

yum update nss curl

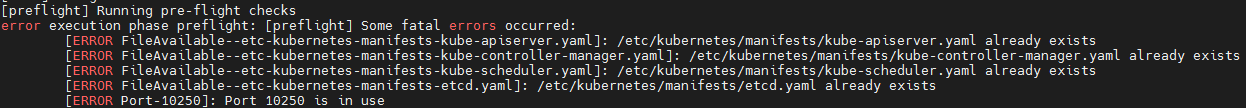

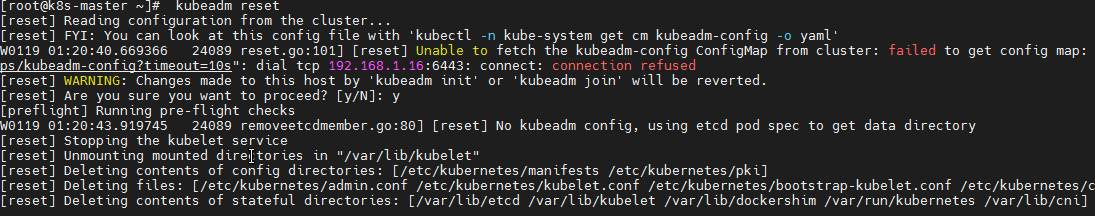

Problem initializing Kubernetes (configuration file already exists)

kubeadm reset

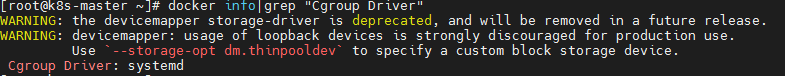

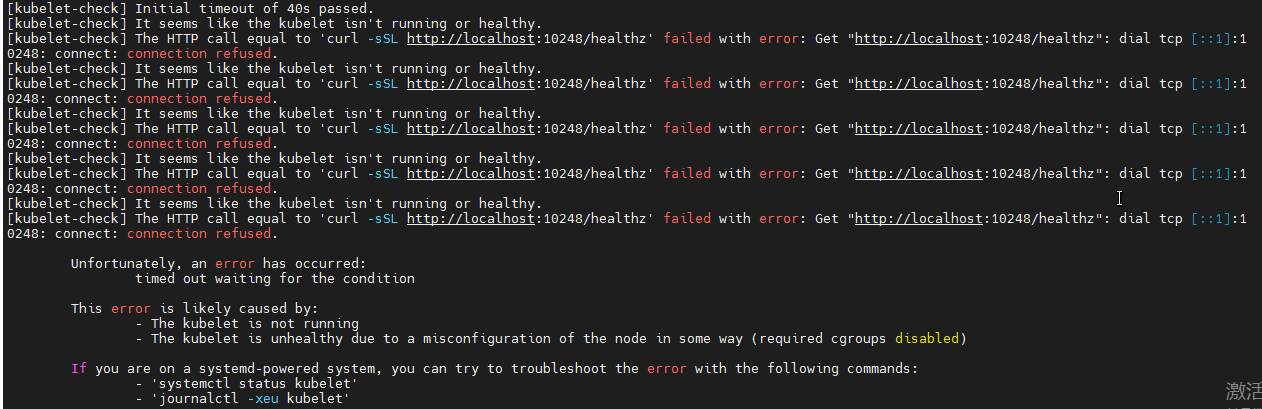

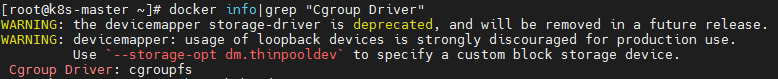

failed to run Kubelet: misconfiguration: kubelet cgroup driver: "systemd" is different from docker cgroup driver: "cgroupfs"

Initialization failed, indicating that kubelet health status is abnormal.

Check the kubelet status with systemctl status kubelet. It reports the error: failed to run Kubelet: failed to create kubelet: misconfiguration: kubelet cgroup driver: "systemd" is different from docker cgroup driver: "cgroupfs".

kubelet's own default cgroup driver is cgroupfs, but Kubernetes recommends systemd (and kubeadm configures kubelet with systemd by default from v1.22 on), while Docker defaults to cgroupfs. Both sides must use the same driver.
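Before changing anything, it can help to confirm which side is out of step. The sketch below extracts the driver from docker info output and from a kubelet configuration file and compares them. The helper names are hypothetical, and the demo uses sample input instead of a live node.

```shell
#!/bin/sh
# Extract the cgroup driver from `docker info` output supplied on stdin.
docker_driver_from_info() {
    awk -F': *' '/Cgroup Driver/ { print $2 }'
}

# Extract cgroupDriver from a KubeletConfiguration YAML file.
kubelet_driver_from_config() {
    awk -F': *' '$1 == "cgroupDriver" { print $2 }' "$1"
}

# Demo with sample input that reproduces the mismatch from the error message.
docker_driver=$(printf ' Cgroup Driver: cgroupfs\n' | docker_driver_from_info)
demo_cfg=$(mktemp)
printf 'kind: KubeletConfiguration\ncgroupDriver: systemd\n' > "$demo_cfg"
kubelet_driver=$(kubelet_driver_from_config "$demo_cfg")

if [ "$docker_driver" = "$kubelet_driver" ]; then
    echo "match: $docker_driver"
else
    echo "mismatch: docker=$docker_driver kubelet=$kubelet_driver"
fi

# On a real node the inputs would be:
#   docker info 2>/dev/null | docker_driver_from_info
#   kubelet_driver_from_config /var/lib/kubelet/config.yaml
```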

Modify Docker: in /etc/docker/daemon.json, add "exec-opts": ["native.cgroupdriver=systemd"]

{
  "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

Modify kubelet:

cat > /var/lib/kubelet/config.yaml <<EOF
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF

Restart docker and kubelet:

systemctl daemon-reload
systemctl restart docker
systemctl restart kubelet

Check that docker info | grep "Cgroup Driver" outputs Cgroup Driver: systemd: