CaDDN code debugging - my computer

I have previously translated CaDDN (link).

Let's talk about the configuration of my computer first:

- HP Shadow Sprite 5
- Dual system: Windows 10 + Ubuntu 18.04 (all of the debugging below is done in Ubuntu)
- Memory: 8 GB + 16 GB
- Hard disk: 512 GB SSD + 1 TB mechanical
- Graphics card: RTX 2060 (6 GB)

1. Environment configuration:

- Configure the CUDA 10.2 environment

CUDA 10.1 was installed previously and caused many problems during debugging, so I reinstalled 10.2. There were still plenty of problems afterwards. For the installation itself, I recommend following this blog.

- Create a caddn virtual environment using conda

I recommend using Anaconda to create a caddn virtual environment, because Python 3.8 is required and using the system environment directly may cause many problems later on.

conda create -n caddn python=3.8

- Download the CaDDN code

With the GitHub Proxy mirror, the download is much faster.

git clone https://mirror.ghproxy.com/https://github.com/TRAILab/CaDDN.git

- Install and compile pcdet v0.3

First, install the required libraries, such as torch, using the Alibaba Cloud mirror for acceleration:

pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple

Errors may occur in this next step:

python setup.py develop

If it finishes without errors, you are done. If you get an error such as `pcdet/ops/iou3d_nms/src/iou3d_cpu.cpp:12:18: fatal error: cuda.h: No such file or directory`, please refer to this CSDN blog post: "fatal error: cuda.h: There is no such file or directory (pcdet/ops/iou3d_nms/src/iou3d_cpu.cpp:12:18: fatal error: cuda.h:)".
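The `cuda.h` error means the compiler could not find the CUDA toolkit headers. Before rerunning the build, a small check like the one below can show whether the header is visible at all. This is only a sketch: the search order (`CUDA_HOME`, `CUDA_PATH`, `/usr/local/cuda`, `/usr/local/cuda-10.2`) is an assumption about a typical Linux install, and `find_cuda_header` is a hypothetical helper, not part of pcdet.

```python
import os
from pathlib import Path


def find_cuda_header(candidate_roots):
    """Return the first <root>/include/cuda.h that exists, or None."""
    for root in candidate_roots:
        if not root:
            continue  # skip environment variables that are not set
        header = Path(root) / "include" / "cuda.h"
        if header.is_file():
            return header
    return None


if __name__ == "__main__":
    # Assumed search order; adjust to wherever CUDA is installed on your machine.
    roots = [
        os.environ.get("CUDA_HOME"),
        os.environ.get("CUDA_PATH"),
        "/usr/local/cuda",
        "/usr/local/cuda-10.2",
    ]
    header = find_cuda_header(roots)
    if header:
        print("found:", header)
    else:
        print("cuda.h not found; check the CUDA toolkit install and CUDA_HOME")
```

If nothing is found, the toolkit is probably missing or installed under a prefix that is not in the list.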

2. Dataset preparation

The format of the dataset is as follows:

CaDDN
├── data
│   ├── kitti
│   │   │── ImageSets
│   │   │── training
│   │   │   ├──calib & velodyne & label_2 & image_2 & depth_2
│   │   │── testing
│   │   │   ├──calib & velodyne & image_2
├── pcdet
├── tools

You can start with a small subset of the data, such as 300 images. The depth maps also need to be downloaded (depth maps). If you have no VPN and cannot reach that link, you can get them from CSDN download. I uploaded the depth data there, but only 300 depth maps, for scientific research and academic exchange only. If there is any infringement, please contact me and I will delete it.

Run the following command to generate the data infos:

python -m pcdet.datasets.kitti.kitti_dataset create_kitti_infos tools/cfgs/dataset_configs/kitti_dataset.yaml

If an assertion error occurs here, check whether it is raised for calib or for image. Every entry in train.txt, val.txt and test.txt under ImageSets must correspond to data in the dataset. The dataset may contain more samples than ImageSets lists, but it must not lack any sample that ImageSets lists.
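The check described above can be done before running `create_kitti_infos`. The sketch below lists every file that an ImageSets split expects but that is missing on disk. The file extensions (`.txt` for calib and label_2, `.png` for image_2 and depth_2, `.bin` for velodyne) and the mapping of train/val to `training` and test to `testing` are assumptions based on the usual KITTI layout. `find_missing` and `read_ids` are hypothetical helpers, not pcdet functions.

```python
from pathlib import Path

# Assumed KITTI layout: each sub-folder holds one file per sample ID.
TRAINING_FILES = {"calib": ".txt", "image_2": ".png", "label_2": ".txt",
                  "velodyne": ".bin", "depth_2": ".png"}
TESTING_FILES = {"calib": ".txt", "image_2": ".png", "velodyne": ".bin"}


def read_ids(split_file):
    """Read the non-empty sample IDs (one per line) from an ImageSets file."""
    lines = Path(split_file).read_text().splitlines()
    return [line.strip() for line in lines if line.strip()]


def find_missing(kitti_root, split_name, folder, expected):
    """List files that ImageSets/<split_name>.txt requires but that are absent."""
    root = Path(kitti_root)
    missing = []
    for sample_id in read_ids(root / "ImageSets" / f"{split_name}.txt"):
        for sub, ext in expected.items():
            path = root / folder / sub / f"{sample_id}{ext}"
            if not path.is_file():
                missing.append(path)
    return missing


if __name__ == "__main__":
    kitti = Path("data/kitti")  # run from the CaDDN root directory
    checks = [("train", "training", TRAINING_FILES),
              ("val", "training", TRAINING_FILES),
              ("test", "testing", TESTING_FILES)]
    for split, folder, expected in checks:
        if not (kitti / "ImageSets" / f"{split}.txt").is_file():
            print(f"{split}.txt not found, skipping")
            continue
        for path in find_missing(kitti, split, folder, expected):
            print("missing:", path)
```

When working with a 300-image subset, either trim the ImageSets files to those IDs or expect this script to report every ID outside the subset.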

3. Train a model

First, download the pretrained DeepLabV3 model and put it into the checkpoints directory. The file structure is as follows:

CaDDN
├── checkpoints
│   ├── deeplabv3_resnet101_coco-586e9e4e.pth
├── data
├── pcdet
├── tools

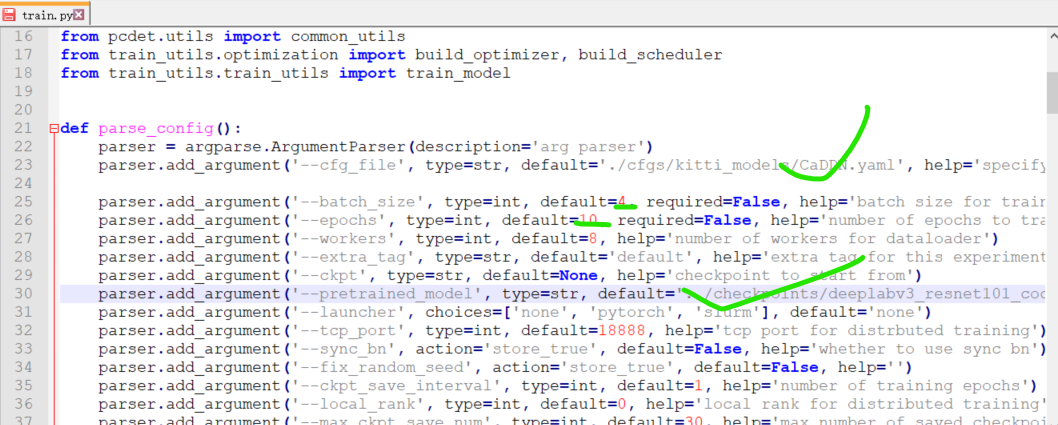

Modify train.py:

parser.add_argument('--cfg_file', type=str, default='./cfgs/kitti_models/CaDDN.yaml', help='specify the config for training')

parser.add_argument('--batch_size', type=int, default=4, required=False, help='batch size for training')

parser.add_argument('--epochs', type=int, default=10, required=False, help='number of epochs to train for')

parser.add_argument('--workers', type=int, default=8, help='number of workers for dataloader')

parser.add_argument('--extra_tag', type=str, default='default', help='extra tag for this experiment')

parser.add_argument('--ckpt', type=str, default=None, help='checkpoint to start from')

parser.add_argument('--pretrained_model', type=str, default='../checkpoints/deeplabv3_resnet101_coco-586e9e4e.pth', help='pretrained_model')

The main changes are the configuration file path, batch_size, epochs, and the location of the pretrained model, as shown in the figure.
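Because these are ordinary argparse defaults, the same values can also be passed on the command line instead of editing the file. The sketch below rebuilds only the four arguments discussed above (with shortened help strings) to show how an override interacts with the defaults. `build_parser` is a hypothetical stand-in, not the real train.py.

```python
import argparse


def build_parser():
    """A subset of the train.py arguments listed above, with the same defaults."""
    parser = argparse.ArgumentParser(description="subset of the train.py arguments")
    parser.add_argument('--cfg_file', type=str,
                        default='./cfgs/kitti_models/CaDDN.yaml',
                        help='specify the config for training')
    parser.add_argument('--batch_size', type=int, default=4,
                        help='batch size for training')
    parser.add_argument('--epochs', type=int, default=10,
                        help='number of epochs to train for')
    parser.add_argument('--pretrained_model', type=str,
                        default='../checkpoints/deeplabv3_resnet101_coco-586e9e4e.pth',
                        help='pretrained_model')
    return parser


if __name__ == "__main__":
    # Same effect as passing "--batch_size 2 --epochs 5" on the command line;
    # arguments that are not given keep their defaults.
    args = build_parser().parse_args(['--batch_size', '2', '--epochs', '5'])
    print(args.cfg_file, args.batch_size, args.epochs, args.pretrained_model)
```

Overriding on the command line makes it easy to try a smaller batch_size without touching the source again.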

In the terminal, make sure to cd into the tools directory first, then start training:

python train.py
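The reason for the cd is that both default paths in train.py (`./cfgs/kitti_models/CaDDN.yaml` and `../checkpoints/deeplabv3_resnet101_coco-586e9e4e.pth`) are relative, so they resolve against the current working directory. A quick way to see this is the sketch below, where `check_from` is a hypothetical helper that reports where each path points from a given directory and whether it exists.

```python
from pathlib import Path

# The two relative defaults used in train.py above.
RELATIVE_DEFAULTS = [
    "./cfgs/kitti_models/CaDDN.yaml",
    "../checkpoints/deeplabv3_resnet101_coco-586e9e4e.pth",
]


def check_from(working_dir, relative_paths=RELATIVE_DEFAULTS):
    """Map each relative path to (resolved path, exists?) as seen from working_dir."""
    base = Path(working_dir).resolve()
    result = {}
    for rel in relative_paths:
        full = (base / rel).resolve()
        result[rel] = (full, full.exists())
    return result


if __name__ == "__main__":
    # Run this from the directory in which you intend to start train.py.
    for rel, (full, ok) in check_from(Path.cwd()).items():
        print("ok     " if ok else "MISSING", rel, "->", full)
```

Run from `CaDDN/tools`, both entries should be reported as ok. Run from the repository root, they will normally show up as MISSING.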

Training then fails with an out of memory error: the 6 GB of video memory is not enough.

CaDDN code debugging - cloud environment

There is one V100 in this cloud environment. There should be no shortage of video memory.

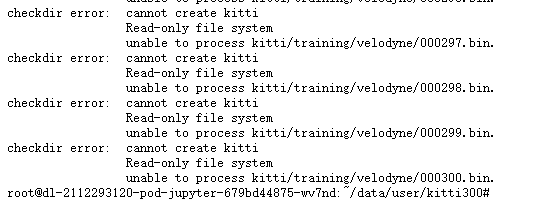

- Unable to unzip the files in the data directory

Move them to the share directory and unzip them there.

- Insufficient video memory

Install Docker and test again later.