https://www.jianshu.com/writer#/notebooks/24210100/notes/28352164 In the last part, we introduced the construction of caffe environment, and this time we started the real caffe practice.

Of course, the training of the model needs a good text set. We haven't found a suitable printed Chinese character data set on the Internet for a long time, so we decided to generate our own Chinese character data set for training and testing.

1, Collection of Chinese characters

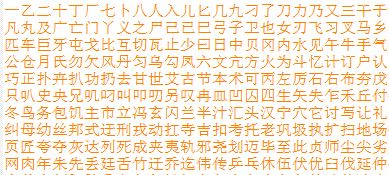

It depends on what Chinese characters you need to recognize. Due to the needs of the competition, we have collected 3500 common Chinese characters and some traditional Chinese characters. As shown in the picture:

Chinese character.JPG

2, Collect font files needed

Considering that the characters we encounter are not necessarily single regular script or Song typeface, we have further collected a variety of font files to generate Chinese character data sets of different fonts. Here are 9 font files we collected, including imitation song, bold, italics, etc

Font.JPG

3, Generate font image and store it in the specified directory

First, define the input parameters, including output directory, font directory, test set size, image size and so on. Here are some source codes:

description = '''

deep_ocr_make_caffe_dataset --out_caffe_dir /root/data/caffe_dataset

--font_dir /root/workspace/deep_ocr_fonts/chinese_fonts

--width 30 --height 30 --margin 4 --langs lower_eng

'''

parser = argparse.ArgumentParser(

description=description, formatter_class=RawTextHelpFormatter)

parser.add_argument('--out_caffe_dir', dest='out_caffe_dir',

default=None, required=True,

help='write a caffe dir')

parser.add_argument('--font_dir', dest='font_dir',

default=None, required=True,

help='font dir to to produce images')

parser.add_argument('--test_ratio', dest='test_ratio',

default=0.3, required=False,

help='test dataset size')

parser.add_argument('--width', dest='width',

default=None, required=True,

help='width')

parser.add_argument('--height', dest='height',

default=None, required=True,

help='height')

parser.add_argument('--no_crop', dest='no_crop',

default=True, required=False,

help='', action='store_true')

parser.add_argument('--margin', dest='margin',

default=0, required=False,

help='', )

parser.add_argument('--langs', dest='langs',

default="chi_sim", required=True,

help='deep_ocr.langs.*, e.g. chi_sim, chi_tra, digits...')

options = parser.parse_args()

out_caffe_dir = os.path.expanduser(options.out_caffe_dir)

font_dir = os.path.expanduser(options.font_dir)

test_ratio = float(options.test_ratio)

width = int(options.width)

height = int(options.height)

need_crop = not options.no_crop

margin = int(options.margin)

langs = options.langs

image_dir_name = "images"

images_dir = os.path.join(out_caffe_dir, image_dir_name)

Image adjustment and Chinese character image generation. According to the Chinese character list generated in the first step, we generate the corresponding Chinese character picture.

Chinese character list.JPG

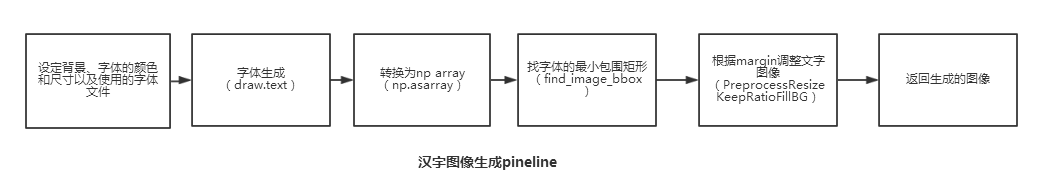

The tool we use is PIL, which has a very useful Chinese character generation function. We can use this function in combination with the font file we provide to generate the digital Chinese character we want. First, we set the color of the font we generated as black and white, and the font size is set dynamically by the input parameters.

image.png

The following is part of the source code;

class Font2Image(object):

def __init__(self,

width, height,

need_crop, margin):

self.width = width

self.height = height

self.need_crop = need_crop

self.margin = margin

def do(self, font_path, char, path_img):

find_image_bbox = FindImageBBox()

img = Image.new("RGB", (self.width, self.height), "black")

draw = ImageDraw.Draw(img)

font = ImageFont.truetype(font_path, int(self.width * 0.7),)

draw.text((0, 0), char, (255, 255, 255),

font=font)

data = list(img.getdata())

sum_val = 0

for i_data in data:

sum_val += sum(i_data)

if sum_val > 2:

np_img = np.asarray(data, dtype='uint8')

np_img = np_img[:, 0]

np_img = np_img.reshape((self.height, self.width))

cropped_box = find_image_bbox.do(np_img)

left, upper, right, lower = cropped_box

np_img = np_img[upper: lower + 1, left: right + 1]

if not self.need_crop:

preprocess_resize_keep_ratio_fill_bg = \

PreprocessResizeKeepRatioFillBG(self.width, self.height,

fill_bg=False,

margin=self.margin)

np_img = preprocess_resize_keep_ratio_fill_bg.do(

np_img)

cv2.imwrite(path_img, np_img)

else:

print("%s doesn't exist." % path_img)

Here we divide the generated data set into two parts, of which any 70% is used as training set and the rest 30% as test set.

parser.add_argument('--test_ratio', dest='test_ratio',

default=0.3, required=False,

help='test dataset size')

----------------

train_list = []

test_list = []

max_train_i = int(len(verified_font_paths) * (1.0 - test_ratio))

for i, verified_font_path in enumerate(verified_font_paths):

is_train = True

if i >= max_train_i:

is_train = False

for j, char in enumerate(lang_chars):

if j not in y_to_tag:

y_to_tag[j] = char

char_dir = os.path.join(images_dir, "%d" % j)

if not os.path.isdir(char_dir):

os.makedirs(char_dir)

path_image = os.path.join(

char_dir,

"%d_%s.jpg" % (i, os.path.basename(verified_font_path)))

relative_path_image = os.path.join(

image_dir_name, "%d"%j,

"%d_%s.jpg" % (i, os.path.basename(verified_font_path))

)

font2image.do(verified_font_path, char, path_image)

if is_train:

train_list.append((relative_path_image, j))

else:

test_list.append((relative_path_image, j))

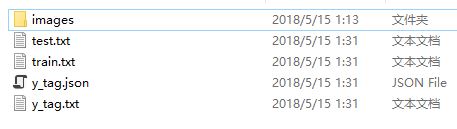

After running the whole code, we have generated five files, including a text list and a json file corresponding to the text list (Note: This json file will be used in the use of caffe model later), a training set, a test set and a picture set corresponding to each word with different fonts. The following picture:

Generate file.JPG

The picture sets of each word corresponding to different fonts are as follows:

Character picture.JPG

At this point, the dataset generation is complete.

To be continued