At present, there are mainly three ways to stream live video from a camera:

- RTSP access: only supports video preview.
- National standard protocol (GB/T 28181) access: complex to implement and requires an additional SIP server.
- Obtain the video stream through NetSDK, push it to a streaming media server, and play it over WS-FLV, HTTP-FLV, HLS, or another streaming protocol. This approach does not support H.265.

1. Chosen approach

After comparing them, we adopted the third method. Java uses JNA to call Dahua NetSDK. After the DAV video stream is obtained, the Dahua header and trailer are stripped to get the raw H.264 elementary stream, which is then pushed to a streaming media server through JavaCV (a wrapper around FFmpeg, OpenCV, and other libraries). The server is NMS (Node-Media-Server), an open-source streaming server written in Node.js.
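Whether stripping the Dahua header and trailer really leaves a bare H.264 stream can be checked by scanning for Annex-B start codes (00 00 01, or 00 00 00 01) and reading each NAL unit's type from the low five bits of the following byte (7 = SPS, 8 = PPS, 5 = IDR slice, 1 = non-IDR slice). The hypothetical AnnexBScanner below does only that; it knows nothing about the DAV container itself and assumes the stripped payload is in Annex-B form.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lists the NAL unit types found in an H.264 Annex-B buffer.
final class AnnexBScanner {
    private AnnexBScanner() {}

    /** Returns nal_unit_type (low 5 bits of the NAL header byte) for every start code found. */
    static List<Integer> nalTypes(byte[] data) {
        List<Integer> types = new ArrayList<>();
        // A 3-byte start code 00 00 01 also matches the tail of the 4-byte form 00 00 00 01.
        for (int i = 0; i + 3 < data.length; i++) {
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
                types.add(data[i + 3] & 0x1F);
                i += 2; // resume scanning right after this start code
            }
        }
        return types;
    }

    /** True if the buffer begins with a start code, as a bare H.264 elementary stream should. */
    static boolean startsWithStartCode(byte[] data) {
        if (data.length >= 3 && data[0] == 0 && data[1] == 0 && data[2] == 1) {
            return true;
        }
        return data.length >= 4 && data[0] == 0 && data[1] == 0 && data[2] == 0 && data[3] == 1;
    }
}
```

A key frame delivered by the camera would typically show up as SPS, PPS, then an IDR slice, i.e. `[7, 8, 5]`.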

2. JNA

2.1 JNI: calling C from Java

With JNI, calling an already compiled .dll/.so takes several steps: (1) write another .dll/.so wrapper library in C that uses the data types defined by Sun's JNI specification in place of the native C types and calls the functions exported by the existing library; (2) load this wrapper library in Java; (3) declare native methods in Java that act as proxies for the functions in the library.
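For comparison with JNA, the Java side of the JNI route can be sketched as below. The class CalJni and the wrapper library name cal_jni are hypothetical; the wrapper's C function must follow the JNI naming rule (Java_<class>_<method> for a class in the default package) and would forward the call to cal() in the existing library.

```java
// Hypothetical JNI counterpart of the cal example in 2.3: the Java side only declares the method.
final class CalJni {
    static {
        // Loads cal_jni.dll / libcal_jni.so, a hypothetical C wrapper that must export
        //   JNIEXPORT jint JNICALL Java_CalJni_cal(JNIEnv *env, jclass cls, jint a, jint b, jint calType)
        // and call cal(a, b, calType) from the existing library.
        System.loadLibrary("cal_jni");
    }

    private CalJni() {}

    // Implemented in C; the wrapper receives JNI types (jint) instead of plain C int.
    static native int cal(int a, int b, int calType);
}
```

The extra wrapper library and the generated function names are exactly what JNA removes: in 2.3 the Java interface calls cal directly.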

2.2 JNA

JNA is a Java library built on JNI. It provides a dynamic forwarding layer written in C that maps data types between Java and C automatically: developers only describe the functions and structures of the target native library with Java interfaces, with no need to write a C wrapper library, so functions in a dynamic link library can be called directly from Java. JNA also ships a platform library in which many native functions are already mapped, plus a set of common interfaces that simplify native access. JNA has a slight performance cost compared with JNI, and apart from callbacks handed to native code as function pointers (see 2.5), it cannot be used to call Java from C.

2.3 Simple usage on Windows

(1) Compile a DLL: create cal.cpp and cal.h and compile them into a .dll with Visual Studio (on Linux, compile a .so instead and drop __declspec(dllexport), which is specific to Windows compilers).

cal.h:

```cpp
extern "C" __declspec(dllexport) int cal(int a, int b, int calType);
```

cal.cpp:

```cpp
#include "cal.h"

// calType 1 adds the two numbers; any other value subtracts b from a
int cal(int a, int b, int calType) {
    if (calType == 1) {
        return a + b;
    } else {
        return a - b;
    }
}
```

(2) Create a new Java project and add the JNA dependency with Maven:

```xml
<dependency>
    <groupId>net.java.dev.jna</groupId>
    <artifactId>jna</artifactId>
    <version>4.5.1</version>
</dependency>
```

Place the compiled cal.dll in the project root, then create a Java interface that describes the functions and structures of the DLL:

```java
import com.sun.jna.Library;
import com.sun.jna.Native;

public interface CalSDK extends Library {
    // JNA generates the instance at runtime (a dynamic proxy); this can be extended
    // to load a different dynamic library depending on the operating system
    CalSDK CalSDK_INSTANCE = (CalSDK) Native.loadLibrary("cal", CalSDK.class);

    // Mirrors the cal function exported by the library
    int cal(int a, int b, int calType);
}
```
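The comment about loading a different library per operating system could be realized along these lines. The libs/<platform>/ directory layout and the NativeLibPaths class are hypothetical; System.mapLibraryName is the JDK method that turns a base name into the platform's file name.

```java
import java.nio.file.Paths;
import java.util.Locale;

// Hypothetical helper: resolves libs/<platform>/<library file> for the running JVM.
final class NativeLibPaths {
    private NativeLibPaths() {}

    /** Maps os.name / os.arch values to a hypothetical directory name such as "win64" or "linux64". */
    static String platformDir(String osName, String osArch) {
        String os = osName.toLowerCase(Locale.ROOT);
        String arch = osArch.toLowerCase(Locale.ROOT);
        String bits;
        if (arch.equals("amd64") || arch.equals("x86_64")) {
            bits = "64";
        } else if (arch.equals("x86") || arch.matches("i[3-6]86")) {
            bits = "32";
        } else {
            throw new IllegalStateException("Unsupported architecture: " + osArch);
        }
        if (os.startsWith("windows")) return "win" + bits;
        if (os.startsWith("linux")) return "linux" + bits;
        throw new IllegalStateException("Unsupported OS: " + osName);
    }

    /** Absolute path to the library for the current JVM, e.g. <working dir>/libs/win64/cal.dll. */
    static String libraryPath(String baseName) {
        String dir = platformDir(System.getProperty("os.name"), System.getProperty("os.arch"));
        // mapLibraryName adds the platform prefix/suffix: "cal" -> "cal.dll" on Windows, "libcal.so" on Linux
        return Paths.get("libs", dir, System.mapLibraryName(baseName)).toAbsolutePath().toString();
    }
}
```

Since JNA also accepts a full path to the library file, the interface constant could then become `(CalSDK) Native.loadLibrary(NativeLibPaths.libraryPath("cal"), CalSDK.class)`.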

call

public class Main {

public static void main(String[] args) {

System.out.println(CalSDK.CalSDK_INSTANCE.cal(1,2,1));

}

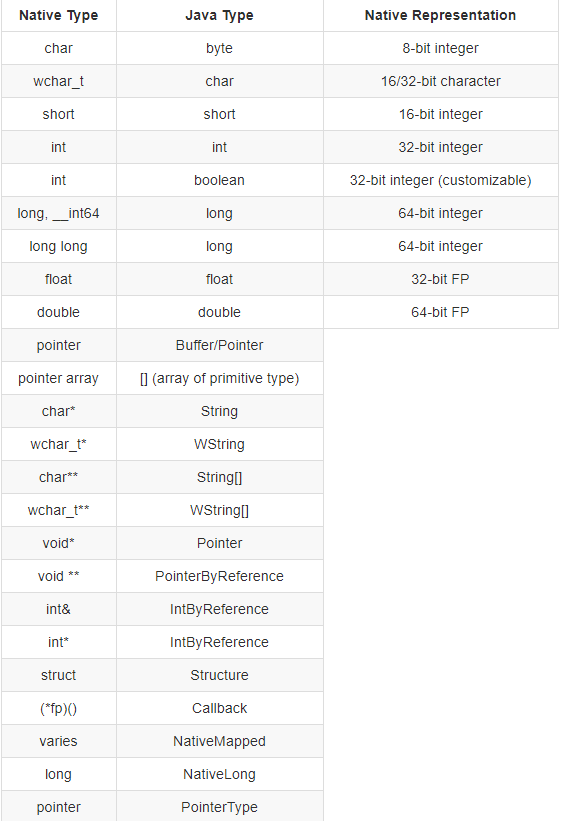

}2.4 type mapping

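See the type mapping table in the official JNA documentation. Common cases: C int maps to Java int, long long to long, char* to String, void* to Pointer, and C long to NativeLong (its size differs between platforms). The Windows BOOL type is a 4-byte int, so it maps to int, as the structure in 2.7 shows.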

2.5 Callbacks

Take the real-time preview callback of a Dahua NetSDK device as an example. The C prototype:

```c
// Prototype of the real-time monitoring data callback
typedef void (CALLBACK *fRealDataCallBackEx)(LLONG lRealHandle, DWORD dwDataType, BYTE *pBuffer,
                                             DWORD dwBufSize, LONG param, LDWORD dwUser);
```

The Java counterpart; the video stream is available in the invoke method of the implementing class:

```java
public interface fRealDataCallBackEx extends StdCallCallback {
    // pBuffer points to dwBufSize bytes of stream data of type dwDataType
    void invoke(LLong lRealHandle, int dwDataType, Pointer pBuffer,
                int dwBufSize, int param, Pointer dwUser);
}
```

After preview is started, register the callback through the SDK interface:

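```java
public void startRealplay(LLong loginHandle, Integer playSign, CameraPojo cameraPojo) {
    // Default to the main stream
    int streamType = 0;
    // Start preview
    LLong lRealHandle = NetSDKConfig.netSdk.CLIENT_RealPlayEx(
            loginHandle,
            cameraPojo.getChannel(),
            null,
            streamType);
    // Set the video stream callback (a zero handle means preview failed)
    if (lRealHandle.longValue() != 0) {
        try {
            // Keep the callback object strongly reachable (here via a singleton):
            // JNA does not keep it alive while native code still holds its pointer
            NetSDKConfig.netSdk.CLIENT_SetRealDataCallBackEx(
                    lRealHandle,
                    RealDataCallBack.getInstance(),
                    null,
                    31); // data-type flag mask: 31 = 0x1F sets the five low flag bits
        } catch (IOException e) {
            log.error(e.getMessage());
        }
    }
}
```

2.6 Pointers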

A C void * holds an address and corresponds to Pointer in JNA. The following active-registration callback (invoked when a device registers itself with the platform) shows how to read data out of a Pointer:

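```java
public int invoke(NetSDKLib.LLong lHandle, final String pIp, final int wPort,
                  int lCommand, Pointer pParam, int dwParamLen,
                  Pointer dwUserData) {
    // Copy dwParamLen bytes out of native memory and decode them as the device serial number
    byte[] buffer = new byte[dwParamLen];
    pParam.read(0, buffer, 0, dwParamLen);
    String serNum = "";
    try {
        // trim() also strips trailing NUL bytes, since they are below U+0020
        serNum = new String(buffer, "GBK").trim();
    } catch (UnsupportedEncodingException e) {
        log.error(e.getMessage());
    }
    // ... handle the registration of device serNum here
    return 0; // the callback is declared to return int
}
```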
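The trim() call above works because String.trim() removes every leading and trailing character at or below U+0020, which includes the NUL padding of a fixed-size C buffer. A C string, however, ends at its first NUL, and whatever follows may be leftover bytes that trim() would keep. A stdlib-only sketch that stops at the first NUL, using a hypothetical CStrings helper:

```java
import java.nio.charset.Charset;

// Hypothetical helper: decodes a fixed-size C char buffer the way C would read it.
final class CStrings {
    private CStrings() {}

    /** Decodes buffer[0..length) up to the first NUL (the C string terminator). */
    static String fromCString(byte[] buffer, int length, Charset charset) {
        int end = 0;
        while (end < length && buffer[end] != 0) {
            end++;
        }
        return new String(buffer, 0, end, charset);
    }
}
```

In the callback this could be called as `CStrings.fromCString(buffer, dwParamLen, Charset.forName("GBK"))`. Charset.forName avoids the checked UnsupportedEncodingException, but GBK, like any charset beyond the few the Java platform guarantees (US-ASCII, ISO-8859-1, UTF-8, UTF-16), is only available if the JDK ships it.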

2.7 Java classes for C structures

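Fields in the Java class must appear in the same order as in the C/C++ struct, because JNA lays them out in native memory by position. Otherwise a NoSuchFieldError is reported, or fields are read from the wrong offsets.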

C:

```c
// Timed recording configuration
typedef struct tagCFG_RECORD_INFO
{
    int              nChannelID;                                  // Channel number (from 0)
    CFG_TIME_SECTION stuTimeSection[WEEK_DAY_NUM][MAX_REC_TSECT]; // Schedule
    int              nPreRecTime;       // Pre-record time; 0 means disabled (0 to 300)
    BOOL             bRedundancyEn;     // Redundant recording switch
    int              nStreamType;       // 0: main stream, 1: sub stream 1, 2: sub stream 2, 3: sub stream 3
    int              nProtocolVer;      // Protocol version number, read-only
    // Capability
    BOOL             abHolidaySchedule; // If TRUE, holiday settings exist and bHolidayEn and stuHolTimeSection are valid
    BOOL             bHolidayEn;        // Holiday recording enabled: TRUE enabled, FALSE disabled
    CFG_TIME_SECTION stuHolTimeSection[MAX_REC_TSECT]; // Holiday recording schedule
} CFG_RECORD_INFO;
```

Java:

```java
// Timed recording configuration (requires java.util.Arrays and java.util.List)
public static class CFG_RECORD_INFO extends Structure {
    public int nChannelID;            // Channel number (from 0)
    // The 2-D C array is mapped as WEEK_DAY_NUM structs, each holding one day's time sections
    public TIME_SECTION_WEEK_DAY_6[] stuTimeSection =
            (TIME_SECTION_WEEK_DAY_6[]) new TIME_SECTION_WEEK_DAY_6().toArray(WEEK_DAY_NUM); // Schedule
    public int nPreRecTime;           // Pre-record time; 0 means disabled (0 to 300)
    public int bRedundancyEn;         // Redundant recording switch (BOOL)
    public int nStreamType;           // 0: main stream, 1 to 3: sub streams 1 to 3
    public int nProtocolVer;          // Protocol version number, read-only
    // Capability
    public int abHolidaySchedule;     // If TRUE, holiday settings exist and bHolidayEn and stuHolTimeSection are valid
    public int bHolidayEn;            // Holiday recording enabled: TRUE enabled, FALSE disabled
    public TIME_SECTION_WEEK_DAY_6 stuHolTimeSection; // Holiday recording schedule

    // Field order JNA uses for the native layout; must match the C struct exactly
    @Override
    protected List<String> getFieldOrder() {
        return Arrays.asList("nChannelID", "stuTimeSection", "nPreRecTime", "bRedundancyEn",
                "nStreamType", "nProtocolVer", "abHolidaySchedule", "bHolidayEn", "stuHolTimeSection");
    }
}

// Copy the native data at pNativeData + OffsetOfpNativeData into a JNA structure
public static void GetPointerDataToStruct(Pointer pNativeData, long OffsetOfpNativeData, Structure pJavaStu) {
    pJavaStu.write();                              // sync the Java fields into the structure's own memory
    Pointer pJavaMem = pJavaStu.getPointer();
    pJavaMem.write(0, pNativeData.getByteArray(OffsetOfpNativeData, pJavaStu.size()), 0,
            pJavaStu.size());                      // raw copy of size() bytes
    pJavaStu.read();                               // refresh the Java fields from the copied memory
}
```
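Why the order matters can be seen without JNA: native memory is just bytes, and each field is recovered from its offset. The stdlib sketch below reads a hypothetical three-field subset of the record structure (all 4-byte int/BOOL fields, so no padding) from a little-endian byte array, as on x86/x64; Structure.read() does the equivalent for every field in getFieldOrder() order. A Java class that declared nPreRecTime before nChannelID would silently receive the channel number in the wrong field.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical 3-field subset of a C struct, not the full CFG_RECORD_INFO:
//   struct { int nChannelID; int nPreRecTime; BOOL bRedundancyEn; };  (BOOL is a 4-byte int on Windows)
final class StructLayoutDemo {
    private StructLayoutDemo() {}

    static final class RecordInfo {
        int nChannelID;
        int nPreRecTime;
        int bRedundancyEn;
    }

    /** Reads the fields at offsets 0, 4 and 8, in declaration order, as little-endian ints. */
    static RecordInfo read(byte[] nativeBytes) {
        ByteBuffer buf = ByteBuffer.wrap(nativeBytes).order(ByteOrder.LITTLE_ENDIAN);
        RecordInfo info = new RecordInfo();
        info.nChannelID = buf.getInt();    // offset 0
        info.nPreRecTime = buf.getInt();   // offset 4
        info.bRedundancyEn = buf.getInt(); // offset 8
        return info;
    }
}
```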

3. JavaCV

JavaCV wraps libraries commonly used in computer vision, including OpenCV, ARToolKitPlus, libdc1394 2.x, PGR FlyCapture, and FFmpeg, and can process audio, video, images, and more.

3.1 Adding JavaCV

```xml
<dependency>
    <groupId>org.bytedeco</groupId>
    <artifactId>javacv</artifactId>
    <version>1.5.3</version>
</dependency>
<dependency>
    <groupId>org.bytedeco</groupId>
    <artifactId>ffmpeg</artifactId>
    <version>4.2.2-1.5.3</version>
</dependency>
<!-- Dependencies for different environments can be selected with Maven profiles -->
<dependency>
    <groupId>org.bytedeco</groupId>
    <artifactId>ffmpeg</artifactId>
    <version>4.2.2-1.5.3</version>
    <classifier>windows-x86_64</classifier>
    <!-- On Linux use: <classifier>linux-x86_64</classifier> -->
</dependency>
```

3.2 Usage

The Dahua stream obtained through NetSDK above has its Dahua wrapper removed to yield the raw H.264 stream. Once a key frame is detected, the raw H.264 data is written into a PipedOutputStream; the connected PipedInputStream is passed to the constructor of JavaCV's FFmpegFrameGrabber, and FFmpeg in JavaCV then pushes the stream to the streaming media server.
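The hand-off just described can be sketched with the JDK's piped streams. The hypothetical H264PipeFeeder below drops data until the first chunk that begins with an SPS (NAL type 7) or IDR slice (type 5), then writes everything into a PipedOutputStream whose connected PipedInputStream would be given to FFmpegFrameGrabber. It assumes each call delivers one frame starting with an Annex-B start code. PipedInputStream's default buffer is only 1024 bytes, and a write blocks while that buffer is full until the reading thread catches up.

```java
import java.io.IOException;
import java.io.PipedInputStream;
import java.io.PipedOutputStream;
import java.io.UncheckedIOException;

// Hypothetical feeder between the SDK callback (writer thread) and FFmpegFrameGrabber (reader thread).
final class H264PipeFeeder {
    private final PipedOutputStream out;
    private boolean started; // set once the first key frame has been written

    H264PipeFeeder(PipedInputStream in) {
        try {
            this.out = new PipedOutputStream(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Assumes the chunk starts with a start code; true if its first NAL unit is an SPS (7) or IDR slice (5). */
    static boolean startsWithKeyFrame(byte[] data, int len) {
        int header;
        if (len > 3 && data[0] == 0 && data[1] == 0 && data[2] == 1) {
            header = 3;
        } else if (len > 4 && data[0] == 0 && data[1] == 0 && data[2] == 0 && data[3] == 1) {
            header = 4;
        } else {
            return false;
        }
        int nalType = data[header] & 0x1F;
        return nalType == 7 || nalType == 5;
    }

    /** Called with already-unwrapped H.264 bytes; drops everything before the first key frame. */
    synchronized void onH264(byte[] data, int len) {
        if (!started) {
            if (!startsWithKeyFrame(data, len)) {
                return; // the decoder cannot use frames that precede a key frame
            }
            started = true;
        }
        try {
            out.write(data, 0, len); // blocks while the pipe buffer is full
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In use, `onH264` would run on the SDK callback thread while `new FFmpegFrameGrabber(in, 0)` reads the same PipedInputStream on another thread; the JDK documentation warns against using both ends of a pipe from a single thread, since that can deadlock.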

Two classes do most of the work: FFmpegFrameGrabber (frame grabber) and FFmpegFrameRecorder (frame recorder/streamer).

```java
/**
 * @Title: from
 * @Description: Analyze the video stream
 * @return RtmpPush, or null on failure
 **/
public RtmpPush from() {
    try {
        // inputStream is the PipedInputStream fed by the SDK callback
        grabber = new FFmpegFrameGrabber(inputStream, 0);
        grabber.setVideoOption("vcodec", "copy");
        grabber.setFormat("h264");                  // raw H.264 elementary stream
        grabber.setOption("rtsp_transport", "tcp"); // only relevant for RTSP input
        grabber.setPixelFormat(avutil.AV_PIX_FMT_YUV420P);
        grabber.setVideoCodec(avcodec.AV_CODEC_ID_H264); // match the raw H.264 input
        // Wait until the SDK callback has delivered data; with no data, avformat_open_input() blocks
        long stime = System.currentTimeMillis();
        while (true) {
            Thread.sleep(100);
            if (System.currentTimeMillis() - stime > 20 * 1000) {
                log.error("[id:" + pojo.getSerNum() + " ch:" + pojo.getChannel() + "] No video stream data for more than 20 s");
                release();
                return null;
            }
            // 1024 bytes is PipedInputStream's default buffer size, i.e. the pipe buffer is full
            if (inputStream.available() == 1024) {
                log.error("[id:" + pojo.getSerNum() + " ch:" + pojo.getChannel() + "] inputStream is 1024");
                break;
            }
        }
        // grabber.setOption("stimeout", "20000");
        // start() can block here when the device signal is poor. FFmpegFrameGrabber.start() runs inside
        // synchronized (org.bytedeco.ffmpeg.global.avcodec.class), so one blocked grabber can stall all others;
        // as a temporary workaround, the JavaCV source was modified to remove that synchronized block.
        grabber.start();
        isGrabberStartOver = true;
        log.error("[id:" + pojo.getSerNum() + " ch:" + pojo.getChannel() + "] Grabber start over!");
        // Cameras usually run at 25 fps; fall back to 25 if the probed rate is implausible
        if (grabber.getFrameRate() > 0 && grabber.getFrameRate() < 100) {
            framerate = grabber.getFrameRate();
        } else {
            framerate = 25.0;
        }
    } catch (Exception e) {
        log.error(e.getMessage());
        release();
        return null;
    }
    return this;
}

/**
 * @Title: push
 * @Description: Push the video
 * @return void
 **/
public void push() {
    try {
        // Mux into FLV and push to the streaming media server over RTMP (0 = no audio channels)
        this.recorder = new FFmpegFrameRecorder(pojo.getRtmp(), grabber.getImageWidth(), grabber.getImageHeight(), 0);
        this.recorder.setInterleaved(true);
        this.recorder.setVideoOptions(this.videoOption);
        // Bit rate
        this.recorder.setVideoBitrate(bitrate);
        // H.264 codec
        this.recorder.setVideoCodec(avcodec.AV_CODEC_ID_H264);
        // FLV container
        this.recorder.setFormat("flv");
        this.recorder.setPixelFormat(avutil.AV_PIX_FMT_YUV420P);
        // Frame rate: keep it at 25 or above for acceptable quality; below 25 the picture flickers
        this.recorder.setFrameRate(framerate);
        // Key frame interval (GOP), usually equal to or twice the frame rate
        this.recorder.setGopSize((int) framerate * 2);
        // Start from the grabber's format context so packets can be copied without re-encoding
        AVFormatContext fc = grabber.getFormatContext();
        this.recorder.start(fc);

        AVPacket pkt;
        long dts = 0;
        long pts = 0;
        // Exit once packets have stayed empty for more than 30 s
        long startTs = CurrentTimeMillisClock.getInstance().now();
        long nullTs;
        for (int no_frame_index = 0; err_index < 5 && !isExit; ) {
            if (exitcode == 1) {
                break;
            }
            pkt = grabber.grabPacket();
            if (pkt == null || pkt.size() <= 0 || pkt.data() == null) {
                if (no_frame_index == 0) {
                    log.error("[id:" + pojo.getSerNum() + " ch:" + pojo.getChannel() + "] pkt is null, continue...");
                }
                nullTs = CurrentTimeMillisClock.getInstance().now();
                // Count consecutive empty packets
                no_frame_index++;
                exitcode = 2;
                if (nullTs - startTs > 30 * 1000) {
                    break;
                }
                Thread.sleep(100);
                continue;
            }
            startTs = CurrentTimeMillisClock.getInstance().now();
            if (no_frame_index != 0) {
                log.error("pkt is not null");
            }
            no_frame_index = 0;
            // Skip audio (stream index 1), releasing the packet first so it does not leak
            if (pkt.stream_index() == 1) {
                av_packet_unref(pkt);
                continue;
            }
            // Rewrite dts/pts: the SDK data does not restart from 0 each time, which stops the player from continuing playback
            pkt.pts(pts);
            pkt.dts(dts);
            err_index += (recorder.recordPacket(pkt) ? 0 : 1);
            // Advance pts and dts by one frame duration in time_base units
            timebase = grabber.getFormatContext().streams(pkt.stream_index()).time_base().den();
            pts += timebase / (int) framerate;
            dts += timebase / (int) framerate;
            // Decrement the buffer's reference count and reset the packet's other fields;
            // the buffer is freed automatically when the count reaches 0
            av_packet_unref(pkt);
        }
    } catch (Exception e) {
        log.error(e.getMessage());
    } finally {
        release();
    }
}
```

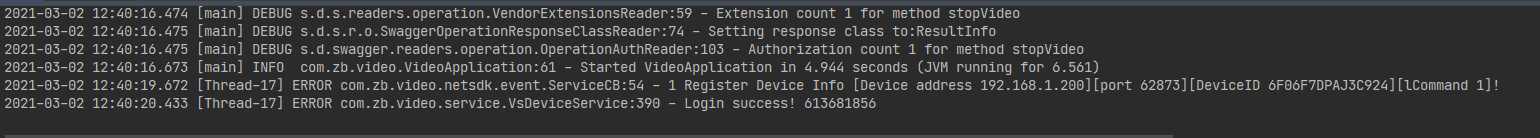
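In push(), pts and dts advance by `time_base().den() / (int) framerate` per packet. That equals one frame duration only when the time base numerator is 1 and the frame rate is a whole number: at 29.97 fps with a 1/90000 time base, for example, `(int)` truncates the rate to 29, the step becomes 3103 ticks instead of about 3003, and the timestamps advance roughly 3% faster than real time. A sketch that derives each timestamp from the frame index instead, using a hypothetical PtsCounter class:

```java
// Hypothetical helper: per-frame timestamps computed from the frame index, not a truncated integer step.
final class PtsCounter {
    private final long tbNum;  // time_base numerator
    private final long tbDen;  // time_base denominator
    private final double fps;
    private long frameIndex;

    PtsCounter(long tbNum, long tbDen, double fps) {
        if (tbNum <= 0 || tbDen <= 0 || fps <= 0) {
            throw new IllegalArgumentException("time base and fps must be positive");
        }
        this.tbNum = tbNum;
        this.tbDen = tbDen;
        this.fps = fps;
    }

    /** Frame n is at n / fps seconds; one tick is tbNum / tbDen seconds. Rounding is per frame, so it never accumulates. */
    long next() {
        long pts = Math.round(frameIndex * (double) tbDen / (fps * tbNum));
        frameIndex++;
        return pts;
    }
}
```

It would be built once from the stream's `time_base().num()` and `time_base().den()`, and in the loop `long ts = counter.next(); pkt.pts(ts); pkt.dts(ts);` would replace the manual accumulation. Giving dts the same value as pts, as push() does, is only valid for streams without B-frames.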

4. Results

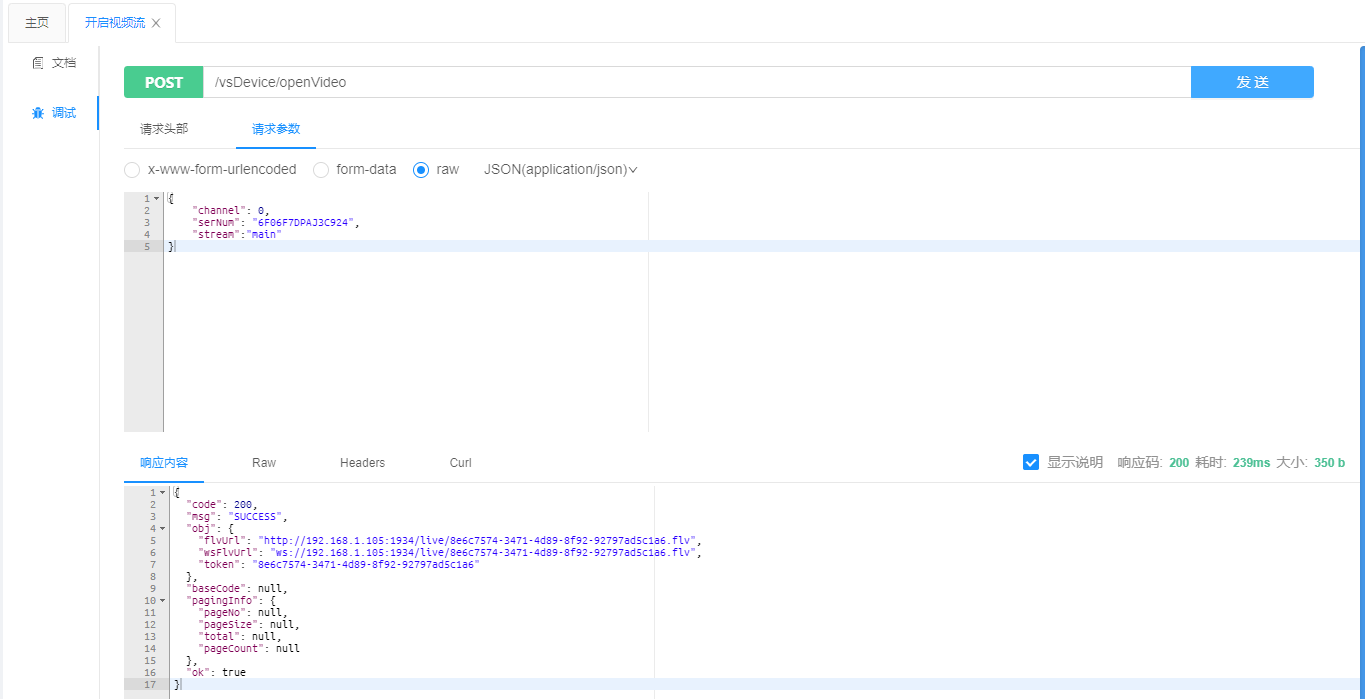

After configuring the camera and deploying NMS, run the project and wait for the camera to actively register. Then call the interface from Swagger to open the video stream and push the main stream video to NMS. After a successful push, copy the FLV address and open it as a network stream in VLC.

The stream can also be played in other streaming media players, web pages can use bilibili's flv.js, and the stream is also visible on the NMS management page.
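The FLV address copied above follows from the RTMP push address. Assuming NMS's default HTTP-FLV layout (HTTP port 8000, `http://host:8000/app/stream.flv`, with a `ws://` variant for WebSocket-FLV), the mapping can be sketched as below; the port and path pattern are assumptions to check against the NMS configuration, and FlvUrls is a hypothetical helper.

```java
import java.net.URI;

// Hypothetical helper: rtmp://host[:port]/app/stream -> scheme://host:httpPort/app/stream.flv
final class FlvUrls {
    private FlvUrls() {}

    static String toFlv(String rtmpUrl, String scheme, int httpPort) {
        URI rtmp = URI.create(rtmpUrl);
        if (!"rtmp".equalsIgnoreCase(rtmp.getScheme())) {
            throw new IllegalArgumentException("not an rtmp URL: " + rtmpUrl);
        }
        // The RTMP port (1935 by default) is dropped; HTTP-FLV is served on the HTTP port
        return scheme + "://" + rtmp.getHost() + ":" + httpPort + rtmp.getPath() + ".flv";
    }
}
```

For example, `FlvUrls.toFlv("rtmp://192.168.1.10/live/cam1", "http", 8000)` gives `http://192.168.1.10:8000/live/cam1.flv`, which can be opened in VLC or handed to flv.js.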