1. Version and planning

1.1 Version information

| Name | Version |
|---|---|
| kernel | 3.10.0-1160.el7.x86_64 |
| operating system | CentOS Linux release 7.9.2009 (Core) |
| docker | 20.10.11 |
| kubeadm | 1.23.0 |
| kubelet | 1.23.0 |
| kubectl | 1.23.0 |

1.2 Cluster planning

| IP | Hostname |
|---|---|
| 192.168.0.114 | k8s-master |
| 192.168.0.115 | k8s-node01 |
| 192.168.0.116 | k8s-node02 |

2. Deployment

Notes:

- Steps 1 through 8 must be performed on every node

- Master node only: steps 9 and 10

- Worker nodes only: step 11

1. Turn off the firewall

- Stop it now: systemctl stop firewalld

- Keep it off after reboot: systemctl disable firewalld

2. Disable SELinux

- Disable temporarily (until the next reboot): setenforce 0
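Because setenforce 0 only lasts until the next reboot, the setting usually has to be persisted as well. A minimal sketch, assuming the standard /etc/selinux/config file with a SELINUX= line. The function name set_selinux_mode is made up for illustration, and the file path is a parameter so the edit can be tried on a copy first:

```shell
# Hypothetical helper: rewrite the SELINUX= line in an SELinux config file.
# Usage: set_selinux_mode <mode> [config-file]
set_selinux_mode() {
    local mode="$1"
    local file="${2:-/etc/selinux/config}"
    local tmp
    tmp="$(mktemp)" || return 1
    # Only the active SELINUX= line is replaced; comment lines and
    # SELINUXTYPE= do not match the pattern and are left alone.
    sed -E "s/^SELINUX=.*/SELINUX=${mode}/" "$file" > "$tmp" && cat "$tmp" > "$file"
    local rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example (as root): set_selinux_mode permissive
```

Writing the result back with cat rather than mv keeps the original file's ownership and permissions.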

3. Disable swap

- Temporarily: swapoff -a

- Permanently: sed -ri 's/.*swap.*/#&/' /etc/fstab, which comments out every line in /etc/fstab that contains swap

- Verify: free -m (the Swap row should show 0)
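The sed one-liner above comments out any line that mentions swap, including lines that are already commented. A slightly more careful sketch is shown here, assuming an fstab where the swap entry has the word swap surrounded by whitespace. The function name disable_swap_entries is hypothetical, and the path is a parameter so it can be tested on a copy:

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Usage: disable_swap_entries [fstab-file]
disable_swap_entries() {
    local file="${1:-/etc/fstab}"
    local tmp
    tmp="$(mktemp)" || return 1
    # Skip lines that are already comments; prefix "#" to lines that
    # contain "swap" surrounded by whitespace.
    sed -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file" > "$tmp" \
        && cat "$tmp" > "$file"
    local rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example (as root): cp /etc/fstab /etc/fstab.bak && disable_swap_entries
```

Running it twice leaves the file unchanged the second time, which makes it safe to include in a setup script that may be re-run.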

4. Map host names to IP addresses

- Add the mappings to /etc/hosts according to the plan in 1.2

    192.168.0.114 k8s-master
    192.168.0.115 k8s-node01
    192.168.0.116 k8s-node02
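Since this step is repeated on every node, it can help to add the entries in a way that does not create duplicates when re-run. A minimal sketch follows; the function name add_host_entry is made up for illustration, and the hosts file path is a parameter so the behaviour can be checked on a scratch file:

```shell
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname is already mapped on a non-comment line.
# Usage: add_host_entry <ip> <hostname> [hosts-file]
add_host_entry() {
    local ip="$1" name="$2"
    local file="${3:-/etc/hosts}"
    # awk exits 0 when some non-comment line lists the hostname as a whole field.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (as root), using the plan from section 1.2:
#   add_host_entry 192.168.0.114 k8s-master
#   add_host_entry 192.168.0.115 k8s-node01
#   add_host_entry 192.168.0.116 k8s-node02
```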

5. Pass bridged IPv4 traffic to the iptables chains

- Add the configuration with the following command:

    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF

- Run sysctl --system to apply the configuration
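To confirm the two settings actually took effect, the values can be read back from /proc/sys. A minimal sketch, assuming the usual /proc/sys/net/bridge layout; the function name check_bridge_sysctls is hypothetical, and the optional argument exists only so the check can be tried against a fake directory tree:

```shell
# Hypothetical helper: confirm both bridge settings are active.
# Usage: check_bridge_sysctls [sysctl-root]
check_bridge_sysctls() {
    local root="${1:-/proc/sys}"
    local key value rc=0
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        if [ ! -r "$root/net/bridge/$key" ]; then
            # The net.bridge.* keys exist only once the br_netfilter module is loaded.
            echo "$key: missing (try: modprobe br_netfilter)"
            rc=1
            continue
        fi
        value="$(cat "$root/net/bridge/$key")"
        if [ "$value" = "1" ]; then
            echo "$key: ok"
        else
            echo "$key: is $value, expected 1"
            rc=1
        fi
    done
    return "$rc"
}

# Example: check_bridge_sysctls || echo "bridge settings are not active yet"
```

If the keys are reported as missing, loading br_netfilter and re-running sysctl --system is the usual remedy.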

6. Install Docker

- Installation:

    wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
    yum install docker-ce

- Start Docker and enable it at boot:

    systemctl start docker
    systemctl enable docker

- View version: docker version

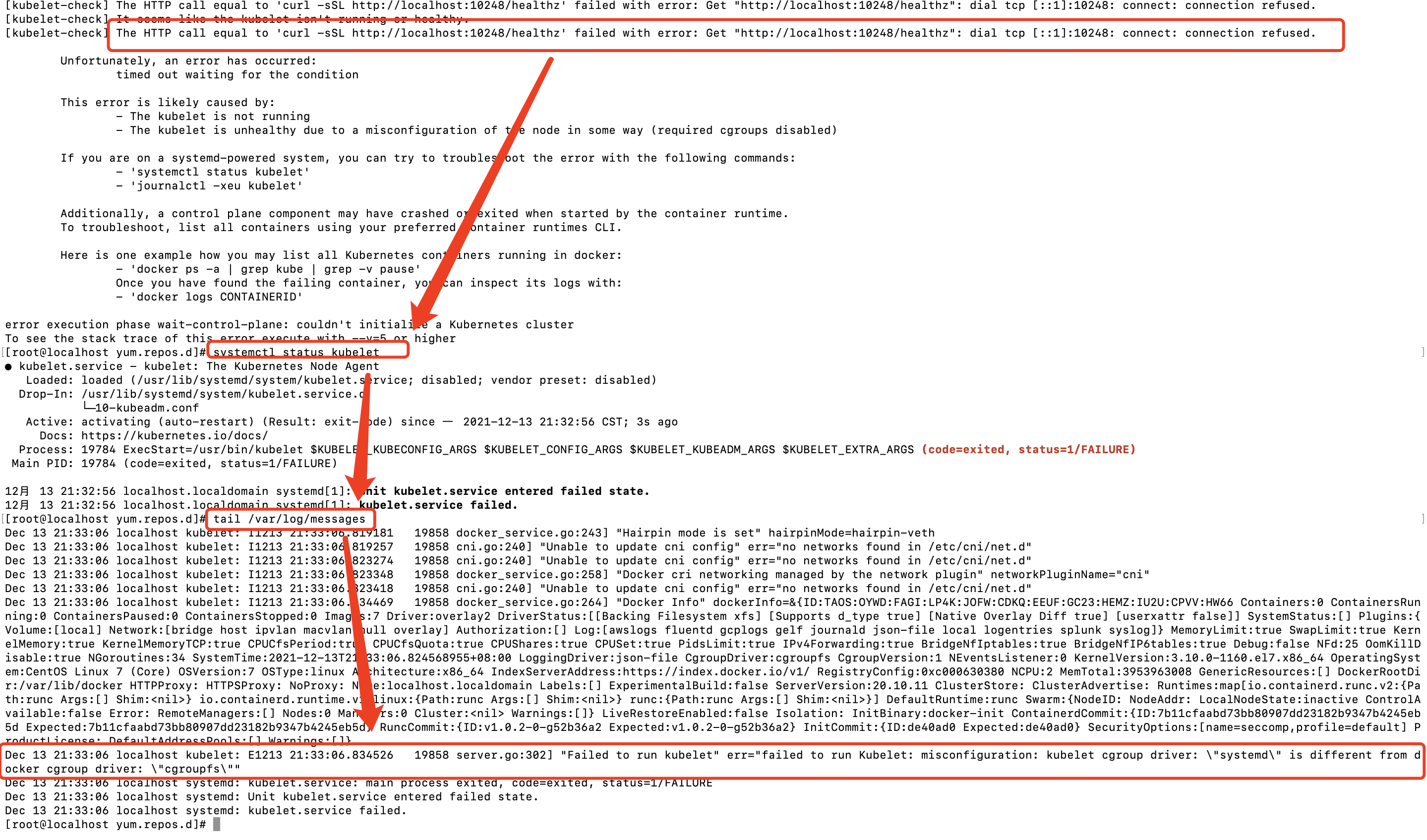

Possible pitfalls (skip this for now; you can come back and fix it later):

- Problem: docker info | grep -i cgroup shows which Cgroup Driver Docker uses. If it is cgroupfs, it may conflict with the kubelet and needs to be changed to systemd so that the two match

- Solution 1: vi /etc/docker/daemon.json, add the following to the file, and restart Docker

    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }

- Solution 2: alternatively, edit ExecStart in the Docker systemd unit and add the parameter --exec-opt native.cgroupdriver=systemd, then run systemctl daemon-reload and restart Docker

    # Edit docker.service
    vi /usr/lib/systemd/system/docker.service
    # Append the option to the ExecStart line
    ExecStart=/usr/bin/dockerd --exec-opt native.cgroupdriver=systemd
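The driver check described above can be scripted so it is easy to repeat after changing the configuration. A minimal sketch, assuming docker info prints a line of the form "Cgroup Driver: <name>"; the function name cgroup_driver_from_info is made up for illustration and it only parses text from standard input:

```shell
# Hypothetical helper: read `docker info` text on stdin and print the
# cgroup driver name.
cgroup_driver_from_info() {
    # The relevant line looks like " Cgroup Driver: cgroupfs".
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p' | head -n 1
}

# Example:
#   driver="$(docker info 2>/dev/null | cgroup_driver_from_info)"
#   [ "$driver" = "systemd" ] || echo "Docker uses '$driver', expected systemd"
```

Keeping the parsing separate from the docker call means the function can be exercised with sample text on a machine where Docker is not installed.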

7. Add the Alibaba Cloud yum repository

    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

Possible pitfalls (skip this for now and come back if needed):

- Problem: during installation, yum may report that the public key for XXX.rpm is not installed

- Solution 1: import the public keys, then run the installation again

    wget https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
    wget https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    rpm --import yum-key.gpg
    rpm --import rpm-package-key.gpg

- Solution 2: set gpgcheck to 0 in the kubernetes.repo file to skip the signature check

8. Install kubeadm, kubelet, kubectl

- Install: yum install -y kubelet-1.23.0 kubectl-1.23.0 kubeadm-1.23.0

- Or install the latest versions: yum install -y kubelet kubectl kubeadm

- Enable at boot: systemctl enable kubelet. Only enable the service here and do not start it: the configuration is not complete yet, so a start would fail

9. Initialize the master node

- Initialization command

    kubeadm init \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version v1.23.0 \
      --service-cidr=10.1.0.0/16 \
      --pod-network-cidr=10.244.0.0/16

- Parameter description

  - --image-repository string

    - Choose a container registry to pull control plane images from (default "k8s.gcr.io")

    - The Alibaba Cloud registry is used here; pulling from the default registry can be slow or fail outright

- If the installation fails, clean up the environment with the kubeadm reset command before reinstalling.

- Pitfall encountered:

  - Problem: The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

  - Solution: make Docker's cgroup driver match the kubelet's, as described in the pitfalls under step 6

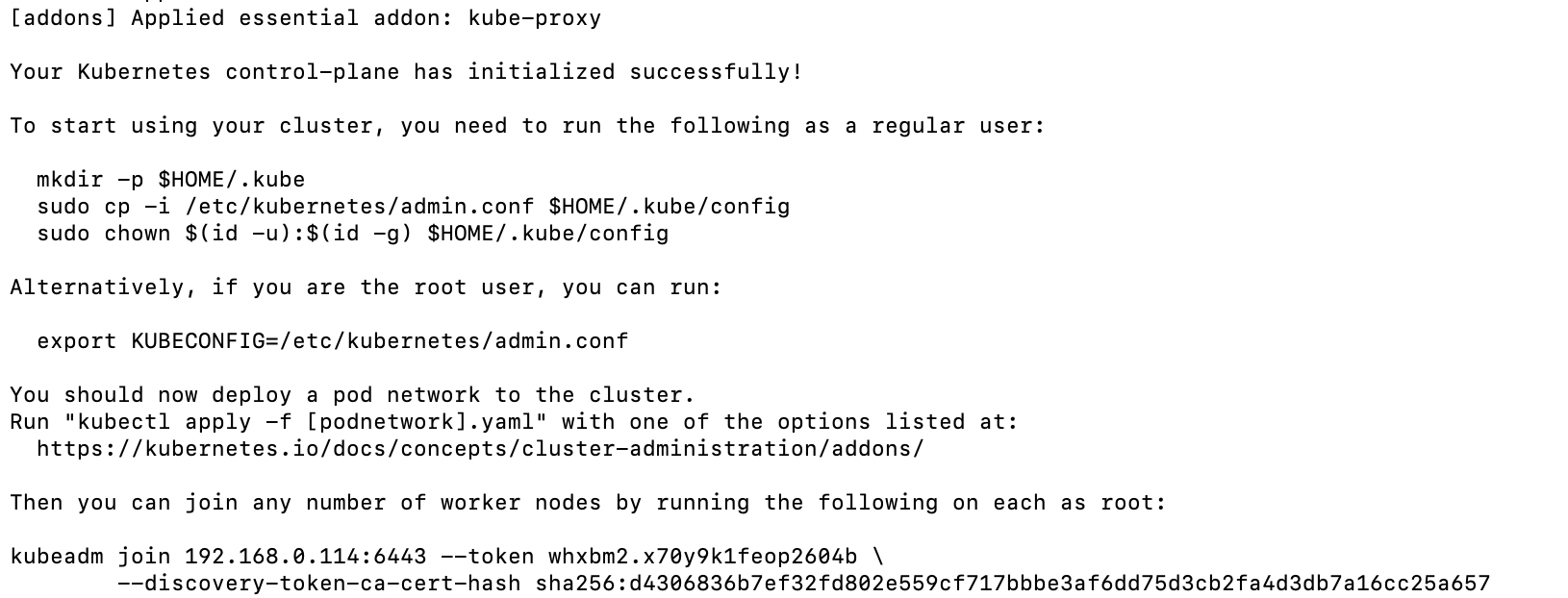
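The flags passed to kubeadm init above can also be kept in a configuration file, which is easier to review and reuse after a kubeadm reset. A minimal sketch, assuming the kubeadm.k8s.io/v1beta3 configuration API that kubeadm 1.23 accepts. The function name write_kubeadm_config and the file name kubeadm-config.yaml are made up for illustration; the values are the ones from the command above:

```shell
# Hypothetical helper: write a kubeadm config equivalent to the flags in step 9.
# Usage: write_kubeadm_config [output-file]
write_kubeadm_config() {
    local out="${1:-kubeadm-config.yaml}"
    # The heredoc body and its terminator must start at column 0.
    cat > "$out" << 'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.23.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.1.0.0/16
  podSubnet: 10.244.0.0/16
EOF
}

# Example (on the master):
#   write_kubeadm_config && kubeadm init --config kubeadm-config.yaml
```

When --config is used, the same settings should not also be passed as command-line flags.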

Initialization succeeded

- If output like the following appears, the initialization succeeded

- As the output instructs, run the following commands

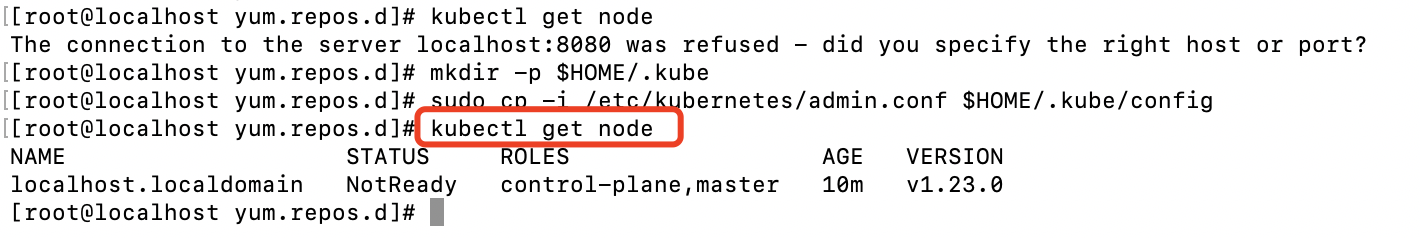

    # Create the hidden directory .kube
    mkdir -p $HOME/.kube
    # Copy admin.conf into that directory as the kubectl config
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    # Set the owner and group of the config file to the current user
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

- After running these commands you can use kubectl. Without them, kubectl reports: The connection to the server localhost:8080 was refused - did you specify the right host or port?

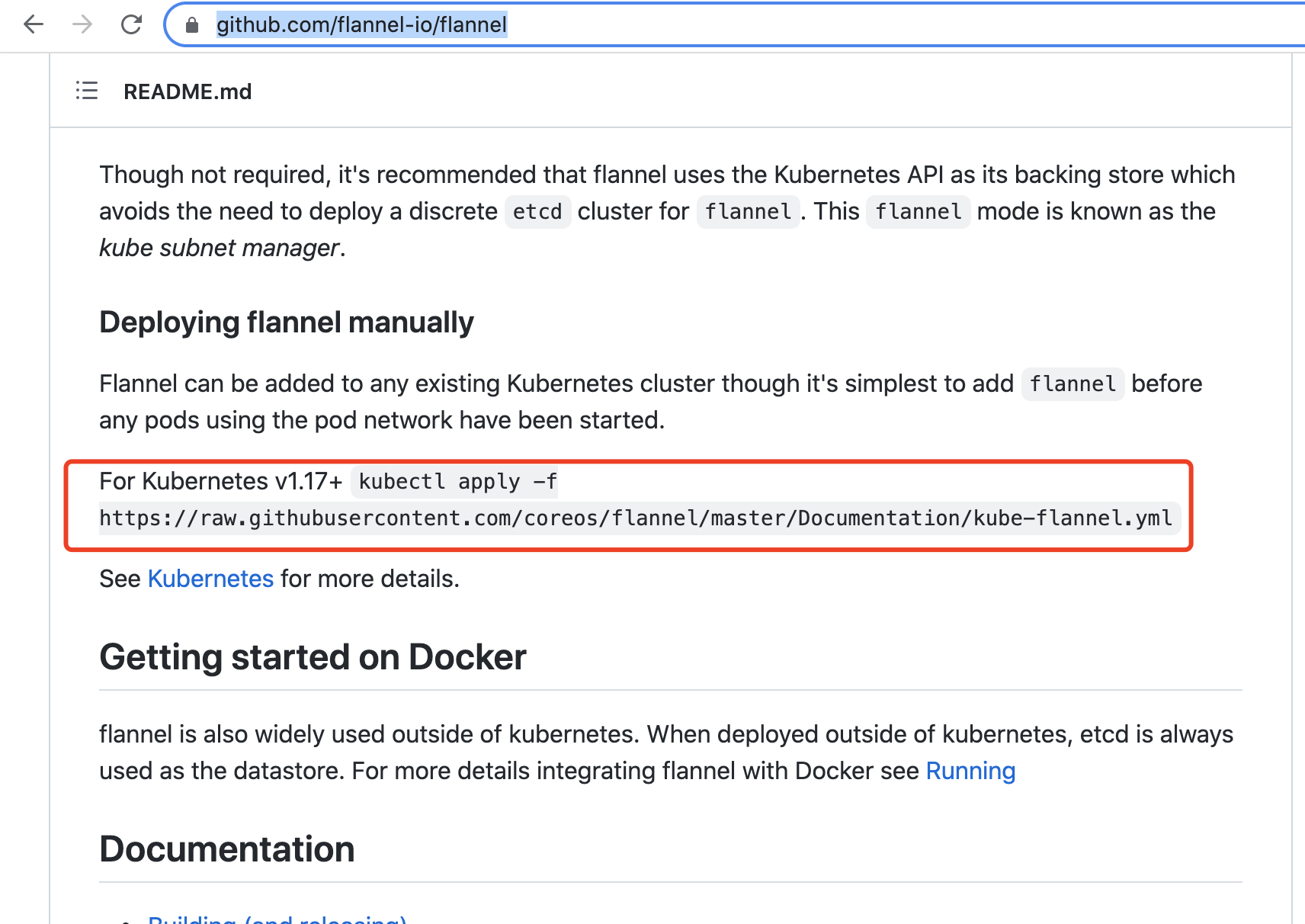

10. Install the pod network plug-in (CNI)

- GitHub address: https://github.com/flannel-io/flannel (you can also download the manifest file directly)

- Install the plug-in:

    kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

- Pitfall encountered

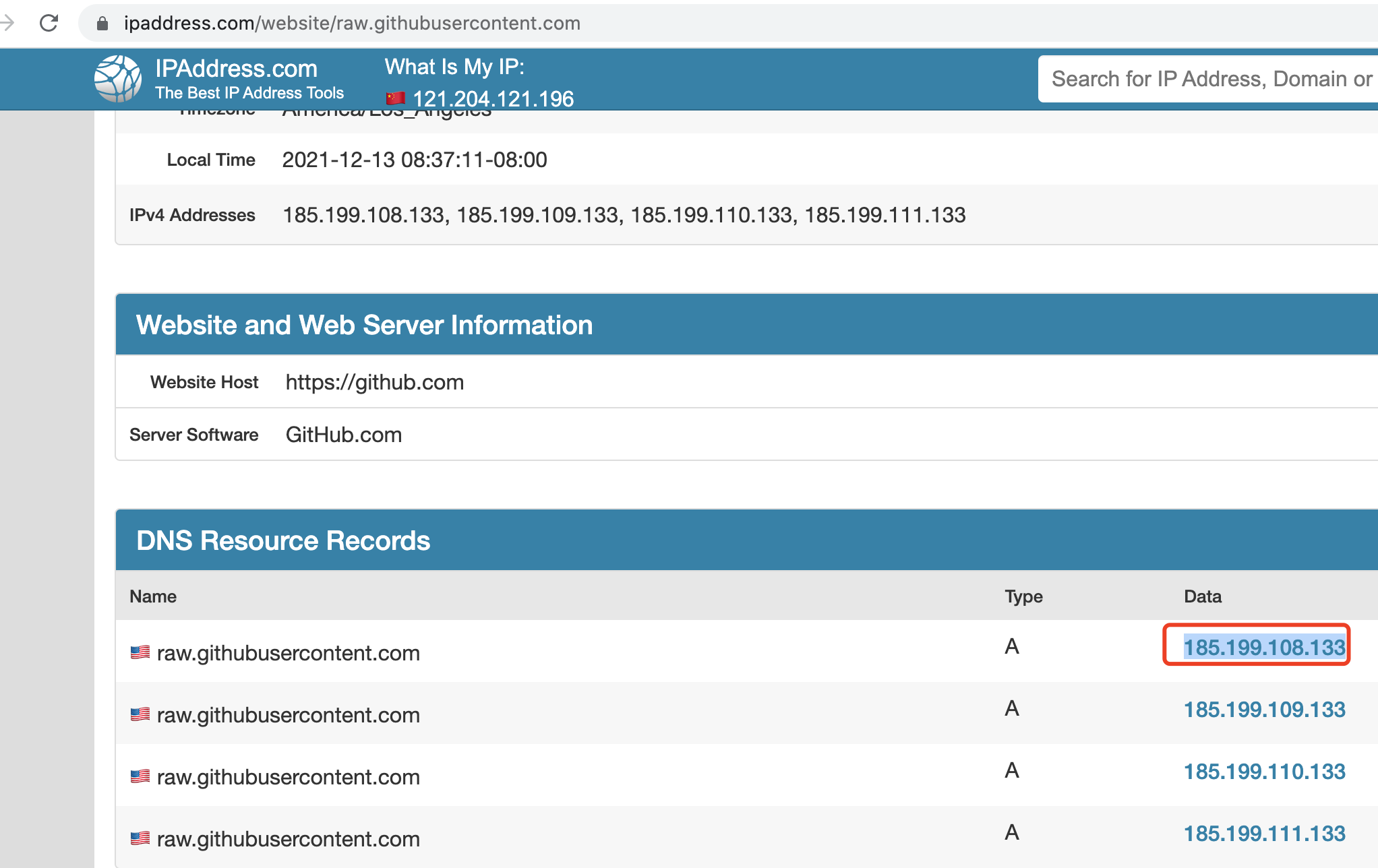

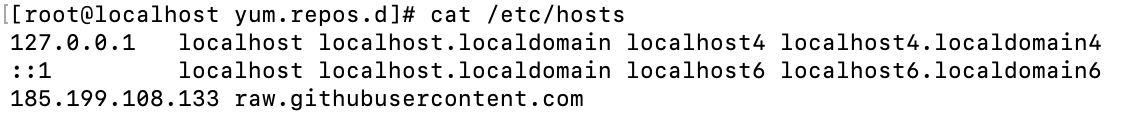

  - Problem: The connection to the server raw.githubusercontent.com was refused - did you specify the right host or port?

  - Solution: add a mapping for raw.githubusercontent.com to /etc/hosts. You can look up its IP address at https://www.ipaddress.com

-

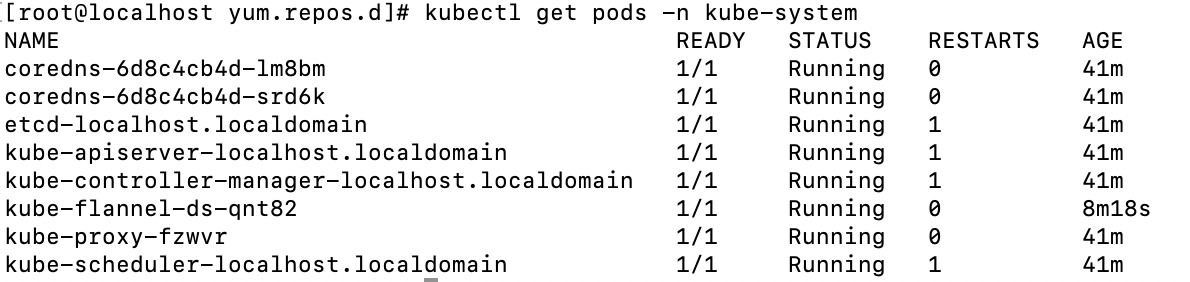

Check whether the deployment is successful: kubectl get Pods - n Kube system

-

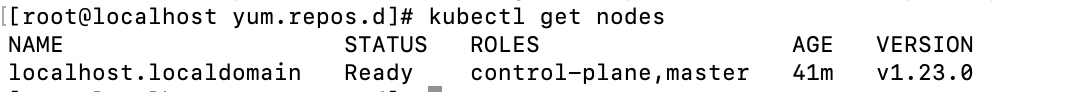

Check the node again and the status changes to ready

11. Join worker nodes to the cluster

- To add a node to the cluster, copy the kubeadm join command that kubeadm init printed on the master node and run it on the node to be added

    kubeadm join 192.168.0.114:6443 --token whxbm2.x70y9k1feop2604b \
      --discovery-token-ca-cert-hash sha256:d4306836b7ef32fd802e559cf717bbbe3af6dd75d3cb2fa4d3db7a16cc25a657

- After the join succeeds, run kubectl get nodes on the master node: the new node is listed and, after a short wait, its status becomes Ready
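The "wait a moment" part can be scripted by filtering the node list for anything that is not Ready yet. A minimal sketch, assuming the default column layout of kubectl get nodes --no-headers (name first, status second); the function name not_ready_nodes is hypothetical:

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example: poll on the master until every node is Ready (about 5 minutes at most).
#   for attempt in $(seq 1 30); do
#       pending="$(kubectl get nodes --no-headers | not_ready_nodes)"
#       [ -z "$pending" ] && break
#       echo "waiting for: $pending"
#       sleep 10
#   done
```

If the token from kubeadm init has expired by the time a node is added (bootstrap tokens are valid for 24 hours by default), running kubeadm token create --print-join-command on the master prints a fresh join command.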

Reference articles

Deployment of K8S cluster in CentOS7: https://www.cnblogs.com/caoxb/p/11243472.html