
CEPH CSI source code analysis (6) - RBD driver nodeserver analysis (Part 2)

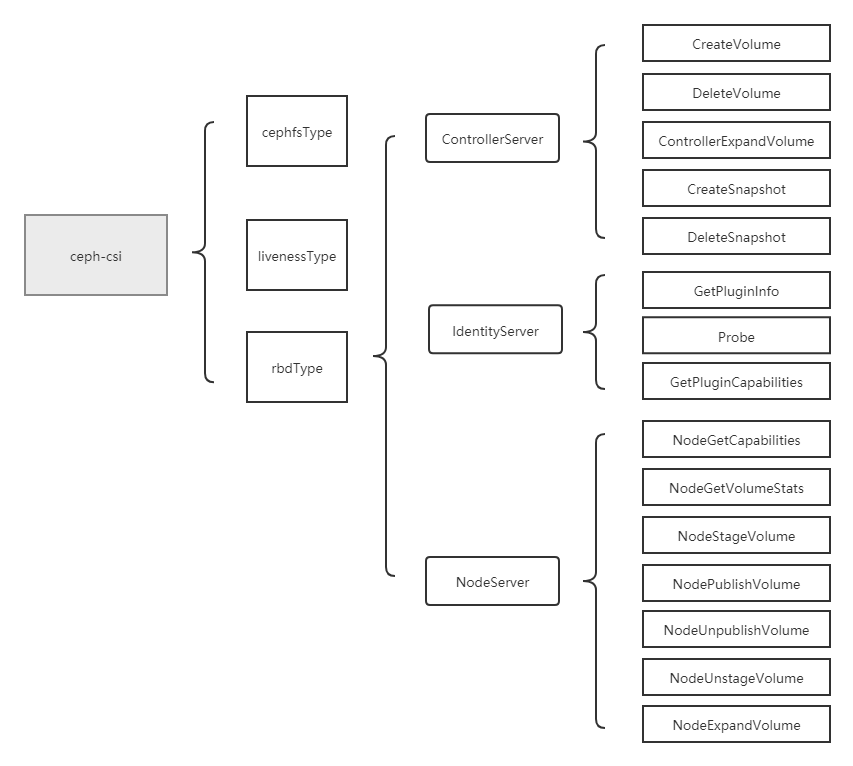

When the ceph-csi component is started with the driver type set to rbd, the services of the rbd driver are started. Depending on the controllerserver and nodeserver parameter settings, it then starts either the ControllerServer and IdentityServer, or the NodeServer and IdentityServer.

This analysis is based on tag v3.0.0:

https://github.com/ceph/ceph-csi/releases/tag/v3.0.0

The rbd driver analysis is divided into four parts: service entry analysis, controllerserver analysis, nodeserver analysis, and IdentityServer analysis.

The nodeserver implements NodeGetCapabilities, NodeGetVolumeStats, NodeStageVolume (map the rbd image and mount it to stagingPath), NodePublishVolume (mount to targetPath), NodeUnpublishVolume (umount targetPath), NodeUnstageVolume (umount stagingPath and unmap the rbd image), and NodeExpandVolume, which are analyzed one by one. This part covers NodeStageVolume, NodePublishVolume, NodeUnpublishVolume, and NodeUnstageVolume.

nodeserver analysis (Part 2)

Background: mounting a Ceph RBD image

An RBD image can be mapped to a block device in two main ways: (1) through the RBD kernel module and (2) through rbd-nbd. References: https://www.jianshu.com/p/bb9d14bd897c , http://xiaqunfeng.cc/2017/06/07/Map-RBD-Devices-on-NBD/

A Ceph RBD image is attached to a pod in two steps:

1. kubelet calls the NodeStageVolume method of the rbd-type ceph-csi nodeserver, which maps the RBD image to an rbd/nbd device on the node, then formats the device and mounts it to the staging path;

2. kubelet calls the NodePublishVolume method of the rbd-type ceph-csi nodeserver, which mounts the staging path from the previous step to the target path.

Background: unmounting a Ceph RBD image

A Ceph RBD image is detached from a pod in two steps:

1. kubelet calls the NodeUnpublishVolume method of the rbd-type ceph-csi nodeserver, which removes the mount between stagingPath and targetPath.

2. kubelet calls the NodeUnstageVolume method of the rbd-type ceph-csi nodeserver, which first removes the mount between stagingPath and the rbd/nbd device, and then unmaps the rbd/nbd device (that is, removes the mapping between the rbd/nbd device on the node and the Ceph RBD image).

After the rbd image is mounted to the pod, two mount relationships will appear on the node, as shown in the following example:

# mount | grep nbd

/dev/nbd0 on /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-e2104b0f-774e-420e-a388-1705344084a4/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-40b130e1-a630-11eb-8bea-246e968ec20c type xfs (rw,relatime,nouuid,attr2,inode64,noquota,_netdev)

/dev/nbd0 on /home/cld/kubernetes/lib/kubelet/pods/80114f88-2b09-440c-aec2-54c16efe6923/volumes/kubernetes.io~csi/pvc-e2104b0f-774e-420e-a388-1705344084a4/mount type xfs (rw,relatime,nouuid,attr2,inode64,noquota,_netdev)

Here /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-e2104b0f-774e-420e-a388-1705344084a4/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-40b130e1-a630-11eb-8bea-246e968ec20c is the staging path, /home/cld/kubernetes/lib/kubelet/pods/80114f88-2b09-440c-aec2-54c16efe6923/volumes/kubernetes.io~csi/pvc-e2104b0f-774e-420e-a388-1705344084a4/mount is the target path, and /dev/nbd0 is the nbd device.

Note

When an rbd image is attached to multiple pods on the same node, NodeStageVolume is called only once and NodePublishVolume is called once per pod. In other words, the staging path corresponds to the rbd image and each target path corresponds to a pod, so there is a single staging path and multiple target paths.

The same applies to unmounting: NodeUnstageVolume is called only after all pods that use the rbd image on that node have been deleted.
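The one-staging-path, many-target-paths relationship can be sketched as a small bookkeeping model. This is only an illustration of the call pattern described above, not kubelet or ceph-csi code; the type nodeMounts and its methods are hypothetical names.

```go
package main

import "fmt"

// nodeMounts is a hypothetical, simplified model of one node:
// one staging path per volume, one target path per pod using it.
type nodeMounts struct {
	staged  map[string]bool            // volID -> staging path mounted?
	targets map[string]map[string]bool // volID -> set of target paths
}

func newNodeMounts() *nodeMounts {
	return &nodeMounts{
		staged:  map[string]bool{},
		targets: map[string]map[string]bool{},
	}
}

// publish records a target path and reports whether the volume
// had to be staged first (the NodeStageVolume case).
func (n *nodeMounts) publish(volID, targetPath string) bool {
	stagedNow := false
	if !n.staged[volID] {
		n.staged[volID] = true
		n.targets[volID] = map[string]bool{}
		stagedNow = true
	}
	n.targets[volID][targetPath] = true
	return stagedNow
}

// unpublish removes a target path and reports whether the volume
// was unstaged because no target path is left (the NodeUnstageVolume case).
func (n *nodeMounts) unpublish(volID, targetPath string) bool {
	delete(n.targets[volID], targetPath)
	if n.staged[volID] && len(n.targets[volID]) == 0 {
		delete(n.staged, volID)
		delete(n.targets, volID)
		return true
	}
	return false
}

func main() {
	n := newNodeMounts()
	fmt.Println(n.publish("vol-1", "/pods/a/mount"))   // first pod: stage, then publish
	fmt.Println(n.publish("vol-1", "/pods/b/mount"))   // second pod: publish only
	fmt.Println(n.unpublish("vol-1", "/pods/a/mount")) // one pod still uses the volume
	fmt.Println(n.unpublish("vol-1", "/pods/b/mount")) // last pod gone: unstage
}
```

In this model the staging step happens exactly once per volume, regardless of how many pods publish it.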

(4)NodeStageVolume

Overview

Map the RBD image to the rbd/nbd device on the node, format it and mount it to the staging path.

NodeStageVolume mounts the volume to a staging path on the node.

- Stash image metadata under staging path

- Map the image (creates a device)

- Create the staging file/directory under staging path

- Stage the device (mount the device mapped for image)

Main steps:

(1) Map RBD image to rbd/nbd device on node;

(2) Format rbd device (do not format when volumeMode is block) and mount it to staging path.

NodeStageVolume

NodeStageVolume main process:

(1) Verify request parameters and AccessMode;

(2) Obtain volID from request parameters;

(3) Build the Ceph credentials from the secret (the secret is passed in by kubelet);

(4) Check whether the stagingPath exists and is already mounted;

(5) Obtain the image name from the volume journal according to volID;

(6) Create image-meta.json under stagingParentPath to store the image metadata;

(7) Call ns.stageTransaction to perform the map and mount operations.

//ceph-csi/internal/rbd/nodeserver.go

func (ns *NodeServer) NodeStageVolume(ctx context.Context, req *csi.NodeStageVolumeRequest) (*csi.NodeStageVolumeResponse, error) {

// (1) Verify request parameters

if err := util.ValidateNodeStageVolumeRequest(req); err != nil {

return nil, err

}

// Verify AccessMode

isBlock := req.GetVolumeCapability().GetBlock() != nil

disableInUseChecks := false

// MULTI_NODE_MULTI_WRITER is supported by default for Block access type volumes

if req.VolumeCapability.AccessMode.Mode == csi.VolumeCapability_AccessMode_MULTI_NODE_MULTI_WRITER {

if !isBlock {

klog.Warningf(util.Log(ctx, "MULTI_NODE_MULTI_WRITER currently only supported with volumes of access type `block`, invalid AccessMode for volume: %v"), req.GetVolumeId())

return nil, status.Error(codes.InvalidArgument, "rbd: RWX access mode request is only valid for volumes with access type `block`")

}

disableInUseChecks = true

}

// (2) Get volID from request parameters

volID := req.GetVolumeId()

// (3) Build the Ceph credentials from the secret

cr, err := util.NewUserCredentials(req.GetSecrets())

if err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

defer cr.DeleteCredentials()

if acquired := ns.VolumeLocks.TryAcquire(volID); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), volID)

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, volID)

}

defer ns.VolumeLocks.Release(volID)

stagingParentPath := req.GetStagingTargetPath()

stagingTargetPath := stagingParentPath + "/" + volID

// check is it a static volume

staticVol := false

val, ok := req.GetVolumeContext()["staticVolume"]

if ok {

if staticVol, err = strconv.ParseBool(val); err != nil {

return nil, status.Error(codes.InvalidArgument, err.Error())

}

}

// (4) Check whether the stagingPath exists and is already mounted

var isNotMnt bool

// check if stagingPath is already mounted

isNotMnt, err = mount.IsNotMountPoint(ns.mounter, stagingTargetPath)

if err != nil && !os.IsNotExist(err) {

return nil, status.Error(codes.Internal, err.Error())

}

if !isNotMnt {

util.DebugLog(ctx, "rbd: volume %s is already mounted to %s, skipping", volID, stagingTargetPath)

return &csi.NodeStageVolumeResponse{}, nil

}

volOptions, err := genVolFromVolumeOptions(ctx, req.GetVolumeContext(), req.GetSecrets(), disableInUseChecks)

if err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

// (5) Get image name from volume journal according to volID

// get rbd image name from the volume journal

// for static volumes, the image name is actually the volume ID itself

switch {

case staticVol:

volOptions.RbdImageName = volID

default:

var vi util.CSIIdentifier

var imageAttributes *journal.ImageAttributes

err = vi.DecomposeCSIID(volID)

if err != nil {

err = fmt.Errorf("error decoding volume ID (%s) (%s)", err, volID)

return nil, status.Error(codes.Internal, err.Error())

}

j, err2 := volJournal.Connect(volOptions.Monitors, cr)

if err2 != nil {

klog.Errorf(

util.Log(ctx, "failed to establish cluster connection: %v"),

err2)

return nil, status.Error(codes.Internal, err.Error())

}

defer j.Destroy()

imageAttributes, err = j.GetImageAttributes(

ctx, volOptions.Pool, vi.ObjectUUID, false)

if err != nil {

err = fmt.Errorf("error fetching image attributes for volume ID (%s) (%s)", err, volID)

return nil, status.Error(codes.Internal, err.Error())

}

volOptions.RbdImageName = imageAttributes.ImageName

}

volOptions.VolID = volID

transaction := stageTransaction{}

// (6) Create image-meta.json under stagingParentPath to store the image metadata

// Stash image details prior to mapping the image (useful during Unstage as it has no

// voloptions passed to the RPC as per the CSI spec)

err = stashRBDImageMetadata(volOptions, stagingParentPath)

if err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

defer func() {

if err != nil {

ns.undoStagingTransaction(ctx, req, transaction)

}

}()

// (7) Call ns.stageTransaction to perform the map/mount operations

// perform the actual staging and if this fails, have undoStagingTransaction

// cleans up for us

transaction, err = ns.stageTransaction(ctx, req, volOptions, staticVol)

if err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

util.DebugLog(ctx, "rbd: successfully mounted volume %s to stagingTargetPath %s", req.GetVolumeId(), stagingTargetPath)

return &csi.NodeStageVolumeResponse{}, nil

}

1.ValidateNodeStageVolumeRequest

ValidateNodeStageVolumeRequest verifies the following:

(1) volume capability parameter cannot be empty;

(2) volume ID parameter cannot be empty;

(3) The staging target path parameter cannot be empty;

(4) The stage secrets parameter cannot be empty;

(5) The staging path must exist on the node.

//ceph-csi/internal/util/validate.go

func ValidateNodeStageVolumeRequest(req *csi.NodeStageVolumeRequest) error {

if req.GetVolumeCapability() == nil {

return status.Error(codes.InvalidArgument, "volume capability missing in request")

}

if req.GetVolumeId() == "" {

return status.Error(codes.InvalidArgument, "volume ID missing in request")

}

if req.GetStagingTargetPath() == "" {

return status.Error(codes.InvalidArgument, "staging target path missing in request")

}

if req.GetSecrets() == nil || len(req.GetSecrets()) == 0 {

return status.Error(codes.InvalidArgument, "stage secrets cannot be nil or empty")

}

// validate stagingpath exists

ok := checkDirExists(req.GetStagingTargetPath())

if !ok {

return status.Error(codes.InvalidArgument, "staging path does not exists on node")

}

return nil

}

2.stashRBDImageMetadata

stashRBDImageMetadata creates image-meta.json under stagingParentPath, which is used to store the metadata of the image.

//ceph-csi/internal/rbd/rbd_util.go

const stashFileName = "image-meta.json"

func stashRBDImageMetadata(volOptions *rbdVolume, path string) error {

var imgMeta = rbdImageMetadataStash{

// there are no checks for this at present

Version: 2, // nolint:gomnd // number specifies version.

Pool: volOptions.Pool,

ImageName: volOptions.RbdImageName,

Encrypted: volOptions.Encrypted,

}

imgMeta.NbdAccess = false

if volOptions.Mounter == rbdTonbd && hasNBD {

imgMeta.NbdAccess = true

}

encodedBytes, err := json.Marshal(imgMeta)

if err != nil {

return fmt.Errorf("failed to marshall JSON image metadata for image (%s): (%v)", volOptions, err)

}

fPath := filepath.Join(path, stashFileName)

err = ioutil.WriteFile(fPath, encodedBytes, 0600)

if err != nil {

return fmt.Errorf("failed to stash JSON image metadata for image (%s) at path (%s): (%v)", volOptions, fPath, err)

}

return nil

}

root@cld-dnode3-1091:/home/zhongjialiang# ls /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/

image-meta.json 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74/

3.ns.stageTransaction

Main process:

(1) Call attachRBDImage to map the RBD image to a device on the node;

(2) Call ns.mountVolumeToStagePath to format the rbd device on the node and mount it to the staging path.

//ceph-csi/internal/rbd/nodeserver.go

func (ns *NodeServer) stageTransaction(ctx context.Context, req *csi.NodeStageVolumeRequest, volOptions *rbdVolume, staticVol bool) (stageTransaction, error) {

transaction := stageTransaction{}

var err error

var readOnly bool

var feature bool

var cr *util.Credentials

cr, err = util.NewUserCredentials(req.GetSecrets())

if err != nil {

return transaction, err

}

defer cr.DeleteCredentials()

err = volOptions.Connect(cr)

if err != nil {

klog.Errorf(util.Log(ctx, "failed to connect to volume %v: %v"), volOptions.RbdImageName, err)

return transaction, err

}

defer volOptions.Destroy()

// Allow image to be mounted on multiple nodes if it is ROX

if req.VolumeCapability.AccessMode.Mode == csi.VolumeCapability_AccessMode_MULTI_NODE_READER_ONLY {

util.ExtendedLog(ctx, "setting disableInUseChecks on rbd volume to: %v", req.GetVolumeId)

volOptions.DisableInUseChecks = true

volOptions.readOnly = true

}

if kernelRelease == "" {

// fetch the current running kernel info

kernelRelease, err = util.GetKernelVersion()

if err != nil {

return transaction, err

}

}

if !util.CheckKernelSupport(kernelRelease, deepFlattenSupport) {

if !skipForceFlatten {

feature, err = volOptions.checkImageChainHasFeature(ctx, librbd.FeatureDeepFlatten)

if err != nil {

return transaction, err

}

if feature {

err = volOptions.flattenRbdImage(ctx, cr, true, rbdHardMaxCloneDepth, rbdSoftMaxCloneDepth)

if err != nil {

return transaction, err

}

}

}

}

// Mapping RBD image

var devicePath string

devicePath, err = attachRBDImage(ctx, volOptions, cr)

if err != nil {

return transaction, err

}

transaction.devicePath = devicePath

util.DebugLog(ctx, "rbd image: %s/%s was successfully mapped at %s\n",

req.GetVolumeId(), volOptions.Pool, devicePath)

if volOptions.Encrypted {

devicePath, err = ns.processEncryptedDevice(ctx, volOptions, devicePath)

if err != nil {

return transaction, err

}

transaction.isEncrypted = true

}

stagingTargetPath := getStagingTargetPath(req)

isBlock := req.GetVolumeCapability().GetBlock() != nil

err = ns.createStageMountPoint(ctx, stagingTargetPath, isBlock)

if err != nil {

return transaction, err

}

transaction.isStagePathCreated = true

// nodeStage Path

readOnly, err = ns.mountVolumeToStagePath(ctx, req, staticVol, stagingTargetPath, devicePath)

if err != nil {

return transaction, err

}

transaction.isMounted = true

if !readOnly {

// #nosec - allow anyone to write inside the target path

err = os.Chmod(stagingTargetPath, 0777)

}

return transaction, err

}

3.1 attachRBDImage

attachRBDImage main process:

(1) Call waitForPath to determine whether the image has already been mapped to the node;

(2) If it is not mapped on the node yet, call waitForrbdImage to check whether the image exists and whether it is already in use;

(3) Call createPath to map the image to the node.

//ceph-csi/internal/rbd/rbd_attach.go

func attachRBDImage(ctx context.Context, volOptions *rbdVolume, cr *util.Credentials) (string, error) {

var err error

image := volOptions.RbdImageName

useNBD := false

if volOptions.Mounter == rbdTonbd && hasNBD {

useNBD = true

}

devicePath, found := waitForPath(ctx, volOptions.Pool, image, 1, useNBD)

if !found {

backoff := wait.Backoff{

Duration: rbdImageWatcherInitDelay,

Factor: rbdImageWatcherFactor,

Steps: rbdImageWatcherSteps,

}

err = waitForrbdImage(ctx, backoff, volOptions)

if err != nil {

return "", err

}

devicePath, err = createPath(ctx, volOptions, cr)

}

return devicePath, err

}

createPath assembles the rbd command line and then runs the map command to map the RBD image to the node as an rbd device.

The rbd-nbd access mode is selected with --device-type nbd.

func createPath(ctx context.Context, volOpt *rbdVolume, cr *util.Credentials) (string, error) {

isNbd := false

imagePath := volOpt.String()

util.TraceLog(ctx, "rbd: map mon %s", volOpt.Monitors)

// Map options

mapOptions := []string{

"--id", cr.ID,

"-m", volOpt.Monitors,

"--keyfile=" + cr.KeyFile,

"map", imagePath,

}

// Choose access protocol

accessType := accessTypeKRbd

if volOpt.Mounter == rbdTonbd && hasNBD {

isNbd = true

accessType = accessTypeNbd

}

// Update options with device type selection

mapOptions = append(mapOptions, "--device-type", accessType)

if volOpt.readOnly {

mapOptions = append(mapOptions, "--read-only")

}

// Execute map

stdout, stderr, err := util.ExecCommand(ctx, rbd, mapOptions...)

if err != nil {

klog.Warningf(util.Log(ctx, "rbd: map error %v, rbd output: %s"), err, stderr)

// unmap rbd image if connection timeout

if strings.Contains(err.Error(), rbdMapConnectionTimeout) {

detErr := detachRBDImageOrDeviceSpec(ctx, imagePath, true, isNbd, volOpt.Encrypted, volOpt.VolID)

if detErr != nil {

klog.Warningf(util.Log(ctx, "rbd: %s unmap error %v"), imagePath, detErr)

}

}

return "", fmt.Errorf("rbd: map failed with error %v, rbd error output: %s", err, stderr)

}

devicePath := strings.TrimSuffix(stdout, "\n")

return devicePath, nil

}

3.2 mountVolumeToStagePath

Main process:

(1) When volumeMode is Filesystem, run mkfs to format rbd device;

(2) Mount rbd device to stagingPath.

//ceph-csi/internal/rbd/nodeserver.go

func (ns *NodeServer) mountVolumeToStagePath(ctx context.Context, req *csi.NodeStageVolumeRequest, staticVol bool, stagingPath, devicePath string) (bool, error) {

readOnly := false

fsType := req.GetVolumeCapability().GetMount().GetFsType()

diskMounter := &mount.SafeFormatAndMount{Interface: ns.mounter, Exec: utilexec.New()}

// rbd images are thin-provisioned and return zeros for unwritten areas. A freshly created

// image will not benefit from discard and we also want to avoid as much unnecessary zeroing

// as possible. Open-code mkfs here because FormatAndMount() doesn't accept custom mkfs

// options.

//

// Note that "freshly" is very important here. While discard is more of a nice to have,

// lazy_journal_init=1 is plain unsafe if the image has been written to before and hasn't

// been zeroed afterwards (unlike the name suggests, it leaves the journal completely

// uninitialized and carries a risk until the journal is overwritten and wraps around for

// the first time).

existingFormat, err := diskMounter.GetDiskFormat(devicePath)

if err != nil {

klog.Errorf(util.Log(ctx, "failed to get disk format for path %s, error: %v"), devicePath, err)

return readOnly, err

}

opt := []string{"_netdev"}

opt = csicommon.ConstructMountOptions(opt, req.GetVolumeCapability())

isBlock := req.GetVolumeCapability().GetBlock() != nil

rOnly := "ro"

if req.VolumeCapability.AccessMode.Mode == csi.VolumeCapability_AccessMode_MULTI_NODE_READER_ONLY ||

req.VolumeCapability.AccessMode.Mode == csi.VolumeCapability_AccessMode_SINGLE_NODE_READER_ONLY {

if !csicommon.MountOptionContains(opt, rOnly) {

opt = append(opt, rOnly)

}

}

if csicommon.MountOptionContains(opt, rOnly) {

readOnly = true

}

if fsType == "xfs" {

opt = append(opt, "nouuid")

}

if existingFormat == "" && !staticVol && !readOnly {

args := []string{}

if fsType == "ext4" {

args = []string{"-m0", "-Enodiscard,lazy_itable_init=1,lazy_journal_init=1", devicePath}

} else if fsType == "xfs" {

args = []string{"-K", devicePath}

// always disable reflink

// TODO: make enabling an option, see ceph/ceph-csi#1256

if ns.xfsSupportsReflink() {

args = append(args, "-m", "reflink=0")

}

}

if len(args) > 0 {

cmdOut, cmdErr := diskMounter.Exec.Command("mkfs."+fsType, args...).CombinedOutput()

if cmdErr != nil {

klog.Errorf(util.Log(ctx, "failed to run mkfs error: %v, output: %v"), cmdErr, string(cmdOut))

return readOnly, cmdErr

}

}

}

if isBlock {

opt = append(opt, "bind")

err = diskMounter.Mount(devicePath, stagingPath, fsType, opt)

} else {

err = diskMounter.FormatAndMount(devicePath, stagingPath, fsType, opt)

}

if err != nil {

klog.Errorf(util.Log(ctx,

"failed to mount device path (%s) to staging path (%s) for volume "+

"(%s) error: %s Check dmesg logs if required."),

devicePath,

stagingPath,

req.GetVolumeId(),

err)

}

return readOnly, err

}

Example ceph-csi component log

Operation: NodeStageVolume

Source: DaemonSet csi-rbdplugin, container csi-rbdplugin

I0828 06:25:07.604431 3316053 utils.go:159] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC call: /csi.v1.Node/NodeStageVolume

I0828 06:25:07.607979 3316053 utils.go:160] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC request: {"secrets":"***stripped***","staging_target_path":"/home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount","volume_capability":{"AccessType":{"Mount":{"fs_type":"ext4","mount_flags":["discard"]}},"access_mode":{"mode":1}},"volume_context":{"clusterID":"0bba3be9-0a1c-41db-a619-26ffea20161e","imageFeatures":"layering","imageName":"csi-vol-1699e662-e83f-11ea-8e79-246e96907f74","journalPool":"kubernetes","pool":"kubernetes","storage.kubernetes.io/csiProvisionerIdentity":"1598236777786-8081-rbd.csi.ceph.com"},"volume_id":"0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74"}

I0828 06:25:07.608239 3316053 rbd_util.go:722] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 setting disableInUseChecks on rbd volume to: false

I0828 06:25:07.610528 3316053 omap.go:74] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 got omap values: (pool="kubernetes", namespace="", name="csi.volume.1699e662-e83f-11ea-8e79-246e96907f74"): map[csi.imageid:e583b827ec63 csi.imagename:csi-vol-1699e662-e83f-11ea-8e79-246e96907f74 csi.volname:pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893]

E0828 06:25:07.610765 3316053 util.go:236] kernel 4.19.0-8-amd64 does not support required features

I0828 06:25:07.786825 3316053 cephcmds.go:60] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 command succeeded: rbd [device list --format=json --device-type krbd]

I0828 06:25:07.832097 3316053 rbd_attach.go:208] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 rbd: map mon 10.248.32.13:6789,10.248.32.14:6789,10.248.32.15:6789

I0828 06:25:07.926180 3316053 cephcmds.go:60] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 command succeeded: rbd [--id kubernetes -m 10.248.32.13:6789,10.248.32.14:6789,10.248.32.15:6789 --keyfile=***stripped*** map kubernetes/csi-vol-1699e662-e83f-11ea-8e79-246e96907f74 --device-type krbd]

I0828 06:25:07.926221 3316053 nodeserver.go:291] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 rbd image: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74/kubernetes was successfully mapped at /dev/rbd0

I0828 06:25:08.157777 3316053 nodeserver.go:230] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 rbd: successfully mounted volume 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 to stagingTargetPath /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74

I0828 06:25:08.158588 3316053 utils.go:165] ID: 12008 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC response: {}

(5)NodePublishVolume

Overview

Mounts the staging path prepared by NodeStageVolume to the target path.

NodeStageVolume maps the RBD image to the node as a device, and then mounts the device to a staging path.

NodePublishVolume mounts the stagingpath to the target path.

stagingPath example: /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74

targetPath example: /home/cld/kubernetes/lib/kubelet/pods/c14de522-0679-44b6-af8b-e1ba08b5b004/volumes/kubernetes.io~csi/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/mount

NodePublishVolume

Main process:

(1) Check request parameters;

(2) Check whether the target path exists. If it does not exist, create it;

(3) Mount staging path to target path.

//ceph-csi/internal/rbd/nodeserver.go

func (ns *NodeServer) NodePublishVolume(ctx context.Context, req *csi.NodePublishVolumeRequest) (*csi.NodePublishVolumeResponse, error) {

err := util.ValidateNodePublishVolumeRequest(req)

if err != nil {

return nil, err

}

targetPath := req.GetTargetPath()

isBlock := req.GetVolumeCapability().GetBlock() != nil

stagingPath := req.GetStagingTargetPath()

volID := req.GetVolumeId()

stagingPath += "/" + volID

if acquired := ns.VolumeLocks.TryAcquire(volID); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), volID)

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, volID)

}

defer ns.VolumeLocks.Release(volID)

// Check if that target path exists properly

notMnt, err := ns.createTargetMountPath(ctx, targetPath, isBlock)

if err != nil {

return nil, err

}

if !notMnt {

return &csi.NodePublishVolumeResponse{}, nil

}

// Publish Path

err = ns.mountVolume(ctx, stagingPath, req)

if err != nil {

return nil, err

}

util.DebugLog(ctx, "rbd: successfully mounted stagingPath %s to targetPath %s", stagingPath, targetPath)

return &csi.NodePublishVolumeResponse{}, nil

}

1.ValidateNodePublishVolumeRequest

ValidateNodePublishVolumeRequest verifies the request parameters: volume capability, volume ID, target path, and staging target path must not be empty.

//ceph-csi/internal/util/validate.go

func ValidateNodePublishVolumeRequest(req *csi.NodePublishVolumeRequest) error {

if req.GetVolumeCapability() == nil {

return status.Error(codes.InvalidArgument, "volume capability missing in request")

}

if req.GetVolumeId() == "" {

return status.Error(codes.InvalidArgument, "volume ID missing in request")

}

if req.GetTargetPath() == "" {

return status.Error(codes.InvalidArgument, "target path missing in request")

}

if req.GetStagingTargetPath() == "" {

return status.Error(codes.InvalidArgument, "staging target path missing in request")

}

return nil

}

2.createTargetMountPath

createTargetMountPath checks whether the mount path exists and creates it if it does not: a file when volumeMode is block, a directory otherwise.

//ceph-csi/internal/rbd/nodeserver.go

func (ns *NodeServer) createTargetMountPath(ctx context.Context, mountPath string, isBlock bool) (bool, error) {

// Check if that mount path exists properly

notMnt, err := mount.IsNotMountPoint(ns.mounter, mountPath)

if err != nil {

if os.IsNotExist(err) {

if isBlock {

// #nosec

pathFile, e := os.OpenFile(mountPath, os.O_CREATE|os.O_RDWR, 0750)

if e != nil {

util.DebugLog(ctx, "Failed to create mountPath:%s with error: %v", mountPath, err)

return notMnt, status.Error(codes.Internal, e.Error())

}

if err = pathFile.Close(); err != nil {

util.DebugLog(ctx, "Failed to close mountPath:%s with error: %v", mountPath, err)

return notMnt, status.Error(codes.Internal, err.Error())

}

} else {

// Create a directory

if err = util.CreateMountPoint(mountPath); err != nil {

return notMnt, status.Error(codes.Internal, err.Error())

}

}

notMnt = true

} else {

return false, status.Error(codes.Internal, err.Error())

}

}

return notMnt, err

}

3.mountVolume

mountVolume assembles the mount options and bind-mounts the staging path to the target path.

//ceph-csi/internal/rbd/nodeserver.go

func (ns *NodeServer) mountVolume(ctx context.Context, stagingPath string, req *csi.NodePublishVolumeRequest) error {

// Publish Path

fsType := req.GetVolumeCapability().GetMount().GetFsType()

readOnly := req.GetReadonly()

mountOptions := []string{"bind", "_netdev"}

isBlock := req.GetVolumeCapability().GetBlock() != nil

targetPath := req.GetTargetPath()

mountOptions = csicommon.ConstructMountOptions(mountOptions, req.GetVolumeCapability())

util.DebugLog(ctx, "target %v\nisBlock %v\nfstype %v\nstagingPath %v\nreadonly %v\nmountflags %v\n",

targetPath, isBlock, fsType, stagingPath, readOnly, mountOptions)

if readOnly {

mountOptions = append(mountOptions, "ro")

}

if err := util.Mount(stagingPath, targetPath, fsType, mountOptions); err != nil {

return status.Error(codes.Internal, err.Error())

}

return nil

}

Example ceph-csi component log

Operation: NodePublishVolume

Source: DaemonSet csi-rbdplugin, container csi-rbdplugin

I0828 06:25:08.172901 3316053 utils.go:159] ID: 12010 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC call: /csi.v1.Node/NodePublishVolume

I0828 06:25:08.176683 3316053 utils.go:160] ID: 12010 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC request: {"staging_target_path":"/home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount","target_path":"/home/cld/kubernetes/lib/kubelet/pods/c14de522-0679-44b6-af8b-e1ba08b5b004/volumes/kubernetes.io~csi/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"ext4","mount_flags":["discard"]}},"access_mode":{"mode":1}},"volume_context":{"clusterID":"0bba3be9-0a1c-41db-a619-26ffea20161e","imageFeatures":"layering","imageName":"csi-vol-1699e662-e83f-11ea-8e79-246e96907f74","journalPool":"kubernetes","pool":"kubernetes","storage.kubernetes.io/csiProvisionerIdentity":"1598236777786-8081-rbd.csi.ceph.com"},"volume_id":"0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74"}

I0828 06:25:08.177363 3316053 nodeserver.go:518] ID: 12010 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 target /home/cld/kubernetes/lib/kubelet/pods/c14de522-0679-44b6-af8b-e1ba08b5b004/volumes/kubernetes.io~csi/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/mount

isBlock false

fstype ext4

stagingPath /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74

readonly false

mountflags [bind _netdev discard]

I0828 06:25:08.191877 3316053 nodeserver.go:426] ID: 12010 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 rbd: successfully mounted stagingPath /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 to targetPath /home/cld/kubernetes/lib/kubelet/pods/c14de522-0679-44b6-af8b-e1ba08b5b004/volumes/kubernetes.io~csi/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/mount

I0828 06:25:08.192653 3316053 utils.go:165] ID: 12010 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC response: {}

The log shows the parameter values used for the mount:

target /home/cld/kubernetes/lib/kubelet/pods/c14de522-0679-44b6-af8b-e1ba08b5b004/volumes/kubernetes.io~csi/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/mount isBlock false fstype ext4 stagingPath /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 readonly false mountflags [bind _netdev discard]

(6)NodeUnpublishVolume

Brief introduction

Remove the bind mount from stagingPath to targetPath.

NodeUnpublishVolume unmounts the volume from the target path.

NodeUnpublishVolume

Main process:

(1) Check request parameters;

(2) Check whether targetPath is a mount point; if it is not, delete targetPath and return;

(3) Unmount targetPath, which removes the bind mount from stagingPath to targetPath;

(4) Delete the targetPath directory and any subdirectories it contains.

//ceph-csi/internal/rbd/nodeserver.go

func (ns *NodeServer) NodeUnpublishVolume(ctx context.Context, req *csi.NodeUnpublishVolumeRequest) (*csi.NodeUnpublishVolumeResponse, error) {

// (1) Check request parameters;

err := util.ValidateNodeUnpublishVolumeRequest(req)

if err != nil {

return nil, err

}

targetPath := req.GetTargetPath()

volID := req.GetVolumeId()

if acquired := ns.VolumeLocks.TryAcquire(volID); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), volID)

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, volID)

}

defer ns.VolumeLocks.Release(volID)

// (2) Check whether targetPath is a mount point

notMnt, err := mount.IsNotMountPoint(ns.mounter, targetPath)

if err != nil {

if os.IsNotExist(err) {

// targetPath has already been deleted

util.DebugLog(ctx, "targetPath: %s has already been deleted", targetPath)

return &csi.NodeUnpublishVolumeResponse{}, nil

}

return nil, status.Error(codes.NotFound, err.Error())

}

if notMnt {

if err = os.RemoveAll(targetPath); err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

return &csi.NodeUnpublishVolumeResponse{}, nil

}

// (3) Unmount targetPath;

if err = ns.mounter.Unmount(targetPath); err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

// (4) Delete the targetPath directory and any subdirectories it contains.

if err = os.RemoveAll(targetPath); err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

util.DebugLog(ctx, "rbd: successfully unbound volume %s from %s", req.GetVolumeId(), targetPath)

return &csi.NodeUnpublishVolumeResponse{}, nil

}
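Both NodeUnpublishVolume and NodeUnstageVolume guard their work with `ns.VolumeLocks.TryAcquire(volID)` and return `codes.Aborted` when the lock is already held. The following is a minimal sketch of how such a non-blocking per-volume lock could look. The `volumeLocks` type here is a simplified stand-in written for illustration; the real implementation lives in ceph-csi's util package and may differ in detail.

```go
package main

import (
	"fmt"
	"sync"
)

// volumeLocks is a simplified, illustrative per-volume lock set.
// It never blocks: a second caller for the same volume is rejected.
type volumeLocks struct {
	mu    sync.Mutex
	inUse map[string]struct{}
}

func newVolumeLocks() *volumeLocks {
	return &volumeLocks{inUse: make(map[string]struct{})}
}

// TryAcquire returns false immediately if volID is already locked.
func (vl *volumeLocks) TryAcquire(volID string) bool {
	vl.mu.Lock()
	defer vl.mu.Unlock()
	if _, busy := vl.inUse[volID]; busy {
		return false
	}
	vl.inUse[volID] = struct{}{}
	return true
}

// Release frees volID so that a later request can acquire it.
func (vl *volumeLocks) Release(volID string) {
	vl.mu.Lock()
	defer vl.mu.Unlock()
	delete(vl.inUse, volID)
}

func main() {
	locks := newVolumeLocks()
	fmt.Println(locks.TryAcquire("vol-1")) // first request takes the lock
	fmt.Println(locks.TryAcquire("vol-1")) // overlapping request is rejected
	locks.Release("vol-1")
	fmt.Println(locks.TryAcquire("vol-1")) // lock is available again
}
```

Rejecting instead of waiting keeps the gRPC handler short-lived and leaves the retry to the caller.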

RemoveAll

Delete the targetPath directory and any subdirectories it contains.

//GO/src/os/path.go

// RemoveAll removes path and any children it contains.

// It removes everything it can but returns the first error

// it encounters. If the path does not exist, RemoveAll

// returns nil (no error).

// If there is an error, it will be of type *PathError.

func RemoveAll(path string) error {

return removeAll(path)

}

Example of CEPH CSI component log

Operation: NodeUnpublishVolume

Source: daemonset: csi-rbdplugin, container: csi-rbdplugin

I0828 07:14:25.117004 3316053 utils.go:159] ID: 12123 GRPC call: /csi.v1.Node/NodeGetVolumeStats

I0828 07:14:25.117825 3316053 utils.go:160] ID: 12123 GRPC request: {"volume_id":"0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74","volume_path":"/home/cld/kubernetes/lib/kubelet/pods/c14de522-0679-44b6-af8b-e1ba08b5b004/volumes/kubernetes.io~csi/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/mount"}

I0828 07:14:25.128161 3316053 utils.go:165] ID: 12123 GRPC response: {"usage":[{"available":1003900928,"total":1023303680,"unit":1,"used":2625536},{"available":65525,"total":65536,"unit":2,"used":11}]}

I0828 07:14:40.863935 3316053 utils.go:159] ID: 12124 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC call: /csi.v1.Node/NodeUnpublishVolume

I0828 07:14:40.864889 3316053 utils.go:160] ID: 12124 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC request: {"target_path":"/home/cld/kubernetes/lib/kubelet/pods/c14de522-0679-44b6-af8b-e1ba08b5b004/volumes/kubernetes.io~csi/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/mount","volume_id":"0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74"}

I0828 07:14:40.908930 3316053 nodeserver.go:601] ID: 12124 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 rbd: successfully unbound volume 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 from /home/cld/kubernetes/lib/kubelet/pods/c14de522-0679-44b6-af8b-e1ba08b5b004/volumes/kubernetes.io~csi/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/mount

I0828 07:14:40.909906 3316053 utils.go:165] ID: 12124 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC response: {}

(7)NodeUnstageVolume

Brief introduction

First unmount the rbd/nbd device from stagingPath, then unmap the rbd/nbd device (that is, remove the mapping between the rbd/nbd device on the node and the ceph rbd image).

NodeUnstageVolume unstages the volume from the staging path.

NodeUnstageVolume

Main process:

(1) Check request parameters;

(2) Check whether stagingTargetPath is a mount point;

(3) Unmount the rbd/nbd device from stagingTargetPath;

(4) Delete stagingTargetPath;

(5) Read the image metadata from the image-meta.json file;

(6) Unmap the rbd/nbd device;

(7) Delete the metadata of the image, i.e. the image-meta.json file.

//ceph-csi/internal/rbd/nodeserver.go

func (ns *NodeServer) NodeUnstageVolume(ctx context.Context, req *csi.NodeUnstageVolumeRequest) (*csi.NodeUnstageVolumeResponse, error) {

// (1) Check request parameters;

var err error

if err = util.ValidateNodeUnstageVolumeRequest(req); err != nil {

return nil, err

}

volID := req.GetVolumeId()

if acquired := ns.VolumeLocks.TryAcquire(volID); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), volID)

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, volID)

}

defer ns.VolumeLocks.Release(volID)

stagingParentPath := req.GetStagingTargetPath()

stagingTargetPath := getStagingTargetPath(req)

// (2) Check whether stagingTargetPath is a mount point;

notMnt, err := mount.IsNotMountPoint(ns.mounter, stagingTargetPath)

if err != nil {

if !os.IsNotExist(err) {

return nil, status.Error(codes.NotFound, err.Error())

}

// Continue on ENOENT errors as we may still have the image mapped

notMnt = true

}

if !notMnt {

// (3) Unmount the rbd/nbd device from stagingTargetPath;

// Unmounting the image

err = ns.mounter.Unmount(stagingTargetPath)

if err != nil {

util.ExtendedLog(ctx, "failed to unmount targetPath: %s with error: %v", stagingTargetPath, err)

return nil, status.Error(codes.Internal, err.Error())

}

}

// (4) Delete stagingTargetPath;

// Example: /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74

if err = os.Remove(stagingTargetPath); err != nil {

// Any error is critical as Staging path is expected to be empty by Kubernetes, it otherwise

// keeps invoking Unstage. Hence any errors removing files within this path is a critical

// error

if !os.IsNotExist(err) {

klog.Errorf(util.Log(ctx, "failed to remove staging target path (%s): (%v)"), stagingTargetPath, err)

return nil, status.Error(codes.Internal, err.Error())

}

}

// (5) Read the image metadata from the image-meta.json file;

imgInfo, err := lookupRBDImageMetadataStash(stagingParentPath)

if err != nil {

util.UsefulLog(ctx, "failed to find image metadata: %v", err)

// It is an error if it was mounted, as we should have found the image metadata file with

// no errors

if !notMnt {

return nil, status.Error(codes.Internal, err.Error())

}

// If not mounted, and error is anything other than metadata file missing, it is an error

if !errors.Is(err, ErrMissingStash) {

return nil, status.Error(codes.Internal, err.Error())

}

// It was not mounted and image metadata is also missing, we are done as the last step in

// in the staging transaction is complete

return &csi.NodeUnstageVolumeResponse{}, nil

}

// (6) Unmap the rbd/nbd device;

// Unmapping rbd device

imageSpec := imgInfo.String()

if err = detachRBDImageOrDeviceSpec(ctx, imageSpec, true, imgInfo.NbdAccess, imgInfo.Encrypted, req.GetVolumeId()); err != nil {

klog.Errorf(util.Log(ctx, "error unmapping volume (%s) from staging path (%s): (%v)"), req.GetVolumeId(), stagingTargetPath, err)

return nil, status.Error(codes.Internal, err.Error())

}

util.DebugLog(ctx, "successfully unmounted volume (%s) from staging path (%s)",

req.GetVolumeId(), stagingTargetPath)

// (7) Delete the metadata of the image, i.e. the image-meta.json file.

if err = cleanupRBDImageMetadataStash(stagingParentPath); err != nil {

klog.Errorf(util.Log(ctx, "failed to cleanup image metadata stash (%v)"), err)

return nil, status.Error(codes.Internal, err.Error())

}

return &csi.NodeUnstageVolumeResponse{}, nil

}

root@cld-dnode3-1091:/home/zhongjialiang# ls /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/

image-meta.json 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74/
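The directory listing matches the two paths used in NodeUnstageVolume: stagingParentPath is the `globalmount` directory from the request, stagingTargetPath is a subdirectory named after the volume ID, and the metadata stash sits next to it. The sketch below reproduces that layout. `stagingLayout` is an illustrative helper, not the ceph-csi function `getStagingTargetPath`, whose exact implementation is not shown in this article.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// stashFileName is the metadata file name used in the article.
const stashFileName = "image-meta.json"

// stagingLayout is an illustrative helper (not ceph-csi code). It derives
// the per-volume staging directory and the metadata stash path from the
// staging_target_path of the request and the volume ID.
func stagingLayout(stagingParentPath, volID string) (stagingTargetPath, stashPath string) {
	stagingTargetPath = filepath.Join(stagingParentPath, volID)
	stashPath = filepath.Join(stagingParentPath, stashFileName)
	return stagingTargetPath, stashPath
}

func main() {
	parent := "/home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount"
	volID := "0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74"

	target, stash := stagingLayout(parent, volID)
	fmt.Println("stagingTargetPath:", target)
	fmt.Println("metadata stash:   ", stash)
}
```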

1.lookupRBDImageMetadataStash

Reads the image metadata from the image-meta.json file.

//ceph-csi/internal/rbd/rbd_util.go

// file name in which image metadata is stashed.

const stashFileName = "image-meta.json"

func lookupRBDImageMetadataStash(path string) (rbdImageMetadataStash, error) {

var imgMeta rbdImageMetadataStash

fPath := filepath.Join(path, stashFileName)

encodedBytes, err := ioutil.ReadFile(fPath) // #nosec - intended reading from fPath

if err != nil {

if !os.IsNotExist(err) {

return imgMeta, fmt.Errorf("failed to read stashed JSON image metadata from path (%s): (%v)", fPath, err)

}

return imgMeta, util.JoinErrors(ErrMissingStash, err)

}

err = json.Unmarshal(encodedBytes, &imgMeta)

if err != nil {

return imgMeta, fmt.Errorf("failed to unmarshall stashed JSON image metadata from path (%s): (%v)", fPath, err)

}

return imgMeta, nil

}

root@cld-dnode3-1091:/home/zhongjialiang# cat /home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/image-meta.json

{"Version":2,"pool":"kubernetes","image":"csi-vol-1699e662-e83f-11ea-8e79-246e96907f74","accessType":false,"encrypted":false}
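As a rough illustration of what the lookup yields, the sketch below decodes the sample file shown above and builds the `pool/image` spec that later appears in the `rbd unmap` log line. The struct is an assumption reconstructed from the JSON keys and from the `NbdAccess` and `Encrypted` fields referenced in NodeUnstageVolume; the actual `rbdImageMetadataStash` definition may contain more fields.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// imageMeta mirrors the keys visible in the image-meta.json sample.
// The Go field names are assumptions made for this example.
type imageMeta struct {
	Version   int    `json:"Version"`
	Pool      string `json:"pool"`
	Image     string `json:"image"`
	NbdAccess bool   `json:"accessType"`
	Encrypted bool   `json:"encrypted"`
}

// spec returns the image spec in "pool/image" form.
func (m imageMeta) spec() string {
	return m.Pool + "/" + m.Image
}

// parseImageMeta decodes the stashed JSON metadata.
func parseImageMeta(data []byte) (imageMeta, error) {
	var m imageMeta
	if err := json.Unmarshal(data, &m); err != nil {
		return m, fmt.Errorf("failed to unmarshal image metadata: %w", err)
	}
	return m, nil
}

func main() {
	raw := []byte(`{"Version":2,"pool":"kubernetes","image":"csi-vol-1699e662-e83f-11ea-8e79-246e96907f74","accessType":false,"encrypted":false}`)
	m, err := parseImageMeta(raw)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("image spec:", m.spec())
	fmt.Println("nbd access:", m.NbdAccess, "encrypted:", m.Encrypted)
}
```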

2.detachRBDImageOrDeviceSpec

Assembles the rbd unmap command and runs it to unmap the rbd/nbd device.

//ceph-csi/internal/rbd/rbd_attach.go

func detachRBDImageOrDeviceSpec(ctx context.Context, imageOrDeviceSpec string, isImageSpec, ndbType, encrypted bool, volumeID string) error {

if encrypted {

mapperFile, mapperPath := util.VolumeMapper(volumeID)

mappedDevice, mapper, err := util.DeviceEncryptionStatus(ctx, mapperPath)

if err != nil {

klog.Errorf(util.Log(ctx, "error determining LUKS device on %s, %s: %s"),

mapperPath, imageOrDeviceSpec, err)

return err

}

if len(mapper) > 0 {

// mapper found, so it is open Luks device

err = util.CloseEncryptedVolume(ctx, mapperFile)

if err != nil {

klog.Errorf(util.Log(ctx, "error closing LUKS device on %s, %s: %s"),

mapperPath, imageOrDeviceSpec, err)

return err

}

imageOrDeviceSpec = mappedDevice

}

}

accessType := accessTypeKRbd

if ndbType {

accessType = accessTypeNbd

}

options := []string{"unmap", "--device-type", accessType, imageOrDeviceSpec}

_, stderr, err := util.ExecCommand(ctx, rbd, options...)

if err != nil {

// Messages for krbd and nbd differ, hence checking either of them for missing mapping

// This is not applicable when a device path is passed in

if isImageSpec &&

(strings.Contains(stderr, fmt.Sprintf(rbdUnmapCmdkRbdMissingMap, imageOrDeviceSpec)) ||

strings.Contains(stderr, fmt.Sprintf(rbdUnmapCmdNbdMissingMap, imageOrDeviceSpec))) {

// Devices found not to be mapped are treated as a successful detach

util.TraceLog(ctx, "image or device spec (%s) not mapped", imageOrDeviceSpec)

return nil

}

return fmt.Errorf("rbd: unmap for spec (%s) failed (%v): (%s)", imageOrDeviceSpec, err, stderr)

}

return nil

}

3.cleanupRBDImageMetadataStash

Deletes the metadata of the image, i.e. the image-meta.json file.

//ceph-csi/internal/rbd/rbd_util.go

func cleanupRBDImageMetadataStash(path string) error {

fPath := filepath.Join(path, stashFileName)

if err := os.Remove(fPath); err != nil {

return fmt.Errorf("failed to cleanup stashed JSON data (%s): (%v)", fPath, err)

}

return nil

}

Example of CEPH CSI component log

Operation: NodeUnstageVolume

Source: daemonset: csi-rbdplugin, container: csi-rbdplugin

I0828 07:14:40.972279 3316053 utils.go:159] ID: 12126 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC call: /csi.v1.Node/NodeUnstageVolume

I0828 07:14:40.973139 3316053 utils.go:160] ID: 12126 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC request: {"staging_target_path":"/home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount","volume_id":"0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74"}

I0828 07:14:41.186119 3316053 cephcmds.go:60] ID: 12126 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 command succeeded: rbd [unmap --device-type krbd kubernetes/csi-vol-1699e662-e83f-11ea-8e79-246e96907f74]

I0828 07:14:41.186171 3316053 nodeserver.go:690] ID: 12126 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 successfully unmounted volume (0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74) from staging path (/home/cld/kubernetes/lib/kubelet/plugins/kubernetes.io/csi/pv/pvc-14ee5002-9d60-4ba3-a1d2-cc3800ee0893/globalmount/0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74)

I0828 07:14:41.187119 3316053 utils.go:165] ID: 12126 Req-ID: 0001-0024-0bba3be9-0a1c-41db-a619-26ffea20161e-0000000000000004-1699e662-e83f-11ea-8e79-246e96907f74 GRPC response: {}

So far, the analysis of RBD driver nodeserver has been completed. Here is a summary.

RBD driver nodeserver analysis summary

(1) nodeserver mainly includes the NodeGetCapabilities, NodeGetVolumeStats, NodeStageVolume, NodePublishVolume, NodeUnpublishVolume, NodeUnstageVolume and NodeExpandVolume methods. Their functions are as follows:

NodeGetCapabilities: obtain the capability of CEPH CSI driver.

NodeGetVolumeStats: probe the state of the mounted storage and return the relevant metrics of the storage to kubelet.

NodeExpandVolume: perform the node-side operations needed to propagate a storage expansion to the node.

NodeStageVolume: Map RBD image to rbd/nbd device on node, and mount it to staging path after formatting.

NodePublishVolume: bind-mount the staging path created by NodeStageVolume to the target path.

NodeUnpublishVolume: remove the bind mount from stagingPath to targetPath.

NodeUnstageVolume: first unmount the rbd/nbd device from stagingPath, then unmap the rbd/nbd device (that is, remove the mapping between the rbd/nbd device on the node and the ceph rbd image).

(2) Before kubelet calls NodeExpandVolume, NodeStageVolume, NodeUnstageVolume and similar methods, it first calls NodeGetCapabilities to find out whether the CEPH CSI driver supports them.

(3) kubelet periodically calls NodeGetVolumeStats to obtain volume metrics.

(4) Storage expansion takes two steps. The first is the CSI ControllerExpandVolume call, which expands the underlying storage. The second is the CSI NodeExpandVolume call: when volumeMode is Filesystem, it propagates the new size of the underlying rbd image to the rbd/nbd device and grows the xfs/ext file system; when volumeMode is Block, no node-side expansion is needed.

(5) When an rbd image is mounted into multiple pods on the same node, NodeStageVolume is called only once while NodePublishVolume is called once per pod, so there is a single staging path and multiple target paths. In other words, the staging path corresponds to the rbd image and each target path corresponds to a pod. Unmounting works the same way: NodeUnstageVolume is called only after all pods using the rbd image on that node have been deleted.
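The relationship in point (5) can be modelled as simple bookkeeping: one staged entry per volume and a set of target paths per volume. The toy model below is only a sketch of that idea. The `node` type and its methods are invented for this example and do not correspond to kubelet or ceph-csi code.

```go
package main

import (
	"errors"
	"fmt"
)

// node is a toy model of the per-node mount state.
type node struct {
	staged    map[string]bool            // volume ID -> staging path exists
	published map[string]map[string]bool // volume ID -> set of target paths
}

func newNode() *node {
	return &node{
		staged:    make(map[string]bool),
		published: make(map[string]map[string]bool),
	}
}

// publish records a target path for volID. It reports whether the volume
// had to be staged first, which happens only for the first pod.
func (n *node) publish(volID, target string) (stagedNow bool) {
	if !n.staged[volID] {
		n.staged[volID] = true
		n.published[volID] = make(map[string]bool)
		stagedNow = true
	}
	n.published[volID][target] = true
	return stagedNow
}

// unpublish removes a target path. It reports whether the volume was
// unstaged as well, which happens only when the last target is gone.
func (n *node) unpublish(volID, target string) (unstagedNow bool, err error) {
	targets, ok := n.published[volID]
	if !ok || !targets[target] {
		return false, errors.New("target path is not published")
	}
	delete(targets, target)
	if len(targets) > 0 {
		return false, nil
	}
	delete(n.published, volID)
	delete(n.staged, volID)
	return true, nil
}

func main() {
	n := newNode()
	fmt.Println("pod-a staged:", n.publish("vol-1", "/pods/a/mount"))
	fmt.Println("pod-b staged:", n.publish("vol-1", "/pods/b/mount"))
	unstaged, _ := n.unpublish("vol-1", "/pods/a/mount")
	fmt.Println("after pod-a removed, unstaged:", unstaged)
	unstaged, _ = n.unpublish("vol-1", "/pods/b/mount")
	fmt.Println("after pod-b removed, unstaged:", unstaged)
}
```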