Original link🔗: https://www.jianshu.com/p/72b0c9bd3967

In the early days, clickhouse only supported a single storage device. After version 19.15, data can be saved in different storage devices, and data can be automatically moved between different devices. So that clickhouse can realize stepped multi-layer storage, that is, the cold and hot data are separated and stored in different types of storage devices.

In daily interactive queries, 95% of queries access data in recent days, and the remaining 5% run some long-term batch tasks. Through stepped multi-layer storage, we can put the latest hot data on high-performance media, such as SSD, and the old historical data on cheap mechanical hard disk. In addition, by storing data in multiple storage devices to expand the storage capacity of the server, clickhouse can also automatically move data between different storage devices.

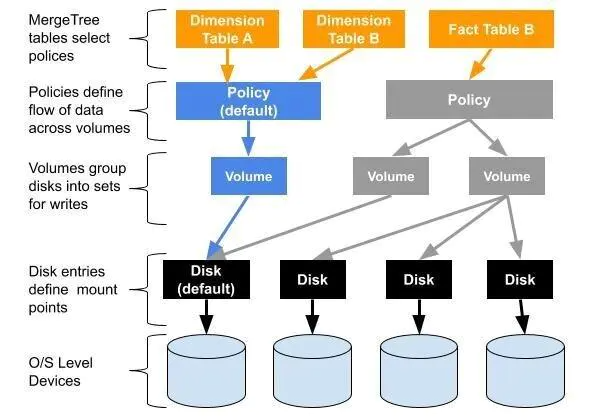

Each MergeTree table has a storage policy to specify how the table data is written; Policies divide different disks into one or more volumes, and specify the write order of data and how to move data between disks.

If not specified, each table has a default storage policy default, which stores the data in the path specified in path in the configuration file.

In the initial state, the data storage directory specified in the clickhouse configuration file is:

/home/work/bigdata/clickhouse/data/

Start the client and view the disk directory perceived by the current clickhouse:

SELECT

name,

path,

formatReadableSize(free_space) AS free,

formatReadableSize(total_space) AS total,

formatReadableSize(keep_free_space) AS reserved

FROM system.disks

┌─name────┬─path────────────────────────────────┬─free─────┬─total────┬─reserved─┐

│ default │ /home/work/bigdata/clickhouse/data/ │ 1.16 TiB │ 3.52 TiB │ 0.00 B │

└─────────┴─────────────────────────────────────┴──────────┴──────────┴──────────┘

1 rows in set. Elapsed: 0.040 sec.Additional disks mounted on the server:

$ lsblk NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT sdb 8:16 0 3.7T 0 disk `-sdb1 8:17 0 3.7T 0 part /home/disk0 sdc 8:32 0 3.7T 0 disk `-sdc1 8:33 0 3.7T 0 part /home/disk1 sdd 8:48 0 3.7T 0 disk `-sdd1 8:49 0 3.7T 0 part /home/disk2 sde 8:64 0 3.7T 0 disk `-sde1 8:65 0 3.7T 0 part /home/disk3 sdf 8:80 0 3.7T 0 disk `-sdf1 8:81 0 3.7T 0 part /home/disk4 sdg 8:96 0 3.7T 0 disk `-sdg1 8:97 0 3.7T 0 part /home/disk5 sdh 8:112 0 3.7T 0 disk `-sdh1 8:113 0 3.7T 0 part /home/disk6

Create a corresponding directory for storing clickhouse data in each disk, and modify the directory owner to click house user

mkdir -p /home/disk0/clickhouse/ chown -R clickhouse.clickhouse /home/disk0/clickhouse/

Modify the service configuration file / server / clickhouse.etc/clickhouse XML add the above disks

<yandex>

<storage_configuration>

<disks>

<disk_name_0> <!-- disk name -->

<path>/home/disk0/clickhouse/</path>

</disk_name_0>

<disk_name_1>

<path>/home/disk1/clickhouse/</path>

</disk_name_1>

<disk_name_2>

<path>/home/disk2/clickhouse/</path>

</disk_name_2>

<disk_name_3>

<path>/home/disk3/clickhouse/</path>

</disk_name_3>

<disk_name_4>

<path>/home/disk4/clickhouse/</path>

</disk_name_4>

<disk_name_5>

<path>/home/disk5/clickhouse/</path>

</disk_name_5>

<disk_name_6>

<path>/home/disk6/clickhouse/</path>

</disk_name_6>

</disks>

</storage_configuration>

</yandex>restart clickhouse service

service clickhouse-server stop service clickhouse-server start

At this point, check the disk directory perceived by clickhouse

SELECT

name,

path,

formatReadableSize(free_space) AS free,

formatReadableSize(total_space) AS total,

formatReadableSize(keep_free_space) AS reserved

FROM system.disks

┌─name────────┬─path────────────────────────────────┬─free─────┬─total────┬─reserved─┐

│ default │ /home/work/bigdata/clickhouse/data/ │ 1.34 TiB │ 3.52 TiB │ 0.00 B │

│ disk_name_0 │ /home/disk0/clickhouse/ │ 3.58 TiB │ 3.58 TiB │ 0.00 B │

│ disk_name_1 │ /home/disk1/clickhouse/ │ 3.58 TiB │ 3.58 TiB │ 0.00 B │

│ disk_name_2 │ /home/disk2/clickhouse/ │ 3.58 TiB │ 3.58 TiB │ 0.00 B │

│ disk_name_3 │ /home/disk3/clickhouse/ │ 3.58 TiB │ 3.58 TiB │ 0.00 B │

│ disk_name_4 │ /home/disk4/clickhouse/ │ 3.58 TiB │ 3.58 TiB │ 0.00 B │

│ disk_name_5 │ /home/disk5/clickhouse/ │ 3.58 TiB │ 3.58 TiB │ 0.00 B │

│ disk_name_6 │ /home/disk6/clickhouse/ │ 3.58 TiB │ 3.58 TiB │ 0.00 B │

└─────────────┴─────────────────────────────────────┴──────────┴──────────┴──────────┘You can see that in addition to the original default path, we have added our newly mounted disk directory.

Through the above operations, multiple disks are configured for clickhouse, but only these can not make the data in the table exist in the configured multiple disks. You can observe through experiments:

CREATE TABLE sample1

(

`id` UInt64

)

ENGINE = MergeTree

ORDER BY id

Ok.

0 rows in set. Elapsed: 2.467 sec.

INSERT INTO sample1 SELECT *

FROM numbers(1000000)

← Progress: 1.05 million rows, 8.39 MB (10.84 million rows/s., 86.74 MB/s.) 99%Ok.

0 rows in set. Elapsed: 0.097 sec. Processed 1.05 million rows, 8.39 MB (10.84 million rows/s., 86.70 MB/s.)

SELECT

name,

data_paths

FROM system.tables

WHERE name = 'sample1'

┌─name────┬─data_paths───────────────────────────────────────────────────┐

│ sample1 │ ['/home/work/bigdata/clickhouse/data/data/default/sample1/'] │

└─────────┴──────────────────────────────────────────────────────────────┘

SELECT

name,

disk_name,

path

FROM system.parts

WHERE (table = 'sample1') AND active

┌─name──────┬─disk_name─┬─path───────────────────────────────────────────────────────────────┐

│ all_1_1_0 │ default │ /home/work/bigdata/clickhouse/data/data/default/sample1/all_1_1_0/ │

└───────────┴───────────┴────────────────────────────────────────────────────────────────────┘You can see that the table data is still only saved in one directory, because if it is not specified, clickhouse The table has a default single storage policy default:

SELECT

policy_name,

volume_name,

disks

FROM system.storage_policies

┌─policy_name─┬─volume_name─┬─disks───────┐

│ default │ default │ ['default'] │

└─────────────┴─────────────┴─────────────┘In order for multiple disks to take effect, there are still two tasks:

- Formulate storage policies in the configuration file and organize multiple disks through volume labels

- When creating a table, use SETTINGS storage_policy = '' to specify the storage policy for the table

JBOD ("Just a Bunch of Disks"), by allocating multiple disks to a volume, the data part s generated by each data insertion will be written to these disks in turn in the form of polling. The advantages of this strategy are as follows:

- The storage capacity can be directly expanded by adding disks

- When multithreading accesses multiple different disks in parallel, it can improve the reading and writing speed

- Since there are fewer data parts on each disk, the loading speed of the table can be accelerated

Add the following storage policy configuration to the configuration file and restart the clickhouse service.

<policies>

<policy_name_1>

<volumes>

<volume_name_0>

<disk>disk_name_0</disk>

<disk>disk_name_1</disk>

<disk>disk_name_2</disk>

<disk>disk_name_3</disk>

<disk>disk_name_4</disk>

<disk>disk_name_5</disk>

<disk>disk_name_6</disk>

</volume_name_6>

</volumes>

</policy_name_1>

</policies>You can see that a storage policy with multiple disks has been added at this time

SELECT policy_name,

volume_name,

disks

FROM system.storage_policies

┌─policy_name───┬─volume_name───┬─disks───────────────────────────────────────────────────────────────────────────────────────────────┐

│ default │ default │ ['default'] │

│ policy_name_1 │ volume_name_0 │ ['disk_name_0','disk_name_1','disk_name_2','disk_name_3','disk_name_4','disk_name_5','disk_name_6'] │

└───────────────┴───────────────┴─────────────────────────────────────────────────────────────────────────────────────────────────────┘Create a new table and test it

CREATE TABLE sample3 (id UInt64)

Engine=MergeTree

ORDER BY id

SETTINGS storage_policy = 'policy_name_1';

SELECT

name,

data_paths,

metadata_path,

storage_policy

FROM system.tables

WHERE name = 'sample3';

┌─name────┬─data_paths─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┬─metadata_path───────────────────────────────────────────────────┬─storage_policy─┐

│ sample3 │ ['/home/disk0/clickhouse/data/default/sample3/','/home/disk1/clickhouse/data/default/sample3/','/home/disk2/clickhouse/data/default/sample3/','/home/disk3/clickhouse/data/default/sample3/','/home/disk4/clickhouse/data/default/sample3/','/home/disk5/clickhouse/data/default/sample3/','/home/disk6/clickhouse/data/default/sample3/'] │ /home/work/bigdata/clickhouse/data/metadata/default/sample3.sql │ policy_name_1 │

└─────────┴────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┴─────────────────────────────────────────────────────────────────┴────────────────┘

insert into sample3 select * from numbers(1000000);

insert into sample3 select * from numbers(1000000);

insert into sample3 select * from numbers(1000000);

insert into sample3 select * from numbers(1000000);

select name, disk_name, path from system.parts where table = 'sample3';

┌─name──────┬─disk_name───┬─path───────────────────────────────────────────────────┐

│ all_1_1_0 │ disk_name_0 │ /home/disk0/clickhouse/data/default/sample3/all_1_1_0/ │

│ all_2_2_0 │ disk_name_1 │ /home/disk1/clickhouse/data/default/sample3/all_2_2_0/ │

│ all_3_3_0 │ disk_name_2 │ /home/disk2/clickhouse/data/default/sample3/all_3_3_0/ │

│ all_4_4_0 │ disk_name_3 │ /home/disk3/clickhouse/data/default/sample3/all_4_4_0/ │

└───────────┴─────────────┴────────────────────────────────────────────────────────┘The multi disk storage of the table has taken effect, and the inserted test data are written to multiple disks in turn,

Note that the metadata still exists in the default disk directory. The background merge task will merge these small tasks regularly

data parts And generate larger data parts,The same polling method is used to write to these disks.

You can manually trigger the execution of the merge task:

OPTIMIZE TABLE sample3

Ok. 0 rows in set. Elapsed: 0.148 sec. Processed: 0 rows, 0.0B (0 rows/s, 0.0B/s)

SELECT

name,

disk_name,

path

FROM system.parts

WHERE (table = 'sample3') AND active;

┌─name──────┬─disk_name───┬─path───────────────────────────────────────────────────┐

│ all_1_4_1 │ disk_name_4 │ /home/disk4/clickhouse/data/default/sample3/all_1_4_1/ │

└───────────┴─────────────┴────────────────────────────────────────────────────────┘