1. Preparation

- Start the Hadoop cluster

[amelia@hadoop102 hadoop-2.7.2]$ sbin/start-dfs.sh

- -help: print the usage and parameters of a command

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -help rm

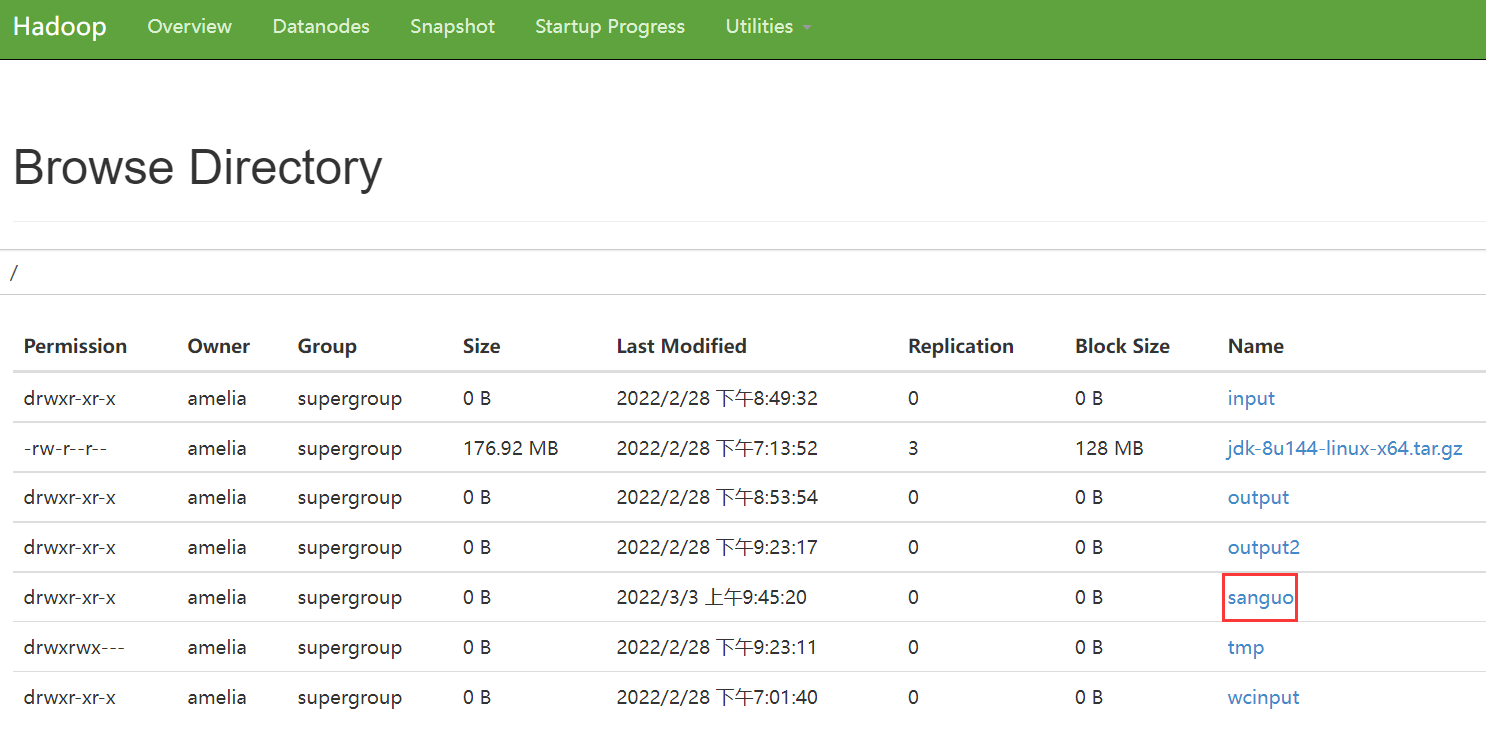

- Create the /sanguo folder

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -mkdir /sanguo

Check that the /sanguo folder now exists in HDFS.
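
One way to script this check is the -test -d option of hadoop fs, which returns exit status 0 when the path is a directory. The following is only a minimal sketch and not part of the original steps: the function name hdfs_dir_exists is made up for illustration, and the call is skipped when no hadoop client is on the PATH.

```shell
# Sketch: report whether an HDFS directory exists.
# `hadoop fs -test -d PATH` exits with status 0 when PATH is a directory.
hdfs_dir_exists() {
  hadoop fs -test -d "$1"
}

# Only contact the cluster when the hadoop client is actually installed.
if command -v hadoop >/dev/null 2>&1; then
  if hdfs_dir_exists /sanguo; then
    echo "/sanguo exists"
  else
    echo "/sanguo is missing"
  fi
fi
```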

2. Upload

- -moveFromLocal: move (cut and paste) a file from the local file system to HDFS

[amelia@hadoop102 hadoop-2.7.2]$ vim shuguo.txt

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -moveFromLocal ./shuguo.txt /sanguo

After the operation completes, the file has been moved into the /sanguo folder and no longer exists locally.

- -copyFromLocal: copy a file from the local file system to an HDFS path

[amelia@hadoop102 hadoop-2.7.2]$ vim weiguo.txt

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -copyFromLocal ./weiguo.txt /sanguo

After the operation completes, the file has been copied to the /sanguo folder.

- -put: equivalent to -copyFromLocal; put is the more common choice in production environments

[amelia@hadoop102 hadoop-2.7.2]$ vim wuguo.txt

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -put ./wuguo.txt /sanguo

After the operation completes, the file has been copied to the /sanguo folder.

- -appendToFile: append a local file to the end of an existing HDFS file

[amelia@hadoop102 hadoop-2.7.2]$ vim liubei.txt

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -appendToFile liubei.txt /sanguo/shuguo.txt

After the operation completes, the content of liubei.txt has been appended to /sanguo/shuguo.txt.

3. Download

- -copyToLocal: copy from HDFS to local

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -copyToLocal /sanguo/shuguo.txt ./

After the operation completes, the file has been copied to the current local directory (hadoop-2.7.2).

- -get: equivalent to -copyToLocal; get is the more common choice in production environments

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -get /sanguo/shuguo.txt ./shuguo2.txt

After the operation completes, the file has been copied to the hadoop-2.7.2 directory under the name shuguo2.txt.

4. Direct HDFS operations

- -ls: display directory information

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -ls /sanguo

- -cat: display the contents of a file

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -cat /sanguo/shuguo.txt

- -chgrp, -chmod, -chown: change the group, permissions, or owner of a file, with the same usage as in the Linux file system

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -chown amelia:amelia /sanguo/shuguo.txt
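
The three commands can also be combined in a small helper. This is only a sketch under assumptions: hdfs_set_access is a hypothetical name, and the owner, group, and mode in the usage comment are example values rather than settings taken from this cluster.

```shell
# Sketch: set owner, group and mode of one HDFS path in a single call.
# $1 = HDFS path, $2 = owner, $3 = group, $4 = octal mode (for example 644)
hdfs_set_access() {
  hadoop fs -chown "$2" "$1" &&
  hadoop fs -chgrp "$3" "$1" &&
  hadoop fs -chmod "$4" "$1"
}

# Example call (assumed values):
#   hdfs_set_access /sanguo/shuguo.txt amelia amelia 644
```

The calls are chained with && so that a failure in one step stops the remaining steps.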

- -mkdir: create a directory

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -mkdir /jinguo

- -cp: copy from one path of HDFS to another path of HDFS

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -cp /sanguo/shuguo.txt /jinguo

- -mv: move files from one HDFS directory to another

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -mv /sanguo/weiguo.txt /jinguo

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -mv /sanguo/wuguo.txt /jinguo

- -tail: display the last 1 KB of a file

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -tail /jinguo/shuguo.txt

- -rm: delete a file (a folder requires -r)

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -rm /sanguo/shuguo.txt

- -rm -r: recursively delete the directory and its contents

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -rm -r /sanguo

Note: be careful when using the delete commands!
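
One way to reduce the risk is to wrap the recursive delete in a guard. The sketch below is an illustration only: hdfs_safe_rm_r is a hypothetical helper, and the list of refused targets (an empty path and the root directory) is an assumption that would need extending for a real cluster.

```shell
# Sketch: refuse obviously dangerous targets before calling -rm -r.
hdfs_safe_rm_r() {
  case "$1" in
    ""|"/")
      # An empty argument or the HDFS root is never deleted.
      echo "refusing to delete '$1'" >&2
      return 1
      ;;
  esac
  hadoop fs -rm -r "$1"
}

# Example call:
#   hdfs_safe_rm_r /sanguo
```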

- -du: show the size of a folder and of the files in it

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -du -s -h /jinguo

27 /jinguo

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -du -h /jinguo

14 /jinguo/shuguo.txt

7 /jinguo/weiguo.txt

6 /jinguo/wuguo.txt
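
The summary figure from -du -s is the sum of the per-file sizes. As a quick sanity check, the sketch below adds up the three sizes shown in the output above with awk. The helper name sum_sizes is made up for illustration, and the approach assumes plain byte counts in the first column (it would not handle suffixes such as K or M that -h prints for larger files).

```shell
# Per-file sizes as printed by `hadoop fs -du -h /jinguo` above (bytes, path).
du_listing='14 /jinguo/shuguo.txt
7 /jinguo/weiguo.txt
6 /jinguo/wuguo.txt'

# Add up the first column of each line.
sum_sizes() {
  awk '{ total += $1 } END { print total + 0 }'
}

printf '%s\n' "$du_listing" | sum_sizes   # prints 27, matching -du -s
```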

- -setrep: set the replication factor (number of replicas) of a file in HDFS

[amelia@hadoop102 hadoop-2.7.2]$ hadoop fs -setrep 10 /jinguo/shuguo.txt

Replication 10 set: /jinguo/shuguo.txt

The replication factor set here is only recorded in the NameNode's metadata. Whether that many replicas actually exist depends on the number of DataNodes. The cluster currently has only three nodes, so there can be at most three replicas. The replica count can reach 10 only once the cluster grows to 10 nodes.
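
Put as arithmetic, the number of replicas that can actually exist is the smaller of the requested replication factor and the number of DataNodes. The following sketch only illustrates that rule; effective_replicas is a hypothetical helper and does not query the cluster.

```shell
# Sketch: replicas that can actually be placed = min(requested, DataNodes),
# because each DataNode holds at most one replica of a given block.
effective_replicas() {
  requested=$1
  datanodes=$2
  if [ "$requested" -lt "$datanodes" ]; then
    echo "$requested"
  else
    echo "$datanodes"
  fi
}

effective_replicas 10 3    # prints 3  (the three-node cluster used here)
effective_replicas 10 10   # prints 10 (after growing to ten nodes)
```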