RAID can greatly improve disk performance and reliability, so it is well worth mastering. This article introduces the common RAID levels and shows how to create each of them as software RAID on Linux.

mdadm

- Creating a software RAID array

mdadm -C -v /dev/ARRAY -l LEVEL -n DISK_COUNT DISKS [-x SPARE_COUNT SPARE_DISKS]

- -C: create a new array (--create)

- -v: display details (--verbose)

- -l: set the RAID level (--level=)

- -n: number of active devices in the array (--raid-devices=)

- -x: number of spare devices in the initial array (--spare-devices=); a hot spare automatically takes over when an active disk fails

- Viewing details

mdadm -D /dev/ARRAY

- -D: print the details of one or more md devices (--detail)

- Viewing the RAID status

cat /proc/mdstat

- Simulating a failure

mdadm -f /dev/ARRAY DISK

- -f: mark the disk as faulty (--fail)

- Removing a failed disk

mdadm -r /dev/ARRAY DISK

- -r: remove the disk from the array (--remove)

- Adding a new disk as a hot spare

mdadm -a /dev/ARRAY DISK

- -a: add the disk to the array (--add)
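The status that `cat /proc/mdstat` prints can also be read by a script. The following is a minimal sketch, assuming the line layout seen in the outputs later in this article (an `mdN : active raidX ...` header followed by a `[wanted/working] [UU_]` field). `parse_mdstat` and `SAMPLE` are hypothetical names used for illustration and are not part of mdadm.

```python
import re

# Sample text in the layout printed by /proc/mdstat for a degraded RAID1.
SAMPLE = """\
Personalities : [raid1]
md1 : active raid1 sdd1[2] sdc1[1] sdb1[0](F)
      20953088 blocks super 1.2 [2/1] [_U]

unused devices: <none>
"""


def parse_mdstat(text):
    """Return {array: {"level", "members", "failed", "degraded"}}."""
    arrays = {}
    current = None
    for line in text.splitlines():
        header = re.match(r"^(md\d+) : (\w+) (\w+) (.*)$", line)
        if header:
            name, _state, level, members = header.groups()
            # Each member looks like sdb1[0], optionally followed by (F) or (S).
            devs = re.findall(r"(\w+)\[\d+\]((?:\([A-Z]\))*)", members)
            current = arrays[name] = {
                "level": level,
                "members": [dev for dev, _ in devs],
                "failed": [dev for dev, flags in devs if "(F)" in flags],
                "degraded": False,
            }
            continue
        # [2/1] means 2 devices wanted, 1 currently working.
        status = re.search(r"\[(\d+)/(\d+)\] \[([U_]+)\]", line)
        if status and current is not None:
            wanted, working = int(status.group(1)), int(status.group(2))
            current["degraded"] = working < wanted
    return arrays


info = parse_mdstat(SAMPLE)
print(info["md1"]["failed"], info["md1"]["degraded"])
```

On a real system the text would come from reading `/proc/mdstat` instead of `SAMPLE`. Arrays without a `[n/m]` field, such as RAID0, are simply reported as not degraded by this sketch.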

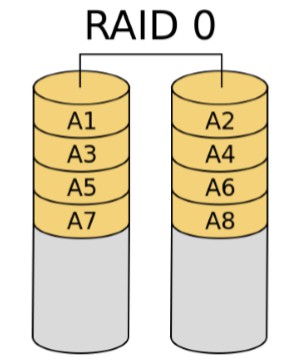

RAID0

RAID0, commonly known as striping, combines two or more hard disks into one logical disk whose capacity is the sum of all members. Because data is spread across the disks and written in parallel, writes are faster. However, there is no redundancy and no fault tolerance: if a single physical disk fails, all data is lost. RAID0 therefore suits scenarios with large amounts of data but low safety requirements, such as storing audio and video files.
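To make the idea of striping concrete, here is a minimal sketch of how a logical offset could map to a member disk. It assumes equally sized members, a plain round-robin layout, and the 512K chunk size that mdadm reports as its default in this article. `locate_chunk` is a hypothetical helper, and the real md driver additionally reserves space for metadata on each member.

```python
def locate_chunk(byte_offset, chunk_size=512 * 1024, n_disks=2):
    """Map a logical byte offset to (disk index, byte offset on that disk)."""
    # Which chunk the offset falls in, and how far into that chunk.
    chunk_index, within = divmod(byte_offset, chunk_size)
    # Chunks are dealt out to the disks in turn, one stripe at a time.
    stripe, disk = divmod(chunk_index, n_disks)
    return disk, stripe * chunk_size + within


for offset in (0, 512 * 1024, 1024 * 1024 + 10):
    print(offset, locate_chunk(offset))
```

Consecutive chunks land on different disks, which is why large sequential writes can keep all members busy at once.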

Experiment: create, format, mount, and use RAID0.

1. Add two 20 GB hard disks and partition them with partition type ID fd.

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

2. Create RAID0.

[root@localhost ~]# mdadm -C -v /dev/md0 -l0 -n2 /dev/sd{b,c}1

mdadm: chunk size defaults to 512K

mdadm: Fail create md0 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

3. Check the RAID status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0]

md0 : active raid0 sdc1[1] sdb1[0]

41906176 blocks super 1.2 512k chunks

unused devices: <none>

4. View the details of RAID0.

[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Sun Aug 25 15:28:13 2019

Raid Level : raid0

Array Size : 41906176 (39.96 GiB 42.91 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:28:13 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost:0 (local to host localhost)

UUID : 7ff54c57:b99a59da:6b56c6d5:a4576ccf

Events : 0

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

5. Formatting.

[root@localhost ~]# mkfs.xfs /dev/md0

meta-data=/dev/md0 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

6. Mount and use.

[root@localhost ~]# mkdir /mnt/md0

[root@localhost ~]# mount /dev/md0 /mnt/md0/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1013M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 904M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md0 xfs 40G 33M 40G 1% /mnt/md0
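The Array Size from step 4 (41906176 blocks of 1 KiB) and the 40G that df reports describe the same capacity in different units. A small sketch of the conversion follows; `describe_kib` is a hypothetical helper that only mimics the format of the Array Size line and is not taken from mdadm itself.

```python
def describe_kib(blocks_kib):
    """Format a size given in 1 KiB blocks as 'N (x GiB y GB)'."""
    size_bytes = blocks_kib * 1024
    gib = size_bytes / 1024**3  # binary gigabytes
    gb = size_bytes / 1000**3   # decimal gigabytes
    return f"{blocks_kib} ({gib:.2f} GiB {gb:.2f} GB)"


print(describe_kib(41906176))
```

For this value the result agrees with the Array Size line shown in step 4, and 39.96 GiB is what df rounds up to 40G.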

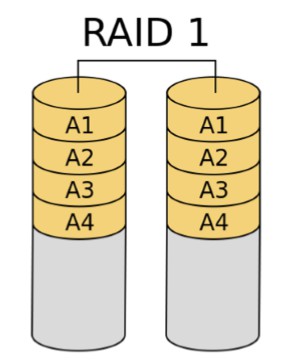

RAID1

RAID1, commonly known as mirroring, consists of at least two hard disks that hold identical data, which provides redundancy. Reads are faster; writes are in theory as fast as on a single disk but in practice slightly slower, because every write must go to all members. Its fault tolerance is the best of the levels covered here: the array keeps working as long as one disk is healthy. Its capacity utilization is the lowest, only 50% with two disks, so its cost is the highest. RAID1 suits scenarios with high data safety requirements, such as storing database data files.
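The mirroring behaviour described above can be modelled in a few lines. This is only an in-memory sketch under simplified assumptions (each disk is a dictionary of blocks); the `Mirror` class is hypothetical and does not reflect how the md driver is implemented.

```python
class Mirror:
    """Toy model of RAID1: every write goes to all healthy disks."""

    def __init__(self, n_disks=2):
        self.disks = [dict() for _ in range(n_disks)]
        self.failed = set()

    def write(self, block, data):
        # The same data is stored on every member that is still healthy.
        for index, disk in enumerate(self.disks):
            if index not in self.failed:
                disk[block] = data

    def fail(self, index):
        self.failed.add(index)

    def read(self, block):
        # Any healthy member can serve the read.
        for index, disk in enumerate(self.disks):
            if index not in self.failed:
                return disk[block]
        raise OSError("all mirrors have failed")


mirror = Mirror(n_disks=2)
mirror.write(0, b"test1")
mirror.fail(0)
print(mirror.read(0))
```

With two members, one failure leaves the data readable, while usable capacity stays at the size of a single disk.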

Experiment: create, format, and mount RAID1, simulate a failure, and re-add a hot spare.

1. Add three 20 GB hard disks and partition them with partition type ID fd.

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

2. Create RAID1 with one hot spare disk.

[root@localhost ~]# mdadm -C -v /dev/md1 -l1 -n2 /dev/sd{b,c}1 -x1 /dev/sdd1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md1 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

3. Check the RAID status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2](S) sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

[========>............] resync = 44.6% (9345792/20953088) finish=0.9min speed=203996K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2](S) sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

4. View the details of RAID1.

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:39:24 2019

State : clean, resyncing

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Consistency Policy : resync

Resync Status : 40% complete

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 6

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

2 8 49 - spare /dev/sdd1

5. Formatting.

[root@localhost ~]# mkfs.xfs /dev/md1

meta-data=/dev/md1 isize=512 agcount=4, agsize=1309568 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5238272, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

6. Mount and use.

[root@localhost ~]# mkdir /mnt/md1

[root@localhost ~]# mount /dev/md1 /mnt/md1/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1014M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 904M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md1 xfs 20G 33M 20G 1% /mnt/md1

7. Create test files.

[root@localhost ~]# touch /mnt/md1/test{1..9}.txt

[root@localhost ~]# ls /mnt/md1/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

8. Fault simulation.

[root@localhost ~]# mdadm -f /dev/md1 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md1

9. View the test files.

[root@localhost ~]# ls /mnt/md1/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

10. View status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2] sdc1[1] sdb1[0](F)

20953088 blocks super 1.2 [2/1] [_U]

[=====>...............] recovery = 26.7% (5600384/20953088) finish=1.2min speed=200013K/sec

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:47:57 2019

State : active, degraded, recovering

Active Devices : 1

Working Devices : 2

Failed Devices : 1

Spare Devices : 1

Consistency Policy : resync

Rebuild Status : 17% complete

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 22

Number Major Minor RaidDevice State

2 8 49 0 spare rebuilding /dev/sdd1

1 8 33 1 active sync /dev/sdc1

0 8 17 - faulty /dev/sdb1

11. Look at the status again.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2] sdc1[1] sdb1[0](F)

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:49:28 2019

State : active

Active Devices : 2

Working Devices : 2

Failed Devices : 1

Spare Devices : 0

Consistency Policy : resync

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 37

Number Major Minor RaidDevice State

2 8 49 0 active sync /dev/sdd1

1 8 33 1 active sync /dev/sdc1

0 8 17 - faulty /dev/sdb1

12. Remove damaged disks.

[root@localhost ~]# mdadm -r /dev/md1 /dev/sdb1

mdadm: hot removed /dev/sdb1 from /dev/md1

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:52:57 2019

State : active

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 38

Number Major Minor RaidDevice State

2 8 49 0 active sync /dev/sdd1

1 8 33 1 active sync /dev/sdc1

13. Re-add the hot backup disk.

[root@localhost ~]# mdadm -a /dev/md1 /dev/sdb1

mdadm: added /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:53:32 2019

State : active

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Consistency Policy : resync

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 39

Number Major Minor RaidDevice State

2 8 49 0 active sync /dev/sdd1

1 8 33 1 active sync /dev/sdc1

3 8 17 - spare /dev/sdb1

RAID5

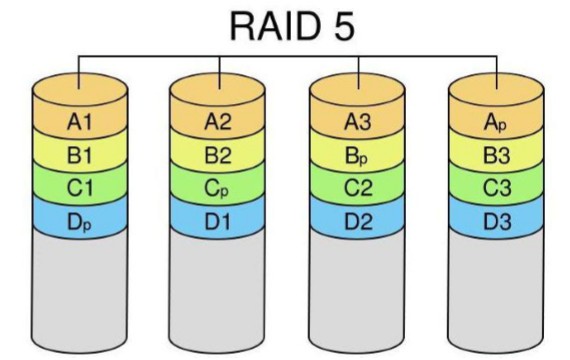

RAID5 consists of at least three hard disks. It stripes data across all disks in the array and also stores parity information, which is distributed across the members. Data and parity are related by an algorithm, so when one block is lost the RAID controller can recompute it from the remaining data and parity and restore the original. RAID5 can therefore tolerate the failure of at most one disk. It strikes a balance between fault tolerance and cost compared with the other levels, which makes it popular with most users and the most commonly used level for general-purpose disk arrays.
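The recovery idea can be illustrated with XOR parity, which is the parity operation RAID5 is built on. The sketch below works on two tiny in-memory data blocks and ignores how parity rotates between disks; `xor_blocks` and the sample block contents are made up for illustration.

```python
from functools import reduce


def xor_blocks(*blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Two data blocks of one stripe and the parity computed from them.
d0 = b"AAAA"
d1 = b"BBBB"
parity = xor_blocks(d0, d1)

# If the disk holding d0 fails, XOR of the survivors rebuilds it.
recovered = xor_blocks(d1, parity)
print(recovered == d0)
```

The same XOR works for any number of data blocks per stripe, but only one missing block per stripe can be rebuilt this way, which is why RAID5 tolerates a single disk failure.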

Experiment: create, format, and mount RAID5, simulate a failure, and re-add a hot spare.

1. Add four 20 GB hard disks and partition them with partition type ID fd.

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sde1 2048 41943039 20970496 fd Linux raid autodetect

2. Create RAID5 with one hot spare disk.

[root@localhost ~]# mdadm -C -v /dev/md5 -l5 -n3 /dev/sd[b-d]1 -x1 /dev/sde1

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 20953088K

mdadm: Fail create md5 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

3. Check the RAID status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]

[====>................] recovery = 24.1% (5057340/20953088) finish=1.3min speed=202293K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

4. View the details of RAID5.

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:15:29 2019

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 18

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

3 8 65 - spare /dev/sde1

5. Formatting.

[root@localhost ~]# mkfs.xfs /dev/md5

meta-data=/dev/md5 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

6. Mount and use.

[root@localhost ~]# mkdir /mnt/md5

[root@localhost ~]# mount /dev/md5 /mnt/md5/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1014M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 904M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md5 xfs 40G 33M 40G 1% /mnt/md5

7. Create test files.

[root@localhost ~]# touch /mnt/md5/test{1..9}.txt

[root@localhost ~]# ls /mnt/md5/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

8. Fault simulation.

[root@localhost ~]# mdadm -f /dev/md5 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md5

9. View the test files.

[root@localhost ~]# ls /mnt/md5/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

10. View status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3] sdc1[1] sdb1[0](F)

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[====>................] recovery = 21.0% (4411136/20953088) finish=1.3min speed=210054K/sec

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:21:31 2019

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 12% complete

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 23

Number Major Minor RaidDevice State

3 8 65 0 spare rebuilding /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1

11. Look at the status again.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3] sdc1[1] sdb1[0](F)

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:23:09 2019

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 39

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1

12. Remove damaged disks.

[root@localhost ~]# mdadm -r /dev/md5 /dev/sdb1

mdadm: hot removed /dev/sdb1 from /dev/md5

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:25:01 2019

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 40

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

13. Re-add the hot backup disk.

[root@localhost ~]# mdadm -a /dev/md5 /dev/sdb1

mdadm: added /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:25:22 2019

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 41

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

5 8 17 - spare /dev/sdb1

RAID6

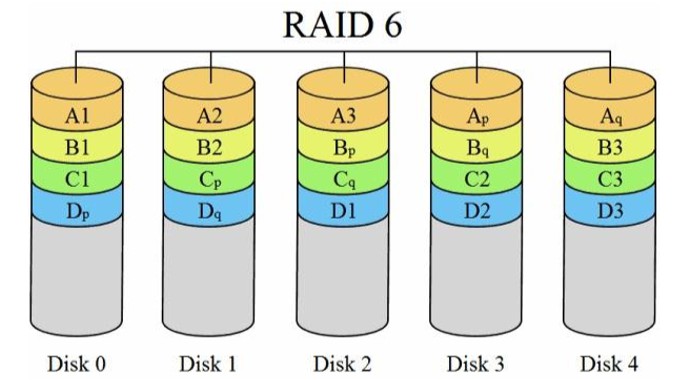

RAID6 builds on RAID5 by adding a second, independent parity block, so the number of disks that may fail rises from one to two. Because it is very unlikely that two disks in the same array fail at the same time, RAID6 buys higher data safety than RAID5 at the cost of one extra disk.
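The capacity cost of the extra parity can be checked with simple arithmetic. The sketch below assumes equally sized members and counts only active disks (hot spares add no capacity). `usable_kib` is a hypothetical helper, and 20953088 KiB is the per-member size that mdadm reports in this article's experiments.

```python
def usable_kib(level, n_active, member_kib):
    """Usable capacity in KiB for RAID 0, 1, 5 or 6 with equal members."""
    data_disks = {
        0: n_active,      # striping: every disk holds data
        1: 1,             # mirroring: one disk's worth of data
        5: n_active - 1,  # one disk's worth of parity
        6: n_active - 2,  # two disks' worth of parity
    }[level]
    return data_disks * member_kib


MEMBER_KIB = 20953088
print(usable_kib(5, 3, MEMBER_KIB))  # three-disk RAID5
print(usable_kib(6, 4, MEMBER_KIB))  # four-disk RAID6
```

Both calls give 41906176 KiB, so a four-disk RAID6 offers the same usable space as a three-disk RAID5 built from the same members.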

Experiment: create, format, and mount RAID6, simulate a failure, and re-add hot spares.

1. Add six 20 GB hard disks and partition them with partition type ID fd.

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sde1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdf1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdg1 2048 41943039 20970496 fd Linux raid autodetect

2. Create RAID6 with two hot spare disks.

[root@localhost ~]# mdadm -C -v /dev/md6 -l6 -n4 /dev/sd[b-e]1 -x2 /dev/sd[f-g]1

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 20953088K

mdadm: Fail create md6 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md6 started.

3. Check the RAID status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5](S) sdf1[4](S) sde1[3] sdd1[2] sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]

[===>.................] resync = 18.9% (3962940/20953088) finish=1.3min speed=208575K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5](S) sdf1[4](S) sde1[3] sdd1[2] sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

4. View the details of RAID6.

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:34:43 2019

State : clean, resyncing

Active Devices : 4

Working Devices : 6

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Resync Status : 10% complete

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 1

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

4 8 81 - spare /dev/sdf1

5 8 97 - spare /dev/sdg1

5. Formatting.

[root@localhost ~]# mkfs.xfs /dev/md6

meta-data=/dev/md6 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

6. Mount and use.

[root@localhost ~]# mkdir /mnt/md6

[root@localhost ~]# mount /dev/md6 /mnt/md6/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1014M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 903M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md6 xfs 40G 33M 40G 1% /mnt/md6

7. Create test files.

[root@localhost ~]# touch /mnt/md6/test{1..9}.txt

[root@localhost ~]# ls /mnt/md6/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

8. Fault simulation.

[root@localhost ~]# mdadm -f /dev/md6 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md6

[root@localhost ~]# mdadm -f /dev/md6 /dev/sdc1

mdadm: set /dev/sdc1 faulty in /dev/md6

9. View the test files.

[root@localhost ~]# ls /mnt/md6/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

10. View status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5] sdf1[4] sde1[3] sdd1[2] sdc1[1](F) sdb1[0](F)

41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/2] [__UU]

[====>................] recovery = 23.8% (4993596/20953088) finish=1.2min speed=208066K/sec

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:41:09 2019

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 2

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 13% complete

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 27

Number Major Minor RaidDevice State

5 8 97 0 spare rebuilding /dev/sdg1

4 8 81 1 spare rebuilding /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

0 8 17 - faulty /dev/sdb1

1 8 33 - faulty /dev/sdc1

11. Look at the status again.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5] sdf1[4] sde1[3] sdd1[2] sdc1[1](F) sdb1[0](F)

41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:42:42 2019

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 2

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 46

Number Major Minor RaidDevice State

5 8 97 0 active sync /dev/sdg1

4 8 81 1 active sync /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

0 8 17 - faulty /dev/sdb1

1 8 33 - faulty /dev/sdc1

12. Remove damaged disks.

[root@localhost ~]# mdadm -r /dev/md6 /dev/sd{b,c}1

mdadm: hot removed /dev/sdb1 from /dev/md6

mdadm: hot removed /dev/sdc1 from /dev/md6

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:43:43 2019

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 47

Number Major Minor RaidDevice State

5 8 97 0 active sync /dev/sdg1

4 8 81 1 active sync /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

13. Re-add the hot backup disk.

[root@localhost ~]# mdadm -a /dev/md6 /dev/sd{b,c}1

mdadm: added /dev/sdb1

mdadm: added /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:44:01 2019

State : clean

Active Devices : 4

Working Devices : 6

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 49

Number Major Minor RaidDevice State

5 8 97 0 active sync /dev/sdg1

4 8 81 1 active sync /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

6 8 17 - spare /dev/sdb1

7 8 33 - spare /dev/sdc1

RAID10

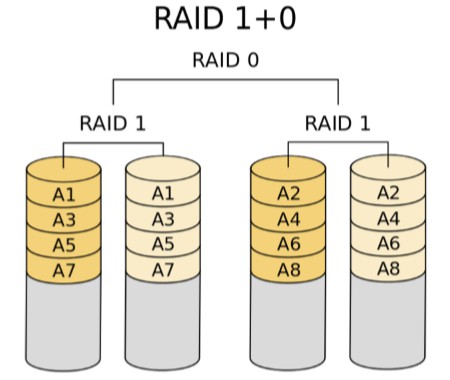

RAID10 mirrors the data first and then stripes it across the mirrors. RAID1 provides the redundancy, while RAID0 spreads reads and writes across the array. It needs at least four disks: two RAID1 groups combined into one RAID0. Capacity utilization is as low as with RAID1, only 50%, but in return RAID10 offers roughly twice the speed of a single disk and keeps data safe when a single disk fails. Data also stays safe when two disks fail, provided they are not in the same RAID1 group. RAID10 can perform better than RAID5, but it scales poorly and is expensive to use.
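Which double failures a four-disk RAID10 survives can be enumerated directly. The sketch below assumes the grouping used in the experiment that follows (sdb1 and sdc1 in one mirror, sdd1 and sde1 in the other). `survives` is a hypothetical helper that applies only the rule stated above: each mirror must keep at least one working disk.

```python
from itertools import combinations

# The two RAID1 groups that are striped together.
MIRRORS = {"md101": {"sdb1", "sdc1"}, "md102": {"sdd1", "sde1"}}


def survives(failed):
    """True if every mirror still has at least one working disk."""
    return all(members - set(failed) for members in MIRRORS.values())


disks = sorted(set().union(*MIRRORS.values()))
results = {pair: survives(pair) for pair in combinations(disks, 2)}
for pair, ok in results.items():
    print(pair, "survives" if ok else "data lost")
```

Of the six possible pairs of failed disks, only the two pairs that sit inside the same mirror lose data.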

Experiment: create, format, mount, and use RAID10, simulate a failure, and re-add a hot spare.

1. Add four 20 GB hard disks and partition them with partition type ID fd.

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sde1 2048 41943039 20970496 fd Linux raid autodetect

2. Create two RAID1 arrays without hot spare disks.

[root@localhost ~]# mdadm -C -v /dev/md101 -l1 -n2 /dev/sd{b,c}1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md101 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md101 started.

[root@localhost ~]# mdadm -C -v /dev/md102 -l1 -n2 /dev/sd{d,e}1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md102 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md102 started.

3. Check the RAID status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md102 : active raid1 sde1[1] sdd1[0]

20953088 blocks super 1.2 [2/2] [UU]

[=========>...........] resync = 48.4% (10148224/20953088) finish=0.8min speed=200056K/sec

md101 : active raid1 sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

[=============>.......] resync = 69.6% (14604672/20953088) finish=0.5min speed=200052K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md102 : active raid1 sde1[1] sdd1[0]

20953088 blocks super 1.2 [2/2] [UU]

md101 : active raid1 sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

4. View the details of the two RAID1 arrays.

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Sun Aug 25 16:53:00 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:53:58 2019

State : clean, resyncing

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Resync Status : 62% complete

Name : localhost:101 (local to host localhost)

UUID : 80bb4fc5:1a628936:275ba828:17f23330

Events : 9

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Sun Aug 25 16:53:23 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:54:02 2019

State : clean, resyncing

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Resync Status : 42% complete

Name : localhost:102 (local to host localhost)

UUID : 38abac72:74fa8a53:3a21b5e4:01ae64cd

Events : 6

Number Major Minor RaidDevice State

0 8 49 0 active sync /dev/sdd1

1 8 65 1 active sync /dev/sde1

5. Create RAID 10 by building a RAID0 stripe across the two RAID1 arrays.

[root@localhost ~]# mdadm -C -v /dev/md10 -l0 -n2 /dev/md10{1,2}

mdadm: chunk size defaults to 512K

mdadm: Fail create md10 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md10 started.

6. Check the RAID status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

41871360 blocks super 1.2 512k chunks

md102 : active raid1 sde1[1] sdd1[0]

20953088 blocks super 1.2 [2/2] [UU]

md101 : active raid1 sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

7. View the details of the RAID 10 array.

[root@localhost ~]# mdadm -D /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Sun Aug 25 16:56:08 2019

Raid Level : raid0

Array Size : 41871360 (39.93 GiB 42.88 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:56:08 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost:10 (local to host localhost)

UUID : 23c6abac:b131a049:db25cac8:686fb045

Events : 0

Number Major Minor RaidDevice State

0 9 101 0 active sync /dev/md101

1 9 102 1 active sync /dev/md102

8. Format the array.

[root@localhost ~]# mkfs.xfs /dev/md10

meta-data=/dev/md10 isize=512 agcount=16, agsize=654208 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10467328, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5112, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

9. Mount and use.

[root@localhost ~]# mkdir /mnt/md10

[root@localhost ~]# mount /dev/md10 /mnt/md10/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1014M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 903M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md10 xfs 40G 33M 40G 1% /mnt/md10
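
The `sunit` and `swidth` values that `mkfs.xfs` printed in step 8 are given in filesystem blocks, so it is not obvious at a glance that they match the array's 512K chunk size. The following is only a small sketch of that arithmetic; the function name `xfs_stripe_geometry` is made up for illustration, and the three numbers are copied from the output above.

```shell
#!/bin/sh
# xfs_stripe_geometry BSIZE SUNIT SWIDTH   (hypothetical helper, not part of xfsprogs)
# BSIZE is the filesystem block size in bytes; SUNIT and SWIDTH are in blocks.
# Prints: stripe unit (KiB), stripe width (KiB), number of data members.
xfs_stripe_geometry() {
    bsize=$1 sunit=$2 swidth=$3
    echo "$(( sunit * bsize / 1024 )) $(( swidth * bsize / 1024 )) $(( swidth / sunit ))"
}

# Values copied from the mkfs.xfs output in step 8.
xfs_stripe_geometry 4096 128 256
```

This prints `512 1024 2`: a 512 KiB stripe unit, which equals the chunk size mdadm reported, and a 1024 KiB stripe width spread over the two RAID0 members (md101 and md102).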

10. Create test files.

[root@localhost ~]# touch /mnt/md10/test{1..9}.txt

[root@localhost ~]# ls /mnt/md10/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

11. Simulate a fault on one disk in each RAID1 array.

[root@localhost ~]# mdadm -f /dev/md101 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md101

[root@localhost ~]# mdadm -f /dev/md102 /dev/sdd1

mdadm: set /dev/sdd1 faulty in /dev/md102

12. View the test files.

[root@localhost ~]# ls /mnt/md10/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt
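
The files are still readable because the two failed disks sit in different mirrors, so each RAID1 array keeps one healthy member. A rough sketch of that rule follows; the function name `raid10_survives` is hypothetical, and the disk-to-mirror mapping is hard-coded from this experiment rather than read from the system.

```shell
#!/bin/sh
# Mirror layout in this experiment: md101 = sdb1 + sdc1, md102 = sdd1 + sde1.
# raid10_survives FAILED_DISK...   (hypothetical helper for illustration)
# Prints "ok" if every mirror keeps at least one healthy member, else "lost".
raid10_survives() {
    for mirror in "sdb1 sdc1" "sdd1 sde1"; do
        alive=0
        for disk in $mirror; do
            failed=0
            for f in "$@"; do
                [ "$f" = "$disk" ] && failed=1
            done
            [ "$failed" -eq 0 ] && alive=$(( alive + 1 ))
        done
        if [ "$alive" -eq 0 ]; then
            echo lost
            return 0
        fi
    done
    echo ok
}

raid10_survives sdb1 sdd1   # the failure simulated in step 11
raid10_survives sdb1 sdc1   # both members of md101
```

The first call prints `ok` and the second prints `lost`: losing one disk per mirror is survivable, while losing both disks of the same mirror takes the RAID0 layer down with it.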

13. View status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

41871360 blocks super 1.2 512k chunks

md102 : active raid1 sde1[1] sdd1[0](F)

20953088 blocks super 1.2 [2/1] [_U]

md101 : active raid1 sdc1[1] sdb1[0](F)

20953088 blocks super 1.2 [2/1] [_U]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Sun Aug 25 16:53:00 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:01:11 2019

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 1

Spare Devices : 0

Consistency Policy : resync

Name : localhost:101 (local to host localhost)

UUID : 80bb4fc5:1a628936:275ba828:17f23330

Events : 23

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 33 1 active sync /dev/sdc1

0 8 17 - faulty /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Sun Aug 25 16:53:23 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:00:43 2019

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 1

Spare Devices : 0

Consistency Policy : resync

Name : localhost:102 (local to host localhost)

UUID : 38abac72:74fa8a53:3a21b5e4:01ae64cd

Events : 19

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 65 1 active sync /dev/sde1

0 8 49 - faulty /dev/sdd1

[root@localhost ~]# mdadm -D /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Sun Aug 25 16:56:08 2019

Raid Level : raid0

Array Size : 41871360 (39.93 GiB 42.88 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:56:08 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost:10 (local to host localhost)

UUID : 23c6abac:b131a049:db25cac8:686fb045

Events : 0

Number Major Minor RaidDevice State

0 9 101 0 active sync /dev/md101

1 9 102 1 active sync /dev/md102

14. Remove the failed disks.

[root@localhost ~]# mdadm -r /dev/md101 /dev/sdb1

mdadm: hot removed /dev/sdb1 from /dev/md101

[root@localhost ~]# mdadm -r /dev/md102 /dev/sdd1

mdadm: hot removed /dev/sdd1 from /dev/md102

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Sun Aug 25 16:53:00 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 1

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:04:59 2019

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:101 (local to host localhost)

UUID : 80bb4fc5:1a628936:275ba828:17f23330

Events : 26

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 33 1 active sync /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Sun Aug 25 16:53:23 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 1

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:05:07 2019

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:102 (local to host localhost)

UUID : 38abac72:74fa8a53:3a21b5e4:01ae64cd

Events : 20

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 65 1 active sync /dev/sde1

15. Add the disks back to the arrays.

[root@localhost ~]# mdadm -a /dev/md101 /dev/sdb1

mdadm: added /dev/sdb1

[root@localhost ~]# mdadm -a /dev/md102 /dev/sdd1

mdadm: added /dev/sdd1

16. Check the status again.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

41871360 blocks super 1.2 512k chunks

md102 : active raid1 sdd1[2] sde1[1]

20953088 blocks super 1.2 [2/1] [_U]

[====>................] recovery = 23.8% (5000704/20953088) finish=1.2min speed=208362K/sec

md101 : active raid1 sdb1[2] sdc1[1]

20953088 blocks super 1.2 [2/1] [_U]

[======>..............] recovery = 32.0% (6712448/20953088) finish=1.1min speed=203407K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

41871360 blocks super 1.2 512k chunks

md102 : active raid1 sdd1[2] sde1[1]

20953088 blocks super 1.2 [2/2] [UU]

md101 : active raid1 sdb1[2] sdc1[1]

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Sun Aug 25 16:53:00 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:07:28 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:101 (local to host localhost)

UUID : 80bb4fc5:1a628936:275ba828:17f23330

Events : 45

Number Major Minor RaidDevice State

2 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Sun Aug 25 16:53:23 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:07:36 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:102 (local to host localhost)

UUID : 38abac72:74fa8a53:3a21b5e4:01ae64cd

Events : 39

Number Major Minor RaidDevice State

2 8 49 0 active sync /dev/sdd1

1 8 65 1 active sync /dev/sde1
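
Throughout the experiment, the quickest health indicator was the status field in `/proc/mdstat`: `[UU]` for a complete mirror and `[_U]` for a degraded one. As a minimal sketch, assuming the mdstat layout shown in this article, the check can be scripted; the function name `degraded_arrays` is invented for illustration.

```shell
#!/bin/sh
# degraded_arrays [FILE]   (hypothetical helper for illustration)
# Prints the name of every md array whose status field (e.g. [_U]) contains
# "_", meaning a member is missing or failed.
# FILE defaults to /proc/mdstat; pass a saved copy to try it without real arrays.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }    # remember which array we are in
        /blocks/ && $NF ~ /^\[[U_]+\]$/ && $NF ~ /_/ { print name }
    ' "${1:-/proc/mdstat}"
}
```

Run against the step 13 output, `degraded_arrays` would list md102 and md101; after the recovery in step 16 finishes it prints nothing. RAID0 arrays such as md10 have no `[UU]` field and are never reported. To block until a rebuild is done instead of polling, `mdadm --wait /dev/md101` can be used.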

Comparison of common RAID levels

| Level | Number of disks | Capacity utilization | Read performance | Write performance | Data redundancy |
|---|---|---|---|---|---|
| RAID0 | N ≥ 2 | 100% (sum of all N disks) | N times | N times | None; one disk failure loses all data |
| RAID1 | N ≥ 2 (usually 2) | 1/N (50% with 2 disks) | ↑ | ↓ | Every disk holds a full copy; with 2 disks, one failure is tolerated |
| RAID5 | N ≥ 3 | (N-1)/N | ↑↑ | ↓ | Single parity; one failure tolerated |
| RAID6 | N ≥ 4 | (N-2)/N | ↑↑ | ↓↓ | Dual parity; two failures tolerated |
| RAID10 | N ≥ 4 (even) | 50% | (N/2) times | (N/2) times | One failure tolerated in each mirror pair |
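
The utilization column can be turned into a quick capacity estimate. The sketch below assumes N equal-sized disks and ignores metadata overhead; `raid_capacity` is a made-up helper name, not an mdadm feature.

```shell
#!/bin/sh
# raid_capacity LEVEL N SIZE   (hypothetical helper for illustration)
# Usable capacity for N equal disks of SIZE each, ignoring metadata overhead.
raid_capacity() {
    level=$1 n=$2 size=$3
    case "$level" in
        0)  echo $(( n * size )) ;;          # striping: every disk holds data
        1)  echo "$size" ;;                  # mirroring: all disks hold the same copy
        5)  echo $(( (n - 1) * size )) ;;    # one disk's worth of parity
        6)  echo $(( (n - 2) * size )) ;;    # two disks' worth of parity
        10) echo $(( n / 2 * size )) ;;      # striped mirror pairs
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

raid_capacity 0 2 20    # RAID0 experiment: 2 x 20G
raid_capacity 10 4 20   # RAID10 experiment: 4 x 20G
```

Both calls print `40`, which agrees with the roughly 40G that `df -hT` reported for /dev/md10 built from four 20G disks.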

Some remarks

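The operations in this article are simple, but the command output takes up a lot of space. Focus on the key points: the process is a routine that repeats for every RAID level (create, check the status, format, mount, simulate a fault, remove the failed disk, add a disk back).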