1, Get the Ceph Luminous (L) source code

Download it from GitHub (details omitted).

2, Modify do_cmake.sh

Comment out the original cmake line and add a debug variant after it:

`#cmake -DBOOST_J=$(nproc) $ARGS "$@" ..`

`cmake -DCMAKE_C_FLAGS="-O0 -g3 -gdwarf-4" -DCMAKE_CXX_FLAGS="-O0 -g3 -gdwarf-4" -DBOOST_J=$(nproc) $ARGS "$@" ..`

Explanation of the changes:

- `CMAKE_C_FLAGS="-O0 -g3 -gdwarf-4"`: compiler flags for C sources.
- `CMAKE_CXX_FLAGS="-O0 -g3 -gdwarf-4"`: compiler flags for C++ sources.
- `-O0`: turns off compiler optimization. Without it, most variables are optimized out and cannot be displayed when tracing with GDB. Do not use it for production builds.
- `-g3`: generates the maximum amount of debugging information.
- `-gdwarf-4`: emits the debugging information in the DWARF format, version 4.
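If you would rather script this edit than make it by hand, the sketch below comments out the stock cmake line and appends the debug variant right after it. It assumes do_cmake.sh contains the stock line exactly as shown above, with no indentation; `patch_do_cmake` is a hypothetical helper name, not part of the Ceph tree.

```shell
#!/bin/sh
# Sketch: comment out the stock cmake call in do_cmake.sh and add a debug-build line.
# Assumption: the stock line appears exactly as below, with no indentation.
set -eu

patch_do_cmake() {
    local script="$1" tmp line=""
    # Single quotes keep $(nproc), $ARGS and "$@" literal, as they appear in the file.
    local orig='cmake -DBOOST_J=$(nproc) $ARGS "$@" ..'
    local debug='cmake -DCMAKE_C_FLAGS="-O0 -g3 -gdwarf-4" -DCMAKE_CXX_FLAGS="-O0 -g3 -gdwarf-4" -DBOOST_J=$(nproc) $ARGS "$@" ..'
    tmp="$(mktemp)"
    while IFS= read -r line || [ -n "$line" ]; do
        if [ "$line" = "$orig" ]; then
            # Keep the original as a comment, then emit the debug variant.
            printf '#%s\n%s\n' "$line" "$debug"
        else
            printf '%s\n' "$line"
        fi
    done < "$script" > "$tmp"
    # Write back through cat so the script keeps its permissions (a mv would not).
    cat "$tmp" > "$script"
    rm -f "$tmp"
}
```

Run it from the top of the source tree as `patch_do_cmake do_cmake.sh`. A second run leaves the file unchanged, because after the first run the stock line only exists in commented-out form.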

3, Run the do_cmake.sh script

This step creates a directory named build and runs cmake inside it. Once it succeeds, build contains the Makefile used by the make step later.

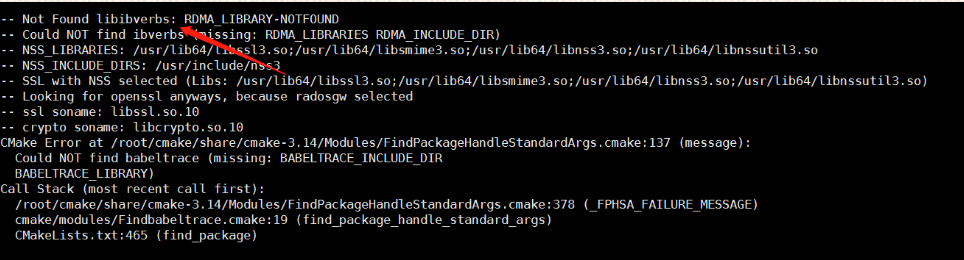

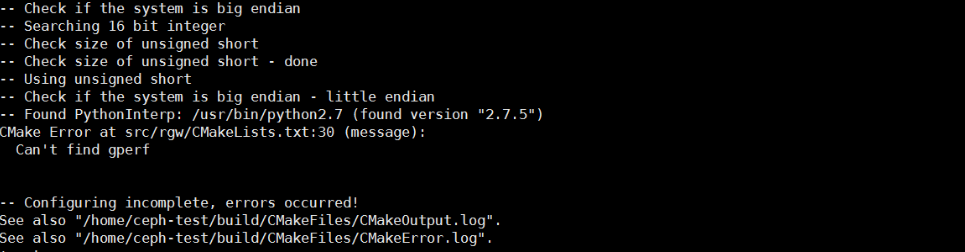

Expect many errors at this step; fix them one at a time.

The following RPM packages (and their dependencies) need to be installed:

`yum install python-sphinx nss-devel lttng-ust-devel babeltrace libbabeltrace-devel libibverbs python3 python3-devel python-Cython python3*-Cython gperftools gperftools-devel gperf`

Tip: installing python3-devel conflicts with an existing python-devel. Uninstall python-devel first, install python3-devel, and then install python-devel again:

`rpm -e python-devel`

`yum install python-devel`
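Before rerunning do_cmake.sh, it can help to see which of these packages are still missing. The sketch below asks `rpm -q` about each name and prints the ones that are not installed. `missing_pkgs` and the `PKG_QUERY` override are hypothetical names introduced here (the override only exists so the check can be swapped out in tests). Leave wildcard names such as `python3*-Cython` out of the list, since `rpm -q` expects exact package names.

```shell
#!/bin/sh
# Sketch: print the build dependencies that are not installed yet.
# PKG_QUERY is a hypothetical override hook; by default each name is checked with rpm -q.
set -eu

missing_pkgs() {
    local pkg
    for pkg in "$@"; do
        # rpm -q exits non-zero when the named package is not installed.
        # PKG_QUERY is left unquoted on purpose so "rpm -q" splits into command + flag.
        if ! ${PKG_QUERY:-rpm -q} "$pkg" >/dev/null 2>&1; then
            printf '%s\n' "$pkg"
        fi
    done
}
```

For example, `missing_pkgs nss-devel lttng-ust-devel gperf` prints only the names that still need a `yum install`.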

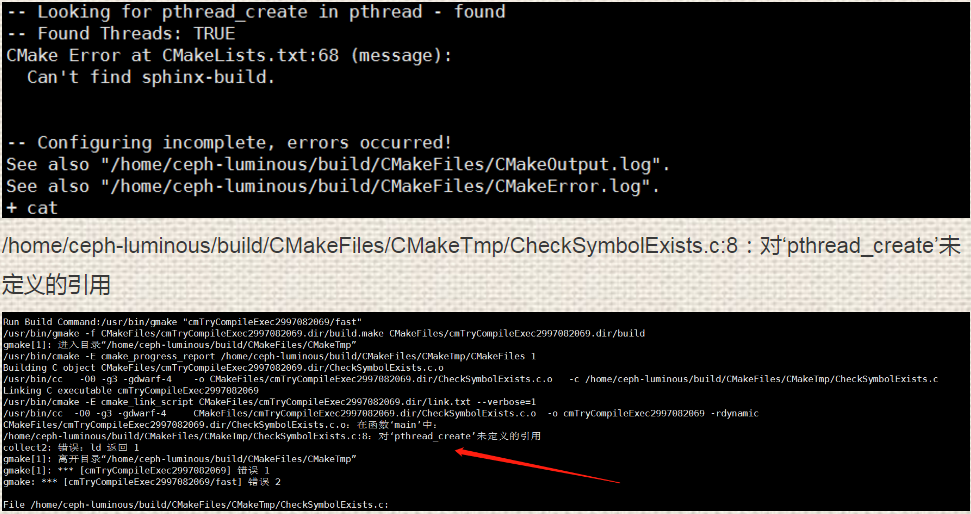

1. pthread_create problem

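This one took a long time to solve. The error was:

`/home/ceph-luminous/build/CMakeFiles/CMakeTmp/CheckSymbolExists.c:8: undefined reference to 'pthread_create'`

Solution:

yum install python-sphinx

Some bloggers say upgrading cmake fixes it, but the problem persisted after I upgraded. The message most likely comes from CMake's FindThreads probe, which first tries to link `pthread_create` without libpthread; on CentOS 7 that probe is expected to fail, so this entry is usually not the real cause, which fits with the fix being an unrelated package.

ref: https://tracker.ceph.com/issues/19294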
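CMakeError.log also records configuration probes that are allowed to fail, so the message that actually stopped do_cmake.sh is usually easier to find in cmake's own output. A minimal sketch, assuming that output was saved first (for example with `./do_cmake.sh 2>&1 | tee cmake.log`); `first_cmake_error` is a hypothetical helper that prints the first `CMake Error` block.

```shell
#!/bin/sh
# Sketch: print the first "CMake Error" block from a saved cmake log.
# Assumption: the log was captured with  ./do_cmake.sh 2>&1 | tee cmake.log
set -eu

first_cmake_error() {
    local log="$1"
    # -m1 stops at the first match; -A5 still prints the 5 lines that follow it.
    grep -m1 -A5 '^CMake Error' "$log" || echo "no CMake Error found in $log"
}
```

Usage: `first_cmake_error cmake.log`. The lines after the match normally name the missing package or module.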

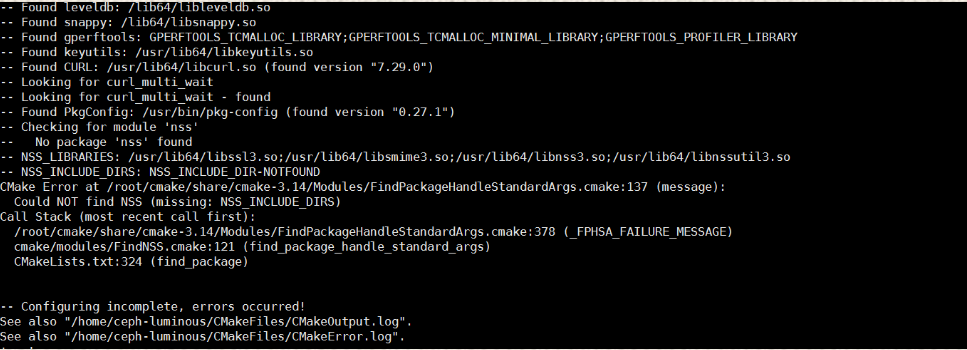

2. NSS related

Solution:

yum install nss-devel

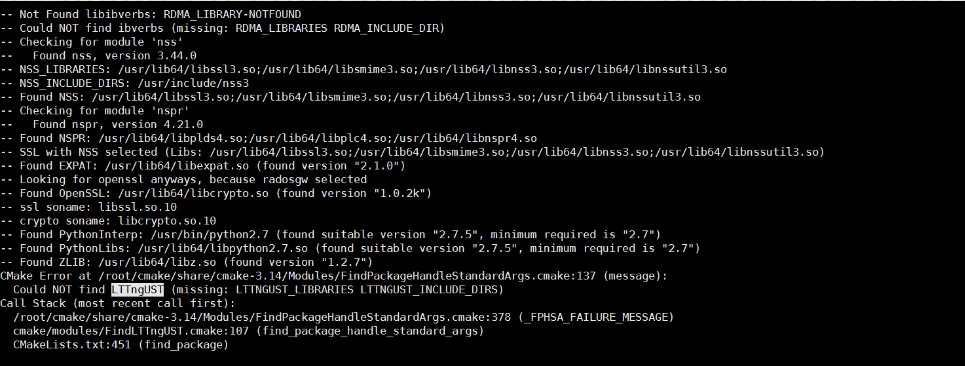

3. LTTng-UST related

Solution:

yum install lttng-ust-devel

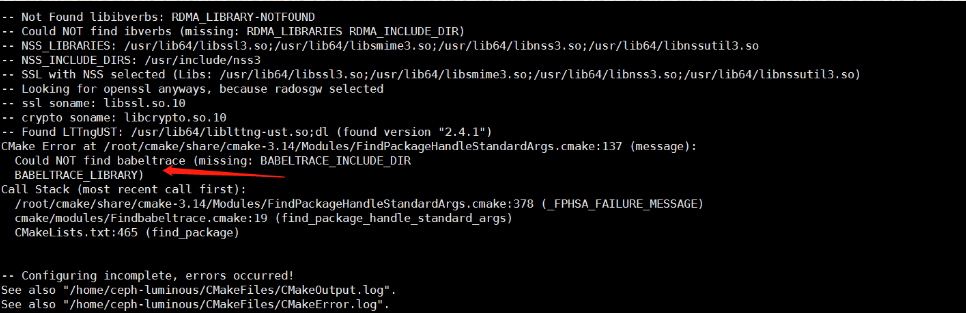

4. babeltrace

Solution:

yum install babeltrace

yum install libbabeltrace-devel

5. libibverbs

Solution:

yum install libibverbs

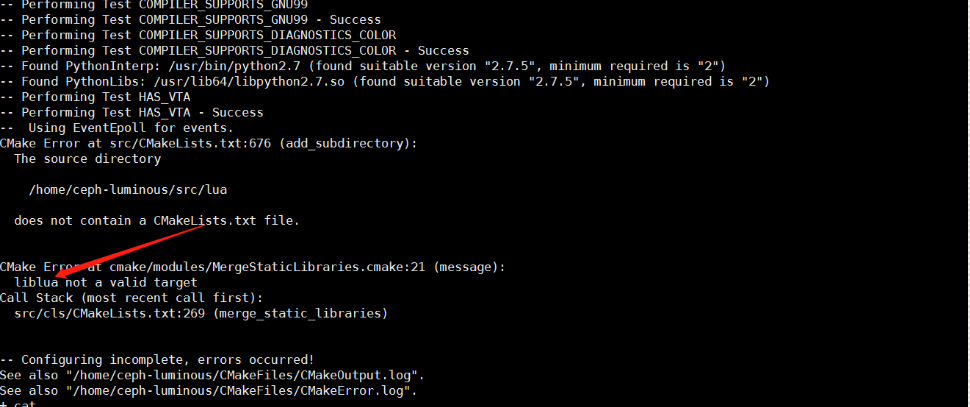

6. liblua related

This one also took a long time to solve.

Solution:

I no longer remember exactly how. I believe it was fixed by running `git submodule update --init --recursive`, the step in do_cmake.sh that fetches the bundled source packages, because I had commented that line out for convenience.

Possibly relevant as well: the Lua packages in the build environment and the contents of src/lua:

`[root@ceph1 ceph-luminous]# rpm -qa | grep lua`

`lua-5.1.4-15.el7.x86_64`

`lua-static-5.1.4-15.el7.x86_64`

`[root@ceph1 ceph-luminous]# ls src/lua/`

`cmake CMakeLists.txt dist.info doc etc Makefile README.md src`

https://tracker.ceph.com/issues/21418
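A quick way to check whether a skipped submodule update is behind an error like this is to look for submodules that were never initialised. `git submodule status` prefixes such entries with `-`; the sketch below filters for them. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: list submodules that were never initialised.
# Reads `git submodule status` output on stdin; uninitialised entries start with "-".
set -eu

list_uninitialised_submodules() {
    # Field 1 is "-<sha1>", field 2 is the submodule path.
    awk '/^-/ { print $2 }'
}
```

Usage, from the top of the source tree: `git submodule status --recursive | list_uninitialised_submodules`. If it prints anything (src/lua, for instance), run `git submodule update --init --recursive` before rerunning do_cmake.sh.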

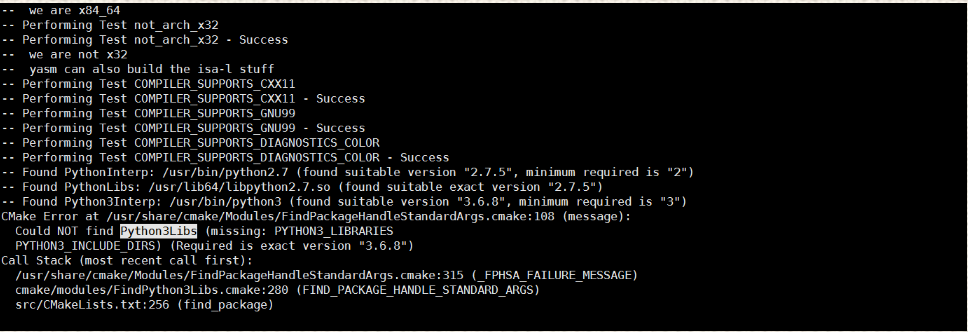

7. Python 3 libs problem

Solution:

yum install python3-devel

Tip: first uninstall python-devel (`rpm -e python-devel`), then install python3-devel, and then install python-devel again.

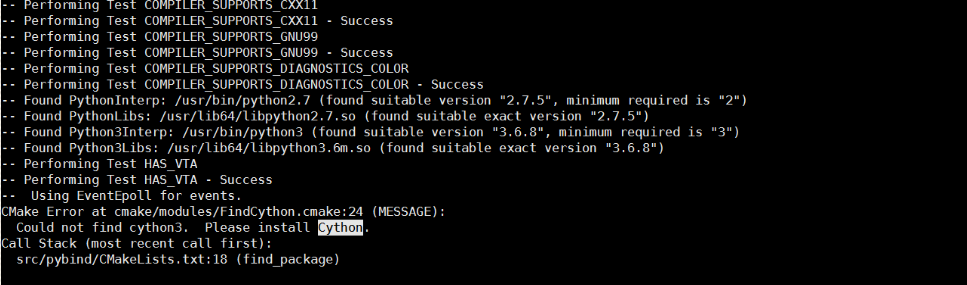

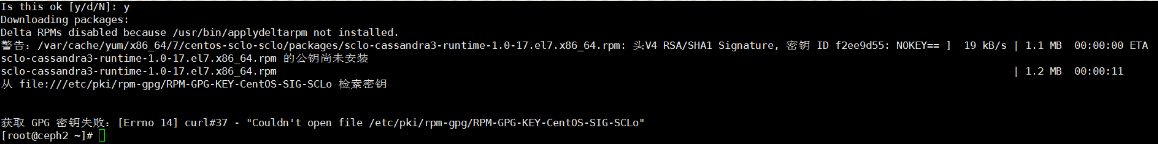

8. Python problem

Solution:

`yum install python-Cython` actually installed: sclo-cassandra3-runtime-1.0-17.el7.x86_64, sclo-cassandra3-python2-Cython-0.27.1-2.el7.x86_64

`yum install python3*-Cython` actually installed: python34-3.4.10-4.el7.x86_64.rpm, python34-Cython-0.28.5-1.el7.x86_64.rpm, python34-libs-3.4.10-4.el7.x86_64.rpm, python36-Cython-0.28.5-1.el7.x86_64.rpm

9. gperf problem

Solution:

yum install gperftools gperftools-devel (pulls in 69 dependent packages)

yum install gperf

10. sclo problem

Solution:

yum install centos-release-scl

do_cmake.sh now completes successfully.

4, Compile and install

Run `cmake . -LH` in the build directory to list Ceph's build options, and pick the ones you need.

Then run:

cmake .. -DWITH_LTTNG=OFF -DWITH_RDMA=OFF -DWITH_FUSE=OFF -DWITH_DPDK=OFF -DCMAKE_INSTALL_PREFIX=/usr

Run `make -j8` to compile the source.

Run `make install` to install the build.
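The configure, compile and install steps above can be collected into one function. This is a sketch: `build_ceph`, `run` and `DRY_RUN` are hypothetical names, the option list is copied from the cmake command above, and `make -j` uses `nproc` instead of the fixed 8.

```shell
#!/bin/sh
# Sketch: configure, compile and install in one go; DRY_RUN=1 only prints the commands.
# build_ceph, run and DRY_RUN are hypothetical names.
set -eu

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

build_ceph() (
    # Runs in a subshell so the cd does not affect the caller.
    build_dir="$1"
    njobs="$(nproc)"
    cd "$build_dir"
    run cmake .. -DWITH_LTTNG=OFF -DWITH_RDMA=OFF -DWITH_FUSE=OFF -DWITH_DPDK=OFF -DCMAKE_INSTALL_PREFIX=/usr
    run make -j"$njobs"
    run make install
)
```

`DRY_RUN=1 build_ceph build` prints the three commands for review; without `DRY_RUN` it runs them (with `/usr` as the prefix, `make install` needs root).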

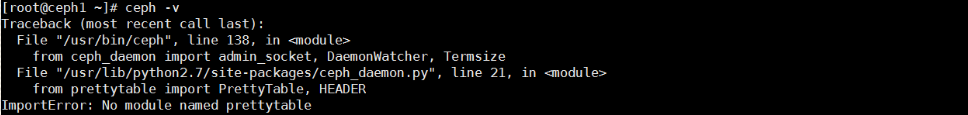

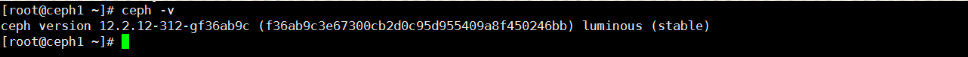

5, Check the Ceph version: prettytable problem

Solution:

yum install python-prettytable

6, Start deployment

1. Time synchronization:

Install NTP on every node:

`[root@ceph-node1 ~]# yum install -y ntp ntpdate ntp-doc`

`[root@ceph-node2 ~]# yum install -y ntp ntpdate ntp-doc`

`[root@ceph-node3 ~]# yum install -y ntp ntpdate ntp-doc`

Sync the clock once and check it:

`[root@ceph-node1 ~]# ntpdate ntp1.aliyun.com`

`31 Jul 03:43:04 ntpdate[973]: adjust time server 120.25.115.20 offset 0.001528 sec`

`[root@ceph-node1 ~]# hwclock`

`Tue 31 Jul 2018 03:44:55 AM EDT -0.302897 seconds`

Then add a cron job with `crontab -e` that resyncs every five minutes:

`*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com`
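Editing the crontab by hand on three nodes is easy to get wrong, so the ntpdate entry can also be added non-interactively. The sketch below reads an existing crontab on stdin and appends the line only if it is not already present; `with_cron_line` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: append the ntpdate cron entry to a crontab read on stdin, at most once.
set -eu

CRON_LINE='*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com'

with_cron_line() {
    local existing
    existing="$(cat)"
    # Re-emit the current entries unchanged.
    if [ -n "$existing" ]; then
        printf '%s\n' "$existing"
    fi
    # Append the entry only when no identical line exists yet.
    if ! printf '%s\n' "$existing" | grep -qxF -- "$CRON_LINE"; then
        printf '%s\n' "$CRON_LINE"
    fi
}
```

On each node: `crontab -l 2>/dev/null | with_cron_line | crontab -`. Running it again does not add a duplicate entry.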

2. Passwordless SSH between nodes, /etc/hosts and hostname configuration

(Details omitted.)

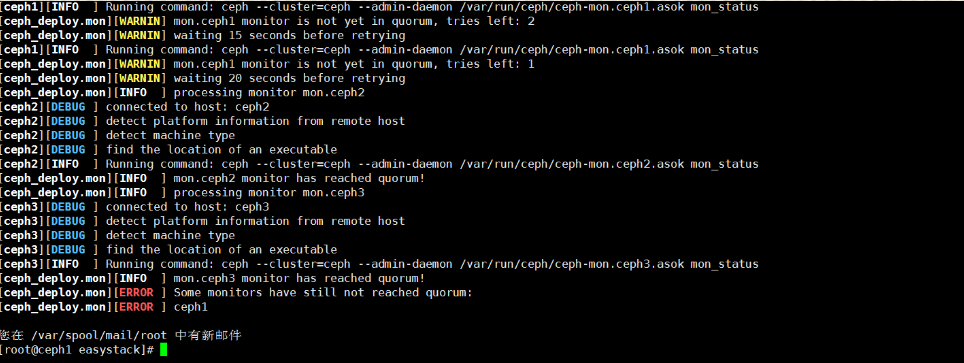

3. Mon initialization

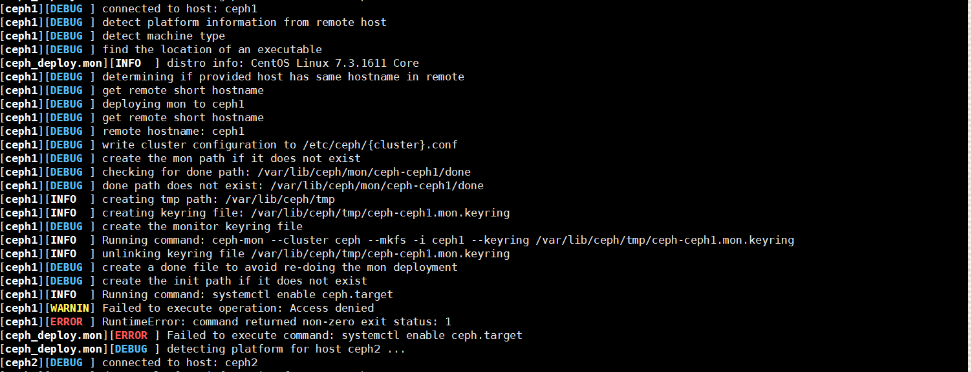

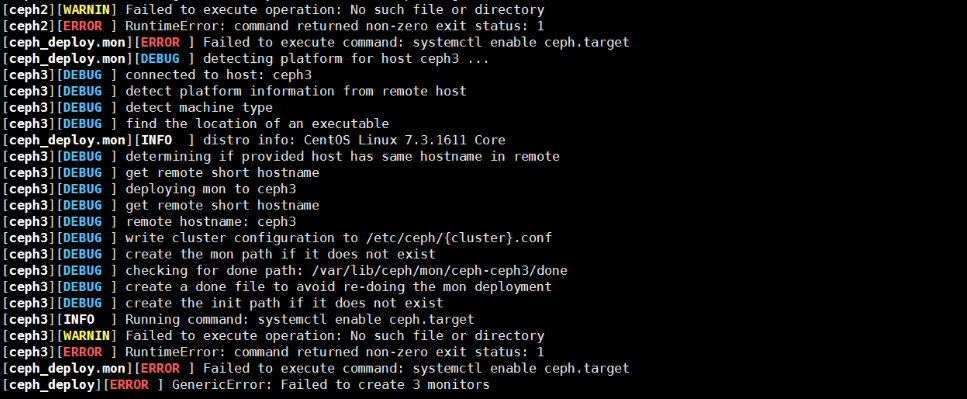

`ceph-deploy new ceph1 ceph2 ceph3 --public-network=192.168.111.0/24 --cluster-network=192.168.111.0/24`

`ceph-deploy --overwrite-conf mon create-initial`

Problem 1:

Error: `Failed to execute command: systemctl enable ceph.target` (this one also took a long time to solve).

This happened even after `setenforce 0`. The actual cause is that installing from source does not generate the ceph target and service files under /usr/lib/systemd/system/.

Solution: copy them from the source tree:

`[root@ceph2 ceph-luminous]# pwd`

`/home/ceph-luminous`

`cp systemd/ceph*.target /usr/lib/systemd/system/`

`cp systemd/ceph*.service /usr/lib/systemd/system/`

`cp systemd/ceph /usr/lib/systemd/system/`
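The same copy can be scripted so it is easy to repeat on every node. This sketch mirrors the three `cp` commands above; the function name and the optional destination argument are assumptions (the destination parameter lets you try the copy in a scratch directory first).

```shell
#!/bin/sh
# Sketch: copy the ceph systemd units from a source tree, as the cp commands above do.
# Assumptions: SRC is the ceph source tree; DEST defaults to /usr/lib/systemd/system.
set -eu

install_ceph_units() {
    local src="$1" dest="${2:-/usr/lib/systemd/system}" f
    for f in "$src"/systemd/ceph*.target "$src"/systemd/ceph*.service "$src"/systemd/ceph; do
        # An unmatched glob stays literal, so skip anything that does not exist.
        [ -e "$f" ] || continue
        cp "$f" "$dest/"
    done
}
```

After copying, run `systemctl daemon-reload` so systemd picks up the new unit files, then retry `systemctl enable ceph.target`.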

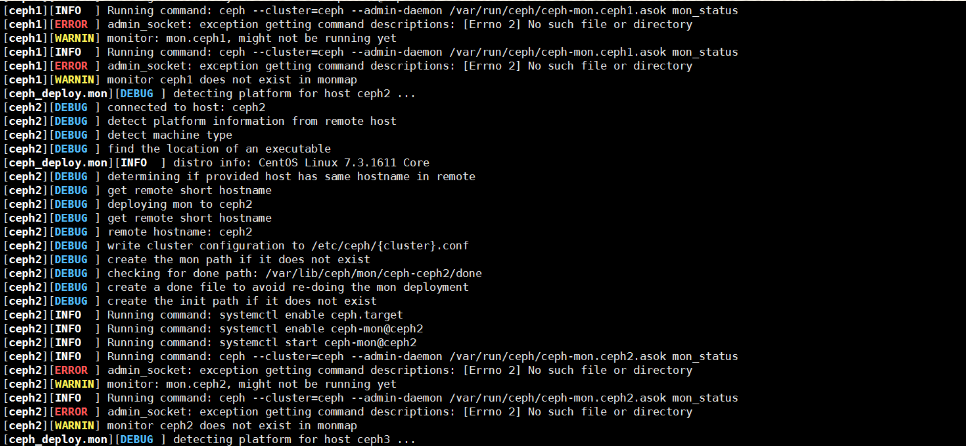

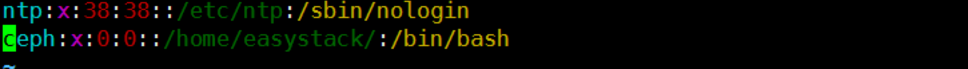

Problem 2:

The ceph user does not exist.

Solution:

Add a ceph user and adjust its permissions:

`adduser -d /home/easystack/ -m ceph`

Then edit /etc/passwd (`vim /etc/passwd`) and change the ceph user's UID/GID to 0.

Problem 3:

This is caused by the names in /etc/hosts not matching (or conflicting with) the node hostnames.
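One way to catch this before running ceph-deploy is to check, on each node, that its short hostname appears in /etc/hosts with exactly one address; an extra `127.0.0.1` entry for the same name counts as a conflict. The sketch assumes the standard `address name [aliases...]` layout of /etc/hosts; `addresses_for` and `check_node` are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: check that a hostname maps to exactly one address in a hosts file.
set -eu

# Prints every address in HOSTS_FILE that lists NAME as a hostname or alias.
addresses_for() {
    local name="$1" hosts_file="${2:-/etc/hosts}"
    awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; break } }' "$hosts_file"
}

check_node() {
    local name="$1" hosts_file="${2:-/etc/hosts}" count
    # grep -c exits 1 when it counts 0 lines; "|| true" keeps set -e from aborting.
    count="$(addresses_for "$name" "$hosts_file" | sort -u | grep -c '' || true)"
    case "$count" in
        0) echo "$name: missing from $hosts_file"; return 1 ;;
        1) echo "$name: ok" ;;
        *) echo "$name: conflicting entries in $hosts_file"; return 1 ;;
    esac
}
```

On each node: `check_node "$(hostname -s)"`.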

After fixing them:

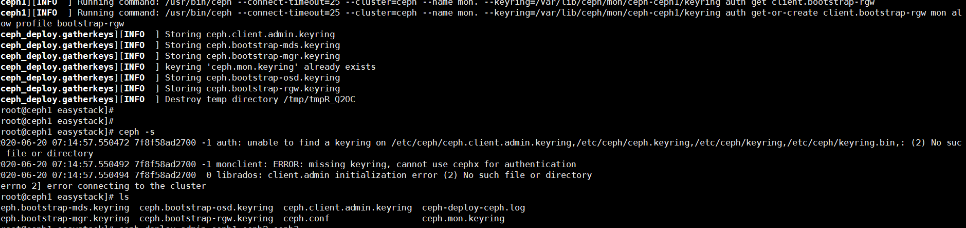

Run `ceph-deploy admin ceph1 ceph2 ceph3` to push the keys to their locations (mainly, ceph.client.admin.keyring must be placed under /etc/ceph/).

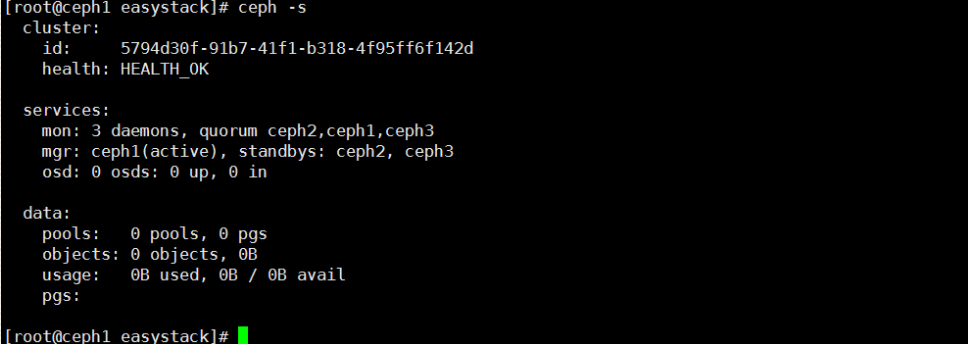

At this point, mon initialization has succeeded.

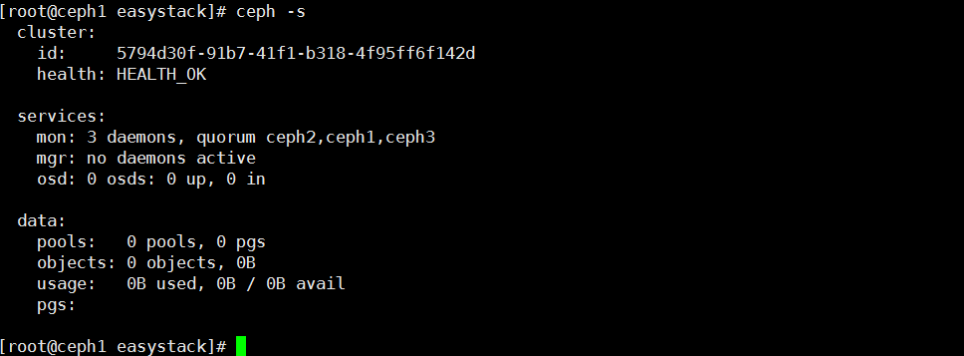

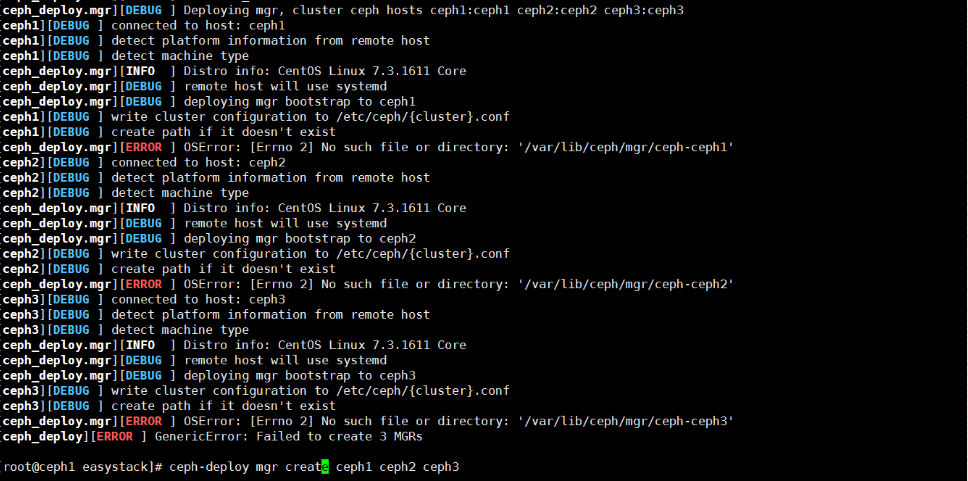

4. mgr deployment

The cluster reports "no active mgr". The mgr (manager) daemon was added after the J (Jewel) release, so it has to be deployed as well:

Solution:

Create the mgr directory manually: `mkdir -p /var/lib/ceph/mgr`

Then redeploy the mgr.

5. osd deployment

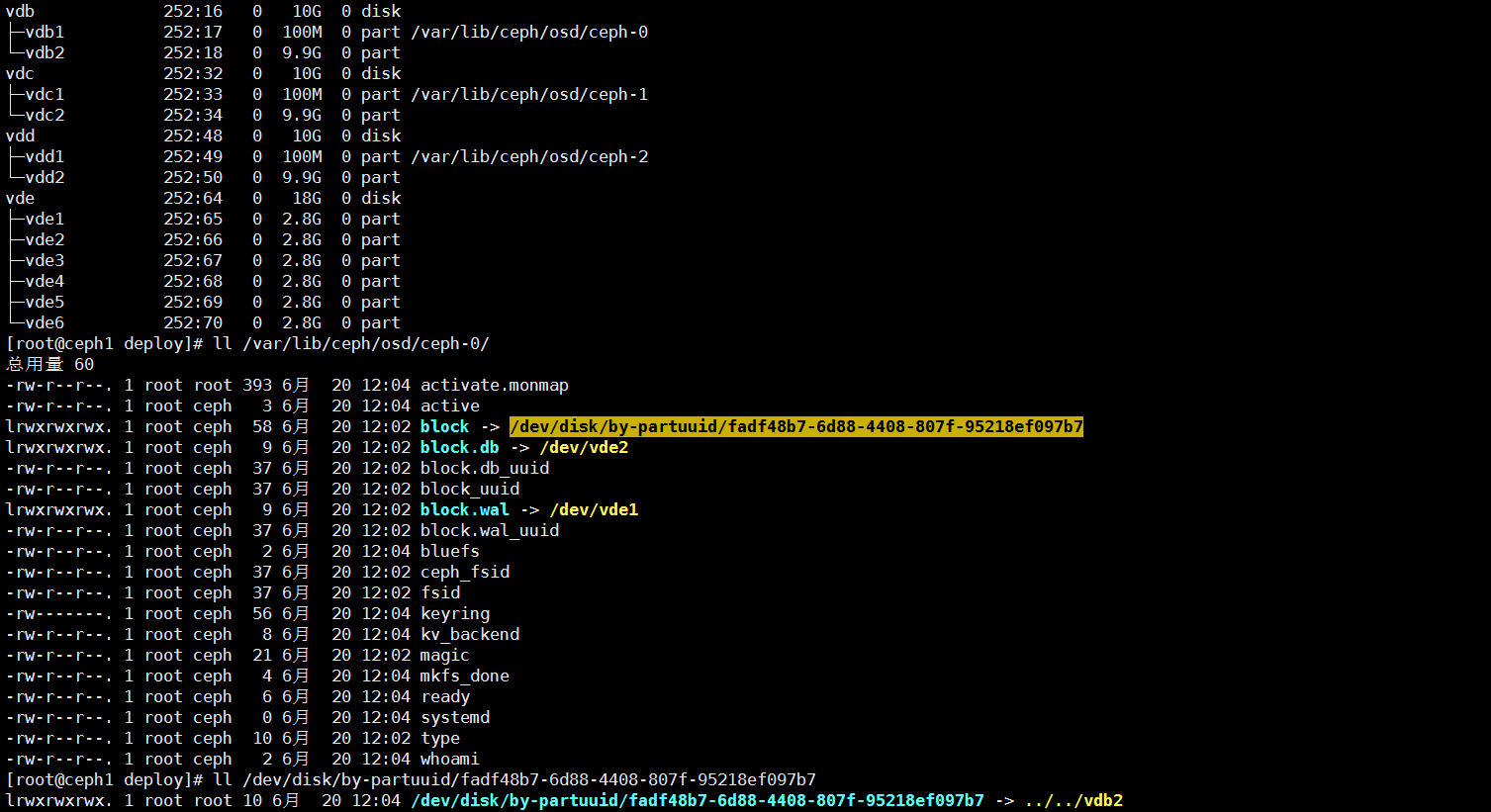

Here, using `create` instead of `prepare` gives the same result:

`ceph-deploy osd prepare --bluestore --block-wal /dev/vde1 --block-db /dev/vde2 ceph1:/dev/vdb`

`ceph-deploy osd activate ceph1:/dev/vdb1`
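To repeat the same prepare/activate pair on all three nodes, a loop like the one below can be used. It assumes every node has the same disk layout as ceph1 (/dev/vdb for data, /dev/vde1 for the WAL, /dev/vde2 for the DB) and keeps the ceph-deploy 1.5.x `HOST:DISK` syntax shown above; `deploy_osds`, `run` and `DRY_RUN` are hypothetical names. With `DRY_RUN=1` it only prints the commands, which makes them easy to review first.

```shell
#!/bin/sh
# Sketch: run the bluestore prepare/activate pair above on several nodes.
# Assumption: every node has the same layout (/dev/vdb data, /dev/vde1 WAL, /dev/vde2 DB).
set -eu

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

deploy_osds() {
    local host
    for host in "$@"; do
        run ceph-deploy osd prepare --bluestore --block-wal /dev/vde1 --block-db /dev/vde2 "$host:/dev/vdb"
        run ceph-deploy osd activate "$host:/dev/vdb1"
    done
}
```

Usage: `DRY_RUN=1 deploy_osds ceph1 ceph2 ceph3` to preview, then the same call without `DRY_RUN` to execute.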

After deployment, the relationship between block.wal, block.db and block (using osd.0 as an example):

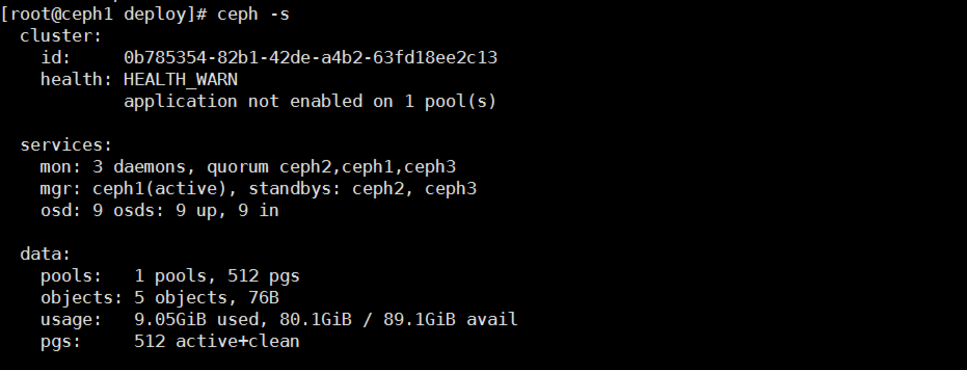

After a successful deployment, the cluster reports `HEALTH_WARN application not enabled on 1 pool(s)`. See: https://ceph.io/community/new-luminous-pool-tags/

Pool tags mark which application uses each storage pool: run `ceph osd pool application enable <pool-name> <app-name>`, where `<app-name>` is `cephfs`, `rbd`, `rgw`, or a freeform name for custom applications. Fix used here: `ceph osd pool application enable dpool rbd`

The tags serve two purposes:

a. They simplify pool management by letting higher-level management tools easily identify each pool's use case. For example, the new Ceph dashboard (covered in an upcoming blog post) currently uses a set of heuristics to guess whether a pool is used for RBD workloads; pool tags avoid this cumbersome and error-prone process.

b. They prevent applications from using pools that are not tagged for them. For example, the rbd CLI can warn about, or prevent, image creation in a pool tagged for RGW.
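When several pools need tagging, the `ceph osd pool application enable` command above can be wrapped in a small loop. In this sketch `tag_pools`, `run` and `DRY_RUN` are hypothetical names; it prints a note to stderr for freeform application names and otherwise issues one command per pool.

```shell
#!/bin/sh
# Sketch: tag pools with an application; DRY_RUN=1 prints the commands instead.
# tag_pools, run and DRY_RUN are hypothetical names.
set -eu

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: tag_pools APP POOL...
tag_pools() {
    local app="$1" pool
    shift
    case "$app" in
        cephfs|rbd|rgw) ;;
        # Anything else is treated as a freeform (custom) application name.
        *) printf "note: '%s' is a freeform application name\n" "$app" >&2 ;;
    esac
    for pool in "$@"; do
        run ceph osd pool application enable "$pool" "$app"
    done
}
```

For the cluster above, `tag_pools rbd dpool` issues the same command as the fix shown earlier.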

6. End

The deployment is complete; the final result is as follows:

Deployment script:

deploy_luminous.sh

References:

https://www.cnblogs.com/powerrailgun/p/12133107.html

https://www.cnblogs.com/linuxk/p/9419423.html

https://www.cnblogs.com/hukey/p/11975109.html