Version information

Ambari: 2.7.4

HDP: 3.1.4

HUE: 4.10.0

Download installation package

https://cdn.gethue.com/downloads/hue-4.7.0.tgz

Install dependencies

yum install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-plain gcc gcc-c++ krb5-devel libffi-devel libxml2-devel libxslt-devel make mysql mysql-devel openldap-devel python-devel sqlite-devel gmp-devel

yum install -y nodejs

npm install --global npm

Compile

cd ./hue

PREFIX=/usr/share make install
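If a dependency from the previous step is missing, `make install` usually stops part-way through the build. A minimal pre-flight sketch checks that the build tools are on PATH before compiling. The tool list (gcc, g++, make, mysql_config from mysql-devel, node and npm from nodejs) is an assumption based on the packages installed above, and `check_build_tools` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helper: report every command that is not on PATH.
# Returns 0 only if all of the given commands were found.
check_build_tools() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Tools assumed to be needed by the Hue build (see the yum/npm step above).
if check_build_tools gcc g++ make mysql_config node npm; then
  echo "all build tools found"
else
  echo "install the missing packages before running make" >&2
fi
```

Checking up front only catches missing binaries; missing headers (for example from krb5-devel) still show up as compiler errors during `make`.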

Integrate HUE into Ambari management

- Run the following on the Ambari server host:

VERSION=$(hdp-select status hadoop-client | sed 's/hadoop-client - \([0-9]\.[0-9]\).*/\1/')

rm -rf /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/HUE

git clone https://github.com/EsharEditor/ambari-hue-service.git /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/HUE

Note: VERSION holds the HDP stack version (for example, 3.1), which selects the matching stack directory.
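To see what the sed expression extracts, the sketch below runs it on a sample line. The sample assumes `hdp-select status hadoop-client` prints `hadoop-client - <build>`, which is the format the expression expects; the build number 3.1.4.0-315 is the one used later in this guide.

```shell
#!/usr/bin/env bash
# Sample output of `hdp-select status hadoop-client` (assumed format).
sample='hadoop-client - 3.1.4.0-315'

# Same expression as above: keep only the leading "major.minor" of the build.
VERSION=$(echo "$sample" | sed 's/hadoop-client - \([0-9]\.[0-9]\).*/\1/')
echo "$VERSION"

# The stack directory the HUE service definition is cloned into.
echo "/var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/HUE"
```

The pattern matches exactly one digit on each side of the dot, which holds for HDP 2.6 and 3.1. If the version did not match, sed would print the line unchanged and VERSION would contain the whole string, so it is worth echoing VERSION before running `rm -rf`.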

The /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services directory holds the service definitions managed by Ambari (HIVE, HDFS, YARN, and so on, each in its own folder). Alternatively, unzip ambari-hue-service-branch-2.0.0.zip directly into /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/HUE. The archive extracts into an ambari-hue-service-branch-2.0.0 subdirectory, so move all of its files up into HUE and delete the empty subdirectory.

- Modify configuration

- Modify version information

cd /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/HUE

sed -i 's/4.6.0/4.10.0/g' metainfo.xml README.md package/scripts/params.py package/scripts/setup_hue.py
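In the sed command above each "." in 4.6.0 means "any character". A slightly stricter sketch escapes the dots and then checks that no old version string is left behind. `bump_hue_version` is a hypothetical helper, and the demo edits a temporary file rather than the real metainfo.xml.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace a version string in place in the given files and
# fail if the old string is still present afterwards.
bump_hue_version() {
  local old="$1" new="$2" old_re
  shift 2
  # Escape the dots so sed matches them literally.
  old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
  sed -i "s/${old_re}/${new}/g" "$@" || return 1
  # grep -F searches for the fixed string; any hit means something was missed.
  if grep -nF -- "$old" "$@"; then
    echo "still contains $old" >&2
    return 1
  fi
}

# Self-contained demo on a temporary file.
demo=$(mktemp)
trap 'rm -f "$demo"' EXIT
printf '<version>4.6.0</version>\n' > "$demo"
bump_hue_version 4.6.0 4.10.0 "$demo"
cat "$demo"
```

In the service directory the call would be `bump_hue_version 4.6.0 4.10.0 metainfo.xml README.md package/scripts/params.py package/scripts/setup_hue.py`. `sed -i` without a suffix is GNU sed syntax, which is what CentOS ships.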

- In /var/lib/ambari-server/resources/stacks/HDP/2.6/services/hue/ambari-hue-service-branch-2.0.0/package/scripts/params.py, change the download_url setting on line 33 to the address of your actual HUE yum repository.

For example: download_url = 'http://localhosts/HDP/centos7/2.6.5.0-292/hue/hue-4.7.0.tgz'. Also change the value of the hue_install_dir setting on line 99 to /usr/hdp/2.6.5.0-292.

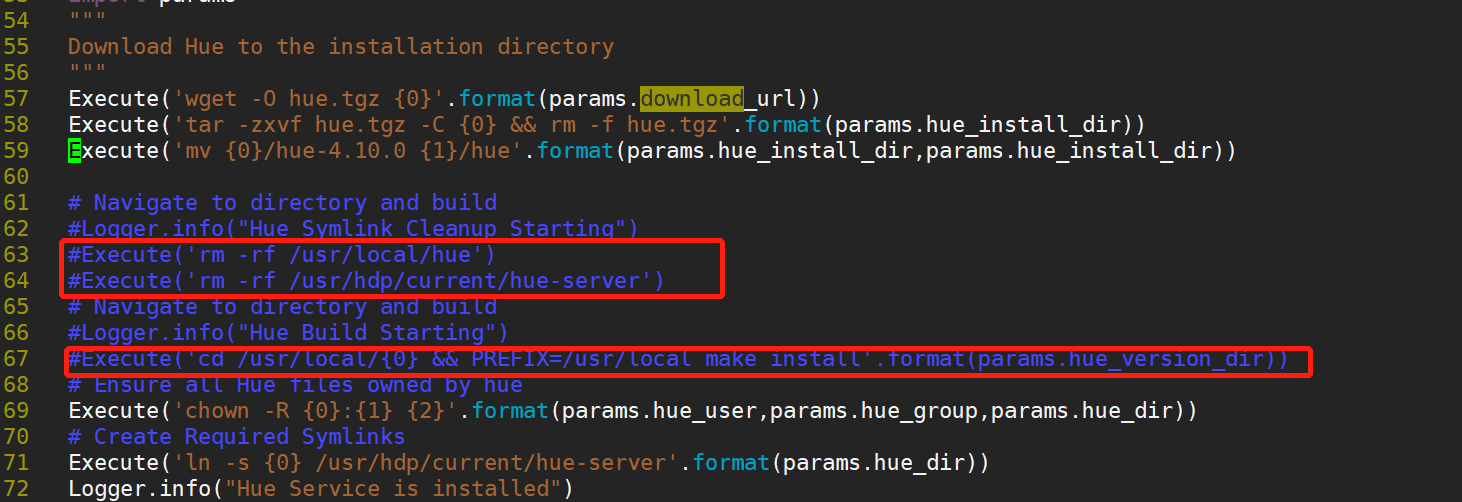

- In package/scripts/common.py, change the code on line 57:

Change Execute('{0} | xargs wget -O hue.tgz '.format(params.download_url)) to Execute('wget -O hue.tgz {0}'.format(params.download_url)), so that wget receives the URL directly as its argument.

- Comment out the HttpFS configuration on lines 87-89.

- In package/files/configs.sh, set the PASSWD item on line 39 to the password you actually use to log in to Ambari Web.

- Restart the Ambari server: ambari-server restart

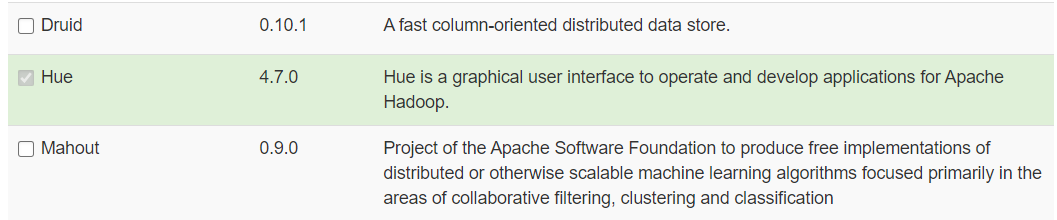
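The line-number edits in params.py and configs.sh can also be scripted, which helps when repeating the setup on another Ambari server (run it before restarting ambari-server). This sketch assumes each setting is a single top-level assignment at the start of a line, such as `download_url = '...'`. `set_assignment` is a hypothetical helper, and the demo file contents are made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the line that starts with "<name>" followed by
# optional spaces and "=" with a complete new line of text.
set_assignment() {
  local file="$1" name="$2" line="$3" esc
  # Refuse to run if the assignment is missing, so a typo cannot be a silent no-op.
  if ! grep -q "^${name} *=" "$file"; then
    echo "$name not found in $file" >&2
    return 1
  fi
  # Escape &, | and \ so sed inserts them literally ("|" is the delimiter below,
  # chosen because URLs and paths contain slashes).
  esc=$(printf '%s' "$line" | sed 's/[&|\\]/\\&/g')
  sed -i "s|^${name} *=.*|${esc}|" "$file"
}

# Demo on a temporary file shaped like the two params.py lines (assumed format).
demo=$(mktemp)
trap 'rm -f "$demo"' EXIT
printf '%s\n' "download_url = 'http://example.invalid/hue.tgz'" \
  "hue_install_dir = '/usr/local'" > "$demo"
set_assignment "$demo" download_url \
  "download_url = 'http://localhosts/HDP/centos7/2.6.5.0-292/hue/hue-4.7.0.tgz'"
set_assignment "$demo" hue_install_dir "hue_install_dir = '/usr/hdp/2.6.5.0-292'"
cat "$demo"
```

Against the real files, call it on package/scripts/params.py. For the password, assuming configs.sh uses a `PASSWD=...` line, the call would be `set_assignment package/files/configs.sh PASSWD 'PASSWD="your-ambari-password"'` (placeholder password). Matching by name rather than by line number still works if the line numbers shift between repository versions.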

Install HUE through Ambari Web

HUE can now be installed from the Ambari web UI like any other service.

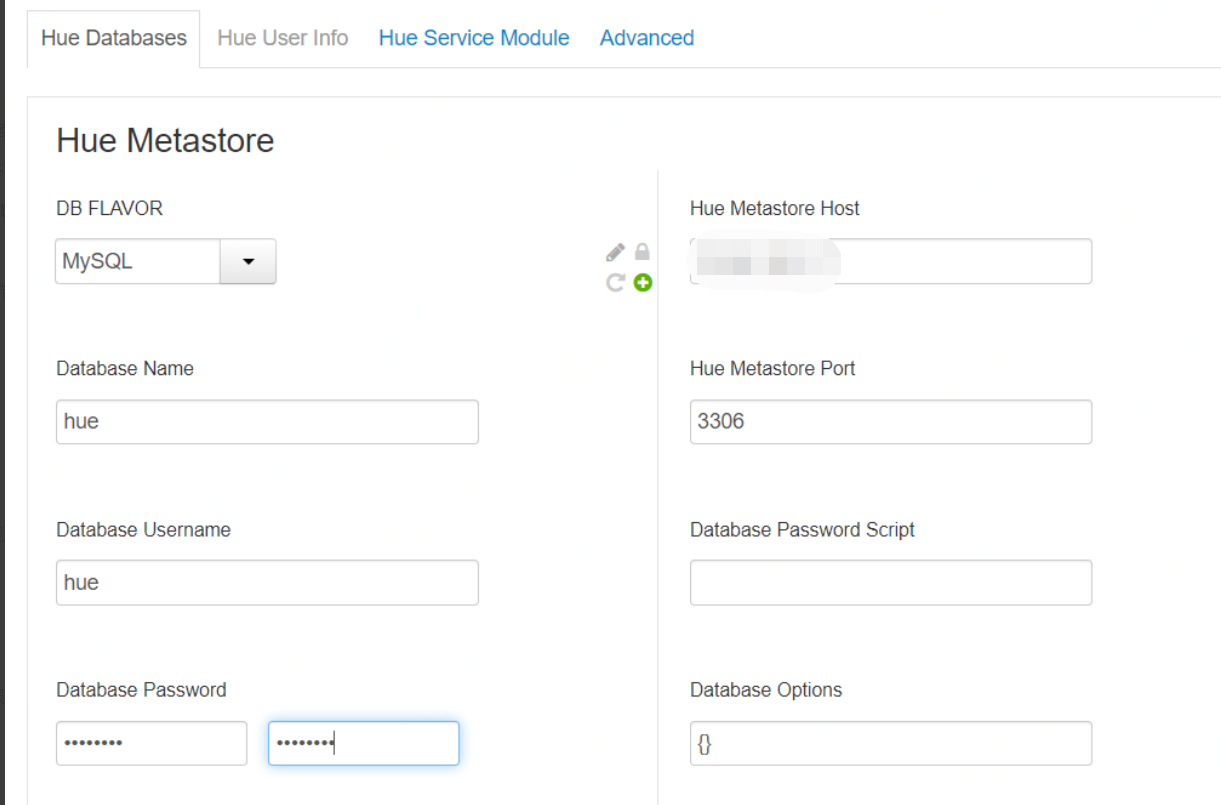

During installation, change the HUE metadata store to MySQL: log in to MySQL, create the database and user for HUE, and grant the user privileges on that database.
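The MySQL steps can be sketched as the SQL below. The database name, user and password follow the `name=hue`, `user=hue` and `password=xxx` values in the hue.ini example that follows. The helper name `hue_db_sql`, the utf8 character set and the `'%'` host (connections from any host) are assumptions to adjust. The statements use MySQL 5.7 syntax (`CREATE USER IF NOT EXISTS` needs 5.7.6 or later).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SQL that creates the Hue metadata database,
# its user, and the grant. The password must not contain a single quote,
# because no escaping is done.
hue_db_sql() {
  local db="$1" user="$2" password="$3" host="${4:-%}"
  cat <<SQL
CREATE DATABASE IF NOT EXISTS \`${db}\` DEFAULT CHARACTER SET utf8;
CREATE USER IF NOT EXISTS '${user}'@'${host}' IDENTIFIED BY '${password}';
GRANT ALL PRIVILEGES ON \`${db}\`.* TO '${user}'@'${host}';
FLUSH PRIVILEGES;
SQL
}

hue_db_sql hue hue 'xxx'
```

To apply it, pipe the output into the MySQL client as an administrative user, for example `hue_db_sql hue hue 'your-password' | mysql -uroot -p`. Printing the SQL first lets you review it before it touches the server.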

Modify HUE configuration

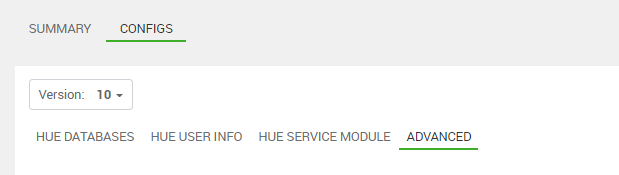

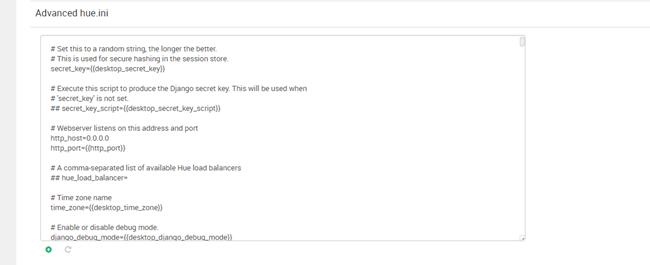

Make the following changes to hue.ini on the ADVANCED tab of the HUE service page in Ambari. Do not edit the configuration file directly: because Ambari manages HUE, HUE's configuration is stored in Ambari's metadata rather than in HUE's own configuration file, so editing that file from the command line has no effect.

[desktop]

time_zone=Asia/Shanghai

[[database]]

engine=mysql

host=0.0.0.0

port=3306

user=hue

password=xxx

name=hue

[hadoop]

[[hdfs_clusters]]

[[[default]]]

webhdfs_url=http://0.0.0.0:14000/webhdfs/v1

[beeswax]

hive_server_host=0.0.0.0

hive_server_port=10000

use_sasl=true

After changing the configuration, initialize the HUE database tables:

cd /usr/hdp/2.6.5.0-292/hue/build/env/bin/

./hue syncdb

./hue migrate

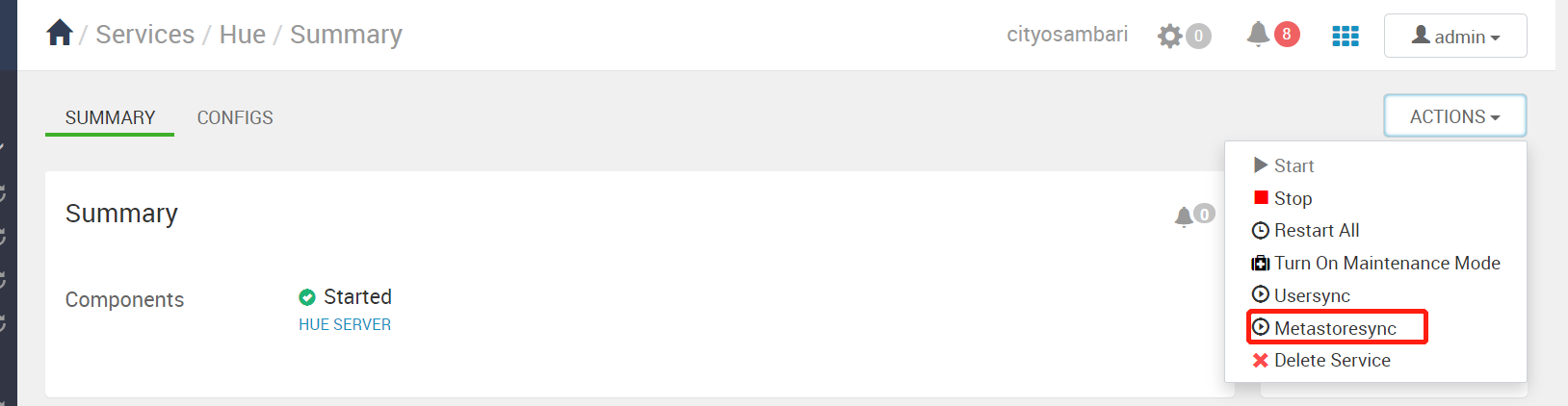

Or click on the hue page:

Replace the compiled hue installation package

When the installation finishes, do not click Start yet: the HUE that Ambari installs has not been compiled and cannot run.

Instead, copy the HUE directory you compiled on the internet-connected virtual machine over the hue directory in the installation directory, and recreate the build directory as a symbolic link. For example, if HUE was compiled under /usr/share on the virtual machine and the installation directory defined in Ambari is /usr/hdp/3.1.4.0-315/hue, copy the compiled HUE to /usr/hdp/3.1.4.0-315/hue and run ln -s /usr/hdp/3.1.4.0-315 /usr/hdp/share.

Install Hadoop HttpFS

Because our HDFS runs in HA mode, we need the Hadoop HttpFS service: a WebHDFS URL points at a single NameNode and cannot pick up the HA settings configured in hdfs-site.xml, whereas HttpFS uses the HDFS client configuration. Install hadoop-httpfs manually with yum or up2date, modify the configuration file, and start HttpFS:

yum install -y hadoop-httpfs

echo 'source /etc/profile' >> /etc/hadoop-httpfs/conf/httpfs-env.sh

systemctl start hadoop-httpfs

hdp-select set hadoop-httpfs 3.1.x.x-xxx
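Once HttpFS is running, you can check that it answers on the URL configured as webhdfs_url in hue.ini. This sketch assumes port 14000 (as in the hue.ini example), simple (non-Kerberos) authentication through the `user.name` query parameter, and the standard WebHDFS LISTSTATUS operation. `httpfs_url` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a WebHDFS-over-HttpFS URL for a path and operation,
# authenticating with the simple "user.name" parameter (no Kerberos).
httpfs_url() {
  local host="$1" port="$2" path="$3" op="$4" user="$5"
  printf 'http://%s:%s/webhdfs/v1%s?op=%s&user.name=%s\n' \
    "$host" "$port" "$path" "$op" "$user"
}

url=$(httpfs_url localhost 14000 /tmp LISTSTATUS hue)
echo "$url"
```

Then run `curl -s "$url"` (quoted, because of the `&`). A working HttpFS returns a JSON `FileStatuses` object; "connection refused" means nothing is listening on the port, which is one of the troubleshooting cases below. Appending `&doas=<user>` exercises the proxy-user settings for hue.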

Modify /usr/hdp/current/hadoop-httpfs/sbin/httpfs.sh:

#!/bin/bash

# Autodetect JAVA_HOME if not defined

if [ -e /usr/libexec/bigtop-detect-javahome ]; then

. /usr/libexec/bigtop-detect-javahome

elif [ -e /usr/lib/bigtop-utils/bigtop-detect-javahome ]; then

. /usr/lib/bigtop-utils/bigtop-detect-javahome

fi

### Added to assist with locating the right configuration directory

export HTTPFS_CONFIG=/etc/hadoop-httpfs/conf

### Remove the original HARD CODED Version reference

export HADOOP_HOME=${HADOOP_HOME:-/usr/hdp/current/hadoop-client}

export HADOOP_LIBEXEC_DIR=${HADOOP_HOME}/libexec

Start HUE

Q&A

Installing HUE through Ambari fails with the error: 'ascii' codec can't encode character u'\u2018'

Fix: find the source file that raises the error, add the following three lines at the top (Python 2 only), and reinstall.

import sys

reload(sys)

sys.setdefaultencoding('utf-8')

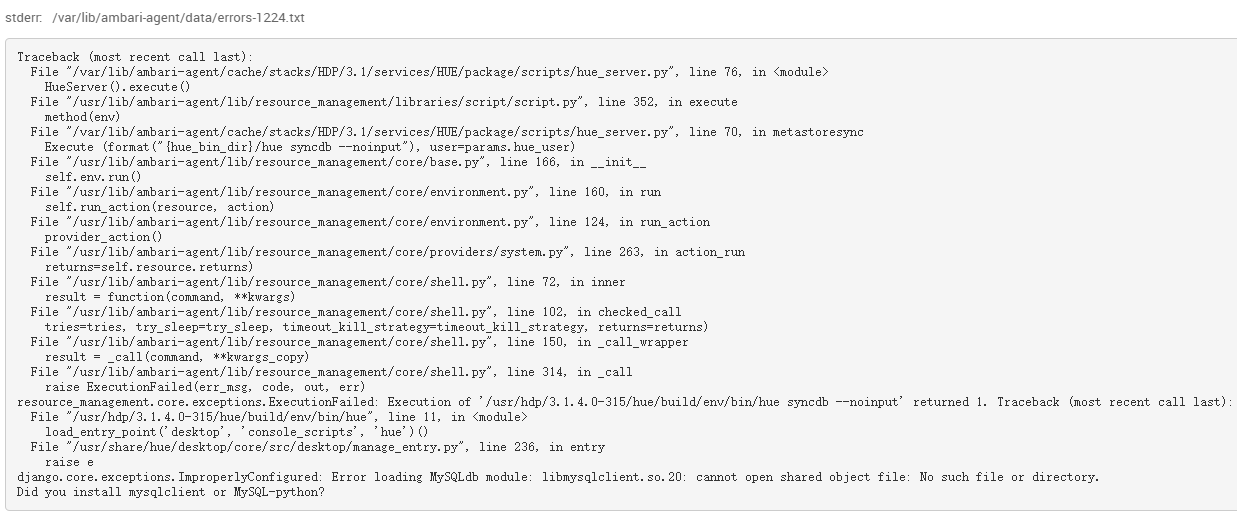
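U+2018 is the left single quotation mark (‘), so the offending text contains a curly quote. To find which files contain such characters, a byte-level grep works. `find_non_ascii` is a hypothetical helper, and the demo uses temporary files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list lines containing any byte outside printable ASCII
# and whitespace. LC_ALL=C makes grep compare raw bytes, so each UTF-8 byte of
# a character such as U+2018 falls outside [:print:].
find_non_ascii() {
  LC_ALL=C grep -rn '[^[:print:][:space:]]' "$@"
}

# Demo: params.py contains U+2018/U+2019 (bytes E2 80 98 / E2 80 99).
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
printf 'msg = "\xe2\x80\x98hue\xe2\x80\x99"\n' > "$demo_dir/params.py"
printf 'ok = "plain ascii"\n' > "$demo_dir/common.py"
find_non_ascii "$demo_dir"
```

Run it on the directory named in the traceback, for example `find_non_ascii /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/HUE/package/scripts`. Replacing the curly quotes with plain ASCII quotes is an alternative to changing the default encoding.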

During installation, this error is reported: django.core.exceptions.ImproperlyConfigured: Error loading MySQLdb module: libmysqlclient.so.20: cannot open shared object file: No such file or directory. Did you install mysqlclient or MySQL-python?

Fix: link the MySQL client library into /usr/lib64: ln -s /export/server/mysql-5.7.22/lib/libmysqlclient.so.20 /usr/lib64/

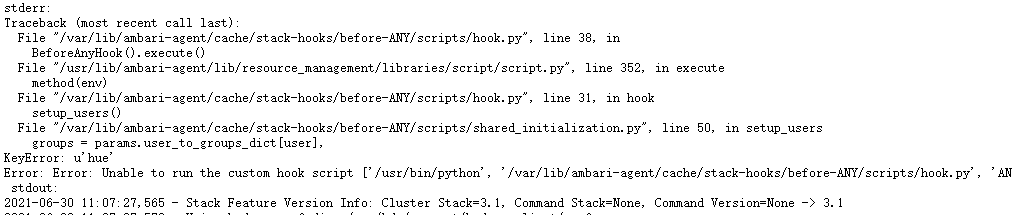
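If MySQL is installed somewhere other than /export/server/mysql-5.7.22, the library can be located first and then linked. `link_mysqlclient` is a hypothetical helper, and the demo uses temporary directories in place of the MySQL install and /usr/lib64.

```shell
#!/usr/bin/env bash
# Hypothetical helper: find libmysqlclient.so.20 under a search root and symlink
# it into a library directory, failing loudly if it is not found.
link_mysqlclient() {
  local search_root="$1" target_dir="$2" lib
  lib=$(find "$search_root" -name 'libmysqlclient.so.20' -print -quit 2>/dev/null)
  if [ -z "$lib" ]; then
    echo "libmysqlclient.so.20 not found under $search_root" >&2
    return 1
  fi
  ln -sf "$lib" "$target_dir/libmysqlclient.so.20"
}

# Demo with temporary directories standing in for the MySQL install and /usr/lib64.
src=$(mktemp -d)
dst=$(mktemp -d)
trap 'rm -rf "$src" "$dst"' EXIT
mkdir -p "$src/mysql-5.7.22/lib"
touch "$src/mysql-5.7.22/lib/libmysqlclient.so.20"
link_mysqlclient "$src" "$dst"
ls -l "$dst"
```

On the real host the call would be `link_mysqlclient /export/server /usr/lib64`. Running `ldconfig` afterwards refreshes the dynamic linker cache.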

Installation fails with KeyError: u'hue'

Fix: edit /var/lib/ambari-server/resources/stacks/HDP/3.1/services/hue/configuration/hue-env.xml with vi and add the parts shown in red in the screenshot below, so that the hue_user property reads:

<property>

<name>hue_user</name>

<value>hue</value>

<display-name>Hue User</display-name>

<property-type>USER</property-type>

<description>hue user</description>

<value-attributes>

<type>user</type>

<overridable>false</overridable>

<user-groups>

<property>

<type>cluster-env</type>

<name>user_group</name>

</property>

<property>

<type>hue-env</type>

<name>hue_group</name>

</property>

</user-groups>

</value-attributes>

<on-ambari-upgrade add="true"/>

</property>

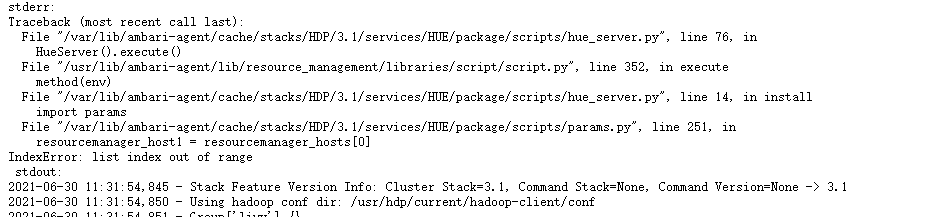

Error: resourceManager not found (an array index out of range)

Fix:

Replace https://github.com/EsharEditor/ambari-hue-service with https://github.com/steven-matison/HDP3-Hue-Service as the HUE service definition.

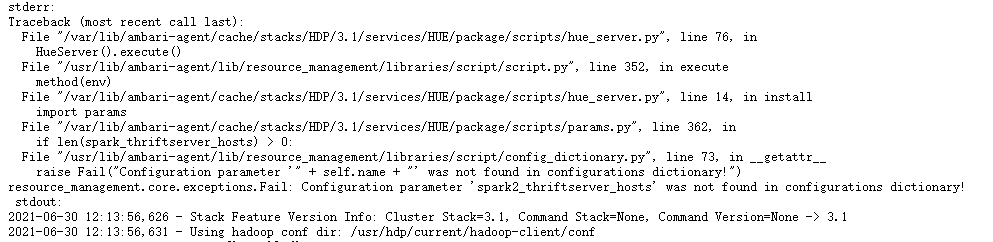

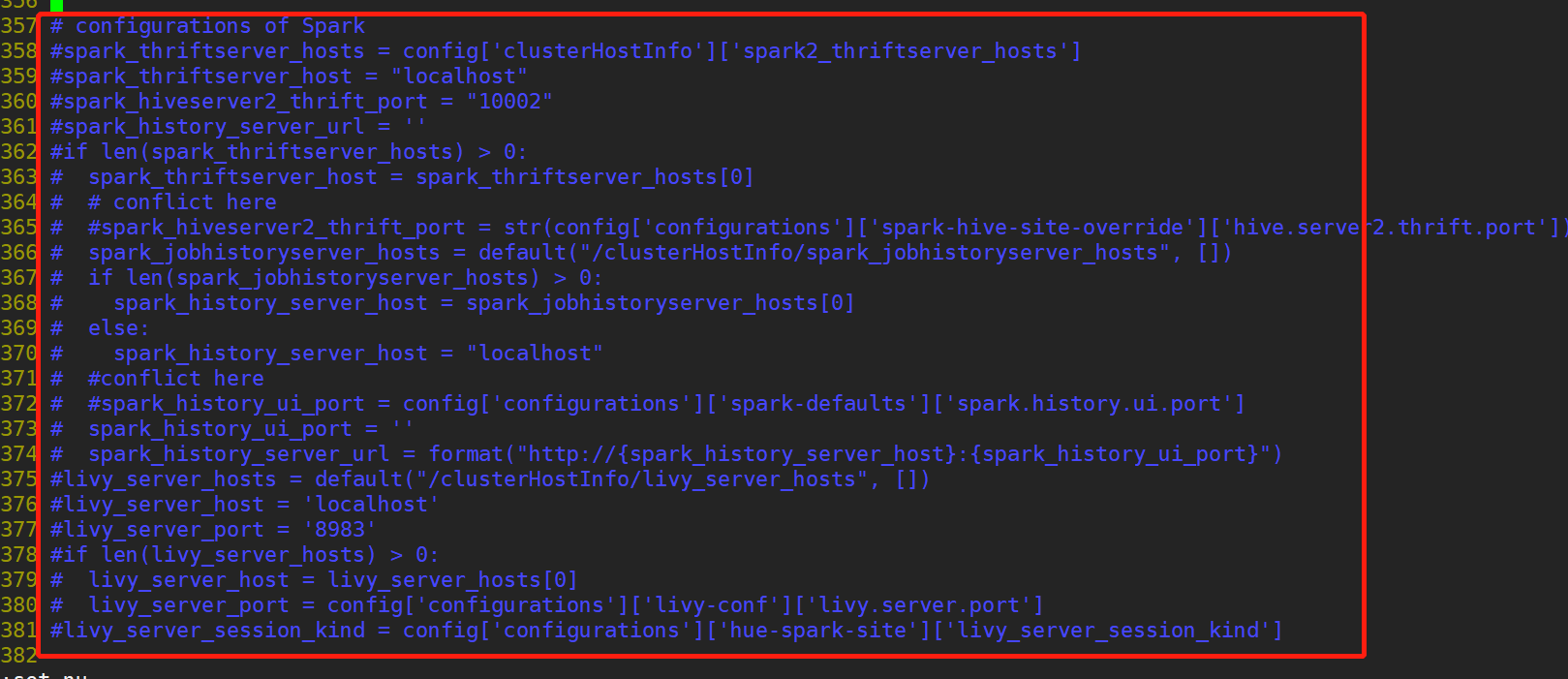

Error: Spark Thrift Server not found

Fix: comment out the relevant parts of package/scripts/common.py and the Spark-related parts of package/scripts/params.py.

common.py
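Before commenting anything out, it helps to list every Spark reference with its line number. `list_spark_refs` is a hypothetical helper, and the demo files are made-up stand-ins for common.py and params.py.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every line mentioning Spark (case-insensitive),
# prefixed with its file name and line number, as a checklist of what to comment out.
list_spark_refs() {
  grep -H -n -i 'spark' "$@"
}

# Demo on temporary stand-ins for common.py and params.py (made-up contents).
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
printf '%s\n' "import os" "spark_thrift_host = 'placeholder'" > "$demo_dir/params.py"
printf '%s\n' "def setup():" "    pass" > "$demo_dir/common.py"
list_spark_refs "$demo_dir/common.py" "$demo_dir/params.py"
```

In the service directory the call would be `list_spark_refs package/scripts/common.py package/scripts/params.py`. Comment out whole statements rather than single lines of a multi-line block, and keep the Python indentation valid: an `if` body that ends up empty needs a `pass`.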

After HUE starts, it reports an error about attempting to write a readonly database.

Fix:

Change the ownership of the HUE installation directory: chown -R hue:hue /usr/hdp/3.1.4.0-315/hue

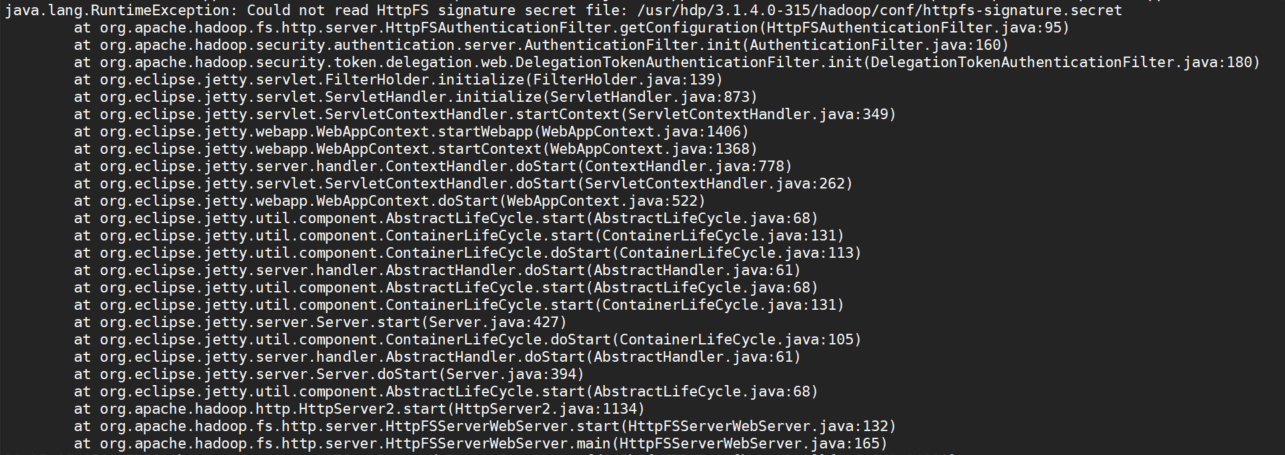
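To confirm the chown covered everything, you can list any path that is still owned by someone else. `not_owned_by` is a hypothetical helper. The demo runs against a temporary directory owned by the current user, and desktop.db (the name of Hue's default SQLite database) is only used as an example file name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every path under a directory that is not owned by
# the given user; empty output means the ownership change covered everything.
not_owned_by() {
  local user="$1" dir="$2"
  find "$dir" ! -user "$user" -print
}

# Demo on a temporary directory owned by the current user.
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
touch "$demo_dir/desktop.db"
not_owned_by "$(id -un)" "$demo_dir"
```

On the HUE host the call would be `not_owned_by hue /usr/hdp/3.1.4.0-315/hue`.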

After HttpFS is installed, the service starts normally but its port is not listening, and /var/log/hadoop/hdfs/hadoop-root-httpfs-$HOST.out shows an error.

Fix: use touch to create the file the error reports as missing, in the directory it names; an empty file is enough.

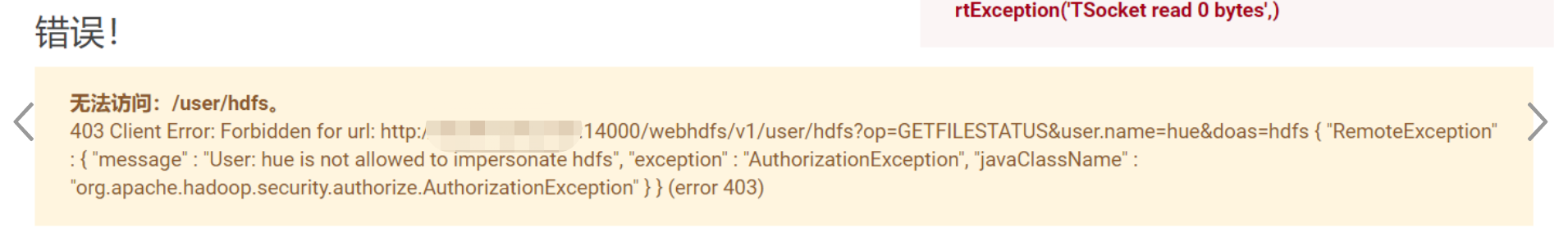

HUE reports that it cannot impersonate the user

Fix: create httpfs-site.xml in the /etc/hadoop/conf directory with the following content, then restart HttpFS:

<!-- Hue HttpFS proxy user setting -->

<configuration>

<property>

<name>httpfs.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>httpfs.proxyuser.hue.groups</name>

<value>*</value>

</property>

</configuration>

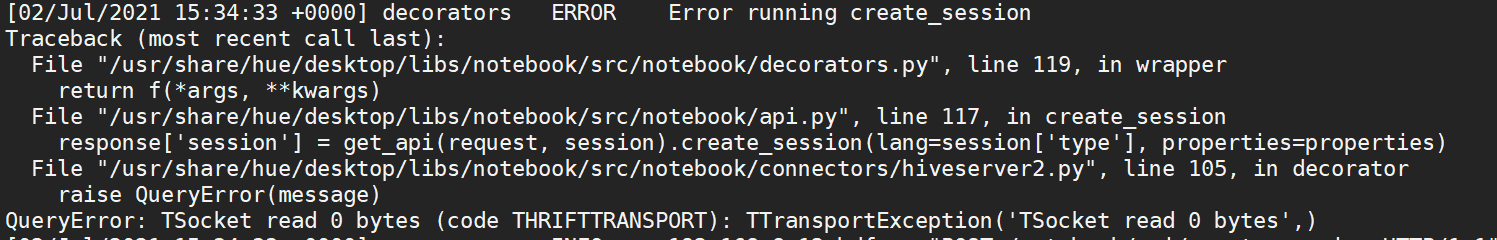

HUE cannot connect to Hive and reports TSocket read 0 bytes

Fix: set use_sasl=true in hue.ini (even if Kerberos is not enabled on the cluster).