Here is what I learned about VoiceEngine over the past two days, shared for your reference. If you spot any mistakes, please point them out so we can learn and improve together.

This article analyzes the basic structure of VoiceEngine. The analysis is bottom-up: we follow how an audio encoder is wrapped, layer by layer, until it reaches VoiceEngine.

First, let's look at VoiceEngine's core API, which is mostly declared in the header files under webrtc\voice_engine\include:

- voe_base
  - Full-duplex VoIP applications with G.711 encoding and RTP transmission; supporting other encoders requires VoECodec
  - Initializing and destroying VoiceEngine instances
  - Recording trace information via text or a callback function
  - Multiple channels (mixing, or sending to multiple destination addresses)
- voe_codec
  - Support for other encoders
  - Voice activity detection
  - Specifying how received payload types map to codecs
- voe_dtmf
  - Telephone-event transmission
  - DTMF tone generation
- voe_errors
  - Error messages
- voe_external_media
  - Registering additional audio processing
- voe_file
  - Playback, recording and conversion of files
- voe_hardware
  - Audio device operation
  - Device information
  - CPU load monitoring
- voe_neteq_stats
  - Network and audio-decoding statistics
- voe_network
  - Support for additional protocols
  - Packet timeout notification
  - Dead-or-alive connection observation
- voe_rtp_rtcp
  - Callbacks for RTP and RTCP events such as modified SSRC or CSRC
  - SSRC handling
  - Transmission of RTCP sender reports
  - Obtaining RTCP data from incoming RTCP sender reports
  - RTP and RTCP statistics (jitter, packet loss, RTT, etc.)
  - Redundant coding (RED)
  - Writing RTP and RTCP packets to binary files for offline analysis of call quality
- voe_video_sync
  - RTP header modification (timestamp and sequence number fields)
  - Playout delay tuning to synchronize voice with video
  - Playout delay monitoring
- voe_volume_control
  - Speaker and microphone volume control
  - Mute
- voe_audio_processing
  - Noise suppression (NS)
  - Automatic gain control (AGC)
  - Echo cancellation (EC)
  - VAD, NS and AGC on the receiving side
  - Measurement of speech, noise and echo levels
  - Generation and recording of audio processing debug information
  - Keyboard (typing) detection

The audio codecs live in separate projects under webrtc\modules: g711, g722, ilbc, isac, red (redundant audio coding), pcm16b, CNG (comfort noise generation), plus opus under the third_party directory. Taking the G.722 codec as an example, webrtc\modules\audio_coding\codecs\G722\g722_enc_dec.h defines the two key structures used during encoding and decoding, G722EncoderState and G722DecoderState:

typedef struct

{

/*! TRUE if the operating in the special ITU test mode, with the band split filters

disabled. */

int itu_test_mode;

/*! TRUE if the G.722 data is packed */

int packed;

/*! TRUE if encode from 8k samples/second */

int eight_k;

/*! 6 for 48000kbps, 7 for 56000kbps, or 8 for 64000kbps. */

int bits_per_sample;

/*! Signal history for the QMF */

int x[24];

struct

{

int s;

int sp;

int sz;

int r[3];

int a[3];

int ap[3];

int p[3];

int d[7];

int b[7];

int bp[7];

int sg[7];

int nb;

int det;

} band[2];

unsigned int in_buffer;

int in_bits;

unsigned int out_buffer;

int out_bits;

} G722EncoderState;

typedef struct

{

/*! TRUE if the operating in the special ITU test mode, with the band split filters

disabled. */

int itu_test_mode;

/*! TRUE if the G.722 data is packed */

int packed;

/*! TRUE if decode to 8k samples/second */

int eight_k;

/*! 6 for 48000kbps, 7 for 56000kbps, or 8 for 64000kbps. */

int bits_per_sample;

/*! Signal history for the QMF */

int x[24];

struct

{

int s;

int sp;

int sz;

int r[3];

int a[3];

int ap[3];

int p[3];

int d[7];

int b[7];

int bp[7];

int sg[7];

int nb;

int det;

} band[2];

unsigned int in_buffer;

int in_bits;

unsigned int out_buffer;

int out_bits;

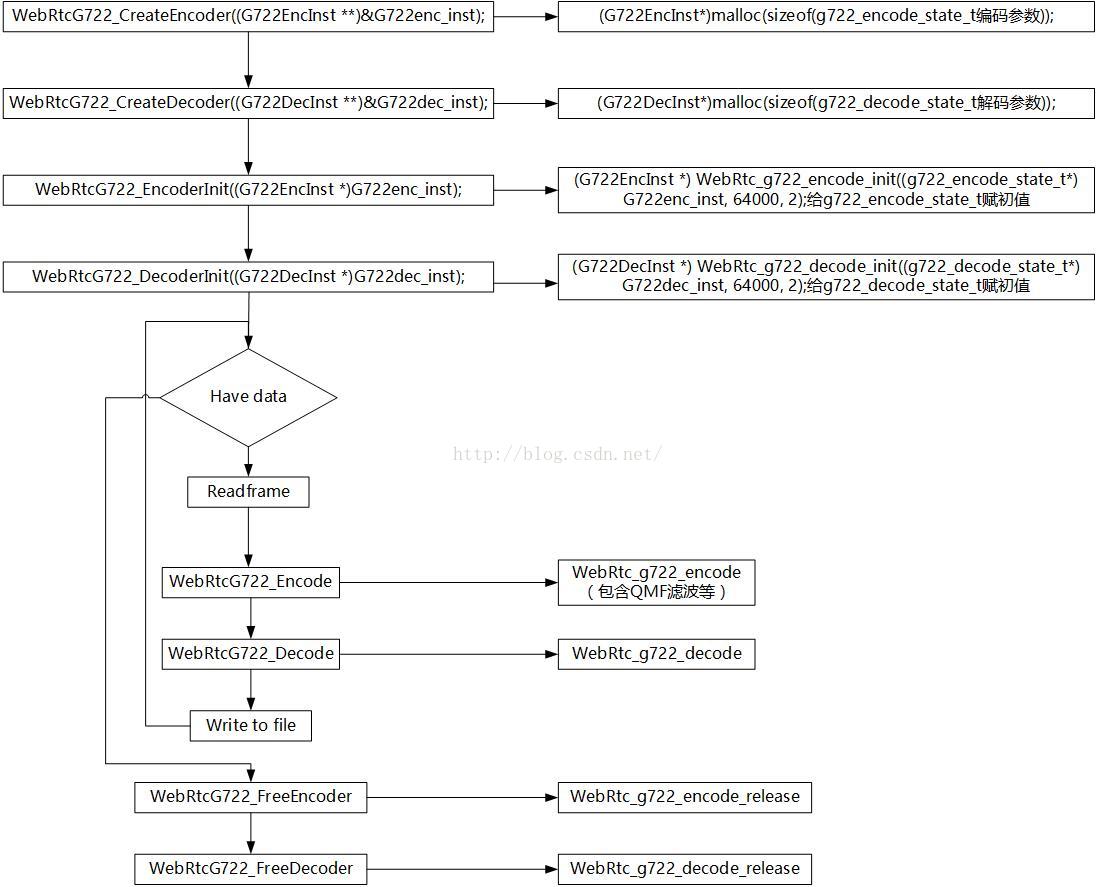

} G722DecoderState;The corresponding G722 encoding and decoding flow chart is as follows

The WebRtcG722_xxx functions on the left of the figure are declared in g722_interface.h, and the WebRtc_g722_xxx functions on the right are declared in g722_enc_dec.h; the actual encoding and decoding are implemented in WebRtc_g722_encode and WebRtc_g722_decode. G722EncInst/G722DecInst in the figure are in fact the G722EncoderState and G722DecoderState structures shown above.

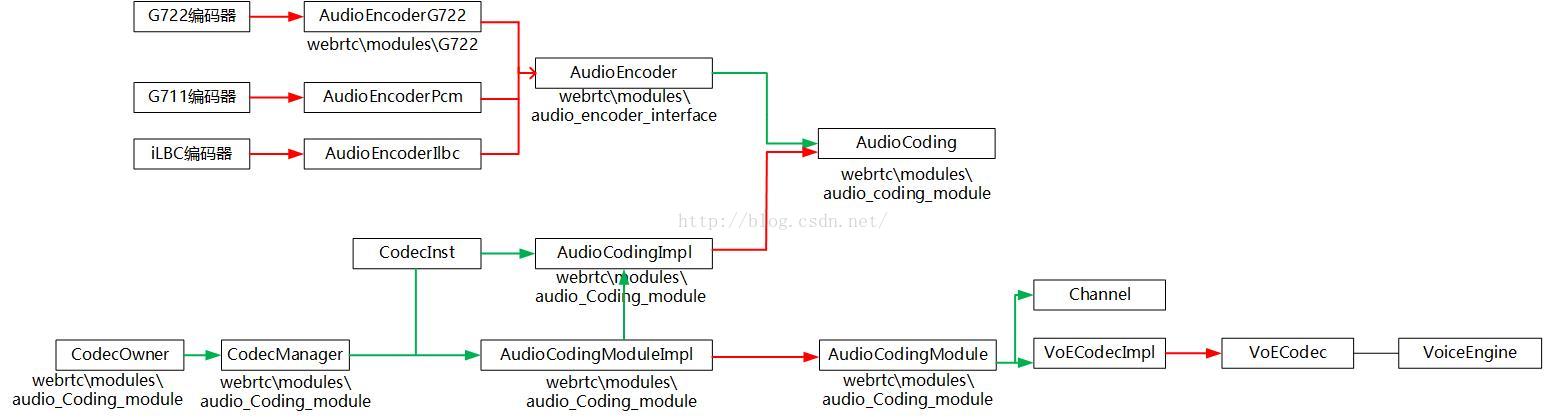
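To make the two layers in the figure concrete, here is a minimal sketch of the pattern, assuming a heavily simplified state struct and hypothetical MiniG722_* names (these are not WebRTC functions). The public layer hands out an opaque instance pointer that is really the state structure, just as G722EncInst is really G722EncoderState. The sketch also works out the bits_per_sample arithmetic: G.722 produces one codeword for every two 16 kHz input samples, i.e. 8000 codewords per second, so the bitrate is 8000 * bits_per_sample. That is why the header comment's "48000kbps" should be read as 48000 bit/s. The packed mode and the in_buffer/out_buffer bit-packing fields are not modeled.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical, simplified stand-in for G722EncoderState: only the field used here.
struct MiniG722EncoderState {
  int bits_per_sample;  // 6, 7 or 8
};

// Opaque handle type, mirroring how G722EncInst hides the state struct.
struct MiniG722EncInst;

MiniG722EncInst* MiniG722_CreateEncoder() {
  // Default to the 64 kbit/s mode (8 bits per codeword).
  return reinterpret_cast<MiniG722EncInst*>(new MiniG722EncoderState{8});
}

void MiniG722_FreeEncoder(MiniG722EncInst* inst) {
  delete reinterpret_cast<MiniG722EncoderState*>(inst);
}

// Returns 0 on success, -1 for an unsupported mode.
int MiniG722_SetMode(MiniG722EncInst* inst, int bits_per_sample) {
  if (bits_per_sample < 6 || bits_per_sample > 8) return -1;
  reinterpret_cast<MiniG722EncoderState*>(inst)->bits_per_sample = bits_per_sample;
  return 0;
}

// 8000 codewords per second, each bits_per_sample bits wide.
int MiniG722_BitrateBps(const MiniG722EncInst* inst) {
  return 8000 * reinterpret_cast<const MiniG722EncoderState*>(inst)->bits_per_sample;
}

// Encoded payload size in bytes for a frame of 16 kHz input samples.
std::size_t MiniG722_EncodedBytes(const MiniG722EncInst* inst, std::size_t num_samples_16k) {
  const std::size_t codewords = num_samples_16k / 2;  // one codeword per 2 input samples
  const int bits = reinterpret_cast<const MiniG722EncoderState*>(inst)->bits_per_sample;
  return codewords * static_cast<std::size_t>(bits) / 8;
}
```

For example, a 20 ms frame at 16 kHz (320 samples) yields 160 codewords, i.e. 160 bytes at 64 kbit/s or 120 bytes at 48 kbit/s.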

The G.722 codec above is wrapped in the AudioEncoderG722 class, which inherits from AudioEncoder. Likewise, the G.711 encoder is wrapped in AudioEncoderPcm and iLBC in AudioEncoderIlbc, both of which also inherit from AudioEncoder. AudioEncoder is defined in webrtc\modules\audio_encoder_interface and declares the basic parameters and interface of an audio encoder. AudioEncoder is in turn used by the AudioCoding class in webrtc\modules\audio_coding_module; as the name suggests, AudioCoding is a class dedicated to audio coding. Specifically, AudioEncoder appears in the following two interfaces:

```cpp
virtual bool RegisterSendCodec(AudioEncoder* send_codec) = 0;
virtual const AudioEncoder* GetSenderInfo() const = 0;
```

These two interfaces are implemented in the AudioCodingImpl class, which inherits from AudioCoding. Looking at the implementations, however, we find:

```cpp
bool AudioCodingImpl::RegisterSendCodec(AudioEncoder* send_codec) {
  FATAL() << "Not implemented yet.";
  return false;
}

const AudioEncoder* AudioCodingImpl::GetSenderInfo() const {
  FATAL() << "Not implemented yet.";
  return reinterpret_cast<const AudioEncoder*>(NULL);
}
```

Both are stubs. What is actually implemented is the following pair:

```cpp
bool AudioCodingImpl::RegisterSendCodec(int encoder_type,
                                        uint8_t payload_type,
                                        int frame_size_samples) {
  std::string codec_name;
  int sample_rate_hz;
  int channels;
  if (!MapCodecTypeToParameters(
          encoder_type, &codec_name, &sample_rate_hz, &channels)) {
    return false;
  }
  webrtc::CodecInst codec;
  AudioCodingModule::Codec(
      codec_name.c_str(), &codec, sample_rate_hz, channels);
  codec.pltype = payload_type;
  if (frame_size_samples > 0) {
    codec.pacsize = frame_size_samples;
  }
  return acm_old_->RegisterSendCodec(codec) == 0;
}

const CodecInst* AudioCodingImpl::GetSenderCodecInst() {
  if (acm_old_->SendCodec(&current_send_codec_) != 0) {
    return NULL;
  }
  return &current_send_codec_;
}
```

Both call methods of acm_old_, which is also declared in the AudioCodingImpl class, as follows:

```cpp
// TODO(henrik.lundin): All members below this line are temporary and should
// be removed after refactoring is completed.
rtc::scoped_ptr<acm2::AudioCodingModuleImpl> acm_old_;
CodecInst current_send_codec_;
```
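The integer-based RegisterSendCodec above works in two steps: MapCodecTypeToParameters turns encoder_type into a codec name, sample rate and channel count, and the result is packed into a CodecInst (with the payload type and an optional frame size overridden) before being handed to acm_old_. The sketch below mimics those two steps under stated assumptions: SimpleCodecInst, the SketchEncoderType enum, the codec table and the 20 ms default packet size are all hypothetical, whereas the real code obtains the defaults from AudioCodingModule::Codec.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// Simplified CodecInst; field names follow the struct shown in this article.
struct SimpleCodecInst {
  int pltype;
  char plname[32];
  int plfreq;
  int pacsize;
  int channels;
  int rate;
};

// Hypothetical encoder-type enum; the real values are not reproduced here.
enum SketchEncoderType { kSketchPcmu = 0, kSketchG722 = 1, kSketchOpus = 2 };

// Step 1: integer type -> (name, sample rate, channels). Table is illustrative.
bool MapTypeToParameters(int type, std::string* name, int* sample_rate_hz, int* channels) {
  switch (type) {
    case kSketchPcmu: *name = "PCMU"; *sample_rate_hz = 8000;  *channels = 1; return true;
    case kSketchG722: *name = "G722"; *sample_rate_hz = 16000; *channels = 1; return true;
    case kSketchOpus: *name = "opus"; *sample_rate_hz = 48000; *channels = 2; return true;
    default: return false;
  }
}

// Step 2: fill the struct, then override payload type and (optionally) packet size.
bool BuildSendCodec(int type, int payload_type, int frame_size_samples, SimpleCodecInst* out) {
  std::string name;
  int rate_hz = 0;
  int channels = 0;
  if (!MapTypeToParameters(type, &name, &rate_hz, &channels)) return false;
  SimpleCodecInst codec{};  // zero-initialized, so plname stays NUL-terminated
  std::strncpy(codec.plname, name.c_str(), sizeof(codec.plname) - 1);
  codec.plfreq = rate_hz;
  codec.channels = channels;
  codec.pacsize = rate_hz / 50;  // assumed default: 20 ms worth of samples
  codec.pltype = payload_type;
  if (frame_size_samples > 0) codec.pacsize = frame_size_samples;
  *out = codec;
  return true;
}
```

As in the original, an unknown type simply makes registration fail instead of falling back to some default codec.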

As the TODO indicates, these members are temporary and may be removed in the future, but they are still worth a look. acm_old_ points to an AudioCodingModuleImpl object; AudioCodingModuleImpl inherits from AudioCodingModule, which plays a role similar to AudioCoding except that it describes each encoder with a CodecInst structure rather than an AudioEncoder. All of this belongs to the older WebRTC design. CodecInst is defined as follows:

```cpp
// Each codec supported can be described by this structure.
struct CodecInst {
  int pltype;
  char plname[RTP_PAYLOAD_NAME_SIZE];
  int plfreq;
  int pacsize;
  int channels;
  int rate;  // bits/sec unlike {start,min,max}Bitrate elsewhere in this file!

  bool operator==(const CodecInst& other) const {
    return pltype == other.pltype &&
           (STR_CASE_CMP(plname, other.plname) == 0) &&
           plfreq == other.plfreq &&
           pacsize == other.pacsize &&
           channels == other.channels &&
           rate == other.rate;
  }

  bool operator!=(const CodecInst& other) const {
    return !(*this == other);
  }
};
```

Next, let's look at how AudioCodingModuleImpl implements RegisterSendCodec and SendCodec:

```cpp
// Can be called multiple times for Codec, CNG, RED.
int AudioCodingModuleImpl::RegisterSendCodec(const CodecInst& send_codec) {
  CriticalSectionScoped lock(acm_crit_sect_);
  return codec_manager_.RegisterEncoder(send_codec);
}

// Get current send codec.
int AudioCodingModuleImpl::SendCodec(CodecInst* current_codec) const {
  CriticalSectionScoped lock(acm_crit_sect_);
  return codec_manager_.GetCodecInst(current_codec);
}
```

Both delegate to codec_manager_, an object of the CodecManager class, which is also defined in webrtc\modules\audio_coding_module. In short, the newer WebRTC introduces the idea of managing encoders through AudioCoding, but that implementation is not finished yet, and the old path is still the one in use. Now look at codec_manager_.RegisterEncoder(send_codec): apart from some basic checks, its main job is to call codec_owner_.SetEncoders(). codec_owner_ is an object of the CodecOwner class, also in webrtc\modules\audio_coding_module, and SetEncoders() in turn calls CreateSpeechEncoder. At present this simply stores a pointer to the concrete encoder, rather than maintaining a linked list of encoders the way FFmpeg does.
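The delegation chain just described (CodecManager checks the request, CodecOwner creates the encoder and keeps a single pointer to it) can be sketched as follows. All Sketch* class names are hypothetical simplifications, keyed on the payload name only; the real classes work with CodecInst and handle CNG/RED as well, which is not modeled here.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Abstract encoder, in the role of AudioEncoder.
class SketchAudioEncoder {
 public:
  virtual ~SketchAudioEncoder() = default;
  virtual std::string Name() const = 0;
  virtual int SampleRateHz() const = 0;
};

class SketchEncoderG722 : public SketchAudioEncoder {
 public:
  std::string Name() const override { return "G722"; }
  int SampleRateHz() const override { return 16000; }
};

class SketchEncoderPcmU : public SketchAudioEncoder {
 public:
  std::string Name() const override { return "PCMU"; }
  int SampleRateHz() const override { return 8000; }
};

// Factory keyed on the payload name, in the spirit of CreateSpeechEncoder.
std::unique_ptr<SketchAudioEncoder> CreateSketchSpeechEncoder(const std::string& plname) {
  if (plname == "G722") return std::unique_ptr<SketchAudioEncoder>(new SketchEncoderG722);
  if (plname == "PCMU") return std::unique_ptr<SketchAudioEncoder>(new SketchEncoderPcmU);
  return nullptr;
}

// Owns exactly one active encoder; registering a new one replaces it (no list).
class SketchCodecOwner {
 public:
  bool SetEncoders(const std::string& plname) {
    std::unique_ptr<SketchAudioEncoder> enc = CreateSketchSpeechEncoder(plname);
    if (!enc) return false;  // keep the previous encoder on failure
    speech_encoder_ = std::move(enc);
    return true;
  }
  const SketchAudioEncoder* Encoder() const { return speech_encoder_.get(); }

 private:
  std::unique_ptr<SketchAudioEncoder> speech_encoder_;
};

// Performs basic checks, then delegates to the owner (like CodecManager).
class SketchCodecManager {
 public:
  int RegisterEncoder(const std::string& plname) {
    if (plname.empty()) return -1;  // stand-in for "some basic checks"
    return owner_.SetEncoders(plname) ? 0 : -1;
  }
  const SketchAudioEncoder* CurrentEncoder() const { return owner_.Encoder(); }

 private:
  SketchCodecOwner owner_;
};
```

Holding one owning pointer keeps "which encoder is active" trivial to answer, at the cost of rebuilding the encoder whenever the send codec changes.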

Working back up, let's see who calls the AudioCodingModule class, since our goal is to trace the calls all the way up into VoiceEngine. Two callers stand out. One is the Channel class in the voe namespace, which we will come back to later. The other is VoECodecImpl, which inherits from VoECodec. VoiceEngineImpl inherits directly from VoECodecImpl, and VoECodec is one of VoiceEngine's core sub-APIs.
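The "VoiceEngineImpl inherits VoECodecImpl" relationship means the engine object is every sub-API at once, and a sub-API pointer is obtained by casting the engine handle. Below is a minimal sketch of that idea with hypothetical Sketch* classes and an invented GetSketchInterface helper; it assumes VoiceEngineImpl similarly inherits the other *Impl classes (such as VoEBaseImpl), and it omits the reference counting (Release()) that the real sub-API accessors manage.

```cpp
#include <cassert>

// Opaque handle handed to applications (role of VoiceEngine).
class SketchVoiceEngine {
 public:
  virtual ~SketchVoiceEngine() = default;
};

// Sub-API interfaces (roles of VoEBase and VoECodec); method sets are illustrative.
class SketchVoEBase {
 public:
  virtual int CreateChannel() = 0;
 protected:
  virtual ~SketchVoEBase() = default;
};

class SketchVoECodec {
 public:
  virtual int NumOfCodecs() = 0;
 protected:
  virtual ~SketchVoECodec() = default;
};

class SketchVoEBaseImpl : public SketchVoEBase {
 public:
  int CreateChannel() override { return next_channel_++; }
 private:
  int next_channel_ = 0;
};

class SketchVoECodecImpl : public SketchVoECodec {
 public:
  int NumOfCodecs() override { return 3; }  // arbitrary value for illustration
};

// The engine object implements every sub-API through multiple inheritance.
class SketchVoiceEngineImpl : public SketchVoiceEngine,
                              public SketchVoEBaseImpl,
                              public SketchVoECodecImpl {};

// GetInterface-style accessor: downcast the handle, then upcast to the sub-API.
// static_cast (not reinterpret_cast) is needed because each base subobject
// lives at a different address inside the engine object.
template <typename SubApi>
SubApi* GetSketchInterface(SketchVoiceEngine* engine) {
  if (engine == nullptr) return nullptr;
  return static_cast<SubApi*>(static_cast<SketchVoiceEngineImpl*>(engine));
}
```

Because all sub-APIs share one object, state created through one interface (a channel, say) is directly visible to the others.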

The analysis above corresponds to the following figure, in which red lines denote inheritance and green lines denote calls.

All of the above concerns the encoder, although some decoder-side parts came up along the way. For example, corresponding to webrtc\modules\audio_encoder_interface there is webrtc\modules\audio_decoder_interface, whose AudioDecoder class is likewise inherited by many decoder classes matching the encoders, such as AudioDecoderG722; these all live in the webrtc\modules\neteq directory. Let's set the decoder aside for now and continue with the sending side of the audio pipeline.

Next come the preprocessing steps: echo cancellation (AEC), automatic gain control (AGC), high-pass filtering, noise suppression (NS), voice activity detection (VAD) and so on. Take echo cancellation as an example. WebRTC has two echo cancellation implementations, one for mobile devices and one for non-mobile devices, in the aecm and aec directories respectively under webrtc\modules\audio_processing. In addition, there are SSE implementations for x86 platforms that support SSE, in webrtc\modules\audio_processing_sse2. The echo cancellation code is wrapped in the EchoCancellationImpl class. Besides the actual processing, EchoCancellationImpl also contains handle management operations, which it inherits from the ProcessingComponent class. The parent class of EchoCancellationImpl is EchoCancellation, and an EchoCancellation object is called directly by the VoEAudioProcessingImpl class, defined in webrtc\voice_engine. VoEAudioProcessingImpl's parent class is VoEAudioProcessing, which is another of VoiceEngine's core sub-APIs. The relationships here are quite clear; the preprocessing part is simpler than the encoder part.
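The split described above, where EchoCancellationImpl gets its public API from EchoCancellation and its handle bookkeeping from ProcessingComponent, can be sketched with hypothetical simplified classes. The assumption of one handle per channel, the placeholder AecState, and the reduced set of virtual hooks are all inventions of this sketch; the real ProcessingComponent has more hooks than shown.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Public interface, in the role of EchoCancellation (simplified).
class SketchEchoCancellation {
 public:
  virtual ~SketchEchoCancellation() = default;
  virtual int Enable(bool enable) = 0;
  virtual bool is_enabled() const = 0;
};

// Generic handle bookkeeping, in the role of ProcessingComponent (simplified).
class SketchProcessingComponent {
 public:
  virtual ~SketchProcessingComponent() = default;

  // Recreates the handles: one per channel (assumption), none if disabled.
  int Initialize(int num_channels) {
    handles_.clear();
    if (!enabled_) return 0;
    for (int i = 0; i < num_channels; ++i) handles_.push_back(CreateHandle());
    return 0;
  }
  int num_handles() const { return static_cast<int>(handles_.size()); }

 protected:
  struct Handle {
    virtual ~Handle() = default;
  };
  // Each concrete component decides what its per-channel state looks like.
  virtual std::unique_ptr<Handle> CreateHandle() const = 0;
  bool enabled_ = false;

 private:
  std::vector<std::unique_ptr<Handle>> handles_;
};

// Concrete component: public API from one base, handle machinery from the other.
class SketchEchoCancellationImpl : public SketchEchoCancellation,
                                   public SketchProcessingComponent {
 public:
  int Enable(bool enable) override {
    enabled_ = enable;
    return 0;
  }
  bool is_enabled() const override { return enabled_; }

 private:
  struct AecState : Handle {
    float filter[64] = {};  // placeholder for real AEC state
  };
  std::unique_ptr<Handle> CreateHandle() const override {
    return std::unique_ptr<Handle>(new AecState);
  }
};
```

Keeping handle lifetime in a shared base lets AEC, AGC and NS reuse the same bookkeeping while each defines only its own state and processing.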

Now let's look at audio decoding. As mentioned earlier, the concrete decoder classes are in the webrtc\modules\neteq directory, and other receive-side components such as the jitter buffer are there as well. Another important receive-side function, mixing, is in the webrtc\modules\audio_conference_mixer directory. NetEq, GIPS's core technology at the time, combines decoding, adaptive jitter buffering and packet loss concealment to achieve excellent voice quality with low delay. Let's start with the decoding module, the simplest part and the one I know best. Like AudioEncoder, the AudioDecoder class mentioned earlier is used by audio_coding_module, codec_manager and codec_owner, and it also appears in NetEq's decoding-related work. NetEq, as a submodule of the audio receiver, is called from the AcmReceiver class, which is a member of AudioCodingModuleImpl, so its connection to VoiceEngine can be traced the same way.
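To illustrate the two receive-side ideas NetEq combines, reordering packets that arrive out of order and concealing packets that never arrive, here is a toy jitter buffer. It is a hypothetical teaching sketch, not NetEq's algorithm: NetEq's real logic, including adaptive buffer-level control and its concealment, is far more elaborate. Loss concealment here is just "repeat the last frame at half amplitude".

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

// A decoded audio frame, simplified to a vector of 16-bit samples.
using Frame = std::vector<int16_t>;

class ToyJitterBuffer {
 public:
  explicit ToyJitterBuffer(uint16_t first_seq) : next_seq_(first_seq) {}

  // Packets may arrive out of order; store them keyed by RTP sequence number.
  void Insert(uint16_t seq, Frame frame) { packets_[seq] = std::move(frame); }

  // Returns the next frame in sequence order. If it is missing, conceals the
  // loss by repeating the last frame at half amplitude (crude PLC stand-in).
  Frame GetNext(bool* concealed) {
    auto it = packets_.find(next_seq_);
    ++next_seq_;  // uint16_t wraps from 65535 to 0, like RTP sequence numbers
    if (it != packets_.end()) {
      last_ = it->second;
      packets_.erase(it);
      *concealed = false;
      return last_;
    }
    for (int16_t& s : last_) s = static_cast<int16_t>(s / 2);
    *concealed = true;
    return last_;
  }

 private:
  uint16_t next_seq_;
  std::map<uint16_t, Frame> packets_;
  Frame last_;
};
```

A real jitter buffer must also decide how long to wait for a late packet before concealing it; that trade-off between delay and loss is exactly what NetEq adapts dynamically.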

This completes our overview of the audio codecs, preprocessing and NetEq in VoiceEngine.

Now let's look at the audio device part. This code is located in webrtc\modules\audio_device, and the relevant class is AudioDeviceModule. It supports audio devices on multiple platforms and offers many functions, such as microphone and speaker volume control, mute control, sample rate selection, stereo and mixing. We will not look at the implementation for now, only at how it interfaces with VoiceEngine. Note that although VoiceEngine has a VoEHardware interface, audio device initialization is done in VoEBase:

```cpp
virtual int Init(AudioDeviceModule* external_adm = NULL,
                 AudioProcessing* audioproc = NULL) = 0;
```

Mixing is implemented in the AudioConferenceMixer class, which is called by VoiceEngine's OutputMixer class. Other auxiliary classes: AcmDump outputs debugging information, and MediaFile handles input and output of audio files; MediaFile is also called by OutputMixer (through FileRecorder and FilePlayer).
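At its core, mixing several participants' PCM frames means summing them sample by sample without letting the 16-bit result wrap around. The sketch below shows only that saturating sum, as a hypothetical MixFrames helper; AudioConferenceMixer's real behavior, such as selecting which participants are included in the mix, is more involved and not modeled here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Mixes equally sized 16-bit PCM frames: sums each sample in a 32-bit
// accumulator, then clamps to the int16 range so loud overlaps saturate
// instead of wrapping around (which would cause harsh distortion).
std::vector<int16_t> MixFrames(const std::vector<std::vector<int16_t>>& frames) {
  if (frames.empty()) return {};
  const std::size_t n = frames[0].size();
  std::vector<int16_t> out(n, 0);
  for (std::size_t i = 0; i < n; ++i) {
    int32_t sum = 0;
    for (const auto& f : frames) sum += (i < f.size()) ? f[i] : 0;
    sum = std::max<int32_t>(INT16_MIN, std::min<int32_t>(INT16_MAX, sum));
    out[i] = static_cast<int16_t>(sum);
  }
  return out;
}
```

For example, mixing samples 30000 and 10000 saturates at 32767 rather than wrapping to a large negative value.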

The rtp_rtcp module contains everything related to RTP and RTCP transmission, such as network channel reports, retransmission requests, video key-frame requests and bitrate control. We will set it aside for now and analyze it in detail later. Related modules are remote_bitrate_estimator, paced_sender and bitrate_controller. RtpRtcp is, naturally, also called by VoiceEngine's Channel class. At this point we have covered the basic audio-transmission modules under webrtc\modules and how they connect to VoiceEngine.
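Since the rtp_rtcp module ultimately reads and writes RTP packets, a minimal parser for the 12-byte fixed header defined in RFC 3550 shows what fields such as the SSRC, sequence number and timestamp (mentioned in the voe_rtp_rtcp and voe_video_sync descriptions) look like on the wire. This is an independent sketch with a hypothetical RtpFixedHeader type, not the rtp_rtcp module's own parser; CSRC lists and header extensions are not parsed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Fields of the 12-byte fixed RTP header (RFC 3550, section 5.1).
struct RtpFixedHeader {
  uint8_t version;
  bool padding;
  bool extension;
  uint8_t csrc_count;
  bool marker;
  uint8_t payload_type;
  uint16_t sequence_number;
  uint32_t timestamp;
  uint32_t ssrc;
};

// Parses the fixed header from network-byte-order data. Returns false if the
// buffer is shorter than 12 bytes or the version field is not 2.
bool ParseRtpFixedHeader(const uint8_t* data, std::size_t len, RtpFixedHeader* h) {
  if (len < 12) return false;
  h->version = data[0] >> 6;
  if (h->version != 2) return false;
  h->padding = (data[0] & 0x20) != 0;
  h->extension = (data[0] & 0x10) != 0;
  h->csrc_count = data[0] & 0x0F;
  h->marker = (data[1] & 0x80) != 0;
  h->payload_type = data[1] & 0x7F;
  h->sequence_number = static_cast<uint16_t>((data[2] << 8) | data[3]);
  h->timestamp = (uint32_t{data[4]} << 24) | (uint32_t{data[5]} << 16) |
                 (uint32_t{data[6]} << 8) | uint32_t{data[7]};
  h->ssrc = (uint32_t{data[8]} << 24) | (uint32_t{data[9]} << 16) |
            (uint32_t{data[10]} << 8) | uint32_t{data[11]};
  return true;
}
```

For instance, a header beginning 0x80 0x89 is version 2 with the marker bit set and payload type 9, the static payload type assigned to G.722.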

In the next article, we will use VoiceEngine to build a voice call example.