Note: This article is a study note of the book "detailed explanation of Linux device driver development: Based on the latest Linux 4.0 kernel by song Baohua", and most of the content is in the book.

Books can be viewed directly in wechat reading: Linux device driver development details: Based on the latest Linux 4 0 kernel - Song Baohua - wechat reading (qq.com)

1 concurrency and contention

Concurrency: multiple execution units execute simultaneously and are executed concurrently. The access of concurrent execution units to shared resources (global variables, static variables, hardware resources, etc.) is easy to lead to race. The way to solve the race problem is to ensure mutually exclusive access to shared resources. The so-called mutually exclusive access means that when an execution unit accesses shared resources, other execution units are prohibited from accessing.

Code areas that access shared resources are called Critical Sections, which need to be protected by some mutual exclusion mechanism. Common mutual exclusion mechanisms: interrupt shielding, atomic operation, spin lock, semaphore, mutex, etc.

2 interrupt mask

The CPU generally has the functions of shielding interrupts and opening interrupts. This function can ensure that the executing kernel execution path is not preempted by the interrupt handler and prevent some race conditions. Specifically, interrupt masking will make the concurrency between interrupts and processes no longer occur, and because the process scheduling and other operations of Linux kernel rely on interrupts, the kernel preemption of concurrency between processes can also be avoided.

Methods of interrupt shielding:

local_irq_disable() //Mask this CPU interrupt ... critical_secion //Critical zone ... local_irq_enable() //Folio CPU interrupt

The interrupt time should not be too long. After shielding the interrupt, the current kernel execution path should execute the code in the critical area as soon as possible.

Interrupt shielding can only shield the interrupts of the CPU. This method is not very good.

3 atomic operation

Atomic operation can ensure that the modification of an integer data is exclusive.

The Linux kernel provides two types of functions to implement atomic operations, respectively for bit and integer variables.

3.1 integer atomic operation

(1) Sets the value of the atomic variable

/** * atomic_set - set atomic variable * @v: pointer of type atomic_t * @i: required value * * Atomically sets the value of @v to @i. */ #define atomic_set(v, i) (((v)->counter) = (i)) atomic_t v = ATOMIC_INIT(0); //Define the atomic variable v and initialize it to 0

(2) Gets the value of the atomic variable

/* Returns the value of an atomic variable */ #define atomic_read(v) ACCESS_ONCE((v)->counter)

(3) Atomic variable plus / minus

/* Atomic variable increase i */ void atomic_add(int i, atomic_t *v); /* Atomic variable reduction i */ void atomic_sub(int i, atomic_t *v);

(4) Atomic variable self increasing / self decreasing

/* Atomic variable increases by 1 */ void atomic_inc(atomic_t *v); /* Atomic variable reduced by 1 */ void atomic_dec(atomic_t *v);

(5) Operate and test

/* Perform self increasing, self creating and subtracting operations on atomic variables to test whether they are 0. If it is 0, it returns true; otherwise, it returns false */ #define atomic_sub_and_test(i, v) (atomic_sub_return((i), (v)) == 0) #define atomic_dec_and_test(v) (atomic_dec_return(v) == 0) #define atomic_inc_and_test(v) (atomic_inc_return(v) == 0)

(6) Operation and return

#define atomic_dec_return(v) atomic_sub_return(1, (v)) #define atomic_inc_return(v) atomic_add_return(1, (v)) int atomic_add_return(int i, atomic_t *v); int atomic_sub_return(int i, atomic_t *v);

3.2 bit atomic operation

(1) Set bit

/* Set the nr bit of addr address and write this bit as 1 */ void set_bit(int nr, volatile unsigned long *addr);

(2) Clear bit

/* Clear the nr bit of addr address, which writes 0 */ void clear_bit(int nr, volatile unsigned long *addr);

(3) Change bit

/* addr Reverse the nr bit of the address */ void change_bit(int nr, volatile unsigned long *addr);

(4) Test bit

/* Returns the nr bit of the addr address */ int test_bit(int nr, const volatile unsigned long *addr);

(5) Test and operate bit

int test_and_set_bit(int nr, volatile unsigned long *addr); int test_and_clear_bit(int nr, volatile unsigned long *addr); int test_and_change_bit(int nr, volatile unsigned long *addr);

The following code uses atomic operation to make the device open only by one process:

static atomic_t xxx_available = ATOMIC_INIT(1); /* Defining atomic variables */

static int xxx_open(struct inode *inode, struct file *filp)

{

...

if (!atomic_dec_and_test(&xxx_available)) {

atomic_inc(&xxx_available);

return - EBUSY; /* Already open */

}

...

return 0; /* success */

}

static int xxx_release(struct inode *inode, struct file *filp)

{

atomic_inc(&xxx_available); /* release device */

return 0;

}

4 spin lock

Spin Lock is a typical means of mutually exclusive access to critical resources, and its name comes from its working mode. In order to obtain a Spin Lock, the code running on a CPU needs to perform an atomic operation, which tests and sets a memory variable. Because it is an atomic operation, it is impossible for other execution units to access this memory variable until the operation is completed. If the test results show that the lock is idle, the program obtains the Spin Lock and continues to execute; If the test results show that the lock is still occupied, the program will repeat the "test and set" operation in a small cycle, that is, the so-called "spin", which is commonly referred to as "spin in place". When the Spin Lock holder releases the Spin Lock by resetting the variable, a waiting "test and set" operation reports to its caller that the lock has been released.

4.1 spin lock correlation

4.1.1 operation function

(1) Define spin lock

spinlock_t lock;

(2) Initialize spin lock

#define spin_lock_init(_lock) \

do { \

spinlock_check(_lock); \

raw_spin_lock_init(&(_lock)->rlock); \

} while (0)

(3) Acquire spin lock

/* Obtain the spin lock. If the lock can be obtained immediately, return immediately. Otherwise, spin in place until it is obtained */ void spin_lock(spinlock_t *lock);

/* Try to obtain the spin lock. If it can be obtained immediately, obtain and return true. Otherwise, return false immediately and do not spin in place */ int spin_trylock(spinlock_t *lock);

(4) Release spin lock

/* Release the spin lock, and spin_ lock/spin_ Use with trylock */ void spin_unlock(spinlock_t *lock);

4.1.2 use mode of spin lock

The usage is as follows:

spinlock_t lock; //Define a spin lock spin_lock_init(&lock); //Initialize spin lock spin_lock(&lock); //Acquire spin lock and protect critical zone ...; //Critical zone spin_unlock(&lock); //Release spin lock

Spin lock is mainly used for SMP or single CPU but the kernel can preempt. For systems with single CPU and kernel that do not support preemption, spin lock degenerates to null operation. In the case of multi-core SMP, if any core gets the spin lock, the preemption scheduling on that core is also temporarily prohibited, but the preemption scheduling of another core is not prohibited.

Although the use of spin lock can ensure that the critical area is not disturbed by other CPUs and preemptive processes in this CPU, the code path to get the lock may also be affected by interrupts and bottom half (BH, which will be introduced in later chapters) when executing the critical area. In order to prevent this effect, spin lock derivation is needed. spin_lock()/spin_unlock() is the basis of the spin lock mechanism. They are the same as the off interrupt local_irq_disable() / disconnect local_irq_enable(), close bottom half local_bh_disable() / open bottom half local_bh_enable(), turn off and save the status word local_irq_save() / interrupt and restore status word local_ irq_ The combination of restore() forms a complete set of spin lock mechanism, and the relationship is as follows:

spin_lock_irq() = spin_lock() + local_irq_disable() spin_unlock_irq() = spin_unlock() + local_irq_enable() spin_lock_irqsave() = spin_lock() + local_irq_save() spin_unlock_irqrestore() = spin_unlock() + local_irq_restore() spin_lock_bh() = spin_lock() + local_bh_disable() spin_unlock_bh() = spin_unlock() + local_bh_enable()

4.1.3 precautions

The spin lock should be used carefully, and the following problems should be paid special attention to in use.

1) The spin lock is actually a busy lock. When the lock is unavailable, the CPU cycles to "test and set" the lock until it is available to obtain the lock. The CPU does not do any useful work when waiting for the spin lock, but just wait. Therefore, it is reasonable to use spin lock only when the lock occupation time is very short. When the critical area is large or there are shared devices, it takes a long time to occupy the lock. The use of spin lock will reduce the performance of the system.

2) Spin lock may cause system deadlock. The most common case causing this problem is to recursively use a spin lock, that is, if a CPU that already has a spin lock wants to obtain the spin lock a second time, the CPU will deadlock.

3) Functions that may cause process scheduling cannot be called during spin lock locking. If the process gets the spin lock and then blocks, such as calling copy_from_user(),copy_ to_ Functions such as user (), kmalloc() and msleep() may cause the kernel to crash.

4) When programming in the case of single core, you should also think that your CPU is multi-core, and the driver emphasizes the concept of cross platform. For example, in the case of a single CPU, if the interrupt and the process may access the same critical area, the process calls spin_lock_irqsave() is safe. Spin is not called in interrupt_ Lock () is no problem, because spin_lock_irqsave() ensures that the interrupt service program of this CPU cannot be executed. However, if the CPU becomes multi-core, spin_lock_irqsave() cannot mask interrupts from another core, so another core may cause concurrency problems. Therefore, in any case, we should call spin in the interrupt service program_ lock().

4.1.4 use case code

The following code uses spin lock to enable the device to be opened by at most one process:

int xxx_count = 0; //Define file open count

spinlock_t xxx_lock;

static int xxx_open(struct inode *inode, struct file *filp)

{

...;

spin_lock(&xxx_lock);

if (xxx_count) { //file already open

spin_unlock(&xxx_lock);

return -EBUSY;

}

xxx_count++; //Increase usage count

spin_unlock(&xxx_lock);

...;

return 0;

}

static int xxx_release(struct inode *inode, struct file *filp)

{

...;

spin_lock(&xxx_lock);

xxx_count--; //Reduce usage count

spin_unlock(&xxx_lock);

return 0;

}

4.2 read write spin lock

Spin lock doesn't care what operation is going on in the critical area of locking. It treats equally whether it is reading or writing. Even if multiple execution units read critical resources at the same time, they will be locked.

In fact, when accessing a shared resource concurrently, multiple execution units will not have a problem reading it at the same time. The spin lock derivative lock read-write spin lock (rwlock) allows concurrent reading. Read / write spin lock is a lock mechanism with smaller granularity than spin lock. It retains the concept of "spin", but in terms of write operation, there can only be one write process at most. In terms of read operation, there can be multiple read execution units at the same time. Of course, reading and writing cannot be done at the same time.

4.2.1 operation function

(1) Define and initialize read / write spinlocks

rwlock_t rwlock; rwlock_init(&rwlock);

(2) Read lock

void read_lock(rwlock_t *lock); void read_lock_irqsave(rwlock_t *lock, unsigned long flags); void read_lock_irq(rwlock_t *lock); void read_lock_bh(rwlock_t *lock);

(3) Read unlock

void read_unlock(rwlock_t *lock); void read_unlock_irqrestore(rwlock_t *lock, unsigned long flags); void read_unlock_irq(rwlock_t *lock); void read_unlock_bh(rwlock_t *lock);

Before reading shared resources, you should call the read lock function first, and then call the read unlock function.

(4) Write lock

void write_lock(rwlock_t *lock); void write_lock_irqsave(rwlock_t *lock, unsigned long flags); void write_lock_irq(rwlock_t *lock); void write_lock_bh(rwlock_t *lock); int write_trylock(rwlock_t *lock); //An attempt to acquire a read-write spin lock is returned immediately regardless of success or failure

(5) Write unlock

void write_unlock(rwlock_t *lock); void write_unlock_irqrestore(rwlock_t *lock, unsigned long flags); void write_unlock_irq(rwlock_t *lock); void write_unlock_bh(rwlock_t *lock);

4.2.2 use mode

Before writing to a shared resource, you should call the write lock function first, and then call the write unlock function.

Usage of read-write lock:

rwlock_t lock; /* Define rwlock */ rwlock_init(&lock); /* Initialize rwlock */ read_lock(&lock); /* Acquire lock on read */ ... /* Critical resources */ read_unlock(&lock); write_lock_irqsave(&lock, flags); /* Acquire lock on write */ ... /* Critical resources */ write_unlock_irqrestore(&lock, flags);

4.3 sequence lock

seqlock is an optimization of read-write locks. If a sequence lock is used, the read execution unit will not be blocked by the write execution unit, that is, the read execution unit can continue to read when the write execution unit writes to the shared resources protected by the sequence lock without waiting for the write execution unit to complete the write operation, The write execution unit does not need to wait for all read execution units to complete the read operation before performing the write operation. However, the write execution unit and the write execution unit are still mutually exclusive, that is, if a write execution unit is performing a write operation, other write execution units must spin there until the write execution unit releases the sequence lock.

For sequential locks, although the reads and writes are not mutually exclusive, if the read execution unit has a write operation during the read operation, the read execution unit must re read the data to ensure that the obtained data is complete. Therefore, in this case, the reader may repeatedly read the same area many times in order to read valid data.

4.3.1 write execution unit sequence lock operation

The sequence lock operations involved in the write execution unit are as follows:

(1) Acquire sequence lock

void write_seqlock(seqlock_t *sl); int write_tryseqlock(seqlock_t *sl); write_seqlock_irqsave(lock, flags) write_seqlock_irq(lock) write_seqlock_bh(lock)

(2) Release sequence lock

void write_sequnlock(seqlock_t *sl); write_sequnlock_irqrestore(lock, flags) write_sequnlock_irq(lock) write_sequnlock_bh(lock)

Write execution unit uses sequential lock mode:

write_seqlock(&seqlock_a); .../* Write operation code block */ write_sequnlock(&seqlock_a);

4.3.2 read execution unit sequence lock operation

The sequence lock operations involved in the read execution unit are as follows:

(1) Read start

The read execution unit needs to call this function before accessing the shared resources protected by the sequence lock s1, and the function returns the current sequence number of the sequence lock s1.

unsigned read_seqbegin(const seqlock_t *sl); read_seqbegin_irqsave(lock, flags)

(2) Reread

After accessing the shared resources protected by the sequence lock s1, the read execution unit needs to call this function to check whether there is a write operation during the read access. If there is a write operation, the read execution unit needs to repeat the read operation.

int read_seqretry(const seqlock_t *sl, unsigned iv); read_seqretry_irqrestore(lock, iv, flags)

The mode of sequential lock used by the read execution unit is as follows:

do {

seqnum = read_seqbegin(&seqlock_a);

/* Read operation code block */

...

} while (read_seqretry(&seqlock_a, seqnum));

4.4 read copy update

RCU (read copy update), which is named based on its principle.

Unlike spin locks, the RCU reader has no overhead of locks, memory barriers and atomic instructions. It can almost be considered as direct reading (just simply indicating the start and end of reading). The RCU write execution unit first copies a copy before accessing its shared resources, and then modifies the copy, Finally, a callback mechanism is used to re point the pointer to the original data to the new modified data at an appropriate time. This time is when all CPU s referencing the data exit the read operation of shared data. This period of waiting for the right time is called Grace Period.

5 semaphore

Semaphore is the most typical means for synchronization and mutual exclusion in the operating system. The value of semaphore can be 0, 1 or n. Semaphores correspond to the classic concept PV operation in the operating system.

P (s): ① reduce the value of semaphore s by 1, that is, S=S-1; ② If s ≥ 0, the process continues; Otherwise, the process is set to the waiting state and is queued.

V (s): ① add 1 to the value of semaphore s, that is, S=S+1; ② If s > 0, wake up the processes waiting for semaphores in the queue.

5.1 related operations

(1) Define semaphore

struct semaphore;

(2) Initialization semaphore

/* Initialize the semaphore and set the value of sem to val */ void sema_init(struct semaphore *sem, int val);

(3) Get semaphore

/* Acquiring semaphores will cause sleep and cannot be used in interrupt context */ void down(struct semaphore *sem);

/* Similar to down, the process entering the sleep state can be interrupted by the signal, and the signal will also cause the function to return. At this time, the return value is not 0 */ int down_interruptible(struct semaphore *sem);

/* Try to obtain a semaphore. If you can obtain it immediately, you can obtain it and return 0. Otherwise, returning a non-0 value will not cause the caller to sleep. It can be used in the interrupt context */ int down_trylock(struct semaphore *sem);

(4) Release semaphore

void up(struct semaphore *sem);

As a possible means of mutual exclusion, semaphores can protect the critical area. Only the process that obtains the semaphore can execute the critical area code. When the semaphore cannot be obtained, the process enters the sleep waiting state.

Since the new Linux kernel tends to directly use mutex as a means of mutual exclusion, semaphores as mutual exclusion are no longer recommended.

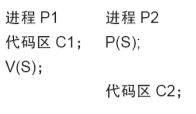

Semaphores can also be used for synchronization. One process A executes down() to wait for semaphores, and the other process B executes up() to release semaphores. In this way, process A waits for process B synchronously. The process is similar:

6 mutex

Although semaphores can realize mutual exclusion, mutex is still common in Linux kernel.

(1) Define and initialize:

struct mutex my_mutex; mutex_init(&my_mutex);

(2) Get mutex

void mutex_lock(struct mutex *lock); //The resulting sleep cannot be interrupted by signals int mutex_lock_interruptible(struct mutex *lock); //Sleep can be interrupted by signals int mutex_trylock(struct mutex *lock); //Trying to get can't lead to sleep

(3) Release mutex

void mutex_unlock(struct mutex *lock);

Usage of mutex:

struct mutex my_mutex; /* Define mutex */ mutex_init(&my_mutex); /* Initialize mutex */ mutex_lock(&my_mutex); /* Get mutex */ ... /* Critical resources */ mutex_unlock(&my_mutex); /* Release mutex */

Spin lock and mutex are the basic means to solve the mutex problem. How to use them according to different scenarios needs to be based on the nature of the critical region and the characteristics of the system.

Strictly speaking, mutex and spin lock belong to different levels of mutex means, and the implementation of the former depends on the latter. In the implementation of mutex itself, spin lock is needed to ensure the atomicity of mutex structure access. Therefore, spin lock belongs to the lower means.

The mutex is process level, which is used for the mutual exclusion of resources between multiple processes. Although it is also in the kernel, the kernel execution path competes for resources on behalf of the process as a process. If the contention fails, process context switching will occur, the current process will enter the sleep state, and the CPU will run other processes. In view of the high cost of process context switching, mutex is a better choice only when the process takes a long time.

When the access time of the critical area to be protected is relatively short, it is very convenient to use spin lock, because it can save the time of context switching. However, if the CPU cannot get the spin lock, it will idle there until other execution units are unlocked, so it is required that the lock cannot stay in the critical area for a long time, otherwise the efficiency of the system will be reduced.

Therefore, three principles for selecting self spin lock and mutex can be summarized.

1) When the lock cannot be obtained, the cost of using the mutex is the process context switching time, and the cost of using the spin lock is waiting to obtain the spin lock (determined by the execution time of the critical region). If the critical region is small, spin lock should be used. If the critical region is large, mutex should be used.

2) The critical area protected by mutex can contain code that may cause blocking, while spin lock must be avoided to protect the critical area containing such code. Because blocking means process switching. If another process attempts to obtain this spin lock after the process is switched out, a deadlock will occur.

3) Mutex exists in the process context. Therefore, if the protected shared resource needs to be used in the case of interrupt or soft interrupt, only spin lock can be selected between mutex and spin lock. Of course, if you must use mutex, you can only use mutex_trylock() method. If you can't get it, return it immediately to avoid blocking.

7 completed quantity

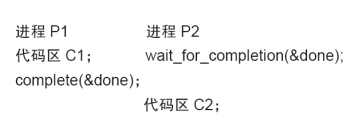

Linux provides Completion, which is used for one execution unit to wait for another execution unit to complete something.

7.1 related operations

(1) Define completed quantity

struct completion my_completion;

(2) Initialization completion

init_completion(&my_completion); reinit_completion(&my_completion) //Reinitialize to 0

(3) Waiting for completion

void wait_for_completion(struct completion *c);

(4) Wake up completion

void complete(struct completion *c); //Wake up a unit waiting for execution void complete_all(struct completion *c); //Release all execution units waiting for the same completion quantity

Synchronization process of completed quantity:

8. Add global MEM device driver after concurrency control

In the read-write function of globalmem(), copy is called_ from_ user(),copy_to_user() these functions may cause blocking, so spin locks cannot be used, and mutexes should be used.

Drive engineers are used to putting spin locks, mutexes and other auxiliary means used by a device in the device structure, and initializing this semaphore in the module initialization function.

The complete code is as follows:

#include <linux/module.h>

#include <linux/fs.h>

#include <linux/init.h>

#include <linux/cdev.h>

#include <linux/slab.h>

#include <linux/uaccess.h>

/* It is unreasonable to directly use immediate as a command, which is tentative */

#define MEM_CLEAR 0x1

#define GLOBALMEM_MAJOR 230

#define DEVICE_NUM 10

#define GLOBALMEM_SIZE 0x1000

static int globalmem_major = GLOBALMEM_MAJOR;

module_param(globalmem_major, int, S_IRUGO);

/* Equipment structure */

struct globalmem_dev {

struct cdev cdev;

unsigned char mem[GLOBALMEM_SIZE];

struct mutex mutex;

};

struct globalmem_dev *globalmem_devp;

static int globalmem_open(struct inode *inode, struct file *filp)

{

struct globalmem_dev *dev = container_of(inode->i_cdev, struct globalmem_dev, cdev);

/* Get globalmem using the private data of the file as_ Instance pointer to dev */

filp->private_data = dev;

return 0;

}

static int globalmem_release(struct inode *inode, struct file *filp)

{

return 0;

}

/**

* Device ioctl function

* @param[in] filp: File structure pointer

* @param[in] cmd: Command, currently only MEM is supported_ CLEAR

* @param[in] arg: Command parameters

* @return 0 is returned if successful, and the error code is returned if there is an error

*/

static long globalmem_ioctl(struct file *filp, unsigned int cmd,

unsigned long arg)

{

struct globalmem_dev *dev = filp->private_data;

switch (cmd) {

case MEM_CLEAR:

mutex_lock(&dev->mutex);

memset(dev->mem, 0, GLOBALMEM_SIZE);

mutex_unlock(&dev->mutex);

printk(KERN_INFO "globalmem is set to zero\n");

break;

default:

return -EINVAL;

}

return 0;

}

/**

* Reading device

* @param[in] filp: File structure pointer

* @param[out] buf: The user space memory address cannot be read or written directly in the kernel

* @param[in] size: Bytes read

* @param[in/out] ppos: The read position is equivalent to the offset of the file header

* @return The number of bytes actually read is returned if successful, and the error code is returned if there is an error

*/

static ssize_t globalmem_read(struct file *filp,

char __user *buf, size_t size, loff_t *ppos)

{

unsigned long p = *ppos;

unsigned long count = size;

int ret = 0;

struct globalmem_dev *dev = filp->private_data;

if (p >= GLOBALMEM_SIZE)

return 0;

if (count > GLOBALMEM_SIZE - p)

count = GLOBALMEM_SIZE - p;

mutex_lock(&dev->mutex);

/* Kernel space to user space cache copy */

if (copy_to_user(buf, dev->mem + p, count)) {

ret = -EFAULT;

} else {

*ppos += count;

ret = count;

printk(KERN_INFO "read %lu bytes(s) from %lu\n", count, p);

}

mutex_unlock(&dev->mutex);

return ret;

}

/**

* Write device

* @param[in] filp: File structure pointer

* @param[in] buf: The user space memory address cannot be read or written directly in the kernel

* @param[in] size: Bytes written

* @param[in/out] ppos: The write position is equivalent to the offset of the file header

* @return The number of bytes actually written is returned if successful, and the error code is returned if there is an error

*/

static ssize_t globalmem_write(struct file *filp,

const char __user *buf, size_t size, loff_t *ppos)

{

unsigned long p = *ppos;

unsigned long count = size;

int ret = 0;

struct globalmem_dev *dev = filp->private_data;

if (p >= GLOBALMEM_SIZE)

return 0;

if (count > GLOBALMEM_SIZE - p)

count = GLOBALMEM_SIZE - p;

mutex_lock(&dev->mutex);

/* Copy from user space cache to kernel space cache */

if (copy_from_user(dev->mem + p, buf, count))

ret = -EFAULT;

else {

*ppos += count;

ret = count;

printk(KERN_INFO "written %lu bytes(s) from %lu\n", count, p);

}

mutex_unlock(&dev->mutex);

return ret;

}

/**

* File offset settings

* @param[in] filp: File structure pointer

* @param[in] offset: Offset value size

* @param[in] orig: Start offset position

* @return The current location of the file is returned if successful, and the error code is returned if there is an error

*/

static loff_t globalmem_llseek(struct file *filp, loff_t offset, int orig)

{

loff_t ret = 0;

switch (orig) {

case 0: /* Set offset from header position */

if (offset < 0) {

ret = -EINVAL;

break;

}

if ((unsigned int)offset > GLOBALMEM_SIZE) {

ret = -EINVAL;

break;

}

filp->f_pos = (unsigned int)offset;

ret = filp->f_pos;

break;

case 1: /* Set offset from current position */

if ((filp->f_pos + offset) > GLOBALMEM_SIZE) {

ret = -EINVAL;

break;

}

if ((filp->f_pos + offset) < 0) {

ret = -EINVAL;

break;

}

filp->f_pos += offset;

ret = filp->f_pos;

break;

default:

ret = -EINVAL;

break;;

}

return ret;

}

static const struct file_operations globalmem_fops = {

.owner = THIS_MODULE,

.llseek = globalmem_llseek,

.read = globalmem_read,

.write = globalmem_write,

.unlocked_ioctl = globalmem_ioctl,

.open = globalmem_open,

.release = globalmem_release,

};

static void globalmem_setup_cdev(struct globalmem_dev *dev, int index)

{

int err, devno = MKDEV(globalmem_major, index);

/* Initialize cdev */

cdev_init(&dev->cdev, &globalmem_fops);

dev->cdev.owner = THIS_MODULE;

/* Register device */

err = cdev_add(&dev->cdev, devno, 1);

if (err)

printk(KERN_NOTICE "Error %d adding globalmem%d", err, index);

}

/* Driver module loading function */

static int __init globalmem_init(void)

{

int i;

int ret;

dev_t devno = MKDEV(globalmem_major, 0);

/* Get device number */

if (globalmem_major)

ret = register_chrdev_region(devno, DEVICE_NUM, "globalmem");

else {

ret = alloc_chrdev_region(&devno, 0, DEVICE_NUM, "globalmem");

globalmem_major = MAJOR(devno);

}

if (ret < 0)

return ret;

/* Request memory */

globalmem_devp = kzalloc(sizeof(struct globalmem_dev) * DEVICE_NUM, GFP_KERNEL);

if (!globalmem_devp) {

ret = -ENOMEM;

goto fail_malloc;

}

mutex_init(&globalmem_devp->mutex);

for (i = 0; i < DEVICE_NUM; i++)

globalmem_setup_cdev(globalmem_devp + i, i);

return 0;

fail_malloc:

unregister_chrdev_region(devno, DEVICE_NUM);

return ret;

}

module_init(globalmem_init);

/* Driver module unloading function */

static void __exit globalmem_exit(void)

{

int i;

for (i = 0; i < DEVICE_NUM; i++)

cdev_del(&(globalmem_devp + i)->cdev);

kfree(globalmem_devp);

/* Release device number */

unregister_chrdev_region(MKDEV(globalmem_major, 0), DEVICE_NUM);

}

module_exit(globalmem_exit);

MODULE_AUTHOR("MrLayfolk");

MODULE_LICENSE("GPL v2");

Makefile:

KVERS = $(shell uname -r) # Kernel modules obj-m += mutex_globalmem.o # Specify flags for the module compilation. #EXTRA_CFLAGS=-g -O0 build: kernel_modules kernel_modules: make -C /lib/modules/$(KVERS)/build M=$(CURDIR) modules clean: make -C /lib/modules/$(KVERS)/build M=$(CURDIR) clean

9 summary

Concurrency and race state exist widely. Interrupt shielding, atomic operation, spin lock and mutex are all mechanisms to solve the concurrency problem. Interrupt masking is rarely used alone, and atomic operations can only be performed on integers, so spinlocks and mutexes are most widely used.

Spin locking will lead to dead cycle. Blocking is not allowed during locking, so the critical area of locking is required to be small. Mutex allows the critical region to be blocked, which can be applied to the case of large critical region.