An In-Depth Understanding of synchronized (Part 1)

Thread-safety problems caused by the Java shared memory model

public class SyncDemo {

private static int count = 0;

public static void increment(){

count++;

}

public static void decrement(){

count--;

}

public static void main(String[] args) throws InterruptedException {

Thread t1 = new Thread(()->{

for (int i = 0; i < 5000; i++) {

increment();

}

},"t1");

Thread t2 = new Thread(() -> {

for (int i = 0; i < 5000; i++) {

decrement();

}

},"t2");

t1.start();

t2.start();

t1.join();

t2.join();

System.out.println(count);

}

}

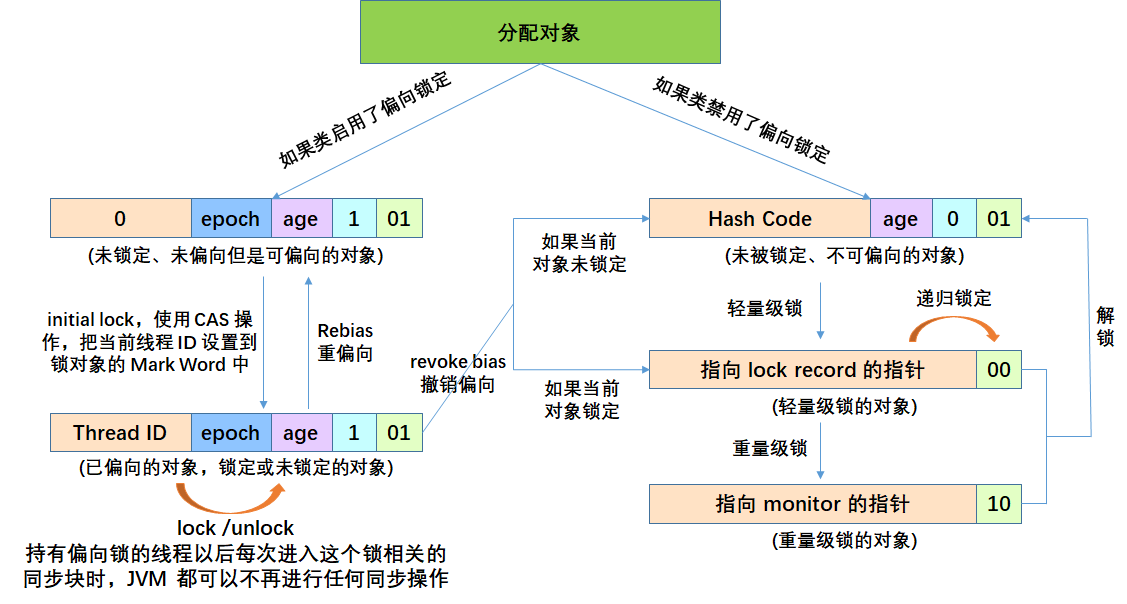

The result may be positive, negative, or zero. Why? Because incrementing and decrementing a static variable in Java are not atomic operations. We can inspect the JVM bytecode instructions for i++ and i-- (where i is a static variable); in IntelliJ IDEA, the jclasslib plug-in can be installed for this.

JVM bytecode instructions for i++:

getstatic i // Get the value of the static variable i

iconst_1 // Push the int constant 1 onto the operand stack

iadd // Add

putstatic i // Store the modified value back into the static variable i

JVM bytecode instructions for i--:

getstatic i // Get the value of the static variable i

iconst_1 // Push the int constant 1 onto the operand stack

isub // Subtract

putstatic i // Store the modified value back into the static variable i

In a single thread, the 8 instructions above execute in sequence and there is no problem. Under multithreading, however, the instructions of the two threads may interleave.
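To make the interleaving concrete, here is a minimal single-threaded sketch that replays one unlucky ordering of the eight instructions by hand. The class name LostUpdateDemo and the local variables t1Stack and t2Stack are hypothetical; they stand in for the operand stacks of the two threads and are not part of the original demo.

```java
public class LostUpdateDemo {

    // Stands in for the shared static variable from the article.
    static int count = 0;

    /** Replays one unlucky interleaving of count++ (t1) and count-- (t2), step by step. */
    static int replayInterleaving() {
        count = 0;

        int t1Stack = count;    // t1: getstatic        -> reads 0
        int t2Stack = count;    // t2: getstatic        -> also reads 0
        t1Stack = t1Stack + 1;  // t1: iconst_1, iadd   -> 1
        t2Stack = t2Stack - 1;  // t2: iconst_1, isub   -> -1
        count = t1Stack;        // t1: putstatic        -> count = 1
        count = t2Stack;        // t2: putstatic        -> count = -1, t1's update is lost

        return count;
    }

    public static void main(String[] args) {
        System.out.println(replayInterleaving()); // prints -1
    }
}
```

One increment and one decrement should cancel out, yet this ordering leaves -1. Swapping the last two stores would leave 1 instead, which is why the real program can print a positive, negative, or zero result.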

Critical Section

If a block of code contains multithreaded read and write operations on a shared resource, the block is called a critical section, and the shared resource is called a critical resource.

In the code above, increment() and decrement() are critical sections, and static int count is the critical resource.

Race Condition

When multiple threads execute in a critical section and the result cannot be predicted because the code may run in different orders, this is called a race condition.

There are several ways to avoid race conditions in a critical section:

- Blocking solution: synchronized, Lock

- Non-blocking solution: atomic variables (CAS)
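As an illustration of the non-blocking option, the counter from the opening example could be rewritten with AtomicInteger roughly as follows. This is a sketch, not the article's own code; the class name AtomicCounterDemo and the run() helper are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class AtomicCounterDemo {

    private static final AtomicInteger count = new AtomicInteger();

    /** Runs 5000 increments and 5000 decrements on two threads and returns the final value. */
    static int run() {
        count.set(0);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < 5000; i++) {
                count.incrementAndGet(); // atomic read-modify-write, no lock held
            }
        }, "t1");
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < 5000; i++) {
                count.decrementAndGet();
            }
        }, "t2");
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return count.get();
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints 0
    }
}
```

Neither thread ever blocks here: each update retries internally until its compare-and-swap succeeds.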

Use of synchronized

The synchronized block is a built-in lock provided by Java that guarantees atomicity. Every object in Java can be used as a synchronization lock. These locks, which Java users never see directly, are called built-in locks, also known as monitor locks.

Use synchronized to solve the problems in the above code.

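// A dedicated lock object; a String literal is interned and could be shared with unrelated code

private static final Object lock = new Object();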

public static void increment(){

synchronized (lock) {

count++;

}

}

public static void decrement(){

synchronized (lock) {

count--;

}

}

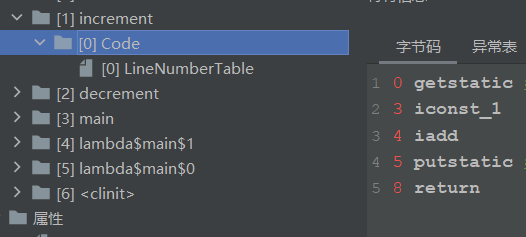

How synchronized works underneath

synchronized is a built-in JVM lock implemented on the Monitor mechanism, which relies on the mutex (Mutex) primitive of the underlying operating system. That makes it a heavyweight lock with relatively poor performance. The JVM's built-in lock was heavily optimized in versions after 1.5, however, with techniques such as Lock Coarsening, Lock Elimination, Lightweight Locking, Biased Locking, and Adaptive Spinning that reduce the overhead of lock operations. The concurrency performance of the built-in lock is now roughly on par with that of Lock.

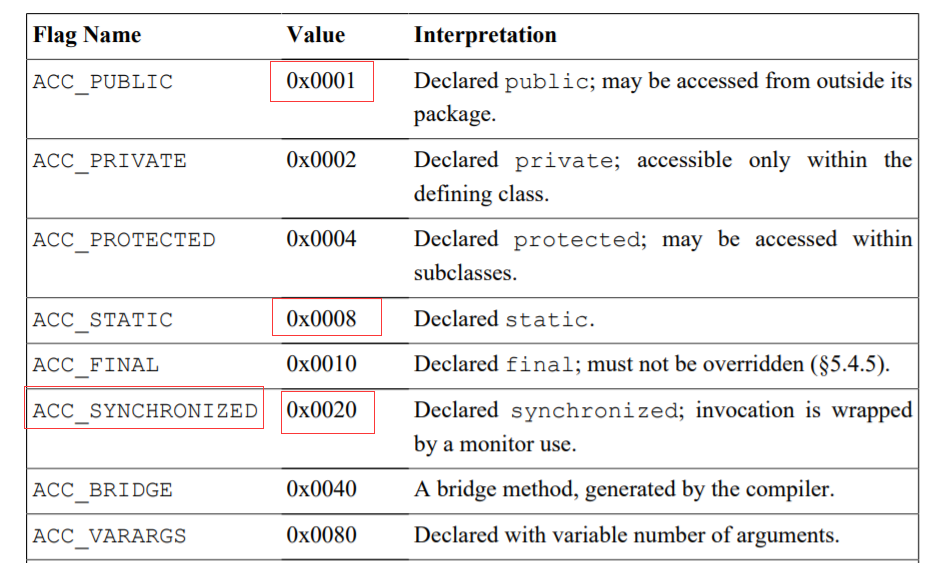

The Java virtual machine supports synchronization of both methods and instruction sequences within methods through a single synchronization construct: the monitor. A synchronized method is marked by the ACC_SYNCHRONIZED flag in the method's access_flags; a synchronized block is implemented with the monitorenter (lock) and monitorexit (unlock) instructions. The JVM ultimately carries out both instructions by calling the operating system's mutex primitive. A blocked thread is suspended to wait for rescheduling, which causes switches back and forth between user mode and kernel mode and has a significant impact on performance.

public static void increment(){

synchronized (lock) {

count++;

}

}

Bytecode instructions for the code above:

Why are there two monitorexit instructions? On the normal path, after the first monitorexit executes, a goto jumps to the return at line 24. If an exception occurs, the second monitorexit executes. With Lock, we have to call lock.unlock() ourselves in a finally block, but synchronized does not need this.

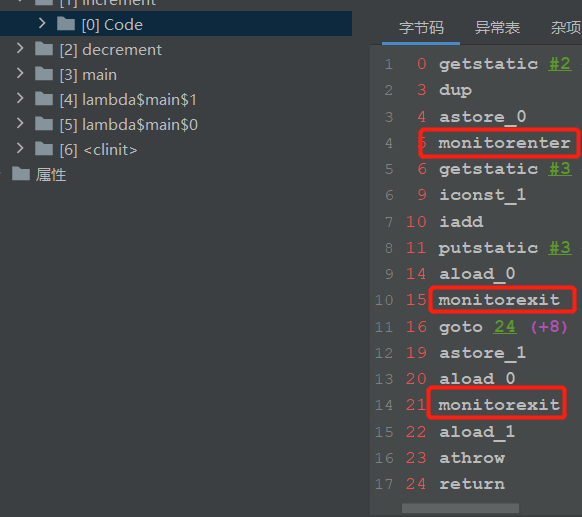
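The difference in cleanup can be sketched as follows: with ReentrantLock the unlock has to be written in a finally block, while a synchronized block releases its monitor on the exception path by itself (that is the job of the second monitorexit). The class name UnlockOnFailureDemo and its helper methods are hypothetical names used only for this illustration.

```java
import java.util.concurrent.locks.ReentrantLock;

public class UnlockOnFailureDemo {

    private static final ReentrantLock lock = new ReentrantLock();
    private static final Object monitor = new Object();

    static void failInsideLock() {
        lock.lock();
        try {
            throw new IllegalStateException("simulated failure");
        } finally {
            lock.unlock(); // without this, the lock would stay held after the exception
        }
    }

    static void failInsideSynchronized() {
        synchronized (monitor) {
            // No explicit cleanup: the compiler emits a monitorexit for the exception path.
            throw new IllegalStateException("simulated failure");
        }
    }

    /** Returns true if neither lock is still held after both methods have thrown. */
    static boolean bothReleasedAfterFailure() {
        try {
            failInsideLock();
        } catch (IllegalStateException expected) {
            // expected for this demo
        }
        try {
            failInsideSynchronized();
        } catch (IllegalStateException expected) {
            // expected for this demo
        }
        return !lock.isLocked() && !Thread.holdsLock(monitor);
    }

    public static void main(String[] args) {
        System.out.println(bothReleasedAfterFailure()); // prints true
    }
}
```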

public static synchronized void increment(){

count++;

}

When synchronized is applied to a method

There are no monitorenter and monitorexit instructions inside the method. Instead, the ACC_SYNCHRONIZED flag is set in the method's access_flags.

For public static synchronized, the flags add up to 0x0029 (ACC_PUBLIC 0x0001 + ACC_STATIC 0x0008 + ACC_SYNCHRONIZED 0x0020).
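The flag value can be checked without a bytecode viewer by reading the method's modifiers through reflection. The sketch below assumes a stand-in class named AccessFlagsDemo with the same public static synchronized increment() method; the helper incrementFlags() is hypothetical.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

public class AccessFlagsDemo {

    private static int count = 0;

    public static synchronized void increment() {
        count++;
    }

    /** Returns the modifier bits of increment() as reported by reflection. */
    static int incrementFlags() {
        try {
            Method m = AccessFlagsDemo.class.getDeclaredMethod("increment");
            return m.getModifiers();
        } catch (NoSuchMethodException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // 0x0001 (public) | 0x0008 (static) | 0x0020 (synchronized)
        int expected = Modifier.PUBLIC | Modifier.STATIC | Modifier.SYNCHRONIZED;
        System.out.printf("0x%04x 0x%04x%n", incrementFlags(), expected); // prints 0x0029 0x0029
    }
}
```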

Monitor

In operating systems, a monitor is a construct that manages shared variables, together with the operations on them, so that they can be used safely under concurrency. Before Java 1.5, the monitor was the only concurrency primitive the Java language provided, and the concurrency package added to the SDK in Java 1.5 is also built on monitors. Besides Java, C/C++, C#, and other high-level languages support monitors as well. The synchronized keyword and the three methods wait(), notify(), and notifyAll() are the building blocks of the monitor in Java.

MESA model

In the history of monitors, three different models have appeared: the Hansen model, the Hoare model, and the MESA model. The MESA model is the one widely used today. It is described below:

In the figure, the entry wait queue is easy to understand. The condition-variable wait queues mainly solve the thread synchronization problem.

For example, one thread enters the monitor and the other threads block in the entry wait queue. Suppose this thread now needs a result from another thread. It calls wait(), which puts it in the waiting state and releases the lock, so another thread can run. When that other thread finishes, it calls notifyAll() to wake the waiting thread. The awakened thread leaves the condition-variable wait queue and must acquire the lock again before it continues.

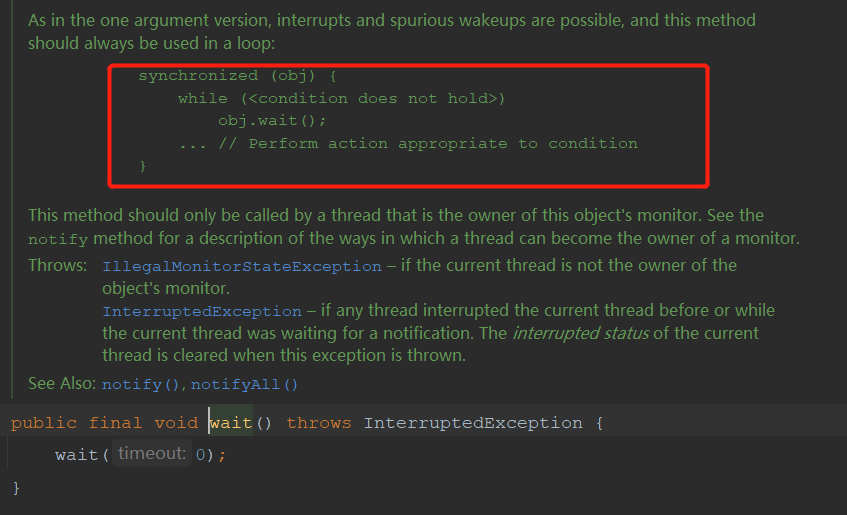

For MESA monitors there is a standard programming paradigm:

while(Conditions not met) {

wait();

}

The loop guards against spurious wakeups, for example when another thread calls notifyAll() while this thread's condition still does not hold. The moment a thread is woken and the moment it actually runs again are not the same, so by the time it runs the condition may no longer be satisfied; that is why the condition is checked in a loop. The wait() method in the MESA model also takes a timeout parameter, which keeps a thread that enters the wait queue from blocking forever.

This is mentioned in the Javadoc comment of the wait() method.
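A minimal sketch of this paradigm with Java's built-in monitor might look like the following. The class GuardedValueDemo, its field value, and the methods await() and publish() are hypothetical names chosen for this example.

```java
public class GuardedValueDemo {

    private final Object monitor = new Object();
    private String value; // guarded by monitor; null means "not ready yet"

    /** Blocks until a value has been published, re-checking the condition after every wakeup. */
    String await() throws InterruptedException {
        synchronized (monitor) {
            while (value == null) { // condition not met
                monitor.wait();     // releases the monitor while waiting
            }
            return value;
        }
    }

    /** Publishes the value and wakes every thread waiting on the monitor. */
    void publish(String v) {
        synchronized (monitor) {
            value = v;
            monitor.notifyAll();
        }
    }

    static String run() {
        GuardedValueDemo box = new GuardedValueDemo();
        Thread producer = new Thread(() -> box.publish("ready"), "producer");
        producer.start();
        try {
            return box.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints ready
    }
}
```

Because the condition is tested before the first wait(), the example also behaves correctly when the producer happens to run before the consumer starts waiting.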

When to use notify() and notifyAll()

notify() can be used when all three of the following conditions hold; in every other case, use notifyAll():

- All waiting threads wait on the same condition;

- All waiting threads perform the same operation after being woken;

- Only one thread needs to be woken.

Java's built-in monitor: synchronized

Java follows the MESA model, and the language's built-in synchronized is a simplified version of it. The MESA model allows multiple condition variables, whereas Java's built-in monitor has only one. The model is shown in the figure below.
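For contrast, the sketch below shows what multiple condition variables look like in Java when the explicit Lock API is used instead of the built-in monitor. It is an illustration only: the class TwoConditionQueue and its members are hypothetical, and ReentrantLock with two Condition objects is swapped in here precisely because synchronized offers a single implicit condition.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

public class TwoConditionQueue {

    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();  // producers wait here
    private final Condition notEmpty = lock.newCondition(); // consumers wait here
    private final Queue<Integer> items = new ArrayDeque<>();
    private final int capacity;

    TwoConditionQueue(int capacity) {
        this.capacity = capacity;
    }

    void put(int x) throws InterruptedException {
        lock.lock();
        try {
            while (items.size() == capacity) {
                notFull.await();
            }
            items.add(x);
            notEmpty.signal(); // wake one consumer only
        } finally {
            lock.unlock();
        }
    }

    int take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) {
                notEmpty.await();
            }
            int x = items.remove();
            notFull.signal(); // wake one producer only
            return x;
        } finally {
            lock.unlock();
        }
    }

    /** Passes 1..100 through a one-slot queue and returns the sum seen by the consumer. */
    static int run() {
        TwoConditionQueue q = new TwoConditionQueue(1);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= 100; i++) {
                    q.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "producer");
        producer.start();
        int sum = 0;
        try {
            for (int i = 0; i < 100; i++) {
                sum += q.take();
            }
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints 5050
    }
}
```

With the built-in monitor, producers and consumers would share one wait set, so notifyAll() would normally be needed to be sure the right kind of thread wakes up.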

Implementation of Monitor mechanism in Java

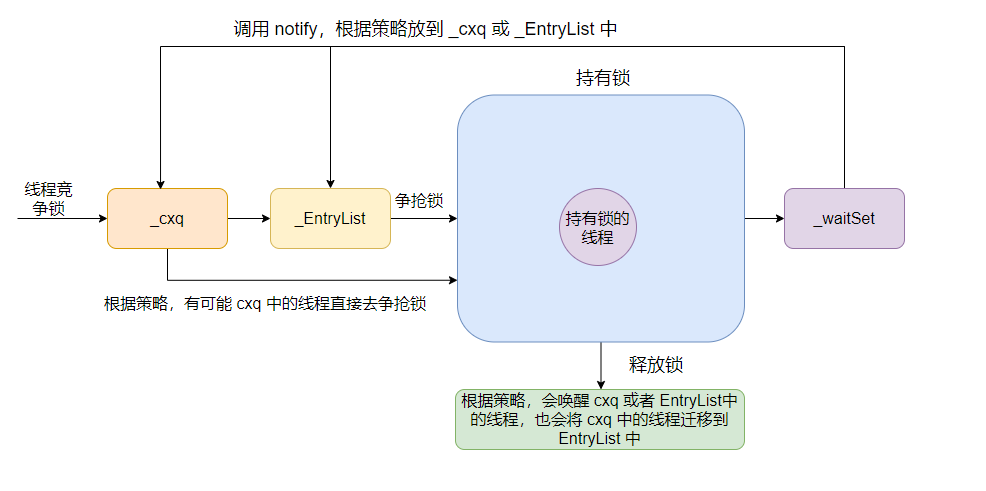

The java.lang.Object class defines the wait(), notify(), and notifyAll() methods. Their implementation relies on ObjectMonitor, a mechanism implemented in C++ inside the JVM.

The main data structure of ObjectMonitor is as follows (HotSpot source code, ObjectMonitor.hpp):

ObjectMonitor() {

_header = NULL; //Object header markOop

_count = 0;

_waiters = 0,

_recursions = 0; // Number of lock reentries

_object = NULL; //Store lock object

_owner = NULL; // Identify the thread that owns the monitor (the thread that currently acquires the lock)

_WaitSet = NULL; // Circular doubly linked list of waiting threads (those that called wait); _WaitSet is the first node

_WaitSetLock = 0 ;

_Responsible = NULL ;

_succ = NULL ;

_cxq = NULL ; // Threads contending for the lock are first placed in this singly linked list (a LIFO stack)

FreeNext = NULL ;

_EntryList = NULL ; // Threads blocked on entry or re-entry (also holds threads that failed to acquire the lock)

_SpinFreq = 0 ;

_SpinClock = 0 ;

OwnerIsThread = 0 ;

_previous_owner_tid = 0;

}

The important fields: _owner points to thread 1 while thread 1 holds the lock. _cxq is the linked list a thread enters after it fails to acquire the lock. _WaitSet holds threads that called wait() while holding the lock; once woken, such a thread may enter _cxq or _EntryList, depending on the policy.

When a thread tries to acquire the lock, it is inserted at the head of _cxq. When the lock is released, the default policy (QMode=0) is: if _EntryList is empty, move the elements of _cxq into _EntryList in their existing order and wake the first thread. In other words, when _EntryList is empty, the thread that arrived later acquires the lock first. If _EntryList is not empty, a thread is woken directly from _EntryList.

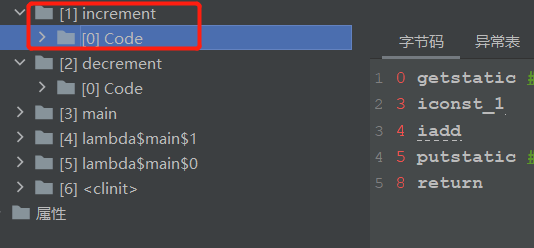
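The default policy described above can be mimicked with a small toy model. This is only a sketch of the ordering behaviour, not how HotSpot implements it; the class CxqModel, its two deques, and the thread names T1 to T3 are all hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;

public class CxqModel {

    private final Deque<String> cxq = new ArrayDeque<>();       // head = most recent arrival
    private final Deque<String> entryList = new ArrayDeque<>();

    /** A thread that fails to get the lock is pushed onto the head of cxq. */
    void contend(String thread) {
        cxq.addFirst(thread);
    }

    /** Models a release under the default policy: refill EntryList from cxq only when it is empty. */
    String release() {
        if (entryList.isEmpty()) {
            while (!cxq.isEmpty()) {
                entryList.addLast(cxq.pollFirst()); // keep cxq's head-to-tail order
            }
        }
        return entryList.pollFirst(); // the thread to wake, or null if nobody is waiting
    }

    static String run() {
        CxqModel m = new CxqModel();
        m.contend("T1");
        m.contend("T2");
        m.contend("T3");
        return m.release() + "," + m.release() + "," + m.release();
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints T3,T2,T1
    }
}
```

T3 arrived last but is woken first, which matches the statement that the later thread gets the lock first when the entry list starts out empty.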

Lock identification in object

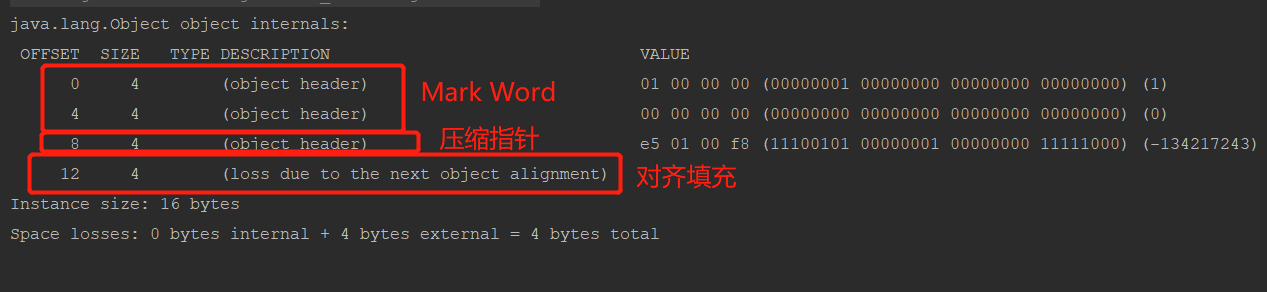

Object's memory layout

synchronized locks are placed on objects. How does the lock object record its lock state?

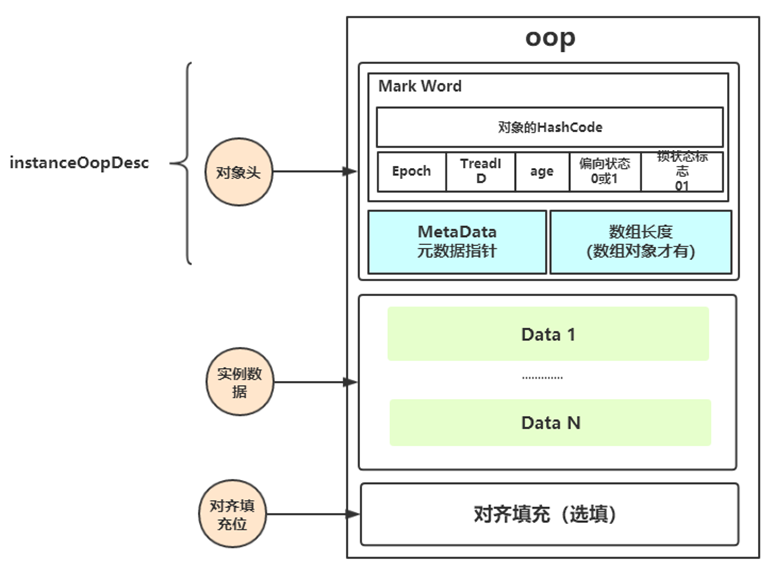

In the HotSpot virtual machine, the layout of an object in memory is divided into three areas: the object header (Header), instance data (Instance Data), and alignment padding (Padding).

- Object header: holds, for example, the hash code, the object's GC age, the lock state flag, the biased lock (thread) ID, the bias timestamp, and the array length (array objects only).

- Instance data: stores the object's field data, including fields inherited from parent classes.

- Alignment padding: the virtual machine requires an object's starting address to be a multiple of 8 bytes. Padding does not have to be present; it exists only for byte alignment.

This part will be covered in the JVM topic.
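The effect of the 8-byte alignment rule on object size can be shown with a little arithmetic. The sketch below is illustrative only: the class AlignmentDemo is hypothetical, and the sizes passed in (such as 12 bytes) are example inputs, not measurements of any particular JVM.

```java
public class AlignmentDemo {

    static final int ALIGNMENT = 8; // objects are aligned to multiples of 8 bytes

    /** Rounds a size in bytes up to the next multiple of ALIGNMENT. */
    static long alignUp(long size) {
        return (size + ALIGNMENT - 1) & ~(long) (ALIGNMENT - 1);
    }

    /** Number of padding bytes needed to reach the aligned size. */
    static long padding(long size) {
        return alignUp(size) - size;
    }

    public static void main(String[] args) {
        System.out.println(alignUp(12));  // prints 16
        System.out.println(padding(12));  // prints 4
        System.out.println(padding(16));  // prints 0 (already aligned, no padding needed)
    }
}
```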

Back to the earlier question: since synchronized locks an object, how does the object record the lock state? The lock state is recorded in the Mark Word in each object's header.

How does the object header record the lock status

The object header has three parts: the Mark Word, the Klass Pointer, and the array length (array objects only).

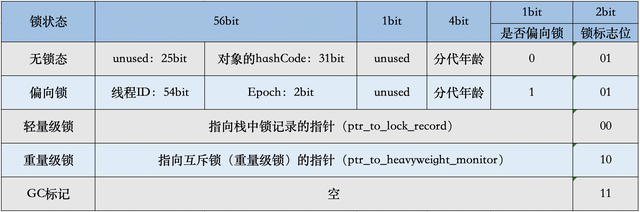

Mark Word: stores the object's own runtime data, such as the hash code, GC generational age, lock state flag, the lock held by a thread, the biased thread ID, and the bias timestamp. This part is 32 bits long on a 32-bit virtual machine and 64 bits long on a 64-bit one, and is officially called the "Mark Word".

The other two parts are analyzed in the JVM topic.

A recommended tool is JOL (Java Object Layout), which shows the internal layout of ordinary Java objects. With it you can inspect the layout of a newly created object and see how many bytes it occupies. Add the Maven dependency:

<!-- Java Object Layout: inspect object layout and size -->

<dependency>

<groupId>org.openjdk.jol</groupId>

<artifactId>jol-core</artifactId>

<version>0.10</version>

</dependency>

Test:

public static void main(String[] args) {

Object obj = new Object();

//View object internal information

System.out.println(ClassLayout.parseInstance(obj).toPrintable());

}

Result:

OFFSET: offset address, in bytes;

SIZE: occupied memory size, in bytes;

TYPE DESCRIPTION: type description, where object header denotes the object header;

VALUE: the value currently stored in memory, shown as 32 binary bits;

Important fields of the Mark Word:

hash: stores the object's hash code. It is computed lazily at runtime by calling System.identityHashCode(), and the result is stored here.

age: stores the object's generational age, that is, the number of GCs it has survived. When the count reaches the threshold, the object is moved to the old generation.

biased_lock: the biased lock flag bit. Because the lock flag for both the lock-free and the biased state is 01, the two cannot be told apart from it alone, so a one-bit biased lock flag is added.

lock: the lock state flag bits, which distinguish the lock states. For example, 11 means the object is marked for collection by the GC, in which case only the last two bits (11) are meaningful.

JavaThread*: stores the ID of the thread holding the biased lock. In biased mode, when a thread acquires the object, this field is set to that thread's ID, and later acquisitions by the same thread need no further locking attempt. This thread ID is not the thread ID number assigned by the JVM, and it is a different concept from the ID in Java's Thread.

epoch: stores the bias timestamp. During the CAS lock operation of a biased lock, it serves as the bias marker that indicates which lock the object is biased toward.

Object structure under a 64-bit JVM

- ptr_to_lock_record: in the lightweight lock state, a pointer to the lock record on the stack. When lock acquisition is uncontended, the JVM uses atomic operations instead of OS mutexes; this technique is called lightweight locking. Under lightweight locking, the JVM uses a CAS operation to install a pointer to the lock record in the object's Mark Word.

- ptr_to_heavyweight_monitor: in the heavyweight lock state, a pointer to the object monitor. If two different threads contend for the same object at the same time, the lightweight lock must be upgraded to a monitor to manage the waiting threads. Under heavyweight locking, the JVM stores a pointer to the monitor in the object's ptr_to_heavyweight_monitor field.

Going by the lock flag bits in the figure above: because both the lock-free state and the biased lock use 01, one extra bit is needed to tell whether the lock is biased. The flag bits for each state are:

Lock-free: 001

Biased lock: 101

Lightweight lock: 00

Heavyweight lock: 10

Lock tag enumeration in Mark Word:

enum {

locked_value = 0, // 00 lightweight lock

unlocked_value = 1, // 001 lock-free

monitor_value = 2, // 10 monitor lock, also known as inflated lock or heavyweight lock

marked_value = 3, // 11 GC mark

biased_lock_pattern = 5 // 101 biased lock

};
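To practice reading these bit patterns, the low three bits of a Mark Word value can be decoded with a small helper. This is a sketch under the flag assignments listed above; the class MarkWordBits, the method lockState(), and the sample values are hypothetical and are not read from a real object header.

```java
public class MarkWordBits {

    /** Decodes the lock state from the low bits of a mark word value. */
    static String lockState(long markWord) {
        int lockBits = (int) (markWord & 0b11); // the two lock flag bits
        switch (lockBits) {
            case 0b00:
                return "lightweight";
            case 0b10:
                return "heavyweight";
            case 0b11:
                return "gc-marked";
            default:
                // lock bits are 01: the third bit tells biased (101) from lock-free (001)
                return (markWord & 0b100) != 0 ? "biased" : "unlocked";
        }
    }

    public static void main(String[] args) {
        System.out.println(lockState(0x1L));    // prints unlocked
        System.out.println(lockState(0x5L));    // prints biased
        System.out.println(lockState(0x7f00L)); // prints lightweight
        System.out.println(lockState(0x7f02L)); // prints heavyweight
    }
}
```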

More intuitive way to understand:

Use the JOL tool to track lock mark changes

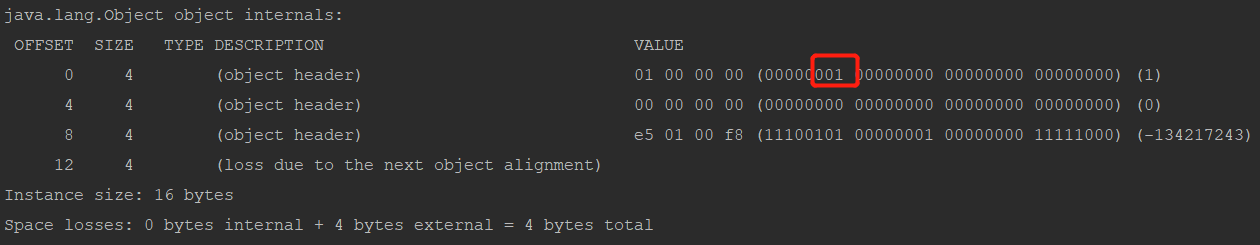

Here again is the code we used earlier to test the JOL tool:

public static void main(String[] args) {

Object obj = new Object();

//View object internal information

System.out.println(ClassLayout.parseInstance(obj).toPrintable());

}

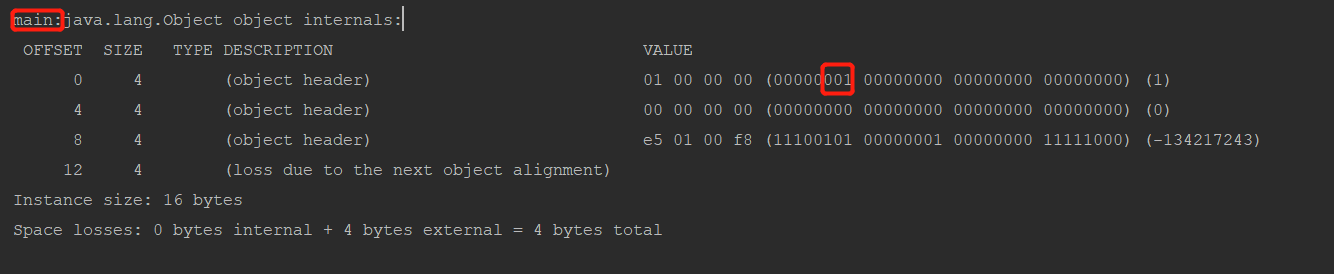

result:

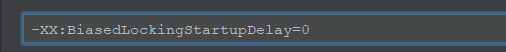

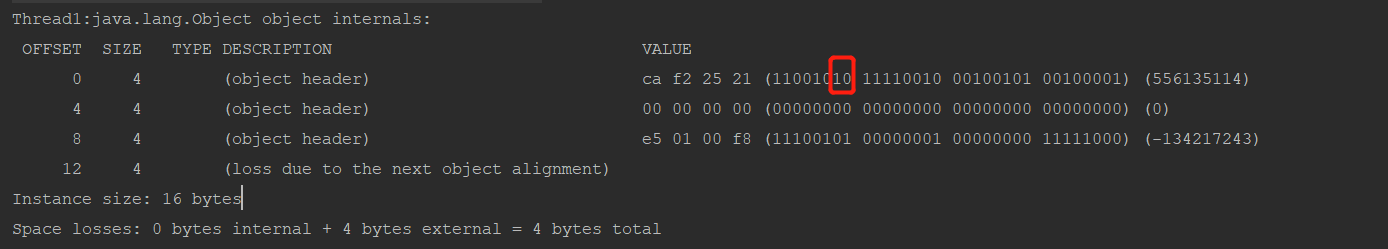

Look at the digits 001 in the red box. These are the lock flag bits. They correspond to the 001 mentioned above, which means lock-free.

The above code is modified to

public static void main(String[] args) {

Object obj = new Object();

//View object internal information

new Thread(()->{

synchronized (obj) {

System.out.println(Thread.currentThread().getName()+":"+ClassLayout.parseInstance(obj).toPrintable());

}

},"Thread1").start();

}

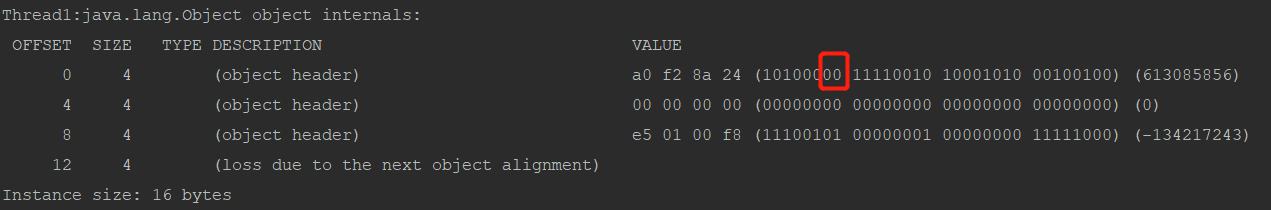

00 indicates a lightweight lock. The remaining bits, including the three bytes that follow, hold the address of the lock record on the stack of the thread holding the lock.

After JDK 1.5, synchronized was optimized and the concepts of biased lock and lightweight lock were introduced. So why is this not a biased lock?

This involves a concept called biased lock delay. Before explaining it, let's first look at what a biased lock is.

Biased lock

A biased lock is an optimization of the locking operation. Research found that in most cases a lock not only sees no multi-thread contention but is acquired repeatedly by the same thread. Biased locking was therefore introduced to eliminate the cost of uncontended lock re-acquisition (the CAS operation). Where there is no lock contention, biased locking is a very effective optimization.

/***StringBuffer Internal synchronization***/

public synchronized int length() {

return count;

}

// System.out.println also takes a lock without the caller noticing

public void println(String x) {

synchronized (this) {

print(x);

newLine();

}

}

Methods we use all the time, such as System.out.println and StringBuffer's append, contain synchronized. Biased locking was introduced to avoid the performance cost of locking when a single thread uses them without contention.

Biased lock delay

Biased lock mode has a delay mechanism: the HotSpot virtual machine enables biased lock mode for newly created objects only after a delay of 4 s. JVM startup involves a series of complex activities, such as loading configuration and initializing system classes. These use the synchronized keyword heavily to lock objects, and most of those locks are not biased locks. To reduce initialization time, the JVM delays enabling biased locks by default. Note that biased locking was disabled by default and deprecated in JDK 15, so the flags below apply to earlier JDK versions.

// Disable the biased lock startup delay

-XX:BiasedLockingStartupDelay=0

// Disable biased locking

-XX:-UseBiasedLocking

// Enable biased locking

-XX:+UseBiasedLocking

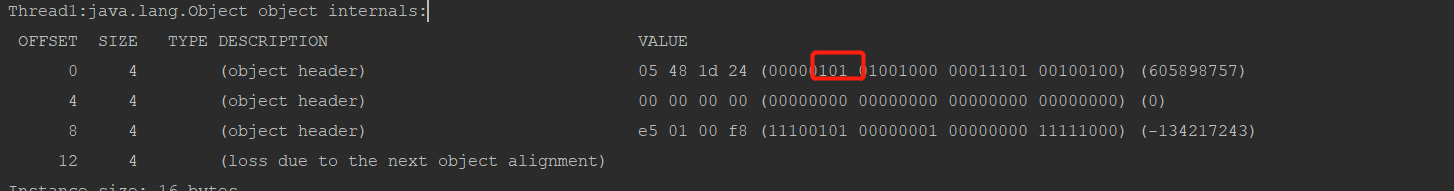

We turn off the biased lock delay:

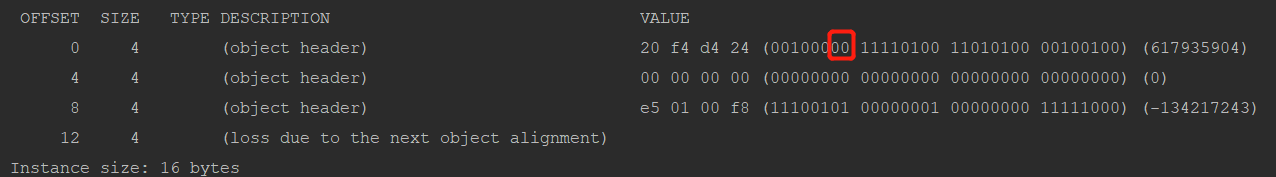

Execute the above code again:

Now go back to the default, that is, no extra JVM parameters, and modify the code above before running it:

public static void main(String[] args) throws InterruptedException {

Thread.sleep(5000);

Object obj = new Object();

//View object internal information

new Thread(()->{

synchronized (obj) {

System.out.println(Thread.currentThread().getName()+":"+ClassLayout.parseInstance(obj).toPrintable());

}

},"Thread1").start();

}

101 is the biased lock flag, which confirms the biased lock delay.

Biased lock revocation and upgrade

A question: if hashCode is called on the object, is biased lock mode still used?

public static void main(String[] args) throws InterruptedException {

Thread.sleep(5000);

Object obj = new Object();

obj.hashCode();

//View object internal information

new Thread(()->{

synchronized (obj) {

System.out.println(Thread.currentThread().getName()+":"+ClassLayout.parseInstance(obj).toPrintable());

}

},"Thread1").start();

}

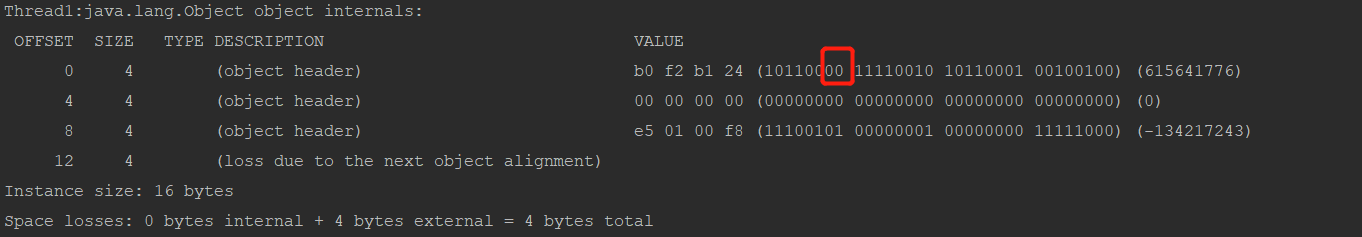

When hashCode has been called on the lock object, the biased lock becomes a lightweight lock. Why? Refer to the diagram of the object structure under a 64-bit JVM.

Calling obj.hashCode() or System.identityHashCode(obj) on the lock object revokes the object's biased lock. An object's hash code is generated and saved only once, and the biased lock layout has no place to store it.

- A lightweight lock records the hashCode in the lock record

- A heavyweight lock records the hashCode in the Monitor

Consider another case. Above, hashCode was called before synchronized, while the object was still biasable; once synchronized is entered, the object is in the biased state. What happens if hashCode is called when the object is already biased?

public static void main(String[] args) throws InterruptedException {

Thread.sleep(5000);

Object obj = new Object();

//View object internal information

new Thread(()->{

synchronized (obj) {

obj.hashCode();

System.out.println(Thread.currentThread().getName()+":"+ClassLayout.parseInstance(obj).toPrintable());

}

},"Thread1").start();

}

The biased lock is upgraded directly to a heavyweight lock.

Conclusion: whether the object is biasable (that is, the thread ID is 0) or already biased, computing its hash code makes it no longer biasable:

- When the object is biasable, the Mark Word becomes lock-free, and the lock can only be upgraded to a lightweight lock;

- When the object is already biased, calling hashCode forces the biased lock to be upgraded to a heavyweight lock.

Now consider what happens if wait or notify is called in the biased state:

public static void main(String[] args) throws InterruptedException {

Thread.sleep(5000);

Object obj = new Object();

//View object internal information

new Thread(() -> {

synchronized (obj) {

try {

obj.wait(100);

} catch (InterruptedException e) {

e.printStackTrace();

}

System.out.println(Thread.currentThread().getName() + ":" + ClassLayout.parseInstance(obj).toPrintable());

}

}, "Thread1").start();

}

The lock is upgraded directly to a heavyweight lock. As mentioned earlier, the synchronized keyword and wait(), notify(), and notifyAll() are implemented on the Monitor mechanism, so the biased lock is upgraded directly to a heavyweight lock.

Now let's look at notify():

public static void main(String[] args) throws InterruptedException {

Thread.sleep(5000);

Object obj = new Object();

//View object internal information

new Thread(() -> {

synchronized (obj) {

obj.notify();

System.out.println(Thread.currentThread().getName() + ":" + ClassLayout.parseInstance(obj).toPrintable());

}

}, "Thread1").start();

}

After notify() is called, the biased lock is upgraded to a lightweight lock. It is odd that wait() upgrades to a heavyweight lock while notify() upgrades to a lightweight one. I do not know how the JVM implements this underneath.

So when a biased lock is revoked, the result of the upgrade is not fixed: it may be a lightweight lock or a heavyweight lock.

To summarize, the following lead to the revocation and upgrade of a biased lock:

- Calling the hashCode method

- Calling the wait() or notify() method

Lightweight Locking

When a biased lock fails, the virtual machine does not upgrade to a heavyweight lock right away. It first tries an optimization called the lightweight lock, and the Mark Word takes on the lightweight lock structure. Lightweight locks suit the scenario where threads execute the synchronized block alternately. If multiple threads access the same lock at the same time, the lightweight lock inflates into a heavyweight lock.

package com.tuling.jucdemo.test;

import org.openjdk.jol.info.ClassLayout;

public class test1 {

public static void main(String[] args) throws InterruptedException {

Thread.sleep(5000);

Object obj = new Object();

//View object internal information

Thread thread1 = new Thread(() -> {

synchronized (obj) {

System.out.println(Thread.currentThread().getName() + ":" + ClassLayout.parseInstance(obj).toPrintable());

}

}, "Thread1");

Thread thread2 = new Thread(() -> {

synchronized (obj) {

System.out.println(Thread.currentThread().getName() + ":" + ClassLayout.parseInstance(obj).toPrintable());

}

}, "Thread2");

thread1.start();

thread1.join();

thread2.start();

thread2.join();

System.out.println(Thread.currentThread().getName() + ":" + ClassLayout.parseInstance(obj).toPrintable());

}

}

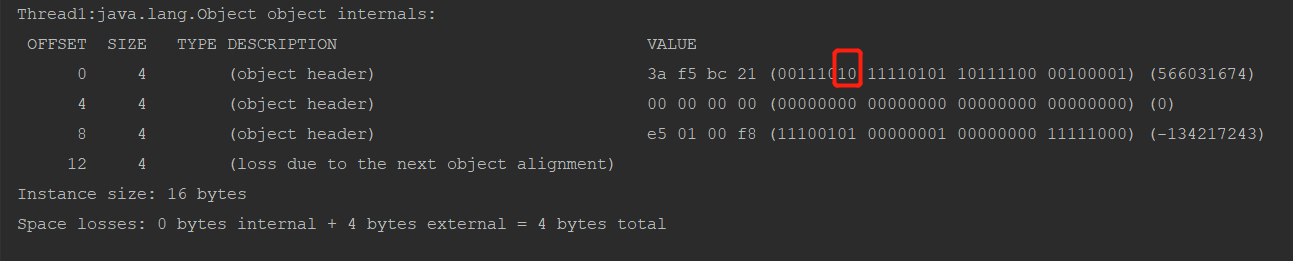

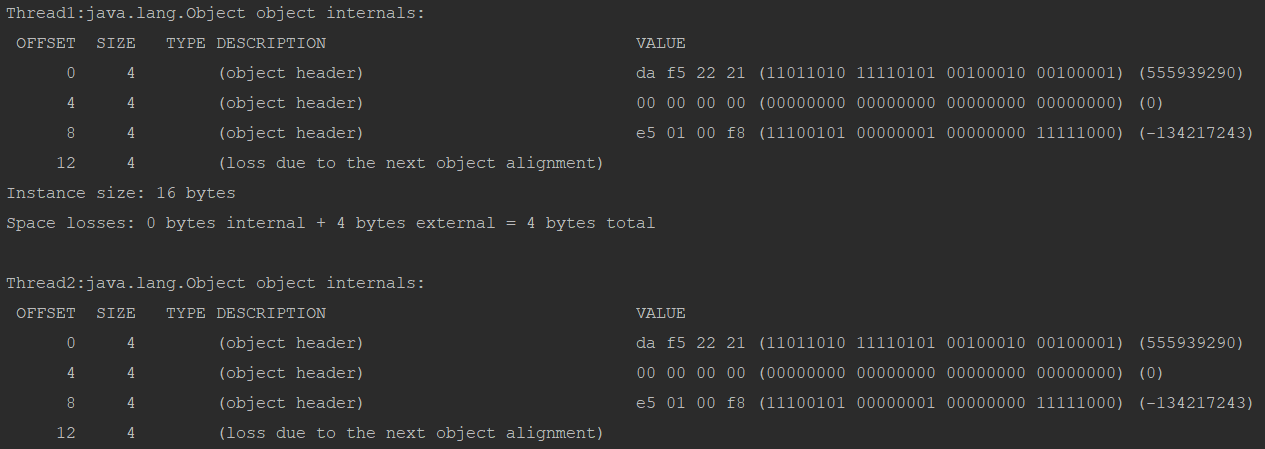

Result:

The lock goes from biased to lightweight and then back to lock-free. This is not the only possible outcome, though: across multiple runs, the observed lock states are not necessarily the same.

A lightweight lock is upgraded to a heavyweight lock when multiple threads access the resource at the same time:

public static void main(String[] args) throws InterruptedException {

Thread.sleep(5000);

Object obj = new Object();

//View object internal information

Thread thread1 = new Thread(() -> {

synchronized (obj) {

System.out.println(Thread.currentThread().getName() + ":" + ClassLayout.parseInstance(obj).toPrintable());

}

}, "Thread1");

Thread thread2 = new Thread(() -> {

synchronized (obj) {

System.out.println(Thread.currentThread().getName() + ":" + ClassLayout.parseInstance(obj).toPrintable());

}

}, "Thread2");

thread1.start();

thread2.start();

}

Two more questions:

- Can a lightweight lock be downgraded to a biased lock?

Yes. As mentioned before, biased locking has a startup delay. A lock acquired during the delay is a lightweight lock; after the delay (4 s by default), the lock becomes a biased lock. Refer to the example of tracking lock flag changes with the JOL tool.

- After a heavyweight lock is released, the object becomes lock-free. If a new thread then enters the synchronized block, what lock does it get?

A biased lock, a lightweight lock, or a heavyweight lock are all possible.

Summary

For synchronized, biased and lightweight locks operate on the object header. Without the synchronized keyword, the object is lock-free and the lock flag in the header is 001. With synchronized and no concurrency, the lock is biased and the flag is 101. Under light contention, the flag is 00 and the lock is still handled entirely in user mode. Under high concurrency, the lock is upgraded to a heavyweight lock, which is managed through the Monitor mechanism and involves a transition from user mode to kernel mode.

Lock object state transition