1. Creating network namespaces with the Linux kernel

1.1 ip netns command

The ip netns command handles most operations on network namespaces. It is part of the iproute package (iproute2 on Debian and Ubuntu), which most distributions install by default; if it is missing, install it with your package manager.

Note: ip netns subcommands that change the network configuration need root privileges, so run them as root or through sudo.

To see the available subcommands, run ip netns help:

[root@localhost ~]# ip netns help  // ip subcommands print their usage with "help" rather than "--help"

Usage: ip netns list  // list the named network namespaces

ip netns add NAME  // create a named namespace

ip netns attach NAME PID  // give the name NAME to the network namespace that process PID is already in

ip netns set NAME NETNSID  // assign an ID (nsid) to namespace NAME

ip [-all] netns delete [NAME]  // delete the named namespace; with -all, delete all of them

ip netns identify [PID]  // print the name of the namespace that PID belongs to (default: the current process)

ip netns pids NAME  // list the PIDs of the processes in namespace NAME (found by scanning /proc)

ip [-all] netns exec [NAME] cmd ...  // run cmd inside namespace NAME, e.g. ip netns exec ns1 ip a; with -all, run it in every namespace

ip netns monitor  // print a notification whenever a namespace is added or deleted

ip netns list-id [target-nsid POSITIVE-INT] [nsid POSITIVE-INT]  // list namespace IDs (nsids)

NETNSID := auto | POSITIVE-INT

1.2 creating a Network Namespace

Create a namespace named ns1

[root@localhost ~]# ip netns list  // list namespaces (there are none yet)

[root@localhost ~]# ip netns add ns1  // add a namespace; ns1 is its name, and any name can be used

[root@localhost ~]# ip netns list

ns1

The new namespace appears as a file in the /var/run/netns/ directory. If a namespace with the same name already exists, ip netns add fails with the error Cannot create namespace file "/var/run/netns/ns1": File exists.

[root@localhost ~]# ls /var/run/netns/

ns1

[root@localhost ~]# ip netns add ns1

Cannot create namespace file "/var/run/netns/ns1": File exists

// You may wonder whether a namespace can be created by hand, without ip netns. Let's try it:

[root@localhost netns]# pwd

/var/run/netns

[root@localhost netns]# ls

ns1

[root@localhost netns]# touch ns2

[root@localhost netns]# ll

total 0

-r--r--r-- 1 root root 0 Dec 5 18:23 ns1

-rw-r--r-- 1 root root 0 Dec 5 18:33 ns2

[root@localhost netns]# chmod u-w ns2

[root@localhost netns]# ll

total 0

-r--r--r-- 1 root root 0 Dec 5 18:23 ns1

-r--r--r-- 1 root root 0 Dec 5 18:33 ns2

[root@localhost netns]# ip netns ls  // ns2 is listed, but ip reports errors because it is not a real namespace

Error: Peer netns reference is invalid.

Error: Peer netns reference is invalid.

ns2

ns1

[root@localhost netns]# rm -f ns2

[root@localhost netns]# ip netns list

ns1

An ordinary file is not enough. ip netns add creates the file and then bind-mounts the new namespace onto it, so a file created with touch does not refer to any namespace.
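ip netns add refuses to overwrite an existing name, so a script that may run more than once can check /var/run/netns first. The bash sketch below is only an illustration. The helpers ensure_netns and run and the variables NETNS_DIR and DRY_RUN are hypothetical names; setting DRY_RUN=1 prints the commands instead of executing them. As the ns2 experiment shows, a file in that directory does not prove that a working namespace exists, so this check is a convenience, not a guarantee.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration only.
# Directory where ip netns keeps its named namespaces (overridable, e.g. for testing).
NETNS_DIR="${NETNS_DIR:-/var/run/netns}"

# run CMD...: print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# ensure_netns NAME: create the network namespace NAME unless its file already exists.
ensure_netns() {
  local name="$1"
  if [ -e "$NETNS_DIR/$name" ]; then
    echo "namespace $name already exists"
    return 0
  fi
  run ip netns add "$name"
}
```

Run it as root, for example ensure_netns ns1. To preview the command without root, use DRY_RUN=1 ensure_netns ns1.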

1.3 operating in a Network Namespace

The ip netns exec subcommand runs a command inside the specified network namespace.

// View the interfaces of the newly created namespace

[root@localhost netns]# ip netns exec ns1 ip a  // runs ip a inside ns1

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000  // lo exists but is DOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

A newly created network namespace contains only the loopback interface lo, and that interface is down. Pinging the loopback address therefore fails:

[root@localhost netns]# ip netns exec ns1 ping -c 3 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

--- 127.0.0.1 ping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 2056ms

// Bring the lo interface up

[root@localhost netns]# ip netns exec ns1 ip link set lo up

[root@localhost netns]# ip netns exec ns1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

[root@localhost netns]# ip netns exec ns1 ping -c 3 127.0.0.1  // -c 3: send three packets, then exit

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.035 ms

64 bytes from 127.0.0.1: icmp_seq=2 ttl=64 time=0.087 ms

64 bytes from 127.0.0.1: icmp_seq=3 ttl=64 time=0.063 ms

--- 127.0.0.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2062ms

rtt min/avg/max/mdev = 0.035/0.061/0.087/0.023 ms
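This section has two steps: bring lo up, then check it with ping. Both can be bundled into one helper. This is a hedged bash sketch. netns_lo_up, run and DRY_RUN are hypothetical names. The -W 1 option (wait at most one second for a reply) is an iputils ping option.

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN is set, otherwise execute it (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# netns_lo_up NS: bring up the loopback interface in namespace NS and check that it answers.
netns_lo_up() {
  local ns="$1"
  run ip netns exec "$ns" ip link set lo up
  # -c 1: send one probe; -W 1: wait at most one second for the reply
  run ip netns exec "$ns" ping -c 1 -W 1 127.0.0.1
}
```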

1.4 moving devices between namespaces

Devices such as veth interfaces can be moved from one network namespace to another. A device belongs to exactly one network namespace at a time, so once it has been moved it is no longer visible in the namespace it came from.

veth devices can be moved this way, but many other devices (such as lo, vxlan, ppp and bridge) cannot. To check a specific device, run ethtool -k DEVICE: a device that reports netns-local: on cannot be moved.

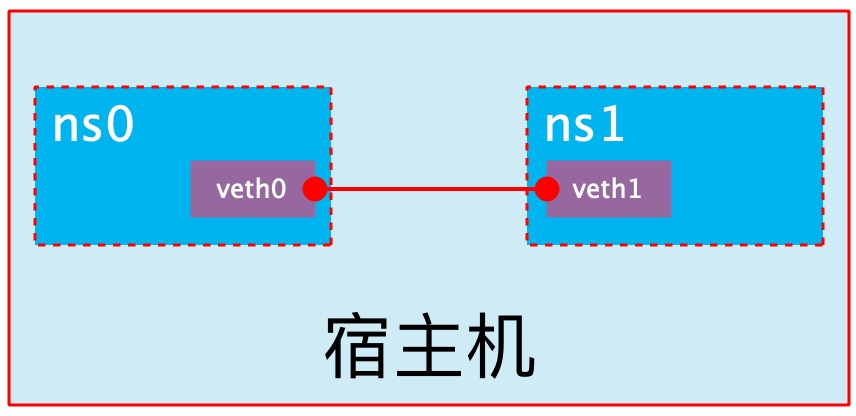

1.5 veth pair

A veth pair (Virtual Ethernet pair) is a pair of connected virtual Ethernet interfaces. Every packet sent into one end comes out of the other end, and vice versa.

veth pairs are used to connect network namespaces directly. Put one end in each namespace, and the two namespaces can exchange packets over that link.

1.6 creating a veth pair

// Right now there are only three interfaces

[root@localhost ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:5f:b4:28 brd ff:ff:ff:ff:ff:ff

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:35:05:b8:22 brd ff:ff:ff:ff:ff:ff

[root@localhost ~]# ip link add type veth  // create a veth pair; without explicit names the kernel names the ends veth0 and veth1 (the next free numbers)

[root@localhost ~]# ip link show  // a new veth pair, veth0 and veth1, has been added; both ends are still DOWN

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:5f:b4:28 brd ff:ff:ff:ff:ff:ff

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:35:05:b8:22 brd ff:ff:ff:ff:ff:ff

4: veth0@veth1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether e2:df:23:a3:37:e0 brd ff:ff:ff:ff:ff:ff

5: veth1@veth0: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether ca:d4:ee:ed:ce:86 brd ff:ff:ff:ff:ff:ff
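ip link add type veth leaves the naming to the kernel. Naming both ends explicitly with the peer keyword makes scripts predictable. This minimal bash sketch assumes root privileges. make_veth_pair, run and DRY_RUN are hypothetical names, and the example names veth-a and veth-b are arbitrary.

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN is set, otherwise execute it (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# make_veth_pair A B: create a veth pair whose two ends are named A and B.
make_veth_pair() {
  local a="$1" b="$2"
  run ip link add "$a" type veth peer name "$b"
  # Show both ends; a new pair starts in the DOWN state.
  run ip link show "$a"
  run ip link show "$b"
}
```

For example, make_veth_pair veth-a veth-b creates the pair, and ip link del veth-a removes both ends again.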

1.7 connecting network namespaces

Next, we use the veth pair to connect two network namespaces. We already created ns1 above. Now create a second namespace named ns2:

[root@localhost ~]# ip netns add ns2

[root@localhost ~]# ip netns list

ns2

ns1

First, move veth0 into ns1 and keep veth1 on the host for a quick test:

[root@localhost ~]# ip link set veth0 netns ns1

[root@localhost ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

4: veth0@if5: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether e2:df:23:a3:37:e0 brd ff:ff:ff:ff:ff:ff link-netnsid 0

// First test with veth1 on the host

[root@localhost ~]# ip addr add 192.168.2.2/24 dev veth1  // assign an IP address to veth1 on the host

Then bring veth0 up inside ns1 and assign it an address:

[root@localhost ~]# ip netns exec ns1 ip link set veth0 up

[root@localhost ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

4: veth0@if5: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether e2:df:23:a3:37:e0 brd ff:ff:ff:ff:ff:ff link-netns ns2

[root@localhost ~]# ip netns exec ns1 ip addr add 192.168.2.1/24 dev veth0

[root@localhost ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

4: veth0@if5: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether e2:df:23:a3:37:e0 brd ff:ff:ff:ff:ff:ff link-netns ns2

inet 192.168.2.1/24 scope global veth0

valid_lft forever preferred_lft forever

[root@localhost ~]# ip link set veth1 up  // bring up veth1 on the host

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:5f:b4:28 brd ff:ff:ff:ff:ff:ff

inet 192.168.182.150/24 brd 192.168.182.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe5f:b428/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:35:05:b8:22 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

7: veth1@if6: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 2e:31:0c:42:29:6e brd ff:ff:ff:ff:ff:ff link-netns ns1

inet 192.168.2.2/24 scope global veth1

valid_lft forever preferred_lft forever

// Test connectivity from the host to veth0 in ns1

[root@localhost ~]# ping -c2 192.168.2.1

PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.

64 bytes from 192.168.2.1: icmp_seq=1 ttl=64 time=0.113 ms

64 bytes from 192.168.2.1: icmp_seq=2 ttl=64 time=0.083 ms

--- 192.168.2.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1021ms

rtt min/avg/max/mdev = 0.083/0.098/0.113/0.015 ms

Next, connect ns1 to ns2 instead of the host. First bring up lo in ns2:

[root@localhost ~]# ip netns exec ns2 ip link show

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

[root@localhost ~]# ip netns exec ns2 ip link set lo up

Move veth1 from the host into ns2:

[root@localhost ~]# ip link set veth1 netns ns2

Check veth1 inside ns2. The address assigned to it on the host is gone. Moving an interface into another namespace removes its IP addresses and leaves it DOWN, because each namespace has its own independent network configuration. veth1 therefore has to be configured again inside ns2.

[root@localhost ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

7: veth1@if6: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 2e:31:0c:42:29:6e brd ff:ff:ff:ff:ff:ff link-netns ns1

[root@localhost ~]# ip netns exec ns2 ip link set veth1 up  // bring veth1 up

[root@localhost ~]# ip netns exec ns2 ip addr add 192.168.2.2/24 dev veth1  // assign the IP address again

[root@localhost ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

7: veth1@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 2e:31:0c:42:29:6e brd ff:ff:ff:ff:ff:ff link-netns ns1

inet 192.168.2.2/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::2c31:cff:fe42:296e/64 scope link

valid_lft forever preferred_lft forever

// From ns2, ping veth0 in ns1

[root@localhost ~]# ip netns exec ns2 ping -c 2 192.168.2.1  // -c 2: send two packets, then exit

PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.

64 bytes from 192.168.2.1: icmp_seq=1 ttl=64 time=0.084 ms

64 bytes from 192.168.2.1: icmp_seq=2 ttl=64 time=0.082 ms

--- 192.168.2.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1045ms

rtt min/avg/max/mdev = 0.082/0.083/0.084/0.001 ms
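This section's procedure can be condensed into one function: create the pair, move one end into each namespace, then address and enable both ends. The bash sketch assumes that ns1 and ns2 already exist and that no interface named veth0 or veth1 exists on the host yet. connect_netns, run and DRY_RUN are hypothetical names. Addresses are assigned only after the move, because moving an interface clears its addresses.

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN is set, otherwise execute it (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# connect_netns NS1 NS2 ADDR1 ADDR2: link two existing namespaces with a veth pair
# (veth0 in NS1, veth1 in NS2) and give each end an address in CIDR form.
connect_netns() {
  local ns1="$1" ns2="$2" addr1="$3" addr2="$4"
  run ip link add veth0 type veth peer name veth1
  # Move each end into its namespace; this clears addresses and leaves the link DOWN.
  run ip link set veth0 netns "$ns1"
  run ip link set veth1 netns "$ns2"
  # Configure and enable each end from inside its own namespace.
  run ip netns exec "$ns1" ip addr add "$addr1" dev veth0
  run ip netns exec "$ns1" ip link set veth0 up
  run ip netns exec "$ns2" ip addr add "$addr2" dev veth1
  run ip netns exec "$ns2" ip link set veth1 up
}
```

For example, connect_netns ns1 ns2 192.168.2.1/24 192.168.2.2/24 followed by ip netns exec ns2 ping -c 2 192.168.2.1 should reproduce the result above.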

1.8 veth device renaming

[root@localhost ~]# ip netns exec ns1 ip link set veth0 down  // bring veth0 down first; renaming an interface that is up usually fails with "Device or resource busy"

[root@localhost ~]# ip netns exec ns1 ip link set dev veth0 name eth0

[root@localhost ~]# ip netns exec ns1 ip link set eth0 up  // bring it back up

[root@localhost ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 1e:2a:ff:f9:96:e0 brd ff:ff:ff:ff:ff:ff link-netns ns2

inet 192.168.2.1/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::1c2a:ffff:fef9:96e0/64 scope link

valid_lft forever preferred_lft forever

// Rename veth1 in ns2 the same way

[root@localhost ~]# ip netns exec ns2 ip link set veth1 down

[root@localhost ~]# ip netns exec ns2 ip link set dev veth1 name eth0

[root@localhost ~]# ip netns exec ns2 ip link set eth0 up

[root@localhost ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

7: eth0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 2e:31:0c:42:29:6e brd ff:ff:ff:ff:ff:ff link-netns ns1

inet 192.168.2.2/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::2c31:cff:fe42:296e/64 scope link

valid_lft forever preferred_lft forever
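The down, rename, up sequence used twice above can be wrapped in a helper. This is a hedged bash sketch; rename_in_netns, run and DRY_RUN are hypothetical names. As the output above shows, the interface keeps its IP address across the rename.

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN is set, otherwise execute it (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# rename_in_netns NS OLD NEW: rename interface OLD to NEW inside namespace NS.
rename_in_netns() {
  local ns="$1" old="$2" new="$3"
  run ip netns exec "$ns" ip link set "$old" down   # renaming usually requires the link to be down
  run ip netns exec "$ns" ip link set dev "$old" name "$new"
  run ip netns exec "$ns" ip link set "$new" up
}
```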

2. Configuring Docker's four network modes

2.1 bridge mode configuration (default mode)

// bridge is the default mode, so the two commands below give the same result: each container gets an eth0 with an address in docker0's 172.17.0.0/16 subnet

[root@localhost ~]# docker run -it --rm --network bridge busybox

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

10: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@localhost ~]# docker run -it --rm busybox

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever
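You can also ask Docker directly which subnet and gateway the default bridge network uses, without starting containers. This is a hedged bash sketch. show_bridge_info, run and DRY_RUN are hypothetical names; the -f Go-template option of docker network inspect is standard. The subnet printed should match the 172.17.x.x addresses seen above.

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN is set, otherwise execute it (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# show_bridge_info [NETWORK]: print "subnet gateway" for a Docker network
# (default: the built-in "bridge" network used when no --network is given).
show_bridge_info() {
  local net="${1:-bridge}"
  run docker network inspect -f '{{range .IPAM.Config}}{{.Subnet}} {{.Gateway}}{{end}}' "$net"
}
```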

2.2 none mode configuration

[root@localhost ~]# docker run -it --rm --network none busybox  // the container gets only the lo interface

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2.3 container mode configuration

Start the first container

[root@localhost ~]# docker run -it --rm busybox

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

14: eth0@if15: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Start the second container

[root@localhost ~]# docker run -it --rm busybox

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

16: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

The second container received 172.17.0.3, a different address from the first container, so the two do not share a network. If we instead start the second container with --network container: followed by the first container's ID, it joins the first container's network namespace. Both containers then have the same IP address, while their file systems stay separate.

[root@localhost ~]# docker run -it --rm --network container:20e6ef4032fa busybox  // 20e6ef4032fa is the first container's ID; the new container shows the same eth0 and IP address

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

14: eth0@if15: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Now create a directory in the first container:

/ # mkdir -p /opt/123

In the second container, /opt does not exist. The file systems are still isolated; only the network is shared:

/ # ls /opt

ls: /opt: No such file or directory

Deploy a small site in the second container:

/ # echo 'linux' > /var/www/index.html

/ # /bin/httpd -f -h /var/www/  // -f keeps httpd in the foreground, so open another shell to check it

[root@localhost ~]# docker exec -it bbbd1d60444a /bin/sh  // enter the second container

/ # netstat -anlt  // view the listening port

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 :::80 :::* LISTEN

From the first container, request the site through the loopback address:

/ # wget -O - -q 127.0.0.1

linux

// Containers that share a network namespace in container mode reach each other over 127.0.0.1, just like two processes on the same host.
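Container mode also accepts a container name instead of an ID, which is easier to script. The bash sketch below is an illustration built on assumptions, not the procedure used above. The container name netdemo-a and the helpers share_net_demo, run and DRY_RUN are hypothetical, and the sketch relies on busybox's httpd and wget as the transcript does. The short sleep gives httpd time to start before the second container connects.

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN is set, otherwise execute it (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# share_net_demo: start a web server container, reach it over 127.0.0.1 from a
# second container that joins its network namespace, then clean up.
share_net_demo() {
  # First container: write a page and serve /var/www with busybox httpd in the foreground.
  run docker run -d --name netdemo-a busybox \
    sh -c "echo linux > /var/www/index.html && httpd -f -h /var/www"
  run sleep 1   # give httpd a moment to start listening
  # Second container shares netdemo-a's network stack, so 127.0.0.1:80 is the same server.
  run docker run --rm --network container:netdemo-a busybox wget -q -O - 127.0.0.1
  run docker rm -f netdemo-a
}
```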

2.4 host mode configuration

// Specify --network host when starting the container

[root@localhost ~]# docker run -it --rm --network host busybox  // the container uses the host's network stack and interfaces directly

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel qlen 1000

link/ether 00:0c:29:5f:b4:28 brd ff:ff:ff:ff:ff:ff

inet 192.168.182.150/24 brd 192.168.182.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe5f:b428/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue

link/ether 02:42:35:05:b8:22 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:35ff:fe05:b822/64 scope link

valid_lft forever preferred_lft forever

/ # echo 'RHCAS' > /var/www/index.html

/ # /bin/httpd -h /var/www/

/ # netstat -anlt

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN

tcp 0 0 192.168.182.150:22 192.168.182.1:53545 ESTABLISHED

tcp 0 0 192.168.182.150:22 192.168.182.1:53648 ESTABLISHED

tcp 0 0 192.168.182.150:22 192.168.182.1:64580 ESTABLISHED

tcp 0 0 192.168.182.150:22 192.168.182.1:64579 ESTABLISHED

tcp 0 0 :::80 :::* LISTEN

tcp 0 0 :::22 :::* LISTEN

[root@localhost ~]# curl 192.168.182.150

RHCAS

3. Common container operations

3.1 viewing the host name of the container

[root@localhost ~]# docker run -it --rm busybox

/ # hostname

5993c4638ee9  // by default the host name is the container's short ID

3.2 setting the host name when the container starts

[root@localhost ~]# docker run -it --rm --hostname admin busybox

/ # hostname

admin

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 admin  // Docker adds a mapping from the injected host name to the container's IP

/ # cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 192.168.182.2  // by default the container uses the host's DNS server

/ # ping -c1 baidu.com

PING baidu.com (220.181.38.251): 56 data bytes

64 bytes from 220.181.38.251: seq=0 ttl=127 time=29.478 ms

--- baidu.com ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 29.478/29.478/29.478 ms

3.3 specifying the DNS server used by the container

[root@localhost ~]# docker run -it --rm --hostname tom --dns 114.114.114.114 busybox

/ # cat /etc/resolv.conf

nameserver 114.114.114.114

3.4 adding host-name-to-IP mappings to /etc/hosts

[root@localhost ~]# docker run -it --rm --hostname admin --add-host www.test.com:100.1.1.1 busybox

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

100.1.1.1 www.test.com  // entry added by --add-host

172.17.0.2 admin

3.5 publishing container ports

The -p option of docker run maps ports used by an application in the container to ports on the host. External hosts can then reach the application by connecting to the host's port.

The -p option can be given multiple times. The container port it publishes should be one that the application in the container actually listens on. Docker does not check this, but connections to a port that nothing listens on will fail.

The -p option accepts the following forms:

- `-p <containerPort>`: map the container port to a dynamic port on all of the host's addresses

- `-p <hostPort>:<containerPort>`: map the container port to the specified host port on all of the host's addresses

- `-p <ip>::<containerPort>`: map the container port to a dynamic port on the specified host IP address

- `-p <ip>:<hostPort>:<containerPort>`: map the container port to the specified port on the specified host IP address

A dynamic port is a free host port that Docker picks automatically. Use the docker port command to see which port was actually assigned.
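To make the four forms concrete, the bash helper below splits a -p value on colons and reports which host address and port it would use. describe_publish is hypothetical and not part of Docker. The sketch handles only single IPv4 ports, optionally followed by /tcp or /udp; port ranges and IPv6 addresses are not covered.

```shell
#!/usr/bin/env bash
# describe_publish SPEC: explain a docker run -p value (hypothetical helper, IPv4 only).
describe_publish() {
  local spec="${1%/*}"          # drop an optional /tcp or /udp suffix
  local ip="" host="" ctr=""
  local -a parts
  # Split on ':'; an empty middle field (as in IP::PORT) is kept as "".
  IFS=':' read -r -a parts <<< "$spec"
  case "${#parts[@]}" in
    1) ctr="${parts[0]}" ;;                                         # containerPort
    2) host="${parts[0]}"; ctr="${parts[1]}" ;;                     # hostPort:containerPort
    3) ip="${parts[0]}"; host="${parts[1]}"; ctr="${parts[2]}" ;;   # ip:[hostPort]:containerPort
    *) echo "unsupported spec: $1" >&2; return 1 ;;
  esac
  echo "container port ${ctr} -> host port ${host:-dynamic} on ${ip:-all addresses}"
}
```

For example, describe_publish 192.168.182.150::80 prints container port 80 -> host port dynamic on 192.168.182.150.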

[root@localhost ~]# docker run -d --name web --rm -p 80 httpd  // -d runs the container in the background; only the container port is given, so port 80 is mapped to a dynamic host port

// docker ps shows that container port 80 is published on host port 49153; requesting that port on the host reaches the site in the container

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

fd294d368bd6 httpd "httpd-foreground" 11 seconds ago Up 9 seconds 0.0.0.0:49153->80/tcp, :::49153->80/tcp web

[root@localhost ~]# curl 192.168.182.150:49153

<html><body><h1>It works!</h1></body></html>

// Method 1:

[root@localhost ~]# docker run -d --name web --rm -p 80:80 httpd  // host port first, container port second: host port 80 -> container port 80

07b4f20fc0a9aed9df32c81f064d8e1d5fd7009a0c3afd28be4eb2383c948abf

[root@localhost ~]# curl 192.168.182.150

<html><body><h1>It works!</h1></body></html>

// Method 2:

[root@localhost ~]# docker run -d --name web --rm -p 996:80 httpd  // map container port 80 to a host port of your choice, here 996

84cb7b0bc47c5401ed44d0758b60c062dd390a2b6f152f8b3ea776f07dd3b182

[root@localhost ~]# docker port web

80/tcp -> 0.0.0.0:996

80/tcp -> :::996

[root@localhost ~]# curl 192.168.182.150:996

<html><body><h1>It works!</h1></body></html>

// Method 3:

[root@localhost ~]# docker run -d --name web --rm -p 192.168.182.150::80 httpd  // map container port 80 to a dynamic port (here 49153) on 192.168.182.150 only; the site is reachable only through that address

dbe29d63cf6a5f3c70db059f5bfd3cd5d1c84f40f31403b046e136e470e42c6a

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

dbe29d63cf6a httpd "httpd-foreground" 8 seconds ago Up 7 seconds 192.168.182.150:49153->80/tcp web

[root@localhost ~]# curl 192.168.182.150:49153

<html><body><h1>It works!</h1></body></html>

// Method 4:

[root@localhost ~]# docker run -d --name web --rm -p 127.0.0.1:80:80 httpd  // map container port 80 to port 80 on 127.0.0.1 only

9cb016186b08a944b55b3bf4bbdebb66c9dc70d880a3aa6f33d15c87dae00b18

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9cb016186b08 httpd "httpd-foreground" 9 seconds ago Up 7 seconds 127.0.0.1:80->80/tcp web

[root@localhost ~]# curl 127.0.0.1

<html><body><h1>It works!</h1></body></html>

Docker creates the matching iptables NAT rules automatically when a container with published ports starts. It removes them when the container stops or is removed.

// Publish container port 80 on port 80 of 127.0.0.1 only

[root@localhost ~]# docker run -d --name web --rm -p 127.0.0.1:80:80 httpd

907277a6d75ec4435427cc2c4aca0bff9d6a69b83eb39ea7a100b609d5cebfd6

// View port mapping

[root@localhost ~]# docker port web

80/tcp -> 127.0.0.1:80

[root@localhost ~]# iptables -t nat -nvL  // view the NAT table; note the DNAT rule for 127.0.0.1:80 in the DOCKER chain

Chain PREROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

5 260 DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

3 194 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

0 0 MASQUERADE tcp -- * * 172.17.0.2 172.17.0.2 tcp dpt:80

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

6 360 DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain DOCKER (2 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

0 0 DNAT tcp -- !docker0 * 0.0.0.0/0 127.0.0.1 tcp dpt:80 to:172.17.0.2:80

[root@localhost ~]# docker run -d --name web -p 80:80 httpd

df6243779bab274b209ea3f4962a738c02eae085e25a98e1cd7ce5e3814955d0

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

df6243779bab httpd "httpd-foreground" 20 seconds ago Up 18 seconds 0.0.0.0:80->80/tcp, :::80->80/tcp web

[root@localhost ~]# iptables -t nat -nvL

Chain PREROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

5 260 DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

3 194 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

0 0 MASQUERADE tcp -- * * 172.17.0.2 172.17.0.2 tcp dpt:80

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

6 360 DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain DOCKER (2 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

0 0 DNAT tcp -- !docker0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:80 to:172.17.0.2:80

[root@localhost ~]# docker stop web  // stop the container

web

[root@localhost ~]# docker ps -a | grep web

df6243779bab httpd "httpd-foreground" 3 minutes ago Exited (0) About a minute ago web

[root@localhost ~]# iptables -t nat -nvL  // the MASQUERADE and DNAT rules for port 80 are gone

Chain PREROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

5 260 DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

3 194 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

6 360 DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain DOCKER (2 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

3.6 customizing the network settings of the docker0 bridge

To customize the network settings of the docker0 bridge, edit the /etc/docker/daemon.json configuration file (create it if it does not exist).

The official Docker documentation on the daemon configuration file lists all of the available options.

// The key option is bip (bridge IP). It sets the IP address and prefix length of the docker0 bridge itself; the container subnet and the other settings can be derived from this address.

[root@localhost docker]# pwd

/etc/docker

[root@localhost docker]# cat daemon.json

{

"bip": "192.168.1.1/24"

}

[root@localhost docker]# systemctl restart docker.service  // restart the Docker service

[root@localhost docker]# docker start web  // containers stop when the Docker service restarts (unless live-restore keeps them running or a restart policy starts them again), so start web again

[root@localhost docker]# docker inspect web | grep -w IPAddress

"IPAddress": "192.168.1.2",

"IPAddress": "192.168.1.2",

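// Switch back to the 172.17.0.1 address. Docker's built-in default is 172.17.0.1/16 (as seen on docker0 earlier), so removing the bip key or using 172.17.0.1/16 restores the exact default. The /24 value below keeps the address but uses a smaller subnet.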

[root@localhost docker]# cat daemon.json

{

"bip": "172.17.0.1/24"

}

[root@localhost docker]# systemctl restart docker.service  // restart the service to apply the change

[root@localhost docker]# ip a | grep -w docker0 | grep inet | awk -F '[ /]+' '{print $3}'

172.17.0.1
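Editing daemon.json by hand works fine; if you switch bip often, the edit can be scripted. This is a minimal sketch that assumes daemon.json should contain nothing but bip: `write_bip_config` is a hypothetical helper, and because it rewrites the whole file, any other keys (such as hosts) would be lost, so a JSON-aware tool is the safer choice when the file holds more settings. The change still only takes effect after restarting docker.service.

```shell
#!/bin/sh
# Hypothetical helper: write a daemon.json that sets only "bip".
# WARNING: this overwrites the whole file, so any other keys are lost.
write_bip_config() {
  local bip conf
  bip=$1
  conf=${2:-/etc/docker/daemon.json}   # optional second argument: target file
  case $bip in
    */*) ;;                            # bip must be in CIDR form, e.g. 192.168.1.1/24
    *) echo "bip must look like A.B.C.D/N" >&2; return 1 ;;
  esac
  printf '{\n  "bip": "%s"\n}\n' "$bip" > "$conf"
}

# Usage (as root), followed by a restart so dockerd re-reads the file:
#   write_bip_config 192.168.1.1/24
#   systemctl restart docker.service
```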

3.7 docker remote connection

Docker uses a client/server (C/S) model, and by default the dockerd daemon listens only on a Unix socket (/var/run/docker.sock). To also accept TCP connections, add the following entry to the /etc/docker/daemon.json configuration file and then restart the docker service:

"hosts": ["tcp://0.0.0.0:2375", "unix:///var/run/docker.sock"]

On the client, pass the -H|--host option to the docker command to choose which host's daemon to control:

docker -H 192.168.10.145:2375 ps

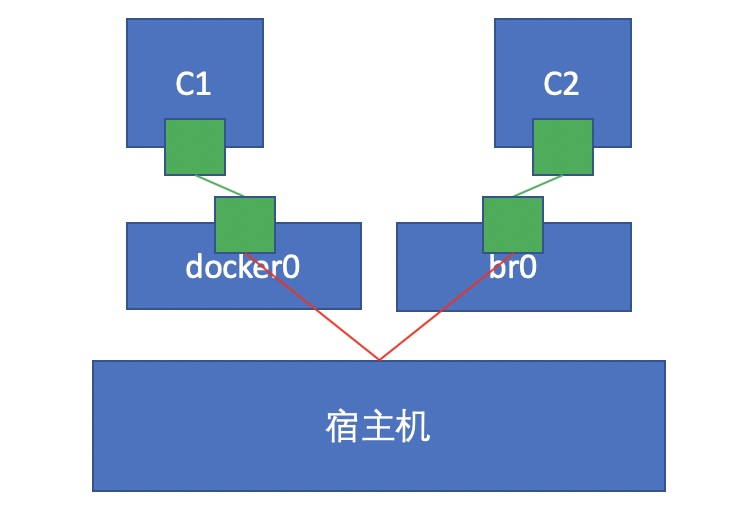
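Two cautions about the TCP setup above, plus a client-side shortcut. A plain TCP socket on port 2375 has no TLS and no authentication, so anyone who can reach the port can control the daemon; keep it on a trusted network. Also, on many systemd-based installs the docker.service unit already starts dockerd with `-H fd://`, and dockerd refuses to start when hosts is set both as a flag and in daemon.json, so the `-H` flag has to be removed from the unit (for example with a drop-in) first. On the client, the DOCKER_HOST environment variable is an alternative to passing -H every time. The sketch below only builds that value: `docker_host_url` is a hypothetical helper, it assumes an IPv4 address or hostname (not IPv6), and it defaults to port 2375.

```shell
#!/bin/sh
# Hypothetical helper: turn "host" or "host:port" into a DOCKER_HOST value.
# Assumes an IPv4 address or hostname; 2375 is the conventional plain-TCP port.
docker_host_url() {
  case $1 in
    tcp://*) echo "$1" ;;            # already a full URL
    *:*)     echo "tcp://$1" ;;      # host:port given
    *)       echo "tcp://$1:2375" ;; # host only: assume the default port
  esac
}

# Usage: equivalent to `docker -H 192.168.10.145:2375 ps`
#   DOCKER_HOST=$(docker_host_url 192.168.10.145) docker ps
```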

3.8 creating a custom docker bridge

Create an additional custom bridge, separate from docker0.

[root@localhost ~]# docker network ls    // list the networks and their drivers (network modes)

NETWORK ID NAME DRIVER SCOPE

c20dbcdb1e57 bridge bridge local

981fad12ece5 host host local

98d506fcdbf1 none null local

[root@localhost ~]# docker network create -d bridge --subnet '192.168.2.0/24' --gateway '192.168.2.1' br0    // -d sets the driver (bridge), --subnet the network segment, --gateway the gateway address (192.168.2.1), and br0 is the network name

593afb169be7c90484a1e633ebc1913ac9501383e6931a77f59c3b5685210e89

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

593afb169be7 br0 bridge local

c20dbcdb1e57 bridge bridge local

981fad12ece5 host host local

98d506fcdbf1 none null local
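When picking a --subnet for a new bridge, it should not overlap docker0 (172.17.0.0/16 here) or any other existing network; Docker normally rejects a subnet that overlaps one it already manages. The check itself is simple: two CIDR blocks overlap exactly when they agree on the bits of the shorter prefix. A minimal sketch; `cidr_overlap` and `ip_to_int` are hypothetical helpers (IPv4 only).

```shell
#!/bin/sh
# Hypothetical helpers for illustration (IPv4 only).
ip_to_int() { local IFS=.; set -- $1; echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 )); }

# Returns 0 (true) if the CIDR blocks $1 and $2 share any address.
cidr_overlap() {
  local a b pa pb p mask
  a=$(ip_to_int "${1%/*}"); pa=${1#*/}
  b=$(ip_to_int "${2%/*}"); pb=${2#*/}
  p=$(( pa < pb ? pa : pb ))                       # compare on the shorter prefix
  mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Usage: check br0's subnet against docker0's before creating it
#   cidr_overlap 192.168.2.0/24 172.17.0.0/16 || echo "no overlap"
```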

// Create a container using the newly created custom bridge:

[root@localhost ~]# docker run -it --rm --network br0 busybox

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

45: eth0@if46: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:c0:a8:02:02 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.2/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

// Create another container and use the default bridge:

[root@localhost ~]# docker run -it --rm busybox

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

47: eth0@if48: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Comparing the two, the custom bridge and the default bridge use different network segments, so the two containers cannot reach each other directly. How can they communicate?

We can connect the container on the default bridge to br0 as well, so that it gets a second interface on the br0 network.
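Why a second interface helps: before the connect, the container on the default bridge only has eth0 in 172.17.0.0/16, so a 192.168.2.x address is not on any of its links and is sent to the default gateway on docker0, where Docker's isolation rules normally drop traffic between different bridge networks. After `docker network connect`, the new eth1 in 192.168.2.0/24 puts br0's containers on-link. The sketch mimics that decision in a simplified way (it takes the first matching subnet rather than doing the kernel's longest-prefix match over the full routing table); `direct_iface` and `ip_to_int` are hypothetical helpers, and the interface data is taken from the ip a output in this section.

```shell
#!/bin/sh
# Hypothetical helpers for illustration (IPv4 only).
ip_to_int() { local IFS=.; set -- $1; echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 )); }

# Print the first interface (given as name=addr/prefix) whose subnet contains
# the destination $1, i.e. one it can reach without a gateway.
direct_iface() {
  local dst spec iface cidr p mask net
  dst=$(ip_to_int "$1"); shift
  for spec in "$@"; do
    iface=${spec%%=*}            # text before "=" is the interface name
    cidr=${spec#*=}              # text after "=" is addr/prefix
    p=${cidr#*/}
    mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
    net=$(ip_to_int "${cidr%/*}")
    if [ $(( dst & mask )) -eq $(( net & mask )) ]; then
      echo "$iface"
      return 0
    fi
  done
  return 1                       # no on-link match: traffic goes via the gateway
}
```

With the addresses from this section, `direct_iface 192.168.2.2 eth0=172.17.0.2/16 eth1=192.168.2.3/24` prints eth1, while 192.168.182.2 matches neither interface.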

[root@localhost ~]# docker network connect br0 7d1f75c55c1d

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

47: eth0@if48: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

49: eth1@if50: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:c0:a8:02:03 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.3/24 brd 192.168.2.255 scope global eth1

valid_lft forever preferred_lft forever

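/ # ping -c2 192.168.182.2    // note: this address is outside br0's 192.168.2.0/24 and ttl=127 shows the reply was routed; to test br0 itself, ping 192.168.2.2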

PING 192.168.182.2 (192.168.182.2): 56 data bytes

64 bytes from 192.168.182.2: seq=0 ttl=127 time=0.363 ms

64 bytes from 192.168.182.2: seq=1 ttl=127 time=0.241 ms

--- 192.168.182.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.241/0.302/0.363 ms

[root@localhost ~]# docker network connect bridge da6a9c90c609    // da6a9c90c609 is the ID of the container on br0; this also attaches it to the default bridge network

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

45: eth0@if46: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:c0:a8:02:02 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.2/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

51: eth1@if52: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth1

valid_lft forever preferred_lft forever

/ # ping -c2 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.245 ms

64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.107 ms

--- 172.17.0.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.107/0.176/0.245 ms
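To script this connectivity check instead of reading the ping statistics by eye, the packet-loss figure can be pulled out of the summary line. A minimal sketch: `packet_loss` is a hypothetical helper, and its sed pattern assumes the "N% packet loss" wording shown above (BusyBox), which iputils ping also uses.

```shell
#!/bin/sh
# Hypothetical helper: read ping output on stdin and print the packet-loss
# percentage from the summary line, e.g. "... 0% packet loss".
packet_loss() {
  sed -n 's/.* \([0-9][0-9.]*\)% packet loss.*/\1/p'
}

# Usage inside the container: prints 0 when every reply arrives
#   ping -c2 172.17.0.2 | packet_loss
```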