1. The following directive goes in the http block. When the proxied server returns a 404 status code, we redirect the visitor to Baidu.

```nginx
error_page 404 https://www.baidu.com; # Error page
```

However, careful readers will notice that this alone has no effect on responses coming from the proxied server.

To make it work, it must be combined with the following directive:

```nginx
proxy_intercept_errors on; # Intercept proxied responses with status 300 or higher so that a matching error_page handles them. Default: off
```
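Here is a minimal sketch of how the two directives fit together. It assumes an upstream group named `mysvr`, borrowed from the load balancing examples later on, and a catch-all location. Adapt both to your setup. Because the error_page target is a URL, nginx answers the client with a 302 redirect to that address.

```nginx
# Hypothetical upstream group (addresses reused from the load balancing examples)
upstream mysvr {
    server 192.168.10.121:3333;
    server 192.168.10.122:3333;
}

server {
    listen 80;

    # A 404 from the backend is now handled by error_page instead of being passed through
    proxy_intercept_errors on;
    error_page 404 https://www.baidu.com;

    location / {
        proxy_pass http://mysvr;
    }
}
```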

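2. Setting the request method used toward the proxied server. Note that proxy_method does not restrict which methods clients may send. Instead, it replaces the client's method with the one given (for example GET or POST) in every request nginx forwards to the proxied server.

```nginx
proxy_method GET; # HTTP method used for requests forwarded to the proxied server, e.g. GET or POST
```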
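If the real goal is to accept only GET and POST from clients, you could use `limit_except`, as in the sketch below. The location path and the upstream name `mysvr` are assumptions.

```nginx
location / {
    # Clients may use GET (which also allows HEAD) and POST;
    # any other method is denied with 403 Forbidden
    limit_except GET POST {
        deny all;
    }
    proxy_pass http://mysvr;   # hypothetical upstream group
}
```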

3. Setting the HTTP protocol version used for proxying

```nginx
proxy_http_version 1.0; # HTTP version nginx uses when talking to the proxied server: 1.0 or 1.1. Default: 1.0
```
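HTTP/1.1 matters mainly when nginx should keep its connections to the backends open. The sketch below assumes an upstream group `mysvr`. The `keepalive 16` value is an arbitrary example. The nginx documentation pairs upstream keepalive with HTTP/1.1 and an empty Connection header.

```nginx
upstream mysvr {
    server 192.168.10.121:3333;
    server 192.168.10.122:3333;
    keepalive 16;   # idle keep-alive connections cached per worker process (example value)
}

server {
    location / {
        proxy_pass http://mysvr;
        proxy_http_version 1.1;          # keep-alive to the backend needs HTTP/1.1
        proxy_set_header Connection "";  # clear the "Connection: close" header sent by default
    }
}
```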

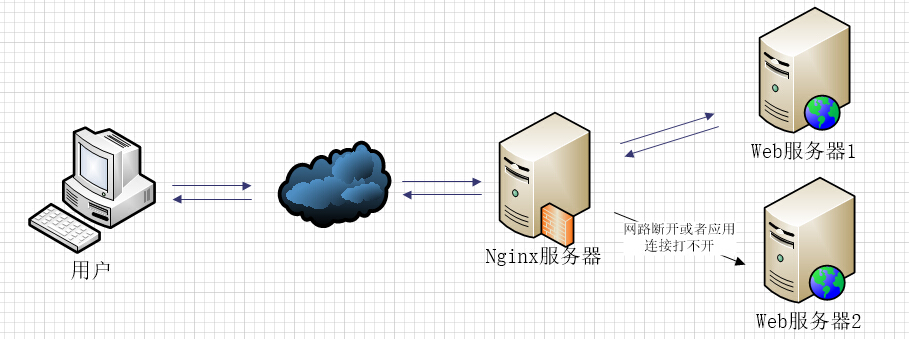

4. If your nginx server acts as a proxy for two web servers, and the load balancing algorithm uses polling, when one of your machine's Web programs iis is closed, that is, the web cannot be accessed, the nginx server will still distribute requests to the inaccessible web server. If the response connection time here is too long, the client's page will be waiting for a response, For users, the experience is discounted. How can we avoid this. Here I'll attach a picture to illustrate the problem.

Suppose this happens to web2. nginx sends a request to web1, but with an unsuitable configuration it keeps passing the next request to web2 and waits for web2 to respond. Only when the timeout expires is that request passed on to web1. The longer the timeout, the longer the user waits.

The following configuration is one of the solutions.

```nginx
proxy_connect_timeout 1;       # Timeout for establishing a connection with the proxied server. Default: 60s
proxy_read_timeout 1;          # Timeout between two successive reads of the response from the proxied server. Default: 60s
proxy_send_timeout 1;          # Timeout between two successive writes of the request to the proxied server. Default: 60s
proxy_ignore_client_abort on;  # Keep the connection to the proxied server open if the client disconnects first. Default: off
```
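Be aware that a 1-second proxy_read_timeout or proxy_send_timeout also cuts off healthy backends that are simply slow. The sketch below keeps only the connect timeout short. It assumes the `mysvr` upstream group, and the 30-second read/send values are for illustration only.

```nginx
location / {
    proxy_pass http://mysvr;             # hypothetical upstream group
    proxy_connect_timeout 1s;            # a stopped backend is detected quickly at connect time
    proxy_send_timeout    30s;           # between two successive writes to the backend
    proxy_read_timeout    30s;           # between two successive reads from the backend
    proxy_next_upstream   error timeout; # on connect error or timeout, try the next server (the default value)
}
```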

5. When a group of servers is defined with the upstream directive, requests are distributed according to the configured load balancing rules. The proxy_next_upstream directive specifies the cases in which a request is handed over to the next server in the group.

```nginx
proxy_next_upstream timeout; # Cases in which a request is passed to the next server of the upstream group
```

Possible values (several can be combined): `error | timeout | invalid_header | http_500 | http_502 | http_503 | http_504 | http_404 | off`

error: an error occurred while establishing a connection with the server, passing the request to it, or reading the response header.

timeout: a timeout occurred while establishing a connection with the server, passing the request to it, or reading the response header.

invalid_header: the server returned an empty or invalid response.

off: disables passing the request to the next server.

http_500, http_502, http_503, http_504, http_404: the server returned a response with that status code.
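The sketch below combines several values. It assumes the `mysvr` upstream group. The directives `proxy_next_upstream_tries` and `proxy_next_upstream_timeout` (available since nginx 1.7.5) cap how much retrying happens, and the limits shown are arbitrary.

```nginx
location / {
    proxy_pass http://mysvr;   # hypothetical upstream group
    # Try the next server on connection errors, timeouts and these 5xx responses
    proxy_next_upstream error timeout http_502 http_503 http_504;
    proxy_next_upstream_tries 2;      # at most 2 tries in total; 0 means no limit
    proxy_next_upstream_timeout 5s;   # stop passing to other servers after 5s; 0 means no limit
}
```

Since nginx 1.9.13, a non-idempotent request such as POST is not passed to the next server once it has been sent to a backend, unless the `non_idempotent` value is added.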

6. For the backend to see the client's real IP address rather than the proxy's address, configure the following:

```nginx
proxy_set_header Host $host;                                  # Pass the domain the browser requested (e.g. www.taobao.com) to the real servers (RS) behind the VIP; $host has no port, $http_host keeps it
proxy_set_header X-Real-IP $remote_addr;                      # Client IP as seen by nginx; backend code reads the source IP from the X-Real-IP header
proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;  # Incoming X-Forwarded-For (if any) with $remote_addr appended: client IP first, then each proxy, separated by commas
```

In backend code, the first entry of X-Forwarded-For is taken as the source IP, for example `echo $x-forwarded-for | awk -F, '{print $1}'`. Clients can send their own X-Forwarded-For header, so that first entry can be forged. X-Real-IP, by contrast, is overwritten by nginx with the address it actually sees.
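If a backend is itself nginx rather than IIS (an assumption here), ngx_http_realip_module can restore the client address into `$remote_addr`. This module is not built by default and needs `--with-http_realip_module`. The trusted range below is a hypothetical front-end proxy subnet.

```nginx
# On the backend nginx, inside http, server or location
set_real_ip_from  192.168.10.0/24;   # hypothetical address range of the front-end nginx
real_ip_header    X-Forwarded-For;   # take the client address from this header
real_ip_recursive on;                # skip trusted proxy addresses in the list
```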

For more on X-Forwarded-For and X-Real-IP, I recommend the article "X-Forwarded-For in HTTP request header". Its author has a whole series on the HTTP protocol that is worth following.

7. Below is the proxy-related section of my configuration file, for reference only.

```nginx
include mime.types;                           # Map file extensions to MIME types
default_type application/octet-stream;        # MIME type for unknown extensions (nginx's own default is text/plain)
#access_log off;                              # Uncomment to disable the access log
log_format myFormat '$remote_addr - $remote_user [$time_local] $request $status $body_bytes_sent $http_referer '
                    '$http_user_agent $http_x_forwarded_for';  # Custom log format
access_log log/access.log myFormat;           # Use the custom format (the default format is "combined")
sendfile on;                                  # Transfer files with sendfile(); default off; valid in http, server and location
sendfile_max_chunk 100k;                      # Max data per sendfile() call; before nginx 1.21.4 the default was 0 (no limit)
keepalive_timeout 65;                         # Client keep-alive timeout; default 75s; valid in http, server and location
proxy_connect_timeout 1;                      # Timeout for connecting to the proxied server; default 60s
proxy_read_timeout 1;                         # Timeout between two successive reads from the proxied server; default 60s
proxy_send_timeout 1;                         # Timeout between two successive writes to the proxied server; default 60s
proxy_http_version 1.0;                       # HTTP version used with the proxied server: 1.0 or 1.1; default 1.0
#proxy_method get;                            # HTTP method used for requests forwarded to the proxied server
proxy_ignore_client_abort on;                 # Keep the upstream connection if the client disconnects; default off
proxy_ignore_headers "Expires" "Set-Cookie";  # Do not process these response header fields from the proxied server; separate names with spaces
proxy_intercept_errors on;                    # Let error_page handle proxied responses with status 300 or higher; default off
proxy_headers_hash_max_size 1024;             # Max size of the hash tables for proxy_set_header/proxy_hide_header; default 512
proxy_headers_hash_bucket_size 128;           # Bucket size of those hash tables; default 64
proxy_next_upstream timeout;                  # Pass the request to the next upstream server on timeout
# Possible values: error | timeout | invalid_header | http_500 | http_502 | http_503 | http_504 | http_404 | off
#proxy_ssl_session_reuse on;                  # Default on; if "SSL3_GET_FINISHED:digest check failed" appears in the error log, set it to off
```

Detailed explanation of Nginx load balancing

In the previous article I covered nginx's load balancing algorithms. This one describes how to configure them in detail.

First, the upstream configuration. It lists a group of backend server addresses and sets the load balancing method. The addresses are written as host:port without a scheme. The http:// prefix belongs in proxy_pass:

```nginx
upstream mysvr {
    server 192.168.10.121:3333;
    server 192.168.10.122:3333;
}

server {
    ....
    location ~* ^.+$ {
        proxy_pass http://mysvr;  # The request goes to the list of servers defined by mysvr
    }
}
```

A second form is sometimes shown, with `server http://192.168.10.121:3333;` inside the upstream block and `proxy_pass mysvr;` in the location. nginx rejects that form, because an upstream server address cannot carry a scheme and proxy_pass requires the `http://` (or `https://`) prefix.

Now for the practical part.

1. Hot standby: with two servers, the second one serves requests only after the first one fails. Request order: AAAAA, then A goes down, BBBB.

```nginx
upstream mysvr {
    server 127.0.0.1:7878;
    server 192.168.10.121:3333 backup; # Hot standby
}
```

2. Round robin: nginx uses round robin by default, and each server's weight defaults to 1. Request order: ABAB.

```nginx
upstream mysvr {
    server 127.0.0.1:7878;
    server 192.168.10.121:3333;
}
```

3. Weighted round robin: requests are distributed in proportion to each server's configured weight, which defaults to 1. With the weights below, A receives one of every three requests and B receives two. Request order: ABBABBABB...

```nginx
upstream mysvr {
    server 127.0.0.1:7878 weight=1;
    server 192.168.10.121:3333 weight=2;
}
```

4. ip_hash: nginx sends requests from the same client IP address to the same server.

```nginx
upstream mysvr {
    ip_hash;
    server 127.0.0.1:7878;
    server 192.168.10.121:3333;
}
```
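For IPv4 clients, ip_hash uses the first three octets of the address as the key. If a server behind ip_hash must be taken out temporarily, the nginx documentation recommends marking it `down` instead of deleting the line, so the other clients keep their mapping. Here is a sketch with the same two addresses:

```nginx
upstream mysvr {
    ip_hash;
    server 127.0.0.1:7878;
    server 192.168.10.121:3333 down;  # temporarily out of service; other clients keep their hash mapping
}
```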

5. If the four load balancing methods above are still unclear, the diagrams in my previous article may help.

Isn't nginx's load balancing configuration simple and powerful? And there's more. Here's a bonus.

Here are several server state parameters used in nginx load balancing configuration.

- down: the server is marked unavailable and does not take part in load balancing.
- backup: a reserved backup server. It receives requests only when all non-backup servers are unavailable, so it carries the least load.
- max_fails: the number of failed attempts to communicate with the server, within the fail_timeout period, after which the server is considered unavailable. The default is 1. Which failures count is defined by proxy_next_upstream.
- fail_timeout: both the period in which max_fails failures must occur and the time the server is then considered unavailable. The default is 10s. max_fails is used together with fail_timeout.

```nginx
upstream mysvr {
    server 127.0.0.1:7878 weight=2 max_fails=2 fail_timeout=2;
    server 192.168.10.121:3333 weight=1 max_fails=2 fail_timeout=1;
}
```
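A fail_timeout without a unit, as above, is read as seconds. The sketch below combines weights, failure accounting, `down` and `backup` in one group. The third and fourth addresses are hypothetical. Note that `backup` cannot be combined with ip_hash.

```nginx
upstream mysvr {
    server 127.0.0.1:7878      weight=2 max_fails=2 fail_timeout=10s;  # 2 failures within 10s: skipped for 10s
    server 192.168.10.121:3333 weight=1 max_fails=2 fail_timeout=10s;
    server 192.168.10.122:3333 down;    # hypothetical; excluded from load balancing
    server 192.168.10.123:3333 backup;  # hypothetical; used only when the servers above are unavailable
}
```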

That covers nginx's built-in load balancing methods. If you want to learn about more algorithms, nginx also offers additional modules.

Original address: https://www.cnblogs.com/knowledgesea/p/5199046.html