Implementation of resource constraints

Kubernetes enforces resource limits through Linux cgroups (control groups), a kernel mechanism that groups a container's processes and controls how the kernel runs them. There are cgroup controllers for memory, CPU, and various devices.

By default, Pods run without CPU and memory limits, so any Pod can consume as much CPU and memory as the node it runs on can provide. In practice, the resources of an application's Pods usually need to be constrained, and this is done through resource requests and limits.

Two types of resource constraints

Kubernetes allocates resources using two kinds of constraint: requests and limits.

1. Request: the minimum amount of a resource the Pod needs. The Pod is only scheduled onto a node that can guarantee at least this amount.

2. Limit: the maximum amount of a resource the container may use while it runs. Memory usage may grow during operation, but it cannot go beyond the limit.
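To make the relationship between the two concrete, here is a minimal Python sketch (not Kubernetes source code) of two documented rules for a single resource: if a container sets only a limit and no admission mechanism such as a LimitRange supplies a default request, the request is copied from the limit; and a request larger than its limit is rejected. The function name and the use of plain numbers instead of Kubernetes quantities are assumptions made for illustration.

```python
from typing import Optional, Tuple


def effective_request_and_limit(
    request: Optional[float], limit: Optional[float]
) -> Tuple[Optional[float], Optional[float]]:
    """Apply two documented rules to one resource (e.g. CPU cores).

    None means "not specified". This is an illustrative sketch only.
    """
    if request is None and limit is not None:
        # Only a limit was given, so the request is copied from the limit.
        request = limit
    if request is not None and limit is not None and request > limit:
        # A request may not exceed its limit; the API server rejects such a Pod.
        raise ValueError(f"request {request} must be <= limit {limit}")
    return request, limit


print(effective_request_and_limit(None, 0.5))  # (0.5, 0.5)
print(effective_request_and_limit(0.1, 0.5))   # (0.1, 0.5)
```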

Resource units

1. CPU

CPU is measured in cores, and memory is measured in bytes.

A container that requests 0.5 CPU is asking for half of one CPU core. You can also use the suffix m (millicores), which denotes one thousandth of a core.

For example, 100m (100 millicores) of CPU is equivalent to 0.1 CPU.
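The conversion can be sketched in Python as follows. This is a hypothetical helper, not part of any Kubernetes client library, and it only handles plain numbers and the m suffix (the real quantity grammar accepts more forms); decimal.Decimal is used so that values like 0.1 convert exactly.

```python
from decimal import Decimal


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "0.5", "2" or "100m" to millicores."""
    if quantity.endswith("m"):
        # "m" already means thousandths of a core, i.e. millicores.
        return int(Decimal(quantity[:-1]))
    # A bare number counts whole cores; one core is 1000 millicores.
    return int(Decimal(quantity) * 1000)


print(cpu_to_millicores("0.5"))   # 500
print(cpu_to_millicores("100m"))  # 100
print(cpu_to_millicores("0.1"))   # 100
```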

2. Memory

k, M, G, T, P, E: decimal suffixes, each step a factor of 1000.

Ki, Mi, Gi, Ti, Pi, Ei: binary suffixes, each step a factor of 1024.
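The difference between the two families of suffixes matters in practice: 100M and 100Mi are not the same amount. The hypothetical helper below is a sketch that handles only an integer followed by an optional suffix (the real quantity grammar also accepts decimals and exponents) and converts a memory quantity to bytes.

```python
import re

# Decimal suffixes are powers of 1000; binary suffixes are powers of 1024.
DECIMAL = {"k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4, "P": 1000**5, "E": 1000**6}
BINARY = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4, "Pi": 1024**5, "Ei": 1024**6}


def memory_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "512", "100M" or "100Mi" to bytes."""
    match = re.fullmatch(r"(\d+)([A-Za-z]*)", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = int(match.group(1)), match.group(2)
    if suffix == "":
        return number  # a plain integer is already a byte count
    if suffix in BINARY:
        return number * BINARY[suffix]
    if suffix in DECIMAL:
        return number * DECIMAL[suffix]
    raise ValueError(f"unknown suffix: {suffix!r}")


print(memory_to_bytes("100M"))   # 100000000
print(memory_to_bytes("100Mi"))  # 104857600
```

With these definitions, 100Mi is 104857600 bytes, roughly 5% more than 100M.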

Demo environment

- server1: 172.25.38.1, Harbor registry
- server2: 172.25.38.2, Kubernetes master
- server3: 172.25.38.3, Kubernetes node
- server4: 172.25.38.4, Kubernetes node

Memory limit

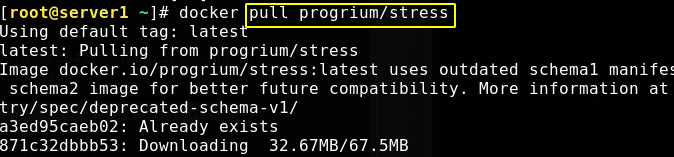

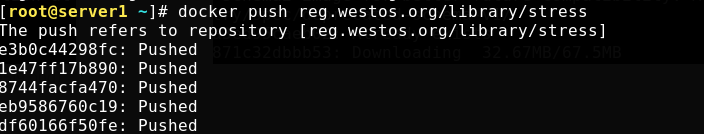

We use the stress image to run a load test inside a container, specifying how much of each resource the test should consume. First, pull the image on the Harbor host and push it to the registry so that the Kubernetes cluster can use it.

The stress image packages the stress command, which simulates a heavily loaded system.

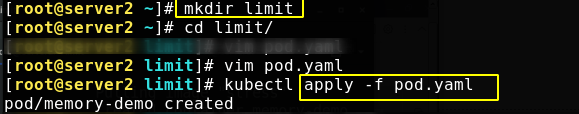

Create a new directory and, in it, a manifest for a Pod that uses the stress image:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:               # arguments passed to the container at startup
    - --vm
    - "1"               # start one memory worker process
    - --vm-bytes
    - 200M              # each worker allocates 200M of memory
    resources:
      requests:
        memory: 50Mi    # at least 50Mi of memory is required
      limits:
        memory: 100Mi   # at most 100Mi of memory may be used
```

Apply the file to create the Pod:

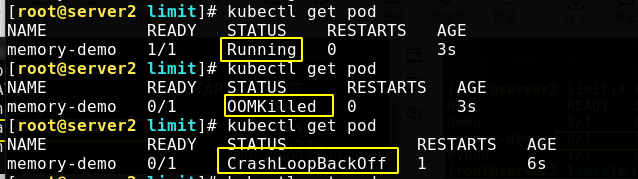

The Pod status changes as shown above. OOMKilled means the container was killed because it exceeded its memory limit.

The container starts a process that allocates 200M of memory, but its limit is 100Mi, so it inevitably exceeds the limit. Requests behave differently: if a container uses more memory than it requested, its Pod may be evicted when the node runs out of memory.

CrashLoopBackOff means the container keeps crashing and being restarted, over and over.

A container that exceeds its memory limit is terminated. If it is restartable, the kubelet restarts it, just as it does for any other kind of runtime failure.
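The waiting period between those restarts grows exponentially. According to the Kubernetes Pod lifecycle documentation, the kubelet restarts exited containers with a back-off delay of 10s, 20s, 40s, and so on, capped at five minutes, and resets it once a container has run for 10 minutes without problems. The sketch below only reproduces that delay sequence; the constants reflect documented defaults and may differ in other versions or configurations.

```python
from typing import List

INITIAL_DELAY_S = 10  # documented starting delay
MAX_DELAY_S = 300     # documented cap of five minutes


def backoff_delays(restarts: int) -> List[int]:
    """Return the wait in seconds before each of the first `restarts` restarts."""
    delays = []
    delay = INITIAL_DELAY_S
    for _ in range(restarts):
        delays.append(delay)
        # Double the delay after every failed restart, but never exceed the cap.
        delay = min(delay * 2, MAX_DELAY_S)
    return delays


print(backoff_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```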

CPU limit

Create a Pod that uses the stress image to put load on the CPU:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: stress       # use the stress image
    resources:
      limits:
        cpu: "10"       # use at most 10 CPUs
      requests:
        cpu: "5"        # require at least 5 CPUs
    args:               # arguments passed to the container at startup
    - -c                # stress option: number of CPU workers
    - "2"               # start 2 CPU-bound worker processes
```

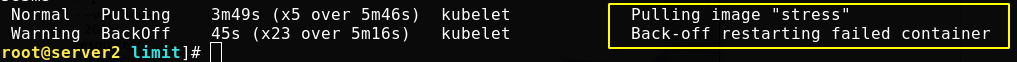

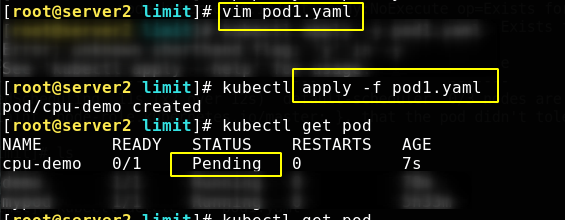

After the file is applied, the Pod stays in the Pending state because it cannot be scheduled. The container requests at least 5 CPUs, but no node has that much capacity (each virtual machine here was given only one CPU), so no node qualifies and the scheduler cannot place the Pod.
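The scheduling decision above can be sketched as a simple resource filter: a node is feasible only if its allocatable CPU minus the requests of Pods already placed on it covers the new Pod's request. Limits play no part in this check. The node names mirror the demo environment, but the allocatable and already-requested values are hypothetical, and the real scheduler applies many more filters (taints, affinity, and so on).

```python
from typing import Dict, List


def feasible_nodes(
    pod_request_m: int,
    allocatable_m: Dict[str, int],
    requested_m: Dict[str, int],
) -> List[str]:
    """Return the nodes whose unreserved CPU (in millicores) covers the request."""
    return [
        node
        for node, capacity in allocatable_m.items()
        if capacity - requested_m.get(node, 0) >= pod_request_m
    ]


# Hypothetical numbers: each node has 1 CPU (1000m), part of it already requested.
allocatable = {"server3": 1000, "server4": 1000}
already_requested = {"server3": 250, "server4": 100}

print(feasible_nodes(5000, allocatable, already_requested))  # [] -> the Pod stays Pending
print(feasible_nodes(500, allocatable, already_requested))   # ['server3', 'server4']
```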

LimitRange

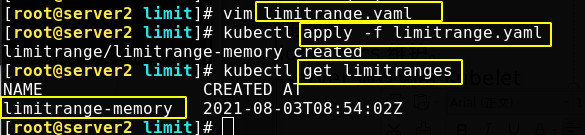

The minimum and maximum constraints that a LimitRange imposes in a namespace are enforced only when Pods are created or updated. Changing a LimitRange does not affect Pods that already exist.

Set resource limits for a namespace

Define resource limits for the default namespace with the following file:

```yaml
apiVersion: v1
kind: LimitRange        # resource type
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:            # default limits for a container that specifies none: 0.5 CPU and 512Mi of memory
      cpu: 0.5
      memory: 512Mi
    defaultRequest:     # default requests for a container that specifies none: 0.1 CPU and 256Mi of memory
      cpu: 0.1
      memory: 256Mi
    max:                # whether or not resources are specified, a container may use at most 1 CPU and 1Gi of memory
      cpu: 1
      memory: 1Gi
    min:                # whether or not resources are specified, a container must request at least 0.1 CPU and 100Mi of memory
      cpu: 0.1
      memory: 100Mi
    type: Container
```

Apply the file to create the LimitRange in the default namespace.

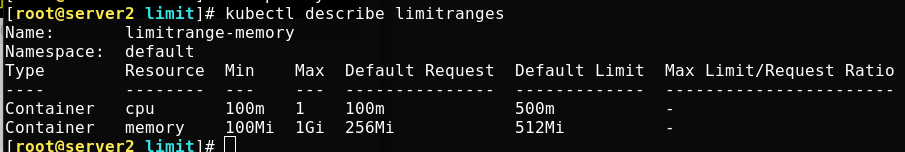

You can view its details with `kubectl describe limitrange limitrange-memory`.

Next, write a definition file for a Pod with memory constraints:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: nginx
    resources:
      requests:
        memory: 50Mi    # at least 50Mi of memory is required
      limits:
        memory: 100Mi   # at most 100Mi of memory may be used
```

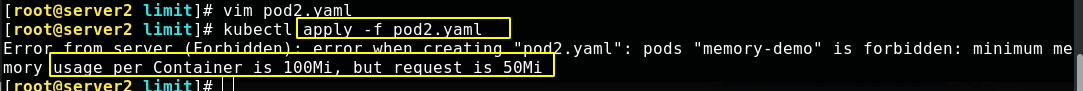

Applying the file fails: the LimitRange requires a memory request of at least 100Mi, but this Pod requests only 50Mi, so the Pod is rejected.

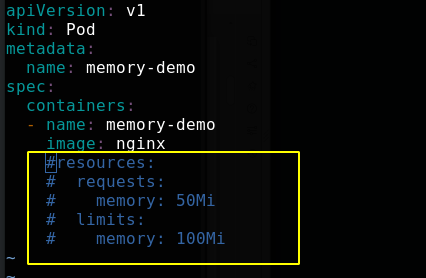

Comment out the resources section of the file, as shown in the figure below, so that no resource constraints are specified.

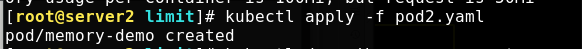

Apply the file again.

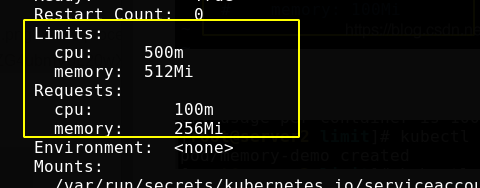

Run `kubectl describe pod memory-demo` to see that the LimitRange has automatically added the default requests and limits.
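The behaviour seen in this section can be summarised in a simplified Python sketch of how a LimitRange of type Container is applied to one resource at admission time. It assumes plain integers in a common unit (here Mi of memory) and the order described in the Kubernetes documentation: a container that sets only a limit gets its request copied from that limit, any remaining gaps are filled from default and defaultRequest, and finally min and max are checked. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class LimitRangeItem:
    """Hypothetical model of one resource in a LimitRange of type Container."""
    default: int          # default limit
    default_request: int  # default request
    minimum: int          # "min": lowest allowed request/limit
    maximum: int          # "max": highest allowed request/limit


def admit(request: Optional[int], limit: Optional[int], lr: LimitRangeItem) -> Tuple[int, int]:
    """Return the (request, limit) a container ends up with, or raise ValueError."""
    # Step 1: a limit without a request makes the request equal to the limit.
    if request is None and limit is not None:
        request = limit
    # Step 2: fill in whatever is still missing from the LimitRange defaults.
    if limit is None:
        limit = lr.default
    if request is None:
        request = lr.default_request
    # Step 3: enforce min and max on both values.
    if min(request, limit) < lr.minimum:
        raise ValueError(f"minimum is {lr.minimum}, got request={request}, limit={limit}")
    if max(request, limit) > lr.maximum:
        raise ValueError(f"maximum is {lr.maximum}, got request={request}, limit={limit}")
    return request, limit


# Memory values (in Mi) from limitrange-memory: default 512Mi, defaultRequest 256Mi,
# min 100Mi, max 1Gi (1024Mi).
memory_lr = LimitRangeItem(default=512, default_request=256, minimum=100, maximum=1024)

print(admit(None, None, memory_lr))  # (256, 512): both values come from the defaults
try:
    admit(50, 100, memory_lr)        # the first nginx Pod: request 50Mi, limit 100Mi
except ValueError as err:
    print("rejected:", err)
```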

ResourceQuota

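A LimitRange constrains the resources of each individual container or Pod, whereas a ResourceQuota caps the total resources used by all Pods in a namespace.

The ResourceQuota object created below adds the following constraints to the default namespace:

Every container must have a memory request, a memory limit, a CPU request, and a CPU limit (set explicitly or supplied by a LimitRange default), and the totals across all containers must not exceed the values under hard.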

Set a resource quota for a namespace

Define a resource quota for the default namespace with the following file:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"      # total CPU requests of all containers must not exceed 1 CPU
    requests.memory: 1Gi   # total memory requests of all containers must not exceed 1GiB
    limits.cpu: "2"        # total CPU limits of all containers must not exceed 2 CPUs
    limits.memory: 2Gi     # total memory limits of all containers must not exceed 2GiB
```

Modify the Pod YAML file used above (pod2.yaml) as follows:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: nginx
    resources:
      requests:
        cpu: 0.2
        memory: 100Mi
      limits:
        cpu: 1
        memory: 300Mi
```

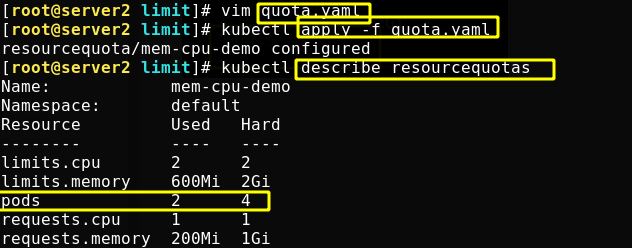

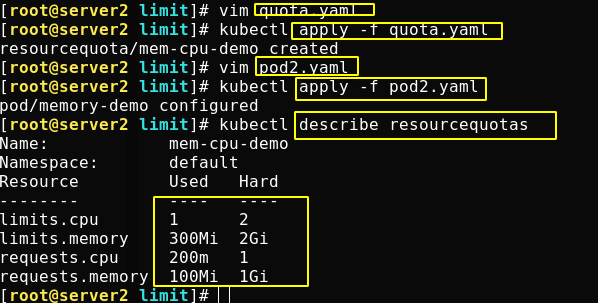

Apply the file to create the Pod, then check how much of the quota has been used (for example, with `kubectl describe resourcequota mem-cpu-demo`).

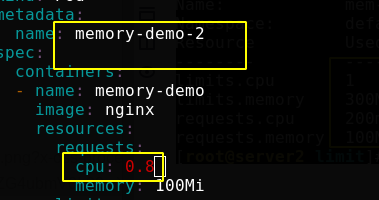

Modify the file again to create another Pod, setting its resource requests as shown in the figure below.

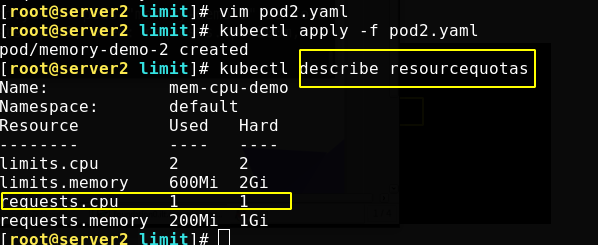

The resource quota now shows the accumulated usage of both Pods.

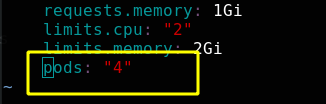
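Quota accounting works by simple addition, which the following hypothetical Python sketch illustrates: a Pod is admitted only if, for every resource listed under hard, the usage already recorded plus the Pod's own demand stays within the limit. CPU is expressed in millicores and memory in Mi so the arithmetic stays exact; the function and its error message are illustrative, not the API server's.

```python
from typing import Dict

Resources = Dict[str, int]


def admit_pod(hard: Resources, used: Resources, pod: Resources) -> Resources:
    """Return the namespace usage after admitting `pod`, or raise ValueError."""
    new_used = dict(used)
    for name, amount in pod.items():
        if name not in hard:
            continue  # this quota places no limit on the resource
        total = used.get(name, 0) + amount
        if total > hard[name]:
            raise ValueError(f"{name}: {total} would exceed the hard limit {hard[name]}")
        new_used[name] = total
    return new_used


# mem-cpu-demo quota: requests.cpu 1, requests.memory 1Gi, limits.cpu 2, limits.memory 2Gi.
hard = {"requests.cpu": 1000, "requests.memory": 1024, "limits.cpu": 2000, "limits.memory": 2048}
# The modified Pod: requests 0.2 CPU / 100Mi, limits 1 CPU / 300Mi.
pod = {"requests.cpu": 200, "requests.memory": 100, "limits.cpu": 1000, "limits.memory": 300}

used = admit_pod(hard, {}, pod)
print(used)  # {'requests.cpu': 200, 'requests.memory': 100, 'limits.cpu': 1000, 'limits.memory': 300}
used = admit_pod(hard, used, pod)  # a second identical Pod still fits
print(used["limits.cpu"])          # 2000
```

After the second Pod, limits.cpu sits exactly at its hard value, so a third identical Pod would be rejected. The same check applies to object counts such as pods.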

Modify the resource quota definition file to limit the total number of Pods in the namespace to 4 (the pods key under spec.hard, for example pods: "4").

Apply the file again; the resource quota is updated successfully.