In TensorFlow, you can build a neural network either at a low level, by defining the weights yourself, or at a high level, by calling the existing convolution layer classes to assemble complex networks quickly. This section uses 2D convolution as an example to show how to implement a convolutional layer.

1. Custom weight

In TensorFlow, 2D convolution is easily implemented with the `tf.nn.conv2d` function. Given an input $\boldsymbol X:[b,h,w,c_{in}]$ and a convolution kernel $\boldsymbol W:[k,k,c_{in},c_{out}]$, `tf.nn.conv2d` performs the convolution and returns the output $\boldsymbol O:[b,h',w',c_{out}]$, where $c_{in}$ is the number of input channels and $c_{out}$ is the number of convolution kernels, which is also the number of channels of the output feature map.
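The output height $h'$ (and likewise the width $w'$) follows the standard convolution arithmetic $h'=\lfloor (h+p_{top}+p_{bottom}-k)/s\rfloor+1$. As a minimal sketch, the hypothetical helper `conv_out_size` below (an illustrative name, not a TensorFlow API) evaluates this formula and compares it with the shape that `tf.nn.conv2d` actually returns for a stride of 2 and one unit of padding.

```python
import tensorflow as tf


def conv_out_size(size, k, s, pad_before, pad_after):
    """Hypothetical helper: output length along one spatial axis."""
    return (size + pad_before + pad_after - k) // s + 1


x = tf.random.normal([2, 5, 5, 3])   # X: [b, h, w, c_in]
w = tf.random.normal([3, 3, 3, 4])   # W: [k, k, c_in, c_out]
pad = [[0, 0], [1, 1], [1, 1], [0, 0]]
out = tf.nn.conv2d(x, w, strides=2, padding=pad)

# Same formula applied to height and width; the channel count is c_out
h_out = conv_out_size(5, k=3, s=2, pad_before=1, pad_after=1)
w_out = conv_out_size(5, k=3, s=2, pad_before=1, pad_after=1)
print(out.shape, (2, h_out, w_out, 4))
```

The last dimension of the output always equals $c_{out}$, the last dimension of the kernel tensor.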

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers, Sequential, losses, optimizers, datasets

x = tf.random.normal([2, 5, 5, 3]) # Analog input, 3 channels, height and width of 5

# We need to create w tensor and four convolution kernels of 3 * 3 size according to [k,k,cin,cout] format

w = tf.random.normal([3, 3, 3, 4])

# Step size is 1 and padding is 0

out = tf.nn.conv2d(x, w, strides=1, padding=[[0, 0], [1, 1], [1, 1], [0, 0]])

# shape of output tensor

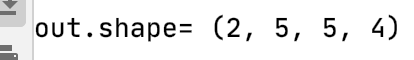

print('out.shape=', out.shape)

The operation results are shown in the figure below:
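Explicit zero padding on every axis means no padding at all. As a small check, the sketch below compares it with TensorFlow's built-in `'VALID'` padding mode of `tf.nn.conv2d` (a mode the text above does not mention); the fixed random seed is only there to make the comparison repeatable.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)
x = tf.random.normal([2, 5, 5, 3])
w = tf.random.normal([3, 3, 3, 4])

# Explicit zero padding on every axis ...
out_explicit = tf.nn.conv2d(x, w, strides=1, padding=[[0, 0], [0, 0], [0, 0], [0, 0]])
# ... behaves like the built-in 'VALID' mode (no padding)
out_valid = tf.nn.conv2d(x, w, strides=1, padding='VALID')

print(out_explicit.shape, out_valid.shape)
print(np.allclose(out_explicit.numpy(), out_valid.numpy(), atol=1e-5))
```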

The `padding` parameter takes the format:

`padding=[[0, 0], [top, bottom], [left, right], [0, 0]]`

For example, to pad one unit on the top, bottom, left and right, set `padding` to `[[0, 0], [1, 1], [1, 1], [0, 0]]`, as follows:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential, losses, optimizers, datasets

x = tf.random.normal([2, 5, 5, 3])  # Simulated input: 3 channels, height and width of 5
# Create the kernel tensor w in [k, k, c_in, c_out] format: four 3x3 kernels
w = tf.random.normal([3, 3, 3, 4])
# Stride 1, padding 1
out = tf.nn.conv2d(x, w, strides=1, padding=[[0, 0], [1, 1], [1, 1], [0, 0]])
# Shape of the output tensor
print('out.shape=', out.shape)
```

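Padding one unit on each side enlarges the input to 7×7, so the 3×3 kernels produce an output of shape (2, 5, 5, 4), with the same height and width as the input.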
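One way to read the `padding` list is as "zero-pad first, then convolve without padding". The sketch below checks that interpretation by padding the input with `tf.pad` (which pads with zeros by default) and then calling `tf.nn.conv2d` with `'VALID'`; both are standard TensorFlow functions, but this two-step route is only an illustration, not how `tf.nn.conv2d` is implemented internally.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)
x = tf.random.normal([2, 5, 5, 3])
w = tf.random.normal([3, 3, 3, 4])
paddings = [[0, 0], [1, 1], [1, 1], [0, 0]]  # batch, [top, bottom], [left, right], channels

# Option 1: let conv2d apply the padding itself
out_a = tf.nn.conv2d(x, w, strides=1, padding=paddings)
# Option 2: zero-pad the input explicitly, then convolve without padding
x_padded = tf.pad(x, paddings)               # shape becomes [2, 7, 7, 3]
out_b = tf.nn.conv2d(x_padded, w, strides=1, padding='VALID')

print(x_padded.shape, out_a.shape)
print(np.allclose(out_a.numpy(), out_b.numpy(), atol=1e-5))
```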

In particular, setting `padding='SAME'` together with `strides=1` directly yields a convolution layer whose output has the same height and width as its input; TensorFlow computes the required amount of padding automatically and performs the padding itself. For example:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential, losses, optimizers, datasets

x = tf.random.normal([2, 5, 5, 3])  # Simulated input: 3 channels, height and width of 5
# Create the kernel tensor w in [k, k, c_in, c_out] format: four 3x3 kernels
w = tf.random.normal([3, 3, 3, 4])
# Stride 1; 'SAME' padding keeps the output height and width equal to the input's
# Note: 'SAME' preserves the size only when strides=1
out = tf.nn.conv2d(x, w, strides=1, padding='SAME')
# Shape of the output tensor
print('out.shape=', out.shape)
```

The output shape is (2, 5, 5, 4), matching the input's height and width.
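For a 3×3 kernel with stride 1, keeping a 5×5 size requires $k-1=2$ units of padding per axis, split evenly. The sketch below checks that `'SAME'` gives the same result as the explicit one-unit padding used earlier in this section; the equivalence shown holds for this odd kernel size and stride 1.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)
x = tf.random.normal([2, 5, 5, 3])
w = tf.random.normal([3, 3, 3, 4])

out_same = tf.nn.conv2d(x, w, strides=1, padding='SAME')
# k=3, s=1: total padding per axis is k - 1 = 2, i.e. 1 before and 1 after
out_explicit = tf.nn.conv2d(x, w, strides=1, padding=[[0, 0], [1, 1], [1, 1], [0, 0]])

print(out_same.shape)
print(np.allclose(out_same.numpy(), out_explicit.numpy(), atol=1e-5))
```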

When $s>1$, setting `padding='SAME'` reduces the output height and width by a factor of $s$, i.e. to $\frac{1}{s}$ of the input size, rounded up: $h'=\lceil h/s\rceil$. For example:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential, losses, optimizers, datasets

x = tf.random.normal([2, 5, 5, 3])  # Simulated input: 3 channels, height and width of 5
# Create the kernel tensor w in [k, k, c_in, c_out] format: four 3x3 kernels
w = tf.random.normal([3, 3, 3, 4])
# Height and width are padded to 6, the smallest multiple of 3 not less than 5,
# and then reduced 3-fold by the stride, giving 2x2
out = tf.nn.conv2d(x, w, strides=3, padding='SAME')
# Shape of the output tensor
print('out.shape=', out.shape)
```

The output shape is (2, 2, 2, 4), since $\lceil 5/3\rceil=2$.
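To see where the padded size 6 comes from, the sketch below reproduces the padding rule described in the TensorFlow convolution documentation for `'SAME'`: the total padding per axis is $\max((h'-1)s+k-h,\,0)$ with $h'=\lceil h/s\rceil$, and the smaller half goes on the top/left. The helper `same_padding` is hypothetical, written only for this illustration.

```python
import math

import numpy as np
import tensorflow as tf


def same_padding(size, k, s):
    """Hypothetical helper: (pad_before, pad_after) that 'SAME' uses on one axis."""
    out = math.ceil(size / s)
    total = max((out - 1) * s + k - size, 0)
    return total // 2, total - total // 2


tf.random.set_seed(0)
x = tf.random.normal([2, 5, 5, 3])
w = tf.random.normal([3, 3, 3, 4])

before, after = same_padding(5, k=3, s=3)   # one extra row/column: 5 + 1 = 6
out_same = tf.nn.conv2d(x, w, strides=3, padding='SAME')
out_explicit = tf.nn.conv2d(
    x, w, strides=3, padding=[[0, 0], [before, after], [before, after], [0, 0]])

print(before, after, out_same.shape)
print(np.allclose(out_same.numpy(), out_explicit.numpy(), atol=1e-5))
```

Because the odd unit of padding is placed at the bottom/right, the first window still starts at the input's top-left corner.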

Like a fully connected layer, a convolutional layer can have a bias vector. The `tf.nn.conv2d` function does not compute the bias; to add one, simply add the bias vector to the convolution output manually. For example:

```python
# Create a bias vector in [c_out] format
b = tf.zeros([4])
# Add the bias to the convolution output; it broadcasts to [b, h', w', c_out]
out = out + b
```
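A self-contained version of the bias step is sketched below. It uses a non-zero bias so the effect is visible, and compares manual broadcasting with `tf.nn.bias_add`, a TensorFlow function for exactly this operation that the text above does not mention; the bias values are arbitrary placeholders.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)
x = tf.random.normal([2, 5, 5, 3])
w = tf.random.normal([3, 3, 3, 4])
b = tf.constant([0.1, 0.2, 0.3, 0.4])    # placeholder values: one bias per output channel

out = tf.nn.conv2d(x, w, strides=1, padding='SAME')
out_manual = out + b                     # broadcasts [4] -> [2, 5, 5, 4]
out_builtin = tf.nn.bias_add(out, b)     # dedicated bias op, same result

print(out_manual.shape)
print(np.allclose(out_manual.numpy(), out_builtin.numpy()))
```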

2. Convolution layer class

With the convolution layer class `layers.Conv2D`, there is no need to define the kernel $\boldsymbol W$ and bias $\boldsymbol b$ tensors manually: calling the class instance directly performs the forward computation of the convolution layer, which is higher-level and quicker to use. TensorFlow API naming follows a convention: names that start with an uppercase letter generally denote classes, and all-lowercase names generally denote functions. For example, `layers.Conv2D` is the convolution layer class, and `nn.conv2d` is the convolution function. The class automatically creates the required kernel tensor and bias vector (when the layer is created or built), so users do not need to remember the kernel tensor's shape format. This makes it simpler and more convenient to use, though slightly less flexible. The function interface requires you to define the weights and bias yourself, which is lower-level and more flexible.
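To connect the two interfaces, the sketch below runs a `layers.Conv2D` instance and then reproduces its output with `tf.nn.conv2d` using the layer's own `kernel` and `bias` attributes. It assumes the default `activation=None`, so the layer computes only convolution plus bias.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

tf.random.set_seed(0)
x = tf.random.normal([2, 5, 5, 3])
layer = layers.Conv2D(4, kernel_size=3, strides=1, padding='SAME')
out_layer = layer(x)   # class interface: weights are created and used automatically

# Function interface: same computation with the layer's W and b passed explicitly
out_fn = tf.nn.conv2d(x, layer.kernel, strides=1, padding='SAME') + layer.bias

print(np.allclose(out_layer.numpy(), out_fn.numpy(), atol=1e-5))
```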

When creating a convolution layer class, you only need to specify the number of kernels `filters`, the kernel size `kernel_size`, the stride `strides`, the padding scheme `padding`, and so on. The following creates a convolution layer with four 3×3 kernels, a stride of 1, and the `'SAME'` padding scheme:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential, losses, optimizers, datasets

x = tf.random.normal([2, 5, 5, 3])  # Simulated input: 3 channels, height and width of 5
layer = layers.Conv2D(4, kernel_size=3, strides=1, padding='SAME')
out = layer(x)
print(out.shape)
```

The output shape is (2, 5, 5, 4).
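Since the layer creates $\boldsymbol W$ and $\boldsymbol b$ itself, it can be useful to confirm their sizes. The sketch below uses the Keras `count_params()` method: four 3×3 kernels over 3 input channels give $3\cdot3\cdot3\cdot4=108$ kernel weights plus 4 biases.

```python
import tensorflow as tf
from tensorflow.keras import layers

layer = layers.Conv2D(4, kernel_size=3, strides=1, padding='SAME')
out = layer(tf.random.normal([2, 5, 5, 3]))   # calling the layer builds its weights

# Kernel: [k, k, c_in, c_out] = 3 * 3 * 3 * 4 = 108 weights; bias: 4 values
print(layer.kernel.shape, layer.bias.shape)
print(layer.count_params())
```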

If the kernel's height and width differ, or the stride differs between the row and column directions, set the `kernel_size` parameter as a tuple $(k_h,k_w)$ and the `strides` parameter as a tuple $(s_h,s_w)$. The following creates four 3×4 kernels with a vertical stride of $s_h=2$ and a horizontal stride of $s_w=1$:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential, losses, optimizers, datasets

x = tf.random.normal([2, 5, 5, 3])  # Simulated input: 3 channels, height and width of 5
layer = layers.Conv2D(4, kernel_size=(3, 4), strides=(2, 1), padding='SAME')
out = layer(x)
print(out.shape)
```

The output shape is (2, 3, 5, 4): the vertical stride of 2 reduces the height to $\lceil 5/2\rceil=3$, while the width stays 5.
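The tuple arguments also determine the shape of the kernel the layer creates. The sketch below checks that the kernel is stored as $[k_h,k_w,c_{in},c_{out}]$ and that each output dimension follows the `'SAME'` rule $\lceil \text{size}/s\rceil$ for its own stride.

```python
import tensorflow as tf
from tensorflow.keras import layers

x = tf.random.normal([2, 5, 5, 3])
layer = layers.Conv2D(4, kernel_size=(3, 4), strides=(2, 1), padding='SAME')
out = layer(x)

print(layer.kernel.shape)   # stored as [k_h, k_w, c_in, c_out]
print(out.shape)            # height ceil(5 / 2) = 3, width ceil(5 / 1) = 5
```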

After the layer is created, the forward computation is performed simply by calling the instance, for example:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential, losses, optimizers, datasets

x = tf.random.normal([2, 5, 5, 3])  # Simulated input: 3 channels, height and width of 5
layer = layers.Conv2D(4, kernel_size=3, strides=1, padding='SAME')
out = layer(x)
print(out.shape)
```
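Calling the instance repeatedly reuses the same weights. The sketch below shows that a freshly created `Conv2D` holds no weights until its first call builds them, and that a later call on a batch of a different size and resolution creates no new weights; only the channel count $c_{in}=3$ has to stay the same.

```python
import tensorflow as tf
from tensorflow.keras import layers

layer = layers.Conv2D(4, kernel_size=3, strides=1, padding='SAME')
n_before = len(layer.weights)                     # no weights before the first call

out1 = layer(tf.random.normal([2, 5, 5, 3]))      # first call builds kernel and bias
out2 = layer(tf.random.normal([8, 10, 10, 3]))    # reuses them; c_in must still be 3
n_after = len(layer.weights)                      # still one kernel and one bias

print(n_before, n_after, out1.shape, out2.shape)
```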

The Conv2D class stores the kernel tensor $\boldsymbol W$ and the bias $\boldsymbol b$ as member variables. The class member `trainable_variables` directly returns a list containing $\boldsymbol W$ and $\boldsymbol b$, for example:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Sequential, losses, optimizers, datasets

x = tf.random.normal([2, 5, 5, 3])  # Simulated input: 3 channels, height and width of 5
layer = layers.Conv2D(4, kernel_size=3, strides=1, padding='SAME')
out = layer(x)
print(out.shape)
# Print the list of all tensors to be optimized
print(layer.trainable_variables)
```

The output is as follows:

```
(2, 5, 5, 4)
[<tf.Variable 'conv2d/kernel:0' shape=(3, 3, 3, 4) dtype=float32, numpy=
array([[[[-0.06861021, 0.15635735, 0.23594084, 0.08823672],
[-0.07579896, 0.28215882, -0.07285108, 0.15759888],
[-0.04988965, -0.21231258, -0.08478491, 0.16820547]],
...,
dtype=float32)>, <tf.Variable 'conv2d/bias:0' shape=(4,) dtype=float32, numpy=array([0., 0., 0., 0.], dtype=float32)>]
```

`layer.trainable_variables` returns the $\boldsymbol W$ and $\boldsymbol b$ tensors maintained by the Conv2D class; this member is very useful for collecting the variables to be optimized in a network layer. You can also access $\boldsymbol W$ and $\boldsymbol b$ directly through the instance attributes `layer.kernel` and `layer.bias`.
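As a quick consistency check, the sketch below unpacks `trainable_variables` (ordered kernel first, then bias, as in the printed output above) and compares each entry with the `layer.kernel` and `layer.bias` attributes. It also shows that the bias starts at zero under the default `bias_initializer='zeros'`.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

layer = layers.Conv2D(4, kernel_size=3, strides=1, padding='SAME')
layer(tf.random.normal([2, 5, 5, 3]))   # build the layer so W and b exist

kernel_var, bias_var = layer.trainable_variables   # [W, b]
print(np.array_equal(np.array(kernel_var), np.array(layer.kernel)))
print(np.array_equal(np.array(bias_var), np.array(layer.bias)))
print(np.array(layer.bias))   # zeros with the default bias initializer
```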