1, Introduction to web crawlers

1. Introduction

(1) A web crawler, also called a web spider, is a program that automatically browses web pages and collects the required information.

(2) A crawler starts from the URL of an initial web page and obtains the URLs on that page. While fetching pages, it continually extracts new URLs from the current page and puts them into a queue, and it does not stop until a stop condition set by the system is met.
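The queue-driven loop described in (2) can be sketched in a few lines of Python. This is a minimal sketch, not the code used later in this post: to keep it runnable offline, pages come from a hypothetical in-memory dictionary FAKE_WEB instead of HTTP requests, links are extracted with the standard-library html.parser, and the stop condition is assumed to be a maximum page count (max_pages).

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web" standing in for real HTTP responses,
# so the sketch runs without network access.
FAKE_WEB = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "http://example.com/b": '<a href="/">home</a>',
    "http://example.com/c": "no links here",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url):
    # Stand-in for an HTTP GET; returns "" for unknown URLs.
    return FAKE_WEB.get(url, "")


def crawl(start_url, max_pages=10):
    queue = deque([start_url])  # URLs waiting to be fetched
    seen = {start_url}          # avoids queueing the same URL twice
    visited = []                # pages fetched, in crawl order
    while queue and len(visited) < max_pages:  # stop condition
        url = queue.popleft()
        html = fetch(url)
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


print(crawl("http://example.com/"))
```

Using a FIFO queue makes this a breadth-first crawl; the seen set is what keeps the loop from revisiting http://example.com/ when page b links back to it.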

(3) Crawlers download web page data from the Internet for search engines and are an important component of them.

2. Working process of a crawler

(1) URL management module: initiate a request. Requests are generally sent to the target site through an HTTP library, which is equivalent to opening a browser and entering a web address.

(2) Download module: get the response. If the requested content exists on the server, the server returns it; typical responses are HTML, binary files (video, audio), documents, JSON strings, etc.

(3) Parsing module: parse the content. For a user, this means finding the information they need; for a Python crawler, it means extracting the target information with regular expressions or parsing libraries.

(4) Storage module: save the data. The parsed data can be saved locally in many forms, such as text, audio, and video.
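The four modules can be illustrated with the standard library alone. In this sketch, the URL template http://example.com/list-{page}.htm and the SAMPLE_HTML page are hypothetical, and download is a stand-in that returns the sample instead of making a real request. build_urls plays the URL management role, parse uses a regular expression, and save writes CSV.

```python
import csv
import io
import re

# Hypothetical downloaded page; a real crawler would get this from an HTTP response.
SAMPLE_HTML = """
<table>
  <tr><td>1000</td><td>A+B Problem</td></tr>
  <tr><td>1001</td><td>Hello World</td></tr>
</table>
"""


def build_urls(template, first, last):
    """URL management: generate the page URLs to request."""
    return [template.format(page=p) for p in range(first, last + 1)]


def download(url):
    """Download: stand-in that returns the same sample page for any URL."""
    return SAMPLE_HTML


def parse(html):
    """Parsing: pull (id, title) pairs out of each table row with a regex."""
    row_re = re.compile(r"<tr><td>(.*?)</td><td>(.*?)</td></tr>")
    return row_re.findall(html)


def save(rows, out):
    """Storage: write rows as CSV to any text file-like object."""
    writer = csv.writer(out)
    writer.writerow(["id", "title"])
    writer.writerows(rows)


urls = build_urls("http://example.com/list-{page}.htm", 1, 3)
rows = parse(download(urls[0]))
buffer = io.StringIO()  # in-memory file so the sketch writes nothing to disk
save(rows, buffer)
print(urls)
print(buffer.getvalue())
```

The regex only matches rows written exactly as in the sample; real pages vary in whitespace and attributes, which is why the crawlers in the following sections use BeautifulSoup instead.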

2, Crawling the ACM problem website of Nanyang Institute of Technology

1. Open the web page http://www.51mxd.cn/problemset.php-page=1.htm , check the page source code, and you can see that the information we want to crawl is in the TD tab

2. Create a new file and write the code

import requests# Import web request Library

from bs4 import BeautifulSoup# Import web page parsing library

import csv

from tqdm import tqdm

# Simulate browser access

Headers = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400'

# Header

csvHeaders = ['Question number', 'difficulty', 'title', 'Passing rate', 'Number of passes/Total submissions']

# Topic data

subjects = []

# Crawling problem

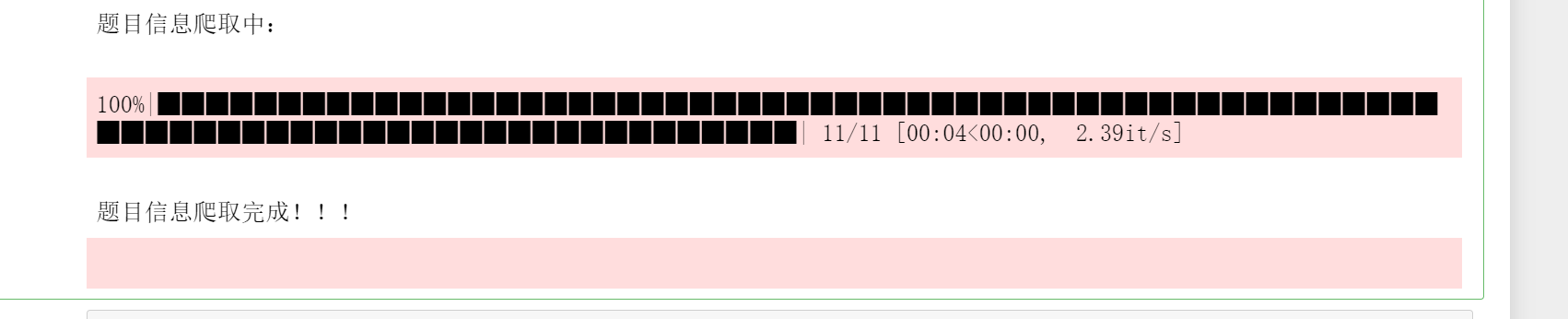

print('Topic information crawling:\n')

for pages in tqdm(range(1, 11 + 1)):

# Incoming URL

r = requests.get(f'http://www.51mxd.cn/problemset.php-page={pages}.htm', Headers)

r.raise_for_status()

r.encoding = 'utf-8'

# Resolve URL

soup = BeautifulSoup(r.text, 'html5lib')

#Find and crawl everything related to td

td = soup.find_all('td')

subject = []

for t in td:

if t.string is not None:

subject.append(t.string)

if len(subject) == 5:

subjects.append(subject)

subject = []

# Storage topic

with open('D:\zhangyun1.csv', 'w', newline='') as file:

fileWriter = csv.writer(file)

fileWriter.writerow(csvHeaders)

fileWriter.writerows(subjects)

print('\n Topic information crawling completed!!!')

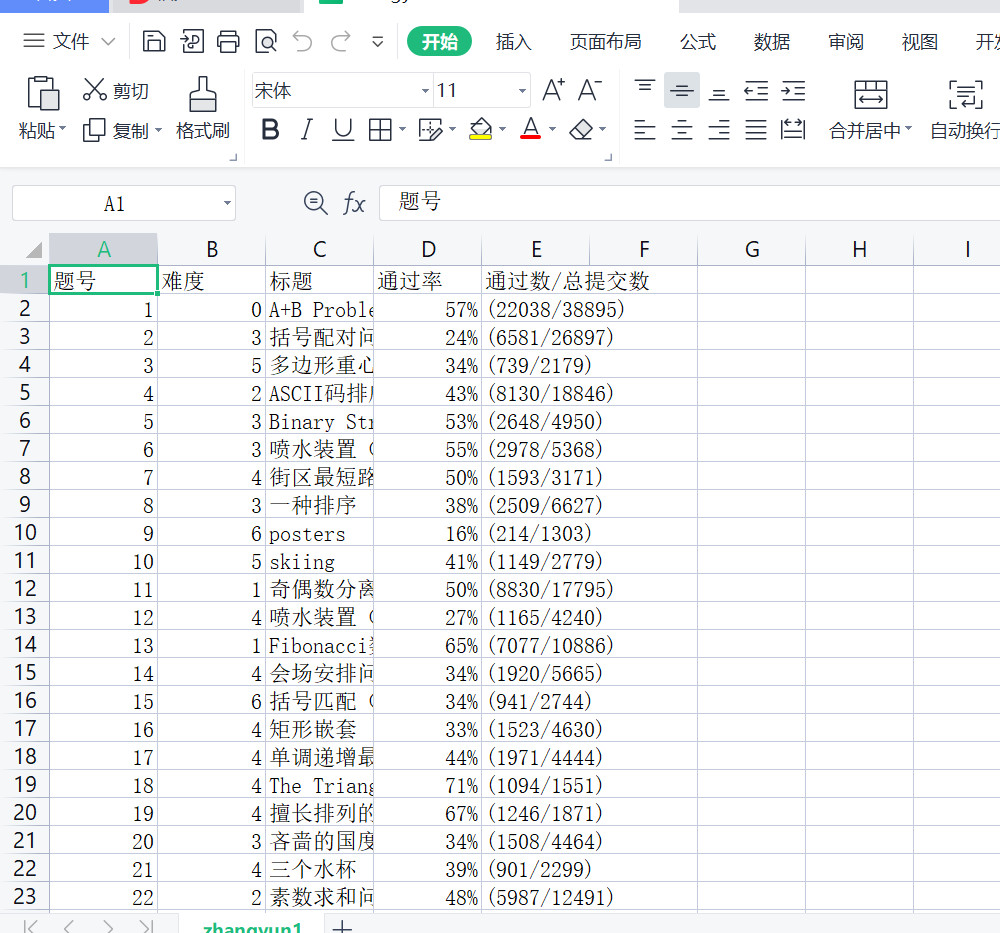

Contents of the generated zhangyun1.csv file
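Counting off five non-empty td strings works as long as every cell holds plain text. BeautifulSoup's .string is None when a tag has more than one child (for example, text followed by a span), so a single such cell is skipped and the remaining rows on that page shift by one field. A more robust approach, swapped in here for illustration, is to group cells by their enclosing tr. This sketch uses the standard-library html.parser so it runs without extra packages; the table markup is hypothetical, not copied from the real site.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collects the text of each <td> cell, grouped by its enclosing <tr>."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows: list of lists of cell text
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Text inside nested tags (a, span, ...) still belongs to the open cell
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # skip header rows that contain only <th> cells
                self.rows.append(self._row)
            self._row = None


# Hypothetical markup: the title cell nests an <a>, and the pass-rate cell
# mixes text with a <span>, which would make BeautifulSoup's .string None.
html = """
<table>
  <tr><th>ID</th><th>Difficulty</th><th>Title</th><th>Rate</th><th>Passes</th></tr>
  <tr><td>1000</td><td>Easy</td><td><a href="#">A+B</a></td><td>50% <span>(1/2)</span></td><td>1/2</td></tr>
</table>
"""

table_parser = TableRows()
table_parser.feed(html)
print(table_parser.rows)
```

Each row list can then be passed straight to csv.writer.writerows, with no counter to keep in sync.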

3, Crawling the notices on the school's official news website

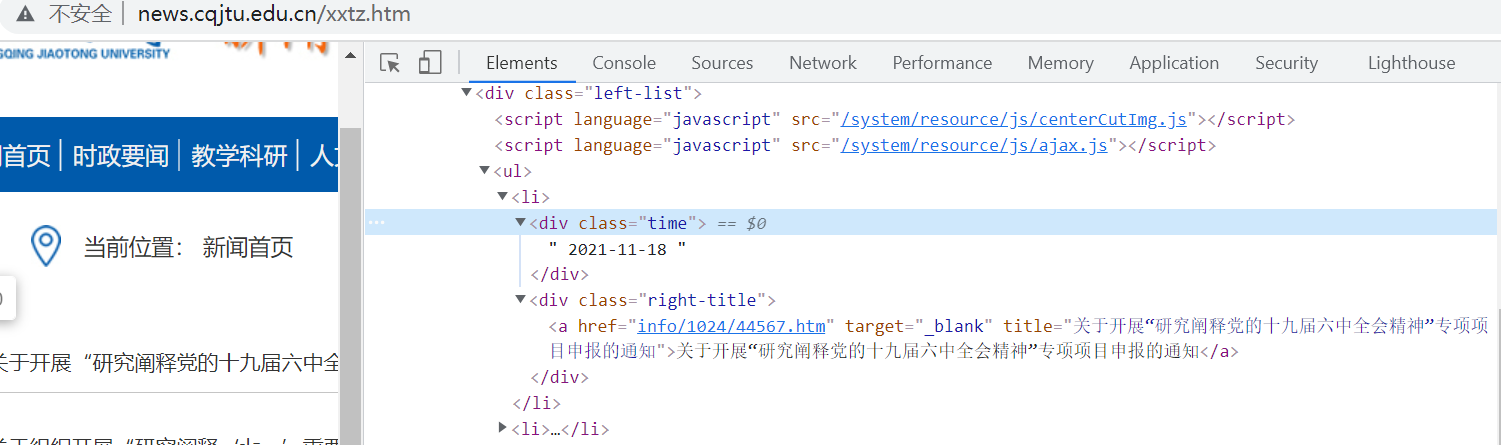

1. Analyze the page source. First open http://news.cqjtu.edu.cn/xxtz.htm; this time the information to collect is the publication time and title of every notice.

2. Press F12 to open the browser's developer tools and locate the content to be crawled.
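Judging from the selectors used in the code below, each notice is assumed to be an li element containing a div with class time and a div with class right-title that wraps an a link with target="_blank". The following standard-library sketch pulls (time, title) pairs out of markup of that shape. The sample HTML, its dates, and its titles are hypothetical placeholders, and the real page may nest elements differently (for instance, a div nested inside right-title would end the title region early in this sketch).

```python
from html.parser import HTMLParser


class NoticeParser(HTMLParser):
    """Collects (time, title) pairs from <li> items that contain a
    <div class="time"> and a <div class="right-title"> wrapping an <a>."""

    def __init__(self):
        super().__init__()
        self.notices = []
        self._item = None       # {"time": [...], "title": [...]} for the current <li>
        self._field = None      # which field's text is currently being collected
        self._in_title = False  # inside <div class="right-title">?

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "li":
            self._item = {"time": [], "title": []}
        elif self._item is None:
            return
        elif tag == "div" and "time" in classes:
            self._field = "time"
        elif tag == "div" and "right-title" in classes:
            self._in_title = True
        elif tag == "a" and self._in_title and attrs.get("target") == "_blank":
            self._field = "title"

    def handle_data(self, data):
        if self._item is not None and self._field:
            self._item[self._field].append(data)

    def handle_endtag(self, tag):
        if tag in ("a", "div"):
            self._field = None
            if tag == "div":
                self._in_title = False
        elif tag == "li" and self._item is not None:
            time_text = "".join(self._item["time"]).strip()
            title_text = "".join(self._item["title"]).strip()
            if time_text and title_text:  # keep only complete notices
                self.notices.append((time_text, title_text))
            self._item = None


# Hypothetical markup; the last <li> mimics a navigation link and is skipped.
SAMPLE = """
<ul>
  <li><div class="time">2021-11-17</div>
      <div class="right-title"><a href="#" target="_blank">Notice A</a></div></li>
  <li><div class="time">2021-11-10</div>
      <div class="right-title"><a href="#" target="_blank">Notice B</a></div></li>
  <li><a href="#">Navigation link</a></li>
</ul>
"""

notice_parser = NoticeParser()
notice_parser.feed(SAMPLE)
print(notice_parser.notices)
```

Requiring both fields before saving a pair plays the same role as the "contains both divs" check in the BeautifulSoup version: it filters out li elements that belong to menus rather than to the notice list.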

3. Code implementation

```python
# -*- coding: utf-8 -*-
"""
Created on Wed Nov 17 14:39:03 2021

@author: 86199
"""
import csv
import urllib.error
import urllib.request  # fetch web page data by URL

from bs4 import BeautifulSoup
from tqdm import tqdm

# All news items
subjects = []

# Simulate browser header information
Headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36 Edg/95.0.1020.53"
}

# CSV header row
csvHeaders = ['time', 'title']

print('Crawling information:\n')
for pages in tqdm(range(1, 65 + 1)):
    # Build the request for one page of the notice list
    request = urllib.request.Request(f'http://news.cqjtu.edu.cn/xxtz/{pages}.htm', headers=Headers)
    html = ""
    # If the request succeeds, read the page content
    try:
        response = urllib.request.urlopen(request)
        html = response.read().decode("utf-8")
    except urllib.error.URLError as e:
        if hasattr(e, "code"):
            print(e.code)
        if hasattr(e, "reason"):
            print(e.reason)
    # Parse the page
    soup = BeautifulSoup(html, 'html5lib')
    # Holds one news item
    subject = []
    # Find all li tags
    for item in soup.find_all('li'):
        # find_all returns a list (never None), so test that both are non-empty
        if item.find_all('div', class_="time") and item.find_all('div', class_="right-title"):
            # time
            for time_div in item.find_all('div', class_="time"):
                subject.append(time_div.string)
            # title
            for title in item.find_all('div', class_="right-title"):
                for t in title.find_all('a', target="_blank"):
                    subject.append(t.string)
            if subject:
                print(subject)
                subjects.append(subject)
            subject = []

# Save data (raw string so the backslash is not treated as an escape)
with open(r'D:\cqjtu.csv', 'w', newline='', encoding='utf-8') as file:
    fileWriter = csv.writer(file)
    fileWriter.writerow(csvHeaders)
    fileWriter.writerows(subjects)

print('\nInformation crawling completed!!!')
```

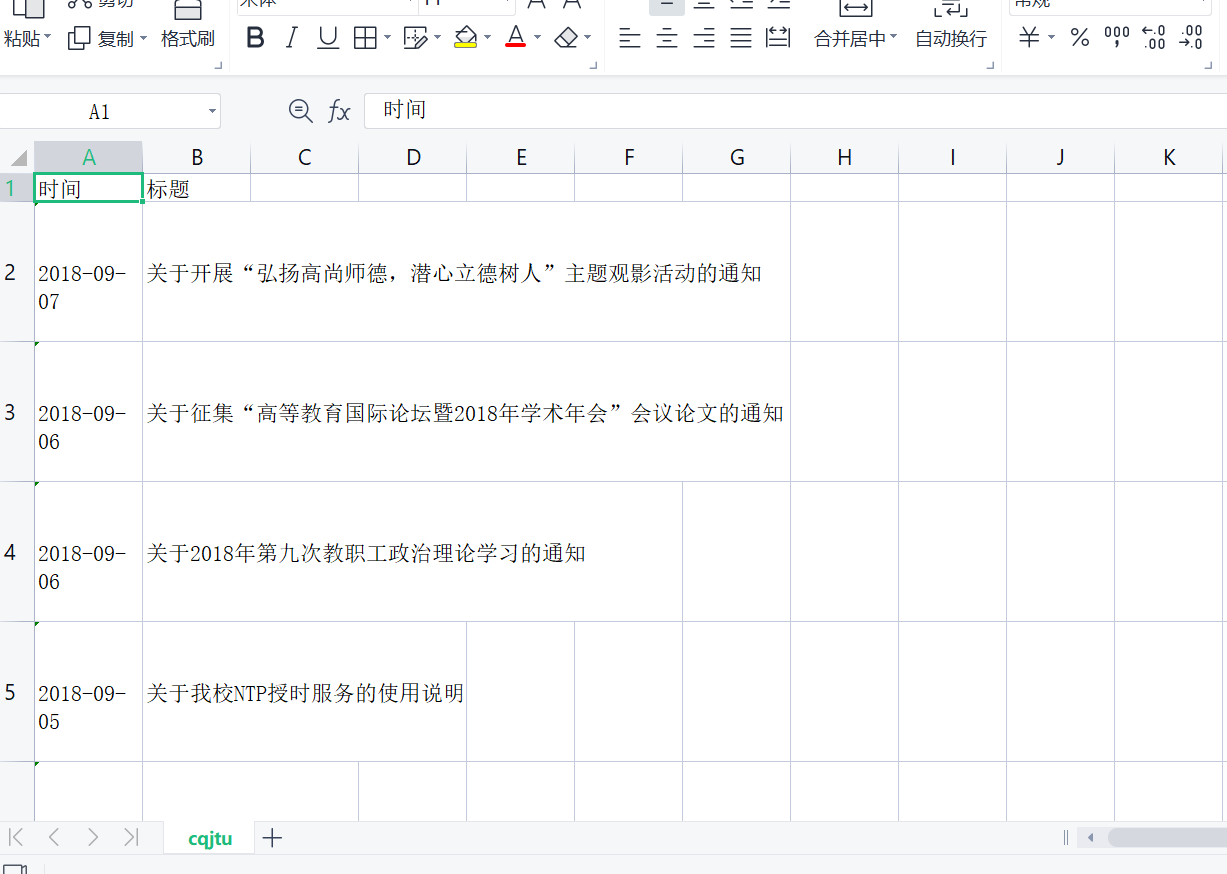

Open the generated cqjtu.csv file
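If the CSV is opened in Excel and the Chinese titles look garbled, a likely cause is that Excel does not assume UTF-8 for a CSV file without a byte-order mark (BOM). A minimal sketch of the usual workaround, writing with Python's utf-8-sig encoding, follows; the rows and the temporary output path are hypothetical.

```python
import csv
import os
import tempfile

# Hypothetical rows in the same (time, title) shape as cqjtu.csv
rows = [("2021-11-17", "通知 A"), ("2021-11-10", "通知 B")]

path = os.path.join(tempfile.mkdtemp(), "cqjtu_demo.csv")  # hypothetical output path

# 'utf-8-sig' writes a UTF-8 byte-order mark at the start of the file,
# which Excel uses to recognise the encoding.
with open(path, "w", newline="", encoding="utf-8-sig") as f:
    writer = csv.writer(f)
    writer.writerow(["time", "title"])
    writer.writerows(rows)

with open(path, "rb") as f:
    raw = f.read()
print(raw[:3])  # the BOM bytes

# Reading back with 'utf-8-sig' strips the BOM again
with open(path, newline="", encoding="utf-8-sig") as f:
    read_back = list(csv.reader(f))
print(read_back)
```

Plain 'utf-8' remains the better choice when the file is consumed only by other programs, since a BOM can end up glued to the first header name in tools that do not expect it.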

4, Summary

This experiment mainly consisted of studying the example code and writing detailed comments on its key statements, then completing two programs: one that practises crawling and saving problem data from the ACM problem website of Nanyang Institute of Technology (http://www.51mxd.cn/), and a rewrite of the example crawler that collects the release date and title of every notice published in recent years on the news website of Chongqing Jiaotong University (http://news.cqjtu.edu.cn/xxtz.htm) and writes them to a CSV spreadsheet.

5, Reference link

https://blog.csdn.net/weixin_56102526/article/details/121366806?spm=1001.2014.3001.5501