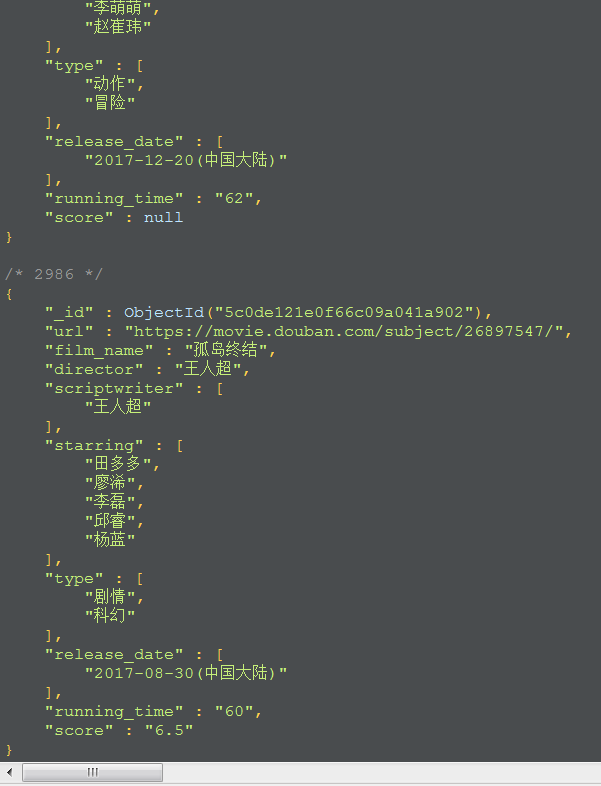

Yesterday, I wrote a little reptile, which crawled the film information of 2017 mainland China on Douban. The website is Douban film , crawled the movie name, director, screenwriter, star, type, release time, length, score and link, and saved them in MongoDB.

The IP address of the machine used at the beginning, without proxy IP, can't receive data after requesting more than a dozen Web pages, reporting HTTP error 302, and then using a browser to open the web page to try, and found that the browser is also 302...

But I am not afraid, I have proxy IP, ha ha ha! See my previous essay for details: Crawling agent IP.

After using the proxy IP, you can continue to receive data, but there are still 302 errors in the middle. It's OK. It's OK to use another proxy IP to request a new request. If you can't do it again, please do it again. If you can't do it again, please do it again. If you can't do it again, please do it again...

Please attach some codes below.

1. Crawler file

import scrapy

import json

from douban.items import DoubanItem

parse_url = "https://movie.douban.com/j/new_search_subjects?sort=U&range=0,10&tags=%E7%94%B5%E5%BD%B1&start={}&countries=%E4%B8%AD%E5%9B%BD%E5%A4%A7%E9%99%86&year_range=2017,2017"

class Cn2017Spider(scrapy.Spider):

name = 'cn2017'

allowed_domains = ['douban.com']

start_urls = ['https://movie.douban.com/j/new_search_subjects?sort=U&range=0,10&tags=%E7%94%B5%E5%BD%B1&start=0&countries=%E4%B8%AD%E5%9B%BD%E5%A4%A7%E9%99%86&year_range=2017,2017']

def parse(self, response):

data = json.loads(response.body.decode())

if data is not None:

for film in data["data"]:

print(film["url"])

item = DoubanItem()

item["url"] = film["url"]

yield scrapy.Request(

film["url"],

callback=self.get_detail_content,

meta={"item": item}

)

for page in range(20,3200,20):

yield scrapy.Request(

parse_url.format(page),

callback=self.parse

)

def get_detail_content(self,response):

item = response.meta["item"]

item["film_name"] = response.xpath("//div[@id='content']//span[@property='v:itemreviewed']/text()").extract_first()

item["director"] = response.xpath("//div[@id='info']/span[1]/span[2]/a/text()").extract_first()

item["scriptwriter"] = response.xpath("///div[@id='info']/span[2]/span[2]/a/text()").extract()

item["starring"] = response.xpath("//div[@id='info']/span[3]/span[2]/a[position()<6]/text()").extract()

item["type"] = response.xpath("//div[@id='info']/span[@property='v:genre']/text()").extract()

item["release_date"] = response.xpath("//div[@id='info']/span[@property='v:initialReleaseDate']/text()").extract()

item["running_time"] = response.xpath("//div[@id='info']/span[@property='v:runtime']/@content").extract_first()

item["score"] = response.xpath("//div[@class='rating_self clearfix']/strong/text()").extract_first()

# print(item)

if item["film_name"] is None:

# print("*" * 100)

yield scrapy.Request(

item["url"],

callback=self.get_detail_content,

meta={"item": item},

dont_filter=True

)

else:

yield item2.items.py file

import scrapy

class DoubanItem(scrapy.Item):

#Movie title

film_name = scrapy.Field()

#director

director = scrapy.Field()

#Screenwriter

scriptwriter = scrapy.Field()

#To star

starring = scrapy.Field()

#type

type = scrapy.Field()

#Release time

release_date = scrapy.Field()

#Film length

running_time = scrapy.Field()

#score

score = scrapy.Field()

#link

url = scrapy.Field()3. Middlewars.py file

from douban.settings import USER_AGENT_LIST

import random

import pandas as pd

class UserAgentMiddleware(object):

def process_request(self, request, spider):

user_agent = random.choice(USER_AGENT_LIST)

request.headers["User-Agent"] = user_agent

return None

class ProxyMiddleware(object):

def process_request(self, request, spider):

# Called for each request that goes through the downloader

# middleware.

ip_df = pd.read_csv(r"C:\Users\Administrator\Desktop\douban\douban\ip.csv")

ip = random.choice(ip_df.loc[:, "ip"])

request.meta["proxy"] = "http://" + ip

return None4.pipelines.py file

from pymongo import MongoClient

client = MongoClient()

collection = client["test"]["douban"]

class DoubanPipeline(object):

def process_item(self, item, spider):

collection.insert(dict(item))5.settings.py file

DOWNLOADER_MIDDLEWARES = {

'douban.middlewares.UserAgentMiddleware': 543,

'douban.middlewares.ProxyMiddleware': 544,

}

ITEM_PIPELINES = {

'douban.pipelines.DoubanPipeline': 300,

}

ROBOTSTXT_OBEY = False

DOWNLOAD_TIMEOUT = 10

RETRY_ENABLED = True

RETRY_TIMES = 10The program runs for 1 hour, 20 minutes, 21.473772 seconds and captures 2986 pieces of data.

Last,

Or happy duck every day!