cache

1. Introduction

1. What is Cache?

- A cache is temporary data held in memory.

- Data that users query frequently is placed in the cache (memory), so queries read it from the cache instead of from disk (the relational database's data files). This improves query efficiency and eases the performance problems of high-concurrency systems.

2. Why use a cache?

- Reduce the number of interactions with the database, reduce system overhead and improve system efficiency.

3. What kind of data can be cached?

- Data that is queried frequently and changed infrequently is a good candidate for caching.
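The idea of answering repeated queries from memory can be sketched in plain Java. This is only an illustration under stated assumptions: `ReadThroughCache`, its `loader` function (a stand-in for a database query), and `loadCount` are hypothetical names, not part of MyBatis.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Read-through cache sketch: look in memory first, fall back to the slow source. */
class ReadThroughCache<K, V> {
    private final Map<K, V> store = new HashMap<>();
    private final Function<K, V> loader; // hypothetical stand-in for a database query
    private int loads = 0;               // how many times the slow source was used

    ReadThroughCache(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        V cached = store.get(key);
        if (cached != null) {
            return cached;                // cache hit: no database access
        }
        loads++;
        V loaded = loader.apply(key);     // cache miss: query the slow source
        store.put(key, loaded);
        return loaded;
    }

    int loadCount() {
        return loads;
    }

    public static void main(String[] args) {
        ReadThroughCache<Integer, String> cache =
                new ReadThroughCache<>(id -> "user-" + id);
        cache.get(1);
        cache.get(1); // answered from memory
        cache.get(2);
        System.out.println("slow lookups: " + cache.loadCount()); // slow lookups: 2
    }
}
```

Three reads cost only two slow lookups here; the more often the same key is read between changes, the larger the saving.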

2. MyBatis cache

- MyBatis includes a powerful query caching feature that is easy to configure and customize. Caching can greatly improve query efficiency.

MyBatis defines two levels of cache by default: the L1 cache and the L2 cache.

- By default, only the L1 cache is enabled. (It is a SqlSession-level cache, also known as the local cache.)

- The L2 cache must be enabled and configured manually. It is a namespace-level cache.

- For extensibility, MyBatis defines the Cache interface. We can provide a custom L2 cache by implementing this interface.

3. L1 cache

The L1 cache is also called the local cache:

- Data queried during one session with the database is placed in the local cache.

- If the same data is needed again later in that session, it is read directly from the cache, with no further database query.

Test steps:

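1. Create the submodule mybatis-09

2. Copy over the configuration files and the utility class, and add the User entity class

```java
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

// Lombok generates getters, setters, toString, and both constructors
@Data
@NoArgsConstructor
@AllArgsConstructor
public class User {
    private int id;
    private String name;
    private String pwd;
}
```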

3. Enable logging

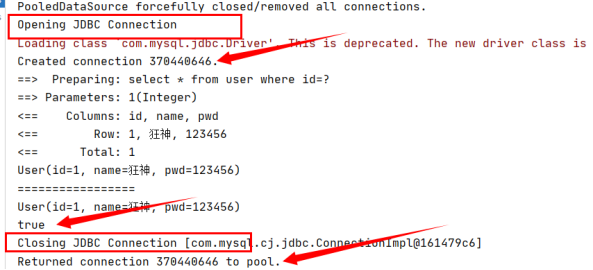

4. In a test, query the same record twice within one SqlSession

5. View log output

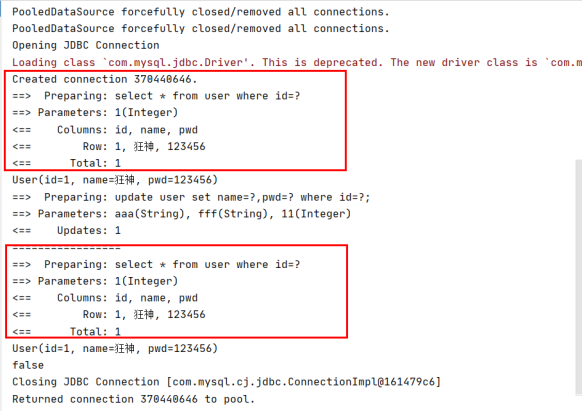

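Cache invalidation. The L1 cache does not serve a query in these cases:

1. The query asks for different data.

2. An insert, update, or delete has run. These statements may change the underlying data, so the cache is flushed.

3. The query goes through a different Mapper.xml.

4. The cache has been cleared manually.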

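```java
@Test
public void test() {
    SqlSession sqlSession = MybatisUtils.getSqlSession();
    UserMapper mapper = sqlSession.getMapper(UserMapper.class);

    // First query: goes to the database and fills the L1 cache
    User user = mapper.queryUserById(1);
    System.out.println(user);

    // Uncomment to see that a write also flushes the cache
    // mapper.updateUser(new User(11, "aaa", "fff"));

    // Manually clear the L1 cache
    sqlSession.clearCache();
    System.out.println("=================");

    // Second query: the cache is empty, so the database is queried again
    User user2 = mapper.queryUserById(1);
    System.out.println(user2);
    // false: the second query built a new object
    System.out.println(user == user2);

    sqlSession.close();
}
```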

Summary:

The L1 cache is enabled by default and is valid only within a single SqlSession, that is, from the moment the connection is obtained until it is closed. Internally, the L1 cache is essentially a Map.
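The behaviour summarized above can be modelled with a plain Map. The following is a toy sketch, not MyBatis code: `L1CacheSketch`, `Session`, `databaseHits`, and the `table` map standing in for the database are all hypothetical names chosen for illustration.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy model of a session-scoped (L1) cache. Not MyBatis code. */
class L1CacheSketch {
    static final class User {
        final int id;
        final String name;

        User(int id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    /** Hypothetical session: each instance owns its own local cache. */
    static final class Session {
        private final Map<Integer, String> table;                 // stand-in for the database
        private final Map<Integer, User> localCache = new HashMap<>();
        int databaseHits = 0;

        Session(Map<Integer, String> table) {
            this.table = table;
        }

        User queryUserById(int id) {
            User cached = localCache.get(id);
            if (cached != null) {
                return cached;            // same session, same query: reuse the object
            }
            databaseHits++;
            User user = new User(id, table.get(id));
            localCache.put(id, user);
            return user;
        }

        void updateUser(int id, String name) {
            table.put(id, name);
            localCache.clear();           // any write flushes the local cache
        }

        void clearCache() {
            localCache.clear();           // manual invalidation
        }
    }

    public static void main(String[] args) {
        Map<Integer, String> table = new HashMap<>();
        table.put(1, "aaa");
        Session session = new Session(table);

        User first = session.queryUserById(1);
        User second = session.queryUserById(1);
        System.out.println(first == second);      // true: served from the local cache

        session.clearCache();
        User third = session.queryUserById(1);
        System.out.println(first == third);       // false: loaded again after clearing
        System.out.println(session.databaseHits); // 2
    }
}
```

Because the map is a field of the session object, a second `Session` starts with an empty cache, which mirrors the "only valid for one SqlSession" scope.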

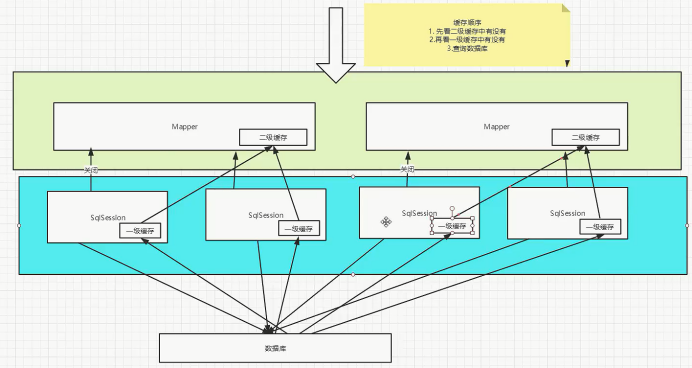

4. L2 cache

- The L2 cache is also called the global cache. The scope of the L1 cache is too narrow, which is why the L2 cache exists.

- It is a namespace-level cache: each namespace has its own L2 cache.

Working mechanism:

- When a session queries a piece of data, the data is placed in that session's L1 cache.

- When the session is closed, its L1 cache is gone. With the L2 cache enabled, the data in the L1 cache is saved to the L2 cache when the session closes.

- A new session that runs the same query can then get the data from the L2 cache.

- Data queried through different mappers is stored in each mapper's own cache (map).
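The hand-off from a closing session to a shared cache can be sketched as follows. This is a simplified model under stated assumptions, not MyBatis's implementation: `L2CacheSketch`, `NamespaceCache`, `Session`, and `databaseHits` are hypothetical names, and a real L2 cache keys entries by statement and parameters rather than by id alone.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy model of an L1 cache per session plus one shared L2 cache per namespace. */
class L2CacheSketch {
    static final class User {
        final int id;
        final String name;

        User(int id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    /** Hypothetical shared cache for one mapper namespace; it outlives sessions. */
    static final class NamespaceCache {
        final Map<Integer, User> entries = new HashMap<>();
    }

    static final class Session {
        private final Map<Integer, String> table;   // stand-in for the database
        private final NamespaceCache shared;
        private final Map<Integer, User> localCache = new HashMap<>();
        int databaseHits = 0;

        Session(Map<Integer, String> table, NamespaceCache shared) {
            this.table = table;
            this.shared = shared;
        }

        User queryUserById(int id) {
            User user = shared.entries.get(id);      // 1. shared (L2) cache
            if (user == null) {
                user = localCache.get(id);           // 2. this session's L1 cache
            }
            if (user == null) {                      // 3. the database
                databaseHits++;
                user = new User(id, table.get(id));
                localCache.put(id, user);
            }
            return user;
        }

        /** Closing the session publishes its results to the shared cache. */
        void close() {
            shared.entries.putAll(localCache);
            localCache.clear();
        }
    }

    public static void main(String[] args) {
        Map<Integer, String> table = new HashMap<>();
        table.put(1, "aaa");
        NamespaceCache userMapperCache = new NamespaceCache();

        Session first = new Session(table, userMapperCache);
        first.queryUserById(1);
        first.close();                               // results move to the shared cache

        Session second = new Session(table, userMapperCache);
        second.queryUserById(1);
        System.out.println(second.databaseHits);     // 0: answered by the shared cache
    }
}
```

If the second session queried before the first one closed, it would still hit the database, since nothing has been published to the shared cache yet.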

Steps:

1. Enable the global cache in the MyBatis configuration file

```xml
<!-- Explicitly enable the global cache (true is already the default) -->
<setting name="cacheEnabled" value="true"/>
```

2. Enable the L2 cache in each Mapper.xml that should use it

```xml
<!-- Use the L2 cache in this Mapper.xml -->
<cache/>
```

You can also customize its parameters:

```xml
<!-- Use the L2 cache in this Mapper.xml, with custom settings -->
<cache eviction="FIFO"
       flushInterval="60000"
       size="512"
       readOnly="true"/>
```

3. Testing

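```java
@Test
public void test() {
    // Two independent sessions that use the same mapper namespace
    SqlSession sqlSession = MybatisUtils.getSqlSession();
    UserMapper mapper = sqlSession.getMapper(UserMapper.class);
    SqlSession sqlSession2 = MybatisUtils.getSqlSession();
    UserMapper mapper2 = sqlSession2.getMapper(UserMapper.class);

    User user = mapper.queryUserById(1);
    System.out.println(user);
    // Closing the first session moves its results into the L2 cache
    sqlSession.close();

    // Served from the L2 cache: the log shows no second SQL statement
    User user2 = mapper2.queryUserById(1);
    System.out.println(user2);
    // true with readOnly="true"; a read-write cache returns a deserialized copy, so false
    System.out.println(user == user2);

    sqlSession2.close();
}
```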

Problem: with a read-write cache (a plain `<cache/>`, where readOnly is not set to true), the entity class must implement java.io.Serializable. Otherwise an error is reported:

Caused by: java.io.NotSerializableException: com.kuang.pojo.User
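The error comes from Java serialization itself, which can be reproduced without MyBatis. The sketch below is an illustration only: `SerializableUserSketch`, `PlainUser`, and `roundTrip` are hypothetical names, and the round trip merely imitates what a cache that stores serialized copies has to do with each entry.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.NotSerializableException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

class SerializableUserSketch {
    /** Entity that a serializing cache can store. */
    static final class User implements Serializable {
        private static final long serialVersionUID = 1L;
        final int id;
        final String name;
        final String pwd;

        User(int id, String name, String pwd) {
            this.id = id;
            this.name = name;
            this.pwd = pwd;
        }
    }

    /** Entity without the Serializable marker interface. */
    static final class PlainUser {
        final int id;

        PlainUser(int id) {
            this.id = id;
        }
    }

    /** Writes the value to bytes and reads it back, producing an independent copy. */
    static Object roundTrip(Object value) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value); // throws NotSerializableException for PlainUser
        }
        try (ObjectInputStream in =
                     new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        User original = new User(1, "aaa", "fff");
        User copy = (User) roundTrip(original);
        System.out.println(copy.name);        // aaa
        System.out.println(copy == original); // false: an equal but separate object

        try {
            roundTrip(new PlainUser(1));
        } catch (NotSerializableException e) {
            System.out.println("cannot serialize: " + e.getMessage());
        }
    }
}
```

In the tutorial's project the fix is the same one-line change: declare `public class User implements Serializable`.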

Summary:

- Once the L2 cache is enabled, it applies to all sessions that use the same Mapper (namespace).

- All data is placed in the L1 cache first.

- Only when the session is committed or closed is the data moved to the L2 cache.

5. Cache principle

6. Custom cache: Ehcache

Ehcache is a widely used open-source Java distributed cache, intended mainly for general-purpose caching.

To use Ehcache in the program, first add the dependency:

```xml
<!-- https://mvnrepository.com/artifact/org.mybatis/mybatis-ehcache -->
<dependency>
    <groupId>org.mybatis</groupId>
    <artifactId>mybatis-ehcache</artifactId>
    <version>1.0.0</version>
</dependency>
```

Specify the Ehcache cache implementation in the mapper:

```xml
<!-- Use Ehcache as the L2 cache in this Mapper.xml -->
<cache type="org.mybatis.caches.ehcache.EhcacheCache"/>
```

ehcache.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<ehcache xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:noNamespaceSchemaLocation="http://ehcache.org/ehcache.xsd"
         updateCheck="false">

    <!--
      diskStore: the cache path. Ehcache has two storage tiers, memory and disk;
      this element sets where the disk tier is stored. Besides a literal path,
      the following system properties can be used:
        user.home      : the user's home directory
        user.dir       : the user's current working directory
        java.io.tmpdir : the default temporary file path
    -->
    <diskStore path="./tmpdir/Tmp_EhCache"/>

    <!--
      defaultCache: the default cache policy. Ehcache uses it when it cannot
      find a cache defined under the requested name. Only one can be defined.
    -->
    <defaultCache
            eternal="false"
            maxElementsInMemory="10000"
            overflowToDisk="false"
            diskPersistent="false"
            timeToIdleSeconds="1800"
            timeToLiveSeconds="259200"
            memoryStoreEvictionPolicy="LRU"/>

    <cache
            name="cloud_user"
            eternal="false"
            maxElementsInMemory="5000"
            overflowToDisk="false"
            diskPersistent="false"
            timeToIdleSeconds="1800"
            timeToLiveSeconds="1800"
            memoryStoreEvictionPolicy="LRU"/>

    <!--
      Attribute reference:

      name: the cache name.
      maxElementsInMemory: maximum number of elements held in memory.
      maxElementsOnDisk: maximum number of elements held on disk.
      eternal: whether elements are valid forever. When true, the timeout
        settings below have no effect.
      overflowToDisk: whether elements overflow to disk once the number of
        in-memory elements reaches maxElementsInMemory.
      timeToIdleSeconds: maximum idle time (in seconds) before an element
        expires. Used only when eternal=false. Optional; the default is 0,
        meaning an element may stay idle indefinitely.
      timeToLiveSeconds: maximum time (in seconds) between an element's
        creation and its expiry. Used only when eternal=false. The default
        is 0, meaning an element lives indefinitely.
      diskPersistent: whether the disk store persists between restarts of
        the virtual machine. The default is false.
      diskSpoolBufferSizeMB: size of the DiskStore spool buffer. The default
        is 30 MB. Each cache has its own buffer.
      diskExpiryThreadIntervalSeconds: interval between runs of the disk
        expiry thread. The default is 120 seconds.
      clearOnFlush: whether the memory store is cleared when the cache is
        flushed.
      memoryStoreEvictionPolicy: how Ehcache frees memory once
        maxElementsInMemory is reached. The options are LRU (least recently
        used, the default), FIFO (first in, first out), and LFU (least
        frequently used).

      FIFO, First In First Out: the element that entered the cache first is
        evicted first.
      LFU, Least Frequently Used: each cached element has a hit count, and
        the element with the smallest hit count is evicted.
      LRU, Least Recently Used: each cached element has a timestamp. When
        the cache is full and room is needed for a new element, the element
        whose timestamp is oldest is evicted.
    -->

</ehcache>
```
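The difference between the LRU and FIFO policies described in the configuration comments can be seen in a few lines of Java. This is a minimal sketch built on `java.util.LinkedHashMap`, and it does not show how Ehcache implements eviction; `EvictingCache` and its `lru` flag are hypothetical names.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Bounded map that evicts one entry whenever it grows past its capacity. */
class EvictingCache<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    /**
     * @param capacity maximum number of entries to keep
     * @param lru      true to evict the least recently used entry (LRU),
     *                 false to evict the oldest inserted entry (FIFO)
     */
    EvictingCache(int capacity, boolean lru) {
        super(16, 0.75f, lru); // accessOrder=true makes get() move an entry to the end
        this.capacity = capacity;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity; // called by put(); drops the entry at the front
    }

    public static void main(String[] args) {
        EvictingCache<String, Integer> lru = new EvictingCache<>(2, true);
        lru.put("a", 1);
        lru.put("b", 2);
        lru.get("a");                      // "a" becomes the most recently used
        lru.put("c", 3);                   // evicts "b"
        System.out.println(lru.keySet());  // [a, c]

        EvictingCache<String, Integer> fifo = new EvictingCache<>(2, false);
        fifo.put("a", 1);
        fifo.put("b", 2);
        fifo.get("a");                     // reads do not change insertion order
        fifo.put("c", 3);                  // evicts "a", the first one in
        System.out.println(fifo.keySet()); // [b, c]
    }
}
```

The same sequence of operations keeps different entries under each policy: LRU rewards the recently read "a", while FIFO discards it because it was inserted first.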