Create Ceph file system

Building the Ceph cluster itself is not covered here. For that, see:

https://blog.csdn.net/mengshicheng1992/article/details/120567117

1. Create Ceph file system

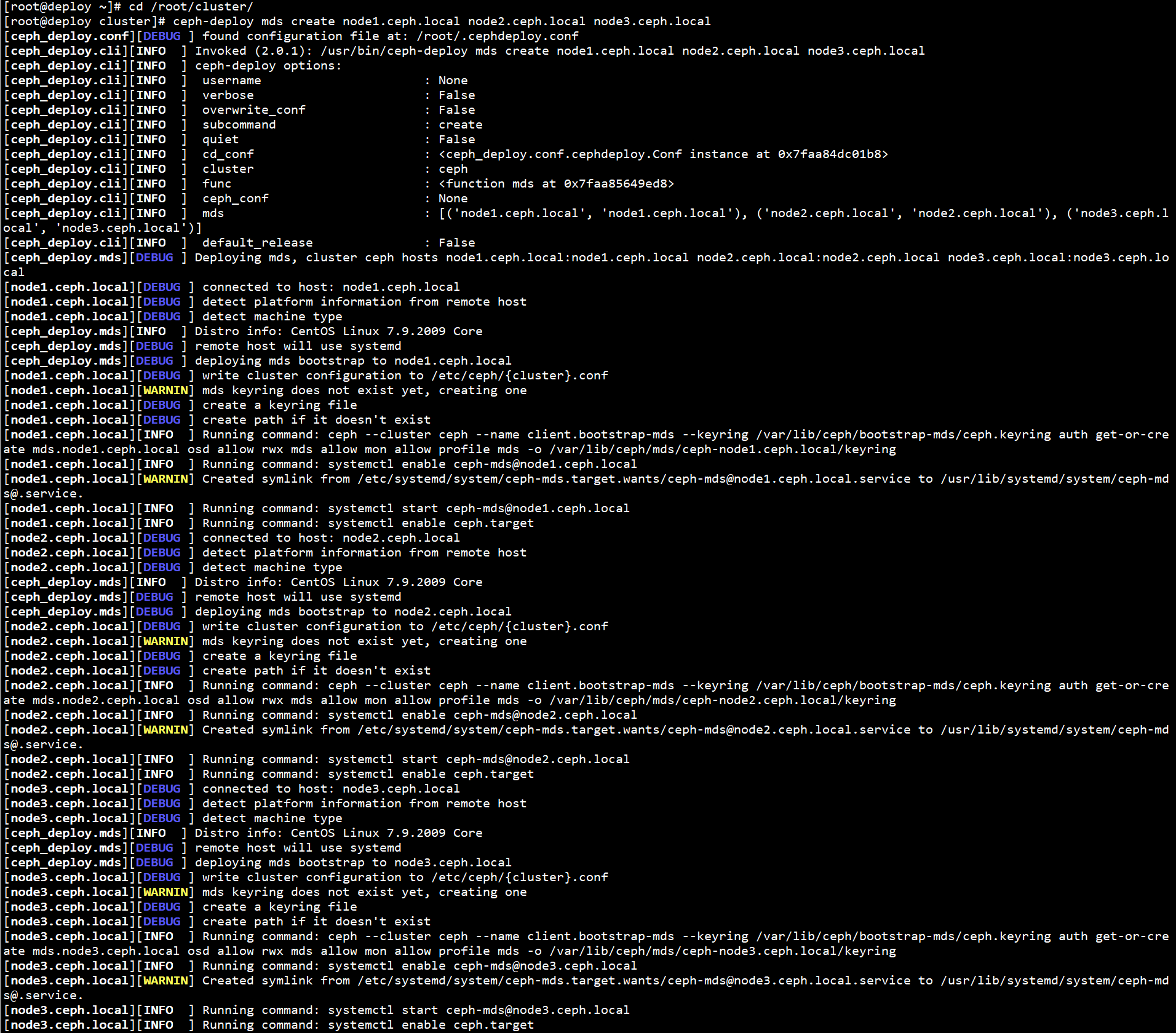

1. Initialize the MDS nodes

On the Deploy node, create MDS daemons on node1, node2, and node3:

cd /root/cluster/

ceph-deploy mds create node1.ceph.local node2.ceph.local node3.ceph.local

2. Create storage pools

Create the data pool and the metadata pool on the Deploy node, each with 128 placement groups:

ceph osd pool create cephfs_data 128

ceph osd pool create cephfs_metadata 128
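The pools above use 128 placement groups (PGs) each. For releases without the PG autoscaler, the Ceph documentation gave a rule of thumb for the cluster-wide PG total: (number of OSDs × 100) / replica count, rounded up to the next power of two. The Python sketch below only does that arithmetic; `suggested_pg_count` is a hypothetical helper, and the 3-OSD, 3-replica example is an assumption because this guide does not state the OSD count or replication size.

```python
import math


def suggested_pg_count(num_osds: int, replicas: int, target_per_osd: int = 100) -> int:
    """Rule-of-thumb cluster-wide PG total, rounded up to a power of two."""
    if num_osds <= 0 or replicas <= 0:
        raise ValueError("num_osds and replicas must be positive")
    raw = num_osds * target_per_osd / replicas
    # Smallest power of two >= raw (and at least 1).
    return 1 << max(0, math.ceil(math.log2(raw)))


if __name__ == "__main__":
    # Assumed example: 3 OSDs with 3-way replication -> 100 -> 128
    print(suggested_pg_count(3, 3))
```

The result is a total to be shared among all pools, so it is a starting point for sizing rather than a per-pool value.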

3. Create file system

Create a file system on the Deploy node:

ceph fs new cephfs cephfs_metadata cephfs_data

4. View file system

View the file system on the Deploy node:

ceph fs ls
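Like most ceph commands, `ceph fs ls` also accepts `--format json`, which is easier to consume from a script than the plain-text listing. The Python sketch below assumes the JSON is a list of objects with `name`, `metadata_pool`, and `data_pools` keys; the sample string is illustrative, not captured from this cluster, so compare it with the real output of your release first.

```python
import json

# Illustrative output of `ceph fs ls --format json`; field names are assumed.
SAMPLE = ('[{"name": "cephfs", "metadata_pool": "cephfs_metadata", '
          '"data_pools": ["cephfs_data"]}]')


def summarize_filesystems(raw: str) -> dict:
    """Map each file system name to (metadata pool, list of data pools)."""
    return {fs["name"]: (fs["metadata_pool"], list(fs["data_pools"]))
            for fs in json.loads(raw)}


if __name__ == "__main__":
    for name, (meta, data) in summarize_filesystems(SAMPLE).items():
        print(f"{name}: metadata={meta}, data={','.join(data)}")
```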

5. View MDS status

View the MDS status on the Deploy node:

ceph mds stat

2. Using the Ceph file system

2.1 Mode 1: kernel driver mode

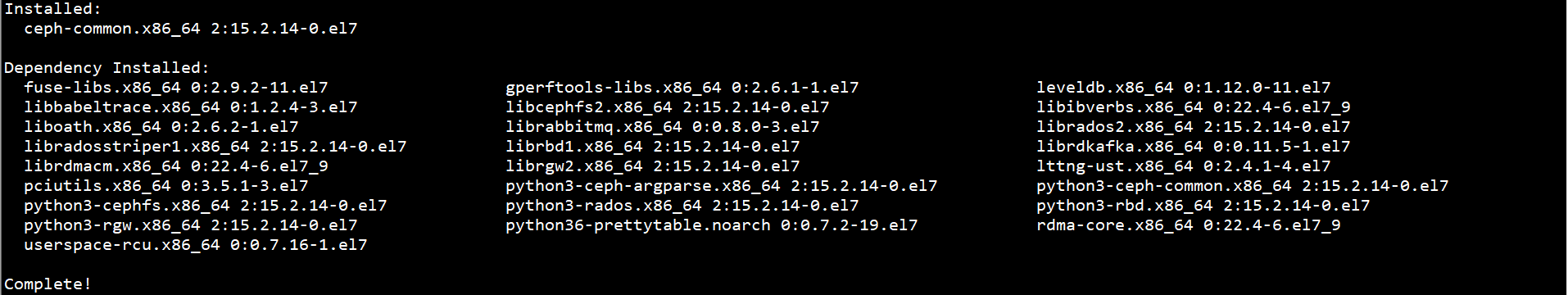

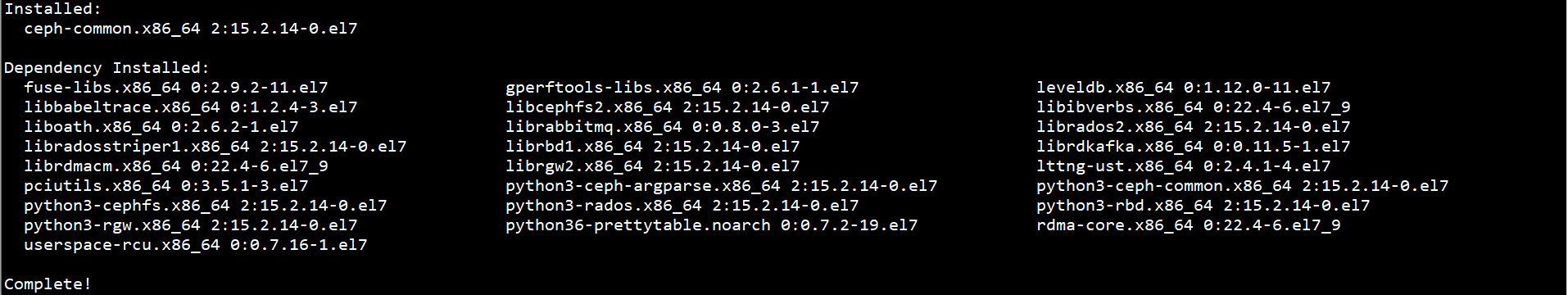

1. Install ceph-common

Install ceph-common on the Client node:

yum -y install ceph-common

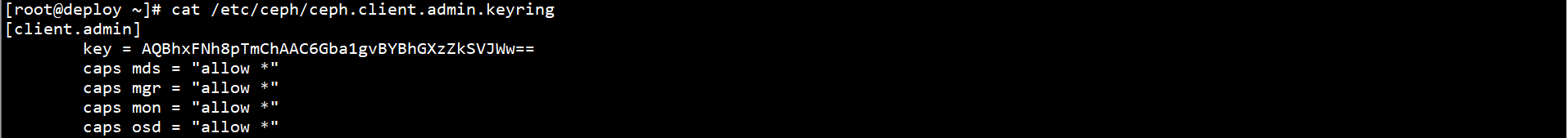

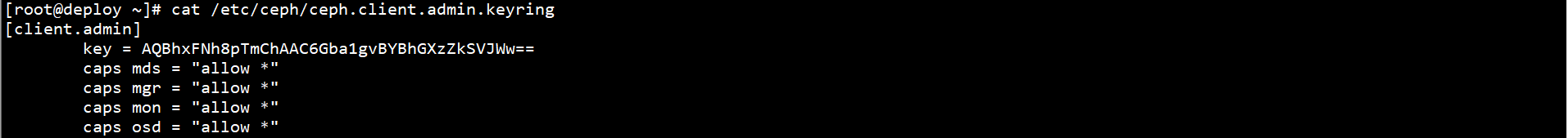

2. View the key

View the admin key on the Deploy node:

cat /etc/ceph/ceph.client.admin.keyring
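The mount commands below need only the value of the `key` line, not the whole keyring. (`ceph auth get-key client.admin` prints that value directly.) If you want to extract it in a script, here is a minimal Python sketch. It assumes the usual INI-like keyring layout of a `[client.admin]` section with a `key = ...` line; `read_keyring_key` is a hypothetical helper, and the sample keyring only mimics a typical admin keyring.

```python
SAMPLE_KEYRING = """\
[client.admin]
\tkey = AQBhxFNh8pTmChAAC6Gba1gvBYBhGXzZkSVJWw==
\tcaps mds = "allow *"
\tcaps mon = "allow *"
\tcaps osd = "allow *"
"""


def read_keyring_key(text: str, entity: str = "client.admin") -> str:
    """Return the `key = ...` value from the [entity] section of a keyring."""
    section = None
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            section = stripped[1:-1].strip()
        elif section == entity and "=" in stripped:
            # Split on the first "=" only: base64 keys end with "=" padding.
            name, value = stripped.split("=", 1)
            if name.strip() == "key":
                return value.strip()
    raise KeyError(f"no key for {entity} in keyring")


if __name__ == "__main__":
    print(read_keyring_key(SAMPLE_KEYRING))
```

On the Deploy node, pass it the contents of /etc/ceph/ceph.client.admin.keyring instead of the sample.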

3. Mount

Mount the file system on the Client node (192.168.0.10 is the monitor address):

Kernel driver mode with the secret key passed on the command line (it stays visible in the shell history); the mount does not persist across reboots.

mkdir /mycephfs/

mount -t ceph 192.168.0.10:/ /mycephfs -o name=admin,secret=AQBhxFNh8pTmChAAC6Gba1gvBYBhGXzZkSVJWw==

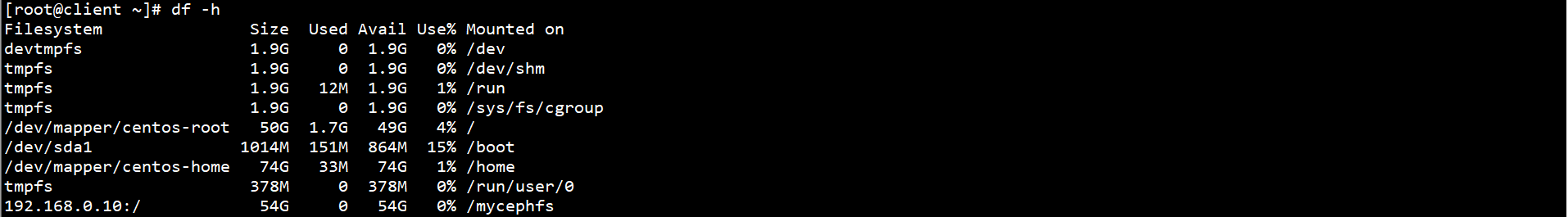

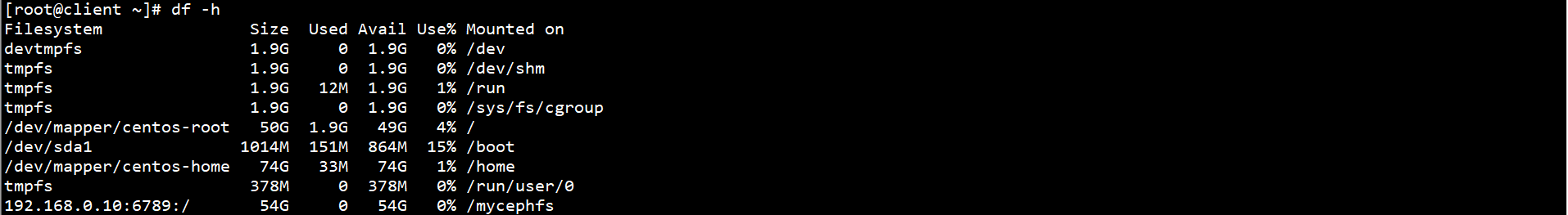

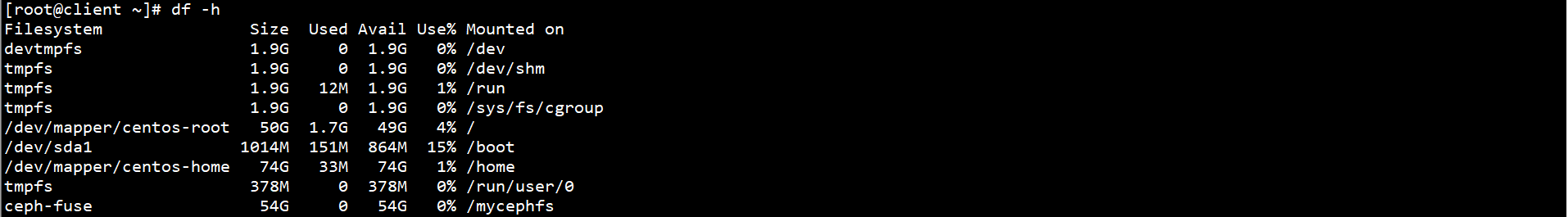

df -h

Alternatively:

1. Install ceph-common

Install ceph-common on the Client node:

yum -y install ceph-common

2. View the key

View the admin key on the Deploy node:

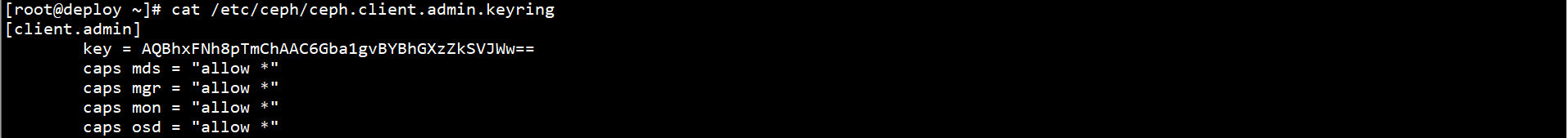

cat /etc/ceph/ceph.client.admin.keyring

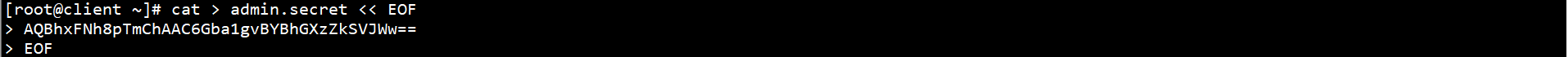

3. Mount

Mount the file system on the Client node:

Kernel driver mode with the secret key stored in a file; the mount does not persist across reboots.

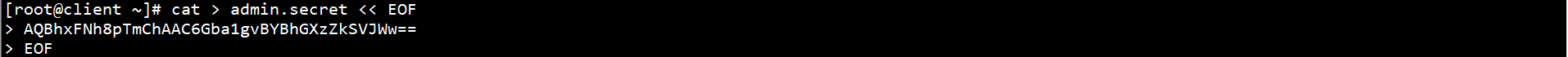

cat > /root/admin.secret << EOF
AQBhxFNh8pTmChAAC6Gba1gvBYBhGXzZkSVJWw==
EOF
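A file created with `cat >` gets the shell's default umask permissions, which are often world-readable (0644). Running `chmod 600` on the file afterwards is the simplest fix. The Python sketch below is an illustrative alternative that creates the file with owner-only permissions from the start. `write_secret_file` is a hypothetical helper and is not part of the original procedure.

```python
import os


def write_secret_file(path: str, secret: str) -> None:
    """Write a Ceph secret key to path with owner-only (0600) permissions."""
    # Passing 0o600 to os.open means a newly created file is never
    # world-readable; fchmod also tightens a file that already existed.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        os.fchmod(f.fileno(), 0o600)
        f.write(secret.strip() + "\n")
```

Example: `write_secret_file("/root/admin.secret", key)`, where `key` is the value read from the admin keyring.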

mkdir /mycephfs/

mount -t ceph 192.168.0.10:/ /mycephfs -o name=admin,secretfile=/root/admin.secret

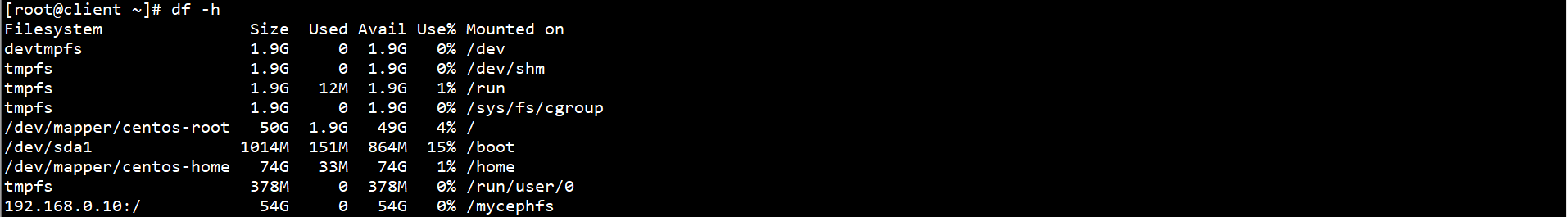

df -h

Alternatively:

1. Install ceph-common

Install ceph-common on the Client node:

yum -y install ceph-common

2. View the key

View the admin key on the Deploy node:

cat /etc/ceph/ceph.client.admin.keyring

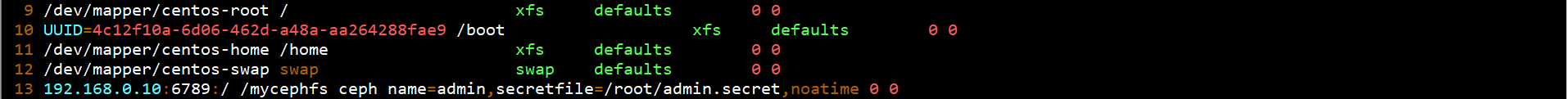

3. Mount

Mount the file system on the Client node:

Kernel driver mode with the secret key stored in a file and an /etc/fstab entry; the mount persists across reboots.

cat > /root/admin.secret << EOF
AQBhxFNh8pTmChAAC6Gba1gvBYBhGXzZkSVJWw==
EOF

mkdir /mycephfs/

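vim /etc/fstab

Add the following line; the _netdev option delays the mount until the network is available:

192.168.0.10:6789:/ /mycephfs ceph name=admin,secretfile=/root/admin.secret,noatime,_netdev 0 0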

mount -a

df -h
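A malformed /etc/fstab line makes `mount -a`, and possibly the next boot, fail. Here is a small Python sketch that assembles an entry from its six whitespace-separated fields and rejects any field that contains whitespace. `fstab_line` is a hypothetical helper. The `_netdev` option in the example follows the fstab examples in the Ceph documentation and is not part of this guide's original entry.

```python
from typing import List


def fstab_line(spec: str, mountpoint: str, fstype: str, options: List[str],
               dump: int = 0, passno: int = 0) -> str:
    """Assemble one /etc/fstab entry from its six whitespace-separated fields."""
    if not options:
        raise ValueError("at least one mount option is required (e.g. 'defaults')")
    for field in (spec, mountpoint, fstype, *options):
        # fstab separates fields with whitespace, so no field may contain any.
        if not field or any(ch.isspace() for ch in field):
            raise ValueError(f"invalid fstab field: {field!r}")
    return f"{spec} {mountpoint} {fstype} {','.join(options)} {dump} {passno}"


if __name__ == "__main__":
    # Kernel client entry from this section, with _netdev added.
    print(fstab_line("192.168.0.10:6789:/", "/mycephfs", "ceph",
                     ["name=admin", "secretfile=/root/admin.secret", "noatime", "_netdev"]))
    # Old-style ceph-fuse entry, as used in mode 2 below.
    print(fstab_line("id=admin,conf=/etc/ceph/ceph.conf", "/mycephfs", "fuse.ceph",
                     ["defaults", "_netdev"]))
```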

2.2 Mode 2: user-space file system (FUSE) mode

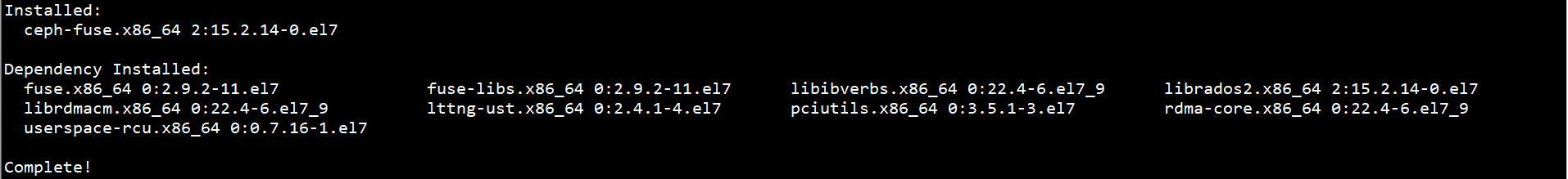

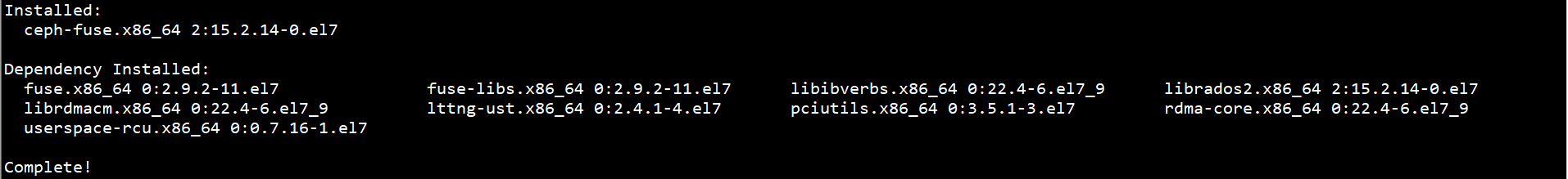

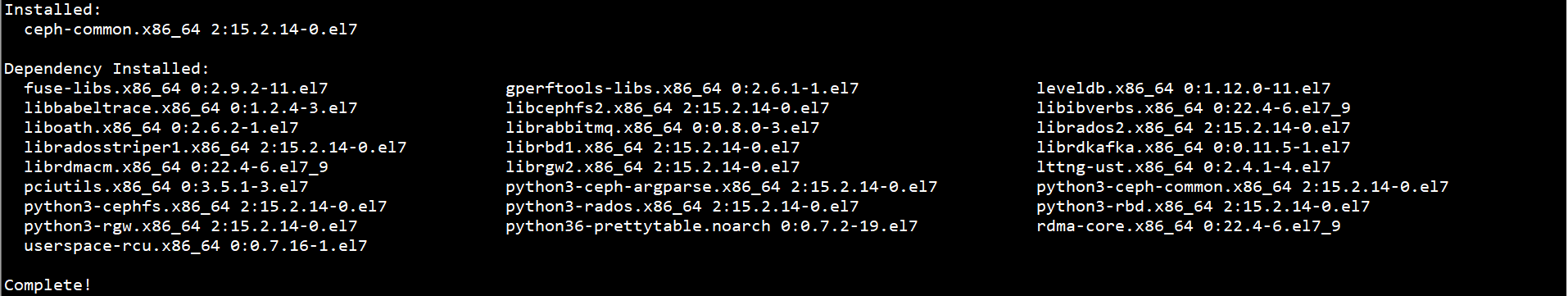

1. Install ceph-fuse

Install ceph-fuse on the Client node:

yum -y install ceph-fuse

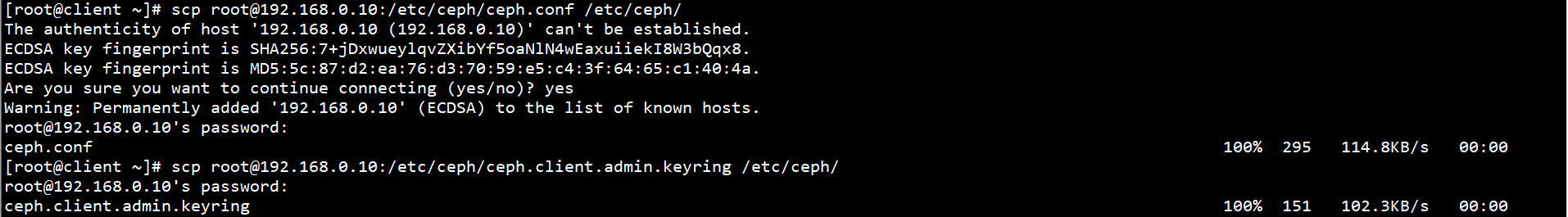

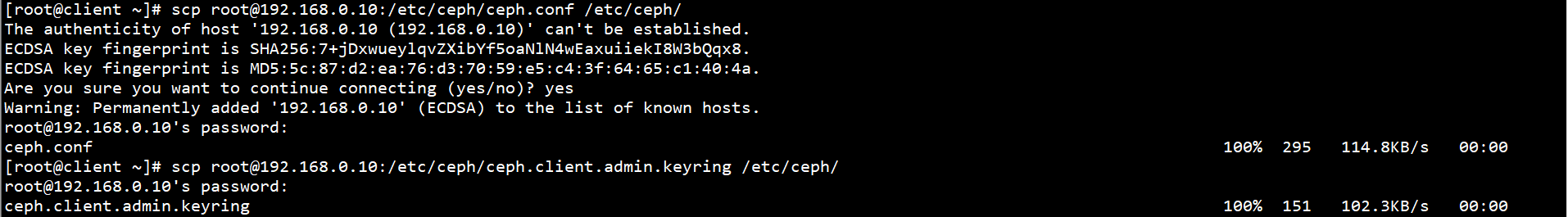

2. Copy the keyring and configuration files

On the Client node, copy the configuration file and the admin keyring from the Deploy node:

scp root@192.168.0.10:/etc/ceph/ceph.conf /etc/ceph/

scp root@192.168.0.10:/etc/ceph/ceph.client.admin.keyring /etc/ceph/

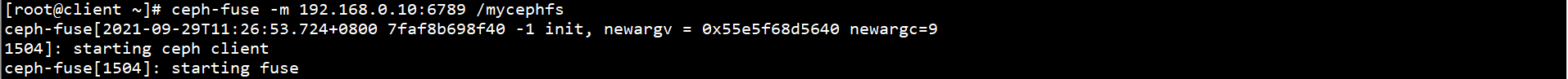

3. Mount

Mount the file system on the Client node:

FUSE mode; the mount does not persist across reboots.

mkdir /mycephfs

ceph-fuse -m 192.168.0.10:6789 /mycephfs

df -h

Alternatively:

1. Install ceph-fuse

Install ceph-fuse on the Client node:

yum -y install ceph-fuse

2. Copy the keyring and configuration files

On the Client node, copy the configuration file and the admin keyring from the Deploy node:

scp root@192.168.0.10:/etc/ceph/ceph.conf /etc/ceph/

scp root@192.168.0.10:/etc/ceph/ceph.client.admin.keyring /etc/ceph/

3. Mount

Mount the file system on the Client node:

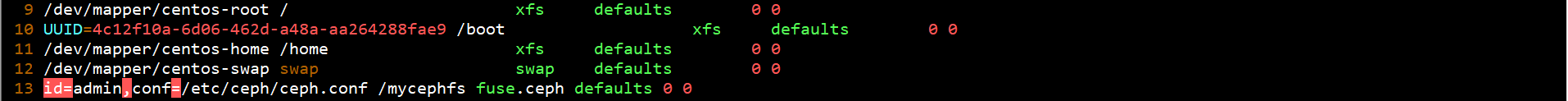

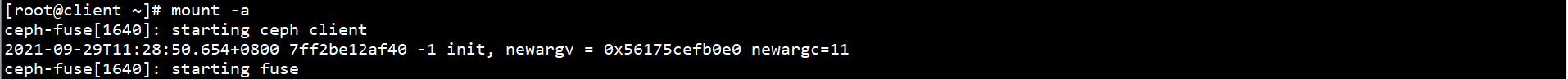

FUSE mode with an /etc/fstab entry; the mount persists across reboots.

mkdir /mycephfs

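vim /etc/fstab

Add the following line; the _netdev option delays the mount until the network is available:

id=admin,conf=/etc/ceph/ceph.conf /mycephfs fuse.ceph defaults,_netdev 0 0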

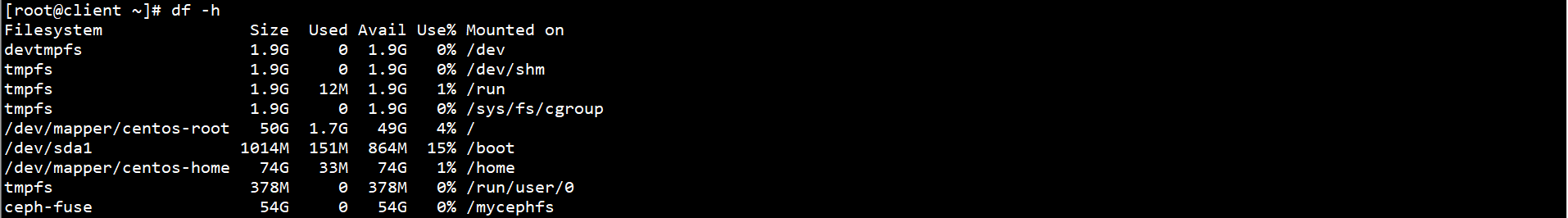

mount -a

df -h
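`df -h` shows the mount to a person. A script can check /proc/mounts directly instead. Here is a minimal Python sketch that picks out CephFS mounts from that text. It assumes that kernel-client mounts report the filesystem type `ceph` and that ceph-fuse mounts report a type starting with `fuse.ceph` (typically `fuse.ceph-fuse`). `ceph_mounts` is a hypothetical helper, and the sample lines are illustrative, not captured from this cluster.

```python
from typing import List, Tuple


def ceph_mounts(proc_mounts: str) -> List[Tuple[str, str, str]]:
    """Return (source, mountpoint, fstype) for each CephFS mount in /proc/mounts text."""
    found = []
    for line in proc_mounts.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        source, mountpoint, fstype = fields[:3]
        # Assumed type names: "ceph" (kernel) and "fuse.ceph*" (ceph-fuse).
        if fstype == "ceph" or fstype.startswith("fuse.ceph"):
            found.append((source, mountpoint, fstype))
    return found


# Illustrative /proc/mounts lines only.
SAMPLE = """\
192.168.0.10:6789:/ /mycephfs ceph rw,noatime,name=admin,acl 0 0
ceph-fuse /mnt/fuse fuse.ceph-fuse rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other 0 0
/dev/sda1 /boot xfs rw,relatime 0 0
"""

if __name__ == "__main__":
    print(ceph_mounts(SAMPLE))
```

On the Client node, pass it the contents of /proc/mounts, for example `ceph_mounts(open("/proc/mounts").read())`.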

4. Ceph block device

1. Create Ceph block device

1. Create storage pool

Create a storage pool on the Deploy node:

ceph osd pool create cephpool 128

2. Create block device image

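Create a 1 GiB block device image on the Deploy node (--size is given in megabytes). Only the layering feature is enabled, because older kernel RBD clients cannot map images that use the newer default features: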

rbd create --size 1024 cephpool/cephimage --image-feature layering

3. List images

List the images in the pool on the Deploy node:

rbd list cephpool

4. View image information

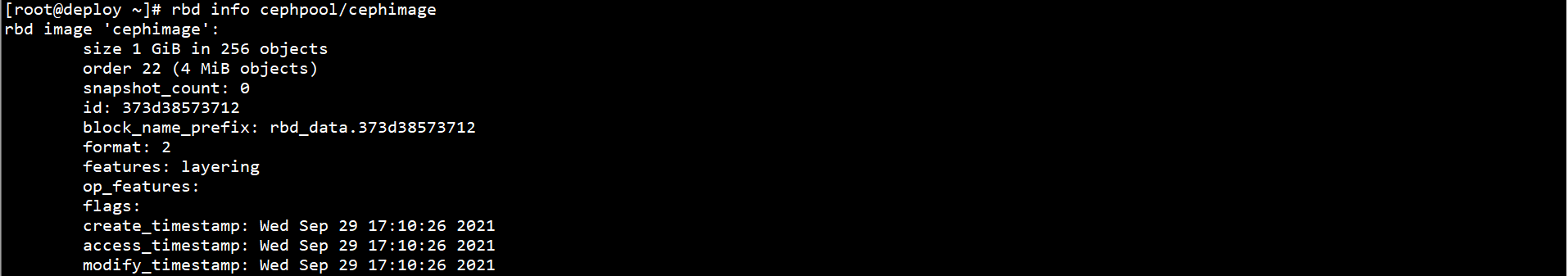

View image information on the Deploy node:

rbd info cephpool/cephimage
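`rbd info` reports the image size, the number of RADOS objects the image spans, and the `order`, where the object size is 2^order bytes. The default is 4 MiB objects, which is order 22. The Python sketch below reproduces that arithmetic for the 1024 MB image created above. The helper names are hypothetical. Objects are thin-provisioned, so the count is how many objects the image can use, not how much space it consumes right after creation.

```python
MIB = 1024 * 1024


def rbd_object_count(size_mib: int, object_size_mib: int = 4) -> int:
    """Objects needed to cover an image of size_mib (ceiling division)."""
    if size_mib <= 0 or object_size_mib <= 0:
        raise ValueError("sizes must be positive")
    return -(-size_mib // object_size_mib)


def rbd_order(object_size_bytes: int) -> int:
    """RBD 'order' is log2 of the object size in bytes (a power of two)."""
    if object_size_bytes <= 0 or object_size_bytes & (object_size_bytes - 1):
        raise ValueError("object size must be a positive power of two")
    return object_size_bytes.bit_length() - 1


if __name__ == "__main__":
    # --size 1024 (MiB) with the default 4 MiB objects
    print(rbd_object_count(1024), rbd_order(4 * MIB))
```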

2. Using Ceph block devices

1. Install ceph-common

Install ceph-common on the Client node:

yum -y install ceph-common

2. Copy the keyring and configuration files

On the Client node, copy the configuration file and the admin keyring from the Deploy node:

scp root@192.168.0.10:/etc/ceph/ceph.conf /etc/ceph/

scp root@192.168.0.10:/etc/ceph/ceph.client.admin.keyring /etc/ceph/

3. Map the block device

Map the image to a local block device on the Client node:

rbd map cephpool/cephimage --id admin

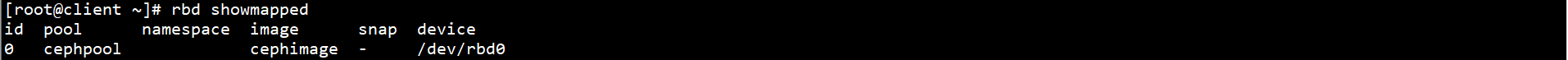

4. View the block device

View the mapped block devices on the Client node:

rbd showmapped

ll /dev/rbd/cephpool

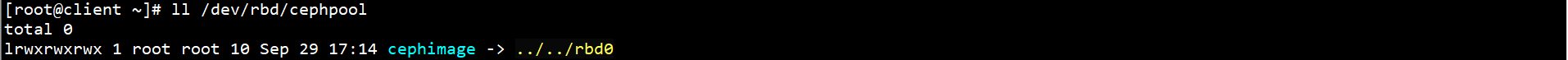

5. Format the block device

Format the block device on the Client node:

mkfs.xfs /dev/rbd0

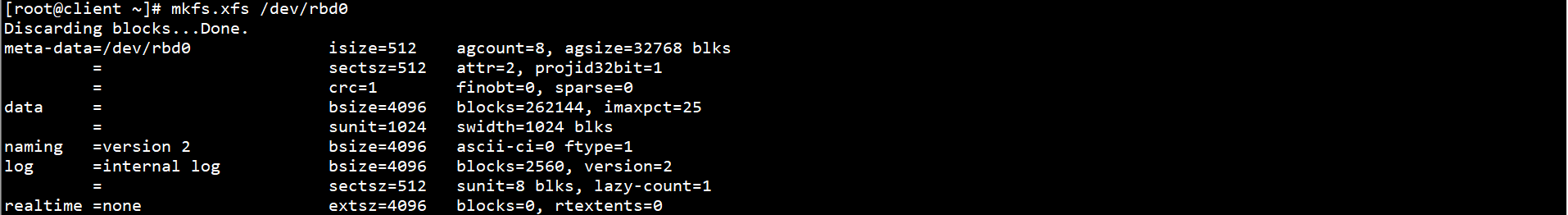

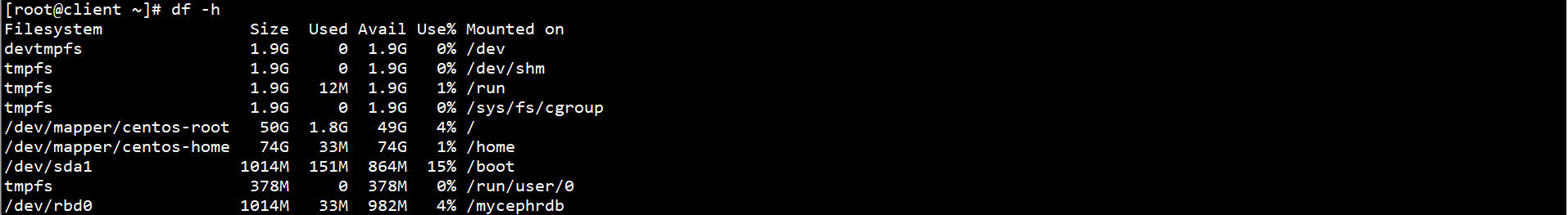

6. Mount the block device

Mount the block device on the Client node:

mkdir /mycephrdb

mount /dev/rbd0 /mycephrdb

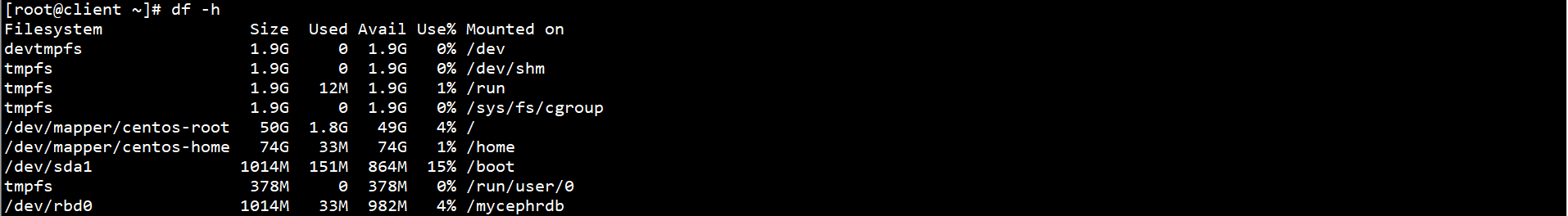

df -h

Alternatively:

mkdir /mycephrdb

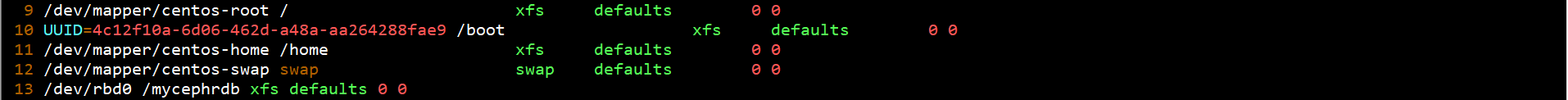

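vim /etc/fstab

Add the following line:

/dev/rbd0 /mycephrdb xfs defaults 0 0

Note that /dev/rbd0 exists only while the image is mapped. After a reboot, the image must be mapped again (for example by the rbdmap service) before this entry can be mounted; otherwise the mount fails.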

mount -a

df -h
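To map the image automatically at boot, Ceph ships an rbdmap service (enable it with `systemctl enable rbdmap`). At startup it maps the images listed in /etc/ceph/rbdmap. The Python sketch below renders one line for that file and a matching fstab line. The fstab line uses the stable udev path /dev/rbd/<pool>/<image>, which this guide already lists with `ll /dev/rbd/cephpool`. `rbdmap_entries` is a hypothetical helper. The `id=...,keyring=...` options and the `noauto` fstab option follow the rbdmap man page as I understand it, so check them against your release.

```python
from typing import Tuple


def rbdmap_entries(pool: str, image: str, mountpoint: str, fstype: str = "xfs",
                   user: str = "admin",
                   keyring: str = "/etc/ceph/ceph.client.admin.keyring") -> Tuple[str, str]:
    """Return (rbdmap line, fstab line) for mapping and mounting one image at boot."""
    # /etc/ceph/rbdmap format: <pool>/<image> <map options>
    rbdmap_line = f"{pool}/{image} id={user},keyring={keyring}"
    # The udev symlink names the image itself, unlike /dev/rbd0,
    # whose number depends on the order in which images are mapped.
    device = f"/dev/rbd/{pool}/{image}"
    # noauto: leave mounting to the rbdmap service after mapping (assumed setup).
    fstab_line = f"{device} {mountpoint} {fstype} noauto 0 0"
    return rbdmap_line, fstab_line


if __name__ == "__main__":
    for line in rbdmap_entries("cephpool", "cephimage", "/mycephrdb"):
        print(line)
```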

5. Ceph object storage

1. Create Ceph object store

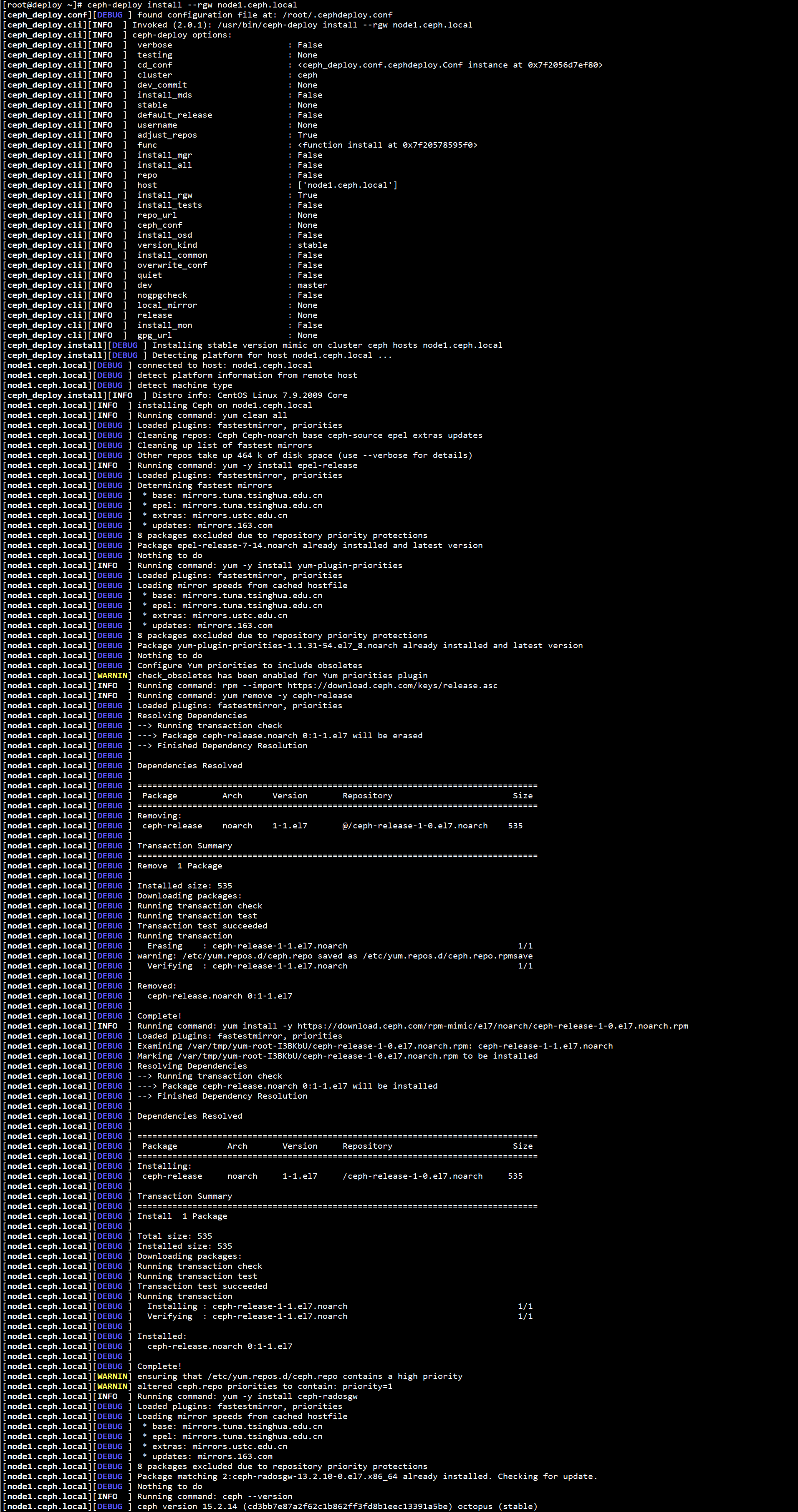

1. Install the RGW packages

On the Deploy node, install the RGW packages on each gateway node:

ceph-deploy install --rgw node1.ceph.local

ceph-deploy install --rgw node2.ceph.local

ceph-deploy install --rgw node3.ceph.local

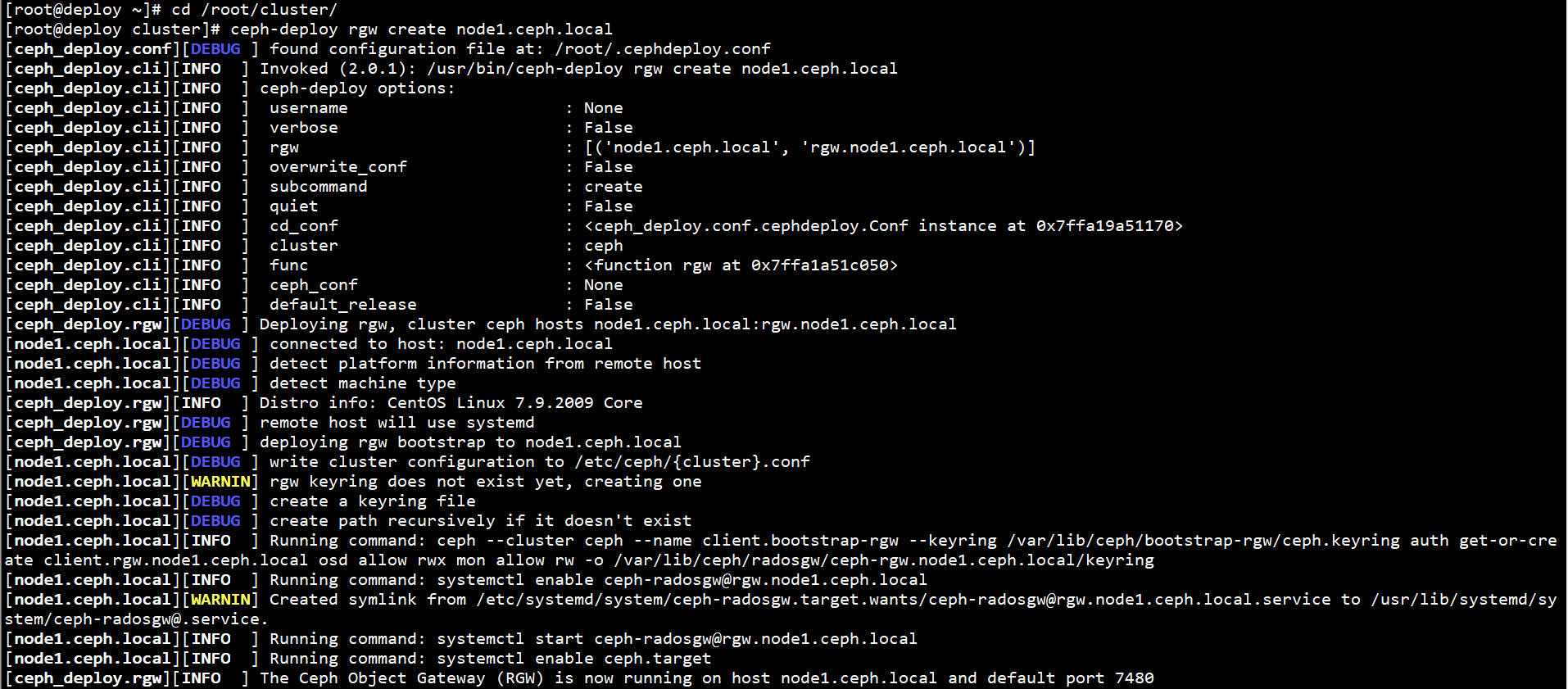

2. Initialize the RGW nodes

On the Deploy node, create an RGW instance on each gateway node:

cd /root/cluster/

ceph-deploy rgw create node1.ceph.local

ceph-deploy rgw create node2.ceph.local

ceph-deploy rgw create node3.ceph.local

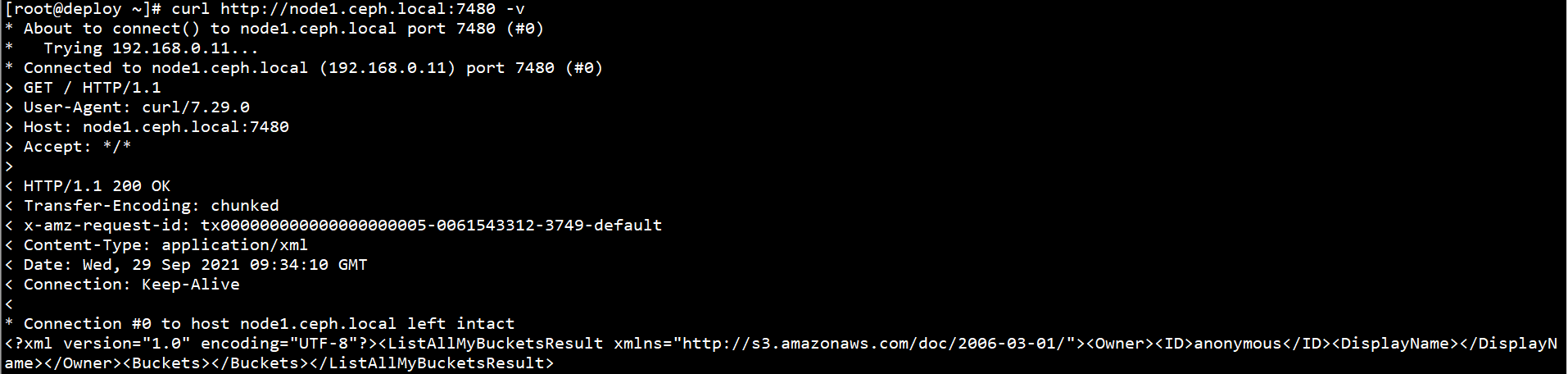

3. Check the service

From the Deploy node, check that the gateway responds (RGW listens on port 7480 by default):

curl http://node1.ceph.local:7480 -v
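When the gateway is up, an anonymous request to the endpoint returns an S3 `ListAllMyBucketsResult` XML document. Here is a stdlib-only Python sketch that parses that body to check the service from a script. `parse_list_buckets` is a hypothetical helper, and the sample string mirrors the typical anonymous response but is illustrative, not captured from this cluster.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple, Union

S3_NS = "{http://s3.amazonaws.com/doc/2006-03-01/}"


def parse_list_buckets(body: Union[str, bytes]) -> Tuple[str, List[str]]:
    """Return (owner ID, bucket names) from a ListAllMyBucketsResult document."""
    if isinstance(body, str):
        body = body.encode("utf-8")  # let the XML declaration govern decoding
    root = ET.fromstring(body)
    if root.tag != S3_NS + "ListAllMyBucketsResult":
        raise ValueError(f"unexpected root element: {root.tag}")
    owner = root.findtext(f"{S3_NS}Owner/{S3_NS}ID", default="")
    names = [b.findtext(f"{S3_NS}Name", default="")
             for b in root.iter(f"{S3_NS}Bucket")]
    return owner, names


ANON = ('<?xml version="1.0" encoding="UTF-8"?>'
        '<ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">'
        '<Owner><ID>anonymous</ID><DisplayName></DisplayName></Owner>'
        '<Buckets></Buckets></ListAllMyBucketsResult>')

if __name__ == "__main__":
    print(parse_list_buckets(ANON))
```

Against the live gateway, the body can be fetched with `urllib.request.urlopen("http://node1.ceph.local:7480").read()`.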

2. Using Ceph object storage

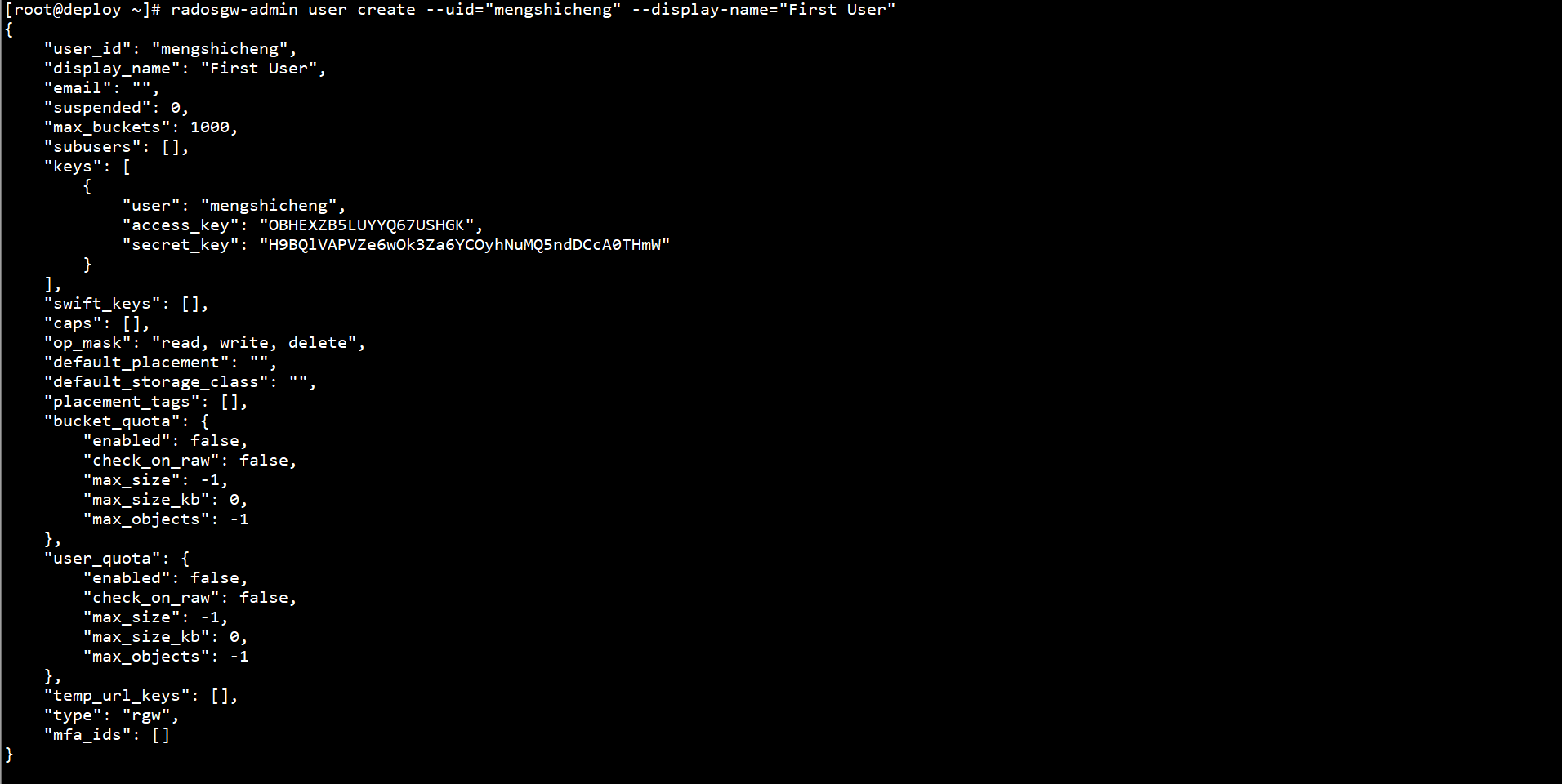

1. Create S3 user

Create an S3 user on the Deploy node:

radosgw-admin user create --uid="mengshicheng" --display-name="First User"

Record the access key and secret key from the output:

"access_key": "OBHEXZB5LUYYQ67USHGK", "secret_key": "H9BQlVAPVZe6wOk3Za6YCOyhNuMQ5ndDCcA0THmW"

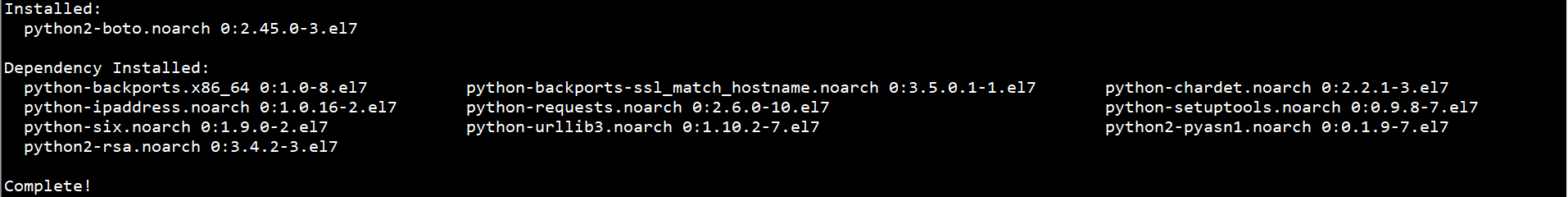
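Copying keys by hand from the `radosgw-admin` output is error-prone. The command prints JSON with a `keys` list of `user`, `access_key`, and `secret_key` entries, and `radosgw-admin user info --uid=mengshicheng` prints the same information again later. Here is a minimal Python sketch that pulls the pair out of that JSON. `s3_keys` is a hypothetical helper, and the sample is a trimmed illustration built from the keys shown above, not the full command output.

```python
import json
from typing import Tuple


def s3_keys(user_json: str, uid: str) -> Tuple[str, str]:
    """Return (access_key, secret_key) for uid from radosgw-admin user JSON."""
    info = json.loads(user_json)
    for entry in info.get("keys", []):
        if entry.get("user") == uid:
            return entry["access_key"], entry["secret_key"]
    raise KeyError(f"no S3 key for user {uid!r}")


# Trimmed illustration of the JSON printed by `radosgw-admin user create`.
SAMPLE = json.dumps({
    "user_id": "mengshicheng",
    "display_name": "First User",
    "keys": [{
        "user": "mengshicheng",
        "access_key": "OBHEXZB5LUYYQ67USHGK",
        "secret_key": "H9BQlVAPVZe6wOk3Za6YCOyhNuMQ5ndDCcA0THmW",
    }],
})

if __name__ == "__main__":
    print(s3_keys(SAMPLE, "mengshicheng"))
```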

2. Install S3 client

Install the S3 client library (python-boto) on the Client node:

yum -y install python-boto

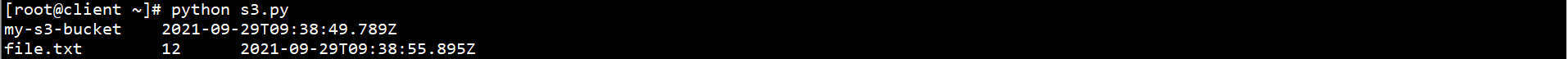

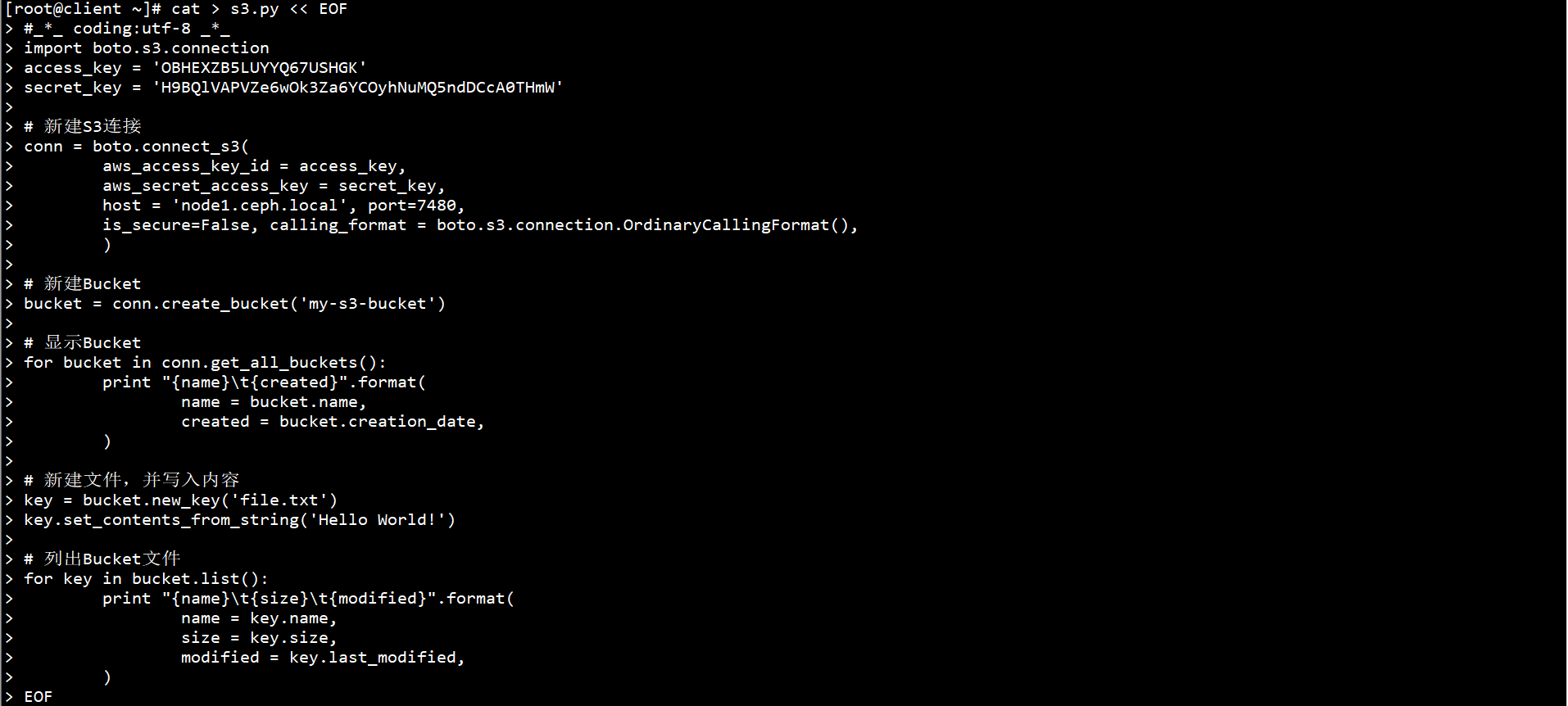

3. Test S3 interface

Test the S3 interface on the Client node:

cat > s3.py << 'EOF'
# -*- coding: utf-8 -*-
import boto
import boto.s3.connection

access_key = 'OBHEXZB5LUYYQ67USHGK'
secret_key = 'H9BQlVAPVZe6wOk3Za6YCOyhNuMQ5ndDCcA0THmW'

# Create an S3 connection to the RGW endpoint (plain HTTP on port 7480)
conn = boto.connect_s3(
    aws_access_key_id=access_key,
    aws_secret_access_key=secret_key,
    host='node1.ceph.local', port=7480,
    is_secure=False,
    calling_format=boto.s3.connection.OrdinaryCallingFormat(),
)

# Create a bucket
bucket = conn.create_bucket('my-s3-bucket')

# List all buckets (a separate loop variable keeps 'bucket' pointing at my-s3-bucket)
for b in conn.get_all_buckets():
    print("{name}\t{created}".format(
        name=b.name,
        created=b.creation_date,
    ))

# Create an object and write its contents
key = bucket.new_key('file.txt')
key.set_contents_from_string('Hello World!')

# List the objects in the bucket
for k in bucket.list():
    print("{name}\t{size}\t{modified}".format(
        name=k.name,
        size=k.size,
        modified=k.last_modified,
    ))
EOF

python s3.py