RPC (remote procedure call) can work across platforms. It generally uses the HTTP protocol, which runs on sockets at the bottom layer, so any language that supports sockets can communicate.

Open platforms generally use HTTP because it is supported by more languages. Reprinted from: https://www.cnblogs.com/toov5/p/9990942.html

RMI, by contrast, supports only Java: Java programs use it for communication between one virtual machine and another.

Rate Limiting Solutions for High Concurrency

Why rate limit?

Service security: traffic attacks (DDoS) and the avalanche effect.

Rate limiting protects the service.

Rate limiting solutions for high concurrency include rate limiting algorithms (token bucket, leaky bucket, counter) and application-layer rate limiting (Nginx).

Rate Limiting Algorithms

The common rate limiting algorithms are the token bucket and the leaky bucket. A counter can also be used for coarse rate limiting.

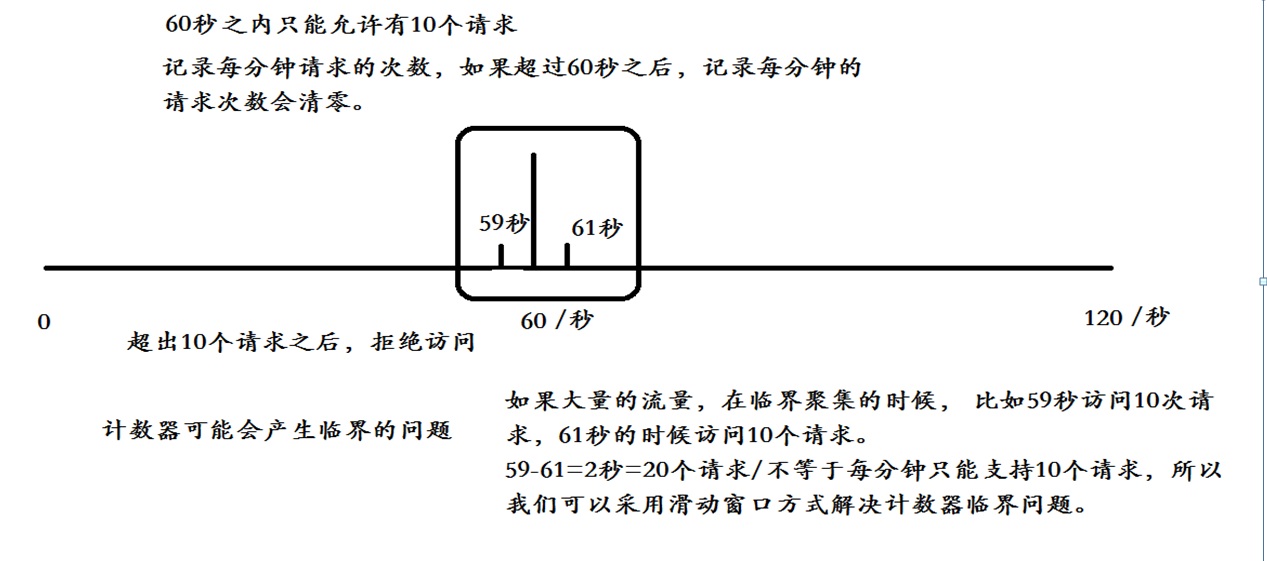

Counter

The counter is the simplest rate limiting algorithm and the easiest to implement. Suppose an interface must accept no more than 10 requests per minute. We start a counter at the first request and add 1 for each request. If the counter exceeds 10 and less than one minute has passed since the first request, the request is rejected. If more than one minute has passed since the first request, the counter is reset and a new window begins.

    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.atomic.AtomicInteger;

    public class LimitService {
        private int limitCount = 60; // maximum number of requests allowed per window
        AtomicInteger atomicInteger = new AtomicInteger(0); // requests counted in the current window
        private long start = System.currentTimeMillis(); // start time of the current window
        private int interval = 60 * 1000; // window length: 60 seconds, expressed in milliseconds

        // synchronized so that the window reset and the count are updated together
        public synchronized boolean acquire() {
            long newTime = System.currentTimeMillis();
            if (newTime > (start + interval)) {
                // The window has expired: start a new one and reset the counter
                start = newTime;
                atomicInteger.set(0);
            }
            // Count this request, then compare with the limit
            return atomicInteger.incrementAndGet() <= limitCount;
        }

        static LimitService limitService = new LimitService();

        public static void main(String[] args) {
            ExecutorService newCachedThreadPool = Executors.newCachedThreadPool();
            for (int i = 1; i < 100; i++) {
                final int tempI = i;
                newCachedThreadPool.execute(new Runnable() {
                    public void run() {
                        if (limitService.acquire()) {
                            System.out.println("Not rate limited, normal access logic i:" + tempI);
                        } else {
                            System.out.println("You've been rate limited. i:" + tempI);
                        }
                    }
                });
            }
            newCachedThreadPool.shutdown(); // let the JVM exit once all tasks finish
        }
    }

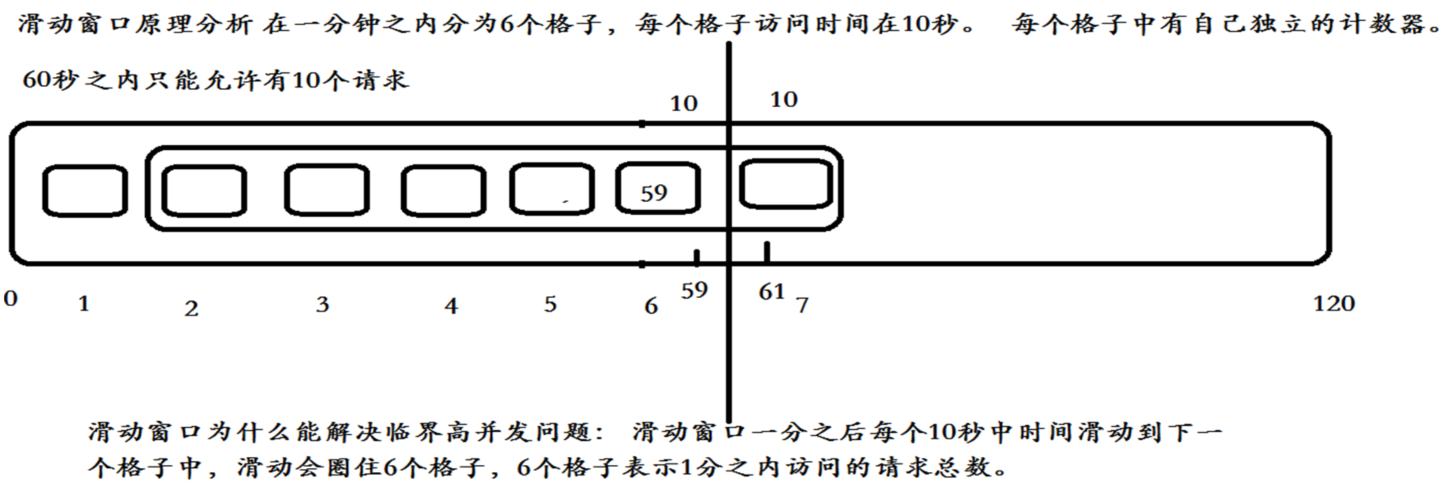

Sliding window counting

Sliding window counting has many uses, such as rate limiting to prevent system avalanches. Compared with a plain counter, a sliding window is smoother and automatically evens out spikes.

On each request, a sliding window checks whether the total number of requests in the previous N units of time exceeds the configured threshold, and adds 1 to the request count of the current time slice.

The weakness of the traditional counter is the boundary problem: a burst that straddles the boundary between two windows can let through up to twice the limit in a short time, which violates the fixed rate the limiter is supposed to enforce.

The window moves forward in steps, for example every 10 seconds.
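The sliding window described above can be sketched in Java roughly as follows. This is a minimal illustration, not code from the original article: the class name `SlidingWindowLimiter`, its constructor parameters, and the choice to pass the current time in as an argument (which keeps the example deterministic) are all assumptions. The window is split into time slices, and a request is admitted only if the total across the slices still inside the window is below the limit.

```java
import java.util.Arrays;

// Hypothetical sliding window counter; names and parameters are illustrative.
class SlidingWindowLimiter {
    private final int limit;        // max requests allowed across the whole window
    private final long sliceMillis; // length of one time slice
    private final int[] counts;     // request count per slice
    private final long[] sliceIds;  // absolute slice number held by each array cell

    SlidingWindowLimiter(int limit, int slices, long sliceMillis) {
        this.limit = limit;
        this.sliceMillis = sliceMillis;
        this.counts = new int[slices];
        this.sliceIds = new long[slices];
        Arrays.fill(sliceIds, -1L); // no slice recorded yet
    }

    synchronized boolean tryAcquire(long nowMillis) {
        long current = nowMillis / sliceMillis;
        int pos = (int) (current % counts.length);
        if (sliceIds[pos] != current) { // cell holds an expired slice: reuse it
            sliceIds[pos] = current;
            counts[pos] = 0;
        }
        int total = 0;
        for (int i = 0; i < counts.length; i++) {
            if (sliceIds[i] > current - counts.length) { // slice is still inside the window
                total += counts[i];
            }
        }
        if (total >= limit) {
            return false; // threshold reached: reject
        }
        counts[pos]++; // count this request on the current time slice
        return true;
    }
}
```

With `new SlidingWindowLimiter(10, 6, 10_000L)` the window covers 60 seconds and moves every 10 seconds. A burst of 10 requests at 59 s is admitted, but a request at 60 s is still rejected, whereas a fixed one-minute counter that started at 0 s would have reset and let it through.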

Token Bucket

Guava implements rate limiting with the token bucket algorithm.

The token bucket algorithm uses a bucket that holds a fixed maximum number of tokens, with tokens added to the bucket at a fixed rate. It works as follows:

Assuming a limit of 2 requests per second (2r/s), one token is added to the bucket every 500 milliseconds.

The bucket holds at most b tokens. When the bucket is full, newly added tokens are discarded.

When an n-byte packet arrives, n tokens are removed from the bucket, and then the packet is sent to the network.

If there are fewer than n tokens in the bucket, no tokens are removed and the packet is rate limited (either discarded or buffered to wait).
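The steps above can be sketched as a small Java class. This is only an illustration under stated assumptions, not Guava's implementation: the name `TokenBucket`, the decision to start with a full bucket, and the explicit `nowMs` time argument (used so the behaviour is reproducible) are all hypothetical choices.

```java
// Hypothetical token bucket; names and parameters are illustrative.
class TokenBucket {
    private final long capacity;         // b: maximum number of tokens in the bucket
    private final long refillIntervalMs; // one token is added every this many milliseconds
    private long tokens;                 // tokens currently in the bucket
    private long lastRefillMs;           // time up to which refills have been credited

    TokenBucket(long capacity, long refillIntervalMs, long nowMs) {
        this.capacity = capacity;
        this.refillIntervalMs = refillIntervalMs;
        this.tokens = capacity; // assumption: the bucket starts full
        this.lastRefillMs = nowMs;
    }

    // Tries to take n tokens; returns false (and removes nothing) if fewer than n are available.
    synchronized boolean tryAcquire(long n, long nowMs) {
        refill(nowMs);
        if (tokens < n) {
            return false;
        }
        tokens -= n;
        return true;
    }

    private void refill(long nowMs) {
        long newTokens = (nowMs - lastRefillMs) / refillIntervalMs;
        if (newTokens > 0) {
            tokens = Math.min(capacity, tokens + newTokens); // tokens beyond capacity are discarded
            lastRefillMs += newTokens * refillIntervalMs;    // keep the leftover time for the next refill
        }
    }
}
```

For the 2r/s case in the text, `new TokenBucket(2, 500, 0)` admits two requests at time 0, rejects a third, and admits one more at 500 ms when the next token arrives.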

Token Bucket Algorithm

The token bucket involves two actions:

Action 1: tokens are put into the bucket at a fixed rate.

Action 2: a client that wants to make a request first takes a token from the bucket.

A client can access the server only after it gets a token. This is the key point. If it cannot get a token, the request is degraded.

The bucket can also fill up. Once it is full, no more tokens are added: it has a fixed capacity and cannot hold an unlimited number.

One side puts tokens in, the other takes them out.

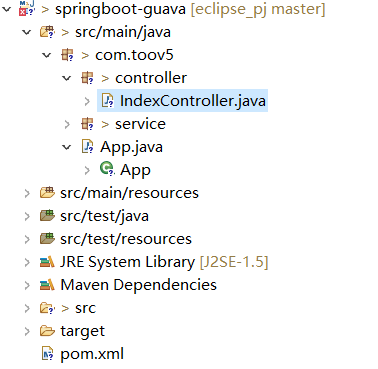

Using RateLimiter to Implement Token Bucket Rate Limiting

RateLimiter is a class provided by Guava that is based on the token bucket algorithm. It makes rate limiting very simple, and the token generation rate can be adjusted according to the actual state of the system.

Typical uses include: rate limiting a flash-sale (rush purchase) system so that it is not overwhelmed; limiting the number of calls to an interface or service per unit of time (for example, some third-party services restrict how often each user may call them); and limiting network speed, that is, how many bytes may be uploaded or downloaded per unit of time.

pom:

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>com.toov5</groupId>
        <artifactId>springboot-guava</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.0.0.RELEASE</version>
        </parent>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>com.google.guava</groupId>
                <artifactId>guava</artifactId>
                <version>25.1-jre</version>
            </dependency>
        </dependencies>
    </project>

service:

    package com.toov5.service;

    import org.springframework.stereotype.Service;

    @Service
    public class OrderService {
        public boolean addOrder() {
            System.out.println("db...Operating order form database");
            return true;
        }
    }

controller:

    package com.toov5.controller;

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;
    import com.google.common.util.concurrent.RateLimiter;
    import com.toov5.service.OrderService;

    @RestController
    public class IndexController {
        @Autowired
        private OrderService orderService;

        // create(1) sets a constant rate of 1 token per second (1r/s),
        // so this interface accepts about one client request per second.
        // The limiter is thread-safe and shared by all request threads.
        RateLimiter rateLimiter = RateLimiter.create(1);

        @RequestMapping("/addOrder")
        public String addOrder() {
            // Rate limiting is usually placed at the gateway. Here the client takes a token from the bucket.
            // acquire() waits until a token is available and returns the time spent waiting, in seconds.
            double acquire = rateLimiter.acquire();
            System.out.println("Time spent waiting for a token from the bucket: " + acquire);
            // Business logic
            boolean addOrderResult = orderService.addOrder();
            if (addOrderResult) {
                return "Congratulations, purchase succeeded!";
            }
            return "Purchase failed!";
        }
    }

Start:

    package com.toov5;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class App {
        public static void main(String[] args) {
            SpringApplication.run(App.class, args);
        }
    }

Run the application and access the endpoint:

When you click rapidly, each request has to wait for a token to be put into the bucket. If there are not enough tokens, the request waits.

Waiting indefinitely is not a good approach, so we set up service degradation:

if no token is obtained within the specified time, the request is degraded.

Modify the controller as follows:

    package com.toov5.controller;

    import java.util.concurrent.TimeUnit;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;
    import com.google.common.util.concurrent.RateLimiter;
    import com.toov5.service.OrderService;

    @RestController
    public class IndexController {
        @Autowired
        private OrderService orderService;

        // create(1) sets a constant rate of 1 token per second (1r/s),
        // so this interface accepts about one client request per second.
        // The limiter is thread-safe and shared by all request threads.
        RateLimiter rateLimiter = RateLimiter.create(1);

        @RequestMapping("/addOrder")
        public String addOrder() {
            // Rate limiting is usually placed at the gateway. Here the client tries to take a token from the bucket.
            // tryAcquire replaces the blocking acquire(): calling both would consume two tokens per request.
            // If no token can be obtained within 500 ms, degrade immediately instead of waiting.
            boolean tryAcquire = rateLimiter.tryAcquire(500, TimeUnit.MILLISECONDS);
            if (!tryAcquire) {
                System.out.println("Stop rushing, please wait!");
                return "Stop rushing, please wait!";
            }
            // Business logic
            boolean addOrderResult = orderService.addOrder();
            if (addOrderResult) {
                System.out.println("Congratulations, purchase succeeded!");
                return "Congratulations, purchase succeeded!";
            }
            return "Purchase failed!";
        }
    }

Results:

Once a token is acquired, it is removed from the bucket.

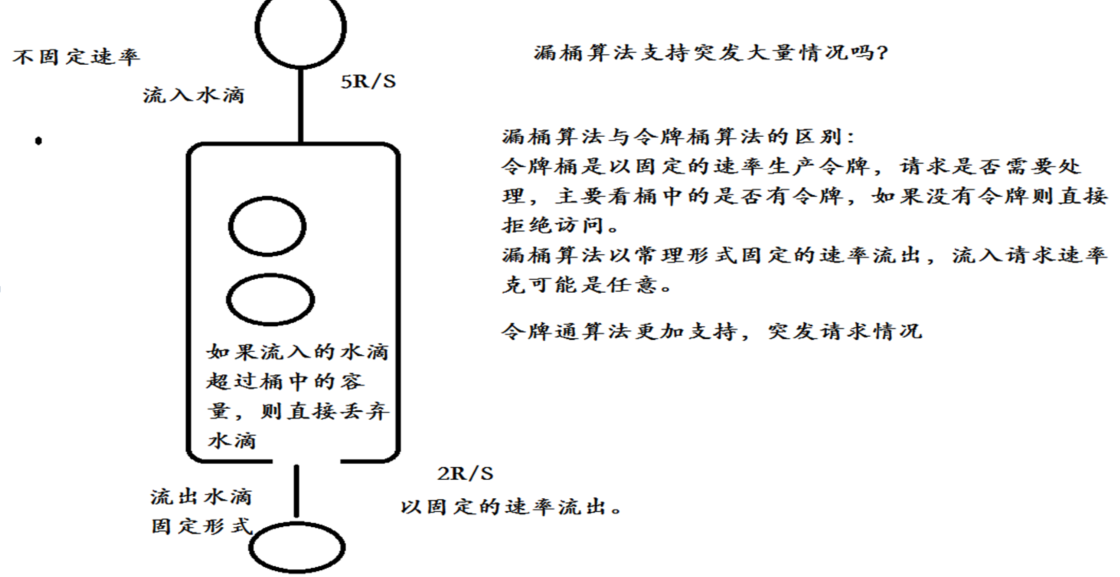

Leaky Bucket Algorithm

The leaky bucket algorithm, used as a meter, can be applied to traffic shaping and traffic policing. It works as follows:

A leaky bucket with a fixed capacity lets water droplets flow out at a constant rate.

If the bucket is empty, no droplets flow out.

Water droplets can flow into the leaky bucket at any rate.

If the incoming droplets exceed the capacity of the bucket, they overflow (are discarded), and the capacity of the leaky bucket remains unchanged.
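The four rules above can be sketched in Java as a simple meter. This is a minimal illustration under assumptions: the class name `LeakyBucket`, the method `tryAdd`, and the explicit `nowMs` argument are hypothetical, and each request is treated as one droplet. Requests that would overflow the bucket are rejected; a traffic-shaping variant would instead queue them and release them at the leak rate.

```java
// Hypothetical leaky bucket used as a meter; names and parameters are illustrative.
class LeakyBucket {
    private final long capacity;       // maximum number of droplets the bucket can hold
    private final long leakIntervalMs; // one droplet flows out every this many milliseconds
    private long water;                // droplets currently in the bucket
    private long lastLeakMs;           // time up to which outflow has been accounted for

    LeakyBucket(long capacity, long leakIntervalMs, long nowMs) {
        this.capacity = capacity;
        this.leakIntervalMs = leakIntervalMs;
        this.water = 0;
        this.lastLeakMs = nowMs;
    }

    // Tries to add one droplet (one request); returns false if the bucket would overflow.
    synchronized boolean tryAdd(long nowMs) {
        leak(nowMs);
        if (water >= capacity) {
            return false; // overflow: the droplet is discarded, capacity is unchanged
        }
        water++;
        return true;
    }

    private void leak(long nowMs) {
        if (water == 0) { // empty bucket: nothing flows out
            lastLeakMs = nowMs;
            return;
        }
        long leaked = (nowMs - lastLeakMs) / leakIntervalMs;
        if (leaked > 0) {
            water = Math.max(0, water - leaked);
            // When the bucket runs dry, restart the leak clock from now
            lastLeakMs = (water == 0) ? nowMs : lastLeakMs + leaked * leakIntervalMs;
        }
    }
}
```

For example, `new LeakyBucket(2, 1000, 0)` accepts two requests at time 0 and rejects a third; one slot frees up at 1000 ms once a droplet has flowed out.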

Token bucket versus leaky bucket:

The token bucket adds tokens to the bucket at a fixed rate. Whether a request is processed depends on whether the bucket holds enough tokens. When the number of tokens drops to zero, new requests are rejected.

The leaky bucket lets requests flow out at a constant rate, while requests may flow in at any rate. When the accumulated requests reach the bucket's capacity, new incoming requests are rejected.

The token bucket limits the average inflow rate. Bursts are processed as long as tokens are available (a request may take 3 or 4 tokens at a time), so a certain degree of burst traffic is allowed.

The leaky bucket enforces a constant outflow rate (the rate is a fixed value, for example always 1, never 1 at one moment and 2 at the next), which smooths out bursts in the inflow.

The token bucket allows a certain degree of burst, whereas the main purpose of the leaky bucket is to smooth the inflow rate.

The two algorithms can share the same implementation with the direction reversed, and for the same parameters the rate limiting effect is the same.

In addition, a counter is sometimes used to limit total concurrency, for example in a database connection pool, a thread pool, or flash-sale (seckill) concurrency. As soon as the global total number of requests, or the total within a certain period, exceeds the configured threshold, requests are limited. This is a simple, coarse limit on the total count rather than a limit on the average rate.
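A limit on total concurrency can be sketched with `java.util.concurrent.Semaphore` standing in for the counter. This is a minimal example under assumptions: the class `ConcurrencyLimiter`, its `call` method, and the fallback value are hypothetical names introduced for illustration. A request runs only if a permit is free; otherwise it is limited immediately and the fallback is returned.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Hypothetical limiter on the number of requests being processed at the same time.
class ConcurrencyLimiter {
    private final Semaphore permits;

    ConcurrencyLimiter(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent);
    }

    // Runs the task if a slot is free; otherwise returns the fallback without waiting.
    <T> T call(Supplier<T> task, T fallback) {
        if (!permits.tryAcquire()) {
            return fallback; // threshold reached: limit this request
        }
        try {
            return task.get();
        } finally {
            permits.release(); // free the slot even if the task throws
        }
    }

    int available() {
        return permits.availablePermits();
    }
}
```

Unlike the token bucket or leaky bucket, this caps how many requests are in progress at once and says nothing about how many arrive per second.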

At the application layer, Nginx can do the rate limiting, for example with ngx_http_limit_req_module and ngx_http_limit_conn_module.

In the leaky bucket, droplets flow out at a fixed rate. Whatever the inflow rate, the capacity of the bucket does not change.

In short, the token bucket limits access to an average rate, while the leaky bucket smooths access to a constant rate.