To train a network, we need to evaluate the network, and think about why and how to optimize the network according to the evaluation results. This is a closed loop.

How to evaluate the trained network

First, a parameter of the network is the loss value, which reflects the gap between the results obtained by your trained network and the real value. Check how the loss curve changes as the number of iterations increases, which helps to check whether the training is over fitted and whether the learning rate is too small.

I Generate loss curve

1. Save log file during training

nohup ./darknet detector train khadas_ai/khadas_ai.data khadas_ai/yolov3-khadas_ai.cfg_train darknet53.conv.74 -dont_show > train.log 2>&1 &

2. Use extract_ log. Log format required for py script conversion

import inspect

import os

import random

import sys

def extract_log(log_file,new_log_file,key_word):

with open(log_file, 'r') as f:

with open(new_log_file, 'w') as train_log:

#f = open(log_file)

#train_log = open(new_log_file, 'w')

for line in f:

if 'Syncing' in line:

continue

if 'nan' in line:

continue

if 'Region 82 Avg' in line:

continue

if 'Region 94 Avg' in line:

continue

if 'Region 106 Avg' in line:

continue

if 'total_bbox' in line:

continue

if 'Loaded' in line:

continue

if key_word in line:

train_log.write(line)

f.close()

train_log.close()

def extract_log2(log_file,new_log_file,key_word):

with open(log_file, 'r') as f:

with open(new_log_file, 'w') as train_log:

#f = open(log_file)

#train_log = open(new_log_file, 'w')

for line in f:

if 'Syncing' in line:

continue

if 'nan' in line:

continue

if 'Region 94 Avg' in line:

continue

if 'Region 106 Avg' in line:

continue

if 'total_bbox' in line:

continue

if 'Loaded' in line:

continue

if 'IOU: 0.000000' in line:

continue

if key_word in line:

del_num=line.replace("v3 (mse loss, Normalizer: (iou: 0.75, obj: 1.00, cls: 1.00) Region 82 Avg (", "")

train_log.write(del_num.replace(")", ""))

f.close()

train_log.close()

extract_log('train.log','train_log_loss.txt','images')

extract_log2('train.log','train_log_iou.txt','IOU')

Run extract_ log. After the PY script, it will parse the loss line and iou line of the log file to get two txt files.

3. Use train_loss_visualization.py script can draw loss curve

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

#%matplotlib inline

lines =18798 #Change to self generated Number of rows in train_log_loss.txt

#Adjusting the following two sets of numbers will help you view the details of the drawing

start_ite = 250 #Ignore Number of all lines starting in train_log_loss.txt

end_ite = 6000 #Ignore Number of all lines ending in train_log_loss.txt

result = pd.read_csv('train_log_loss.txt', skiprows=[x for x in range(lines) if ((x<start_ite) |(x>end_ite))] ,error_bad_lines=False, names=['loss', 'avg loss', 'rate', 'seconds', 'images'])

result.head()

result['loss']=result['loss'].str.split(' ').str.get(1)

result['avg']=result['avg loss'].str.split(' ').str.get(1)

result['rate']=result['rate'].str.split(' ').str.get(1)

result['seconds']=result['seconds'].str.split(' ').str.get(1)

result['images']=result['images'].str.split(' ').str.get(1)

result.head()

result.tail()

# print(result.head())

# print(result.tail())

# print(result.dtypes)

print(result['loss'])

#print(result['avg'])

#print(result['rate'])

#print(result['seconds'])

#print(result['images'])

result['loss']=pd.to_numeric(result['loss'])

result['avg']=pd.to_numeric(result['avg'])

result['rate']=pd.to_numeric(result['rate'])

result['seconds']=pd.to_numeric(result['seconds'])

result['images']=pd.to_numeric(result['images'])

result.dtypes

fig = plt.figure()

ax = fig.add_subplot(1, 1, 1)

ax.plot(result['loss'].values,label='avg_loss')

ax.legend(loc='best')

ax.set_title('The loss curves')

ax.set_xlabel('batches')

fig.savefig('avg_loss')

Modify train_ loss_ visualization. Lines in py is train_log_loss.txt lines, and modify the number of lines to skip as needed:

skiprows=[x for x in range(lines) if ((x<start_ite) |(x>end_ite))]

Run train_loss_visualization.py will generate AVG in the path where the script is located_ loss. png.

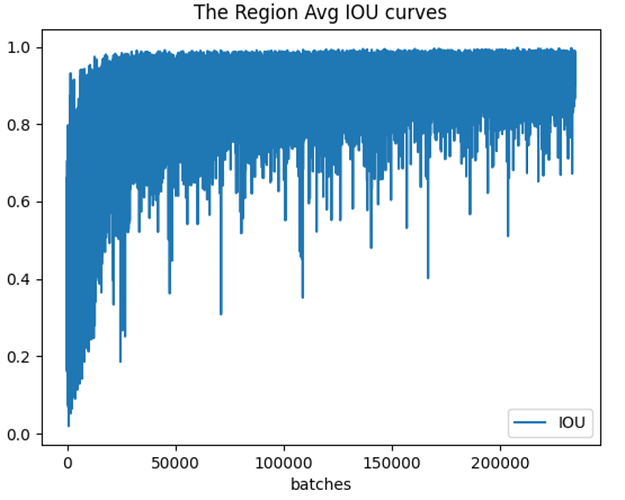

By analyzing the loss curve, the learning rate change strategy in cfg is modified. In addition to visualizing loss, you can also visualize parameters such as Avg IOU. You can use the script train_iou_visualization.py, usage and train_loss_visualization.py, train_ iou_ visualization. The PY script is as follows:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

#%matplotlib inline

lines = 234429 #Change to self generated Number of rows in train_log_iou.txt

#Adjusting the following two sets of numbers will help you view the details of the drawing

start_ite = 1 #Ignore Number of all lines starting in train_log_iou.txt

end_ite = 234429 #Ignore Number of all lines ending in train_log_iou.txt

result = pd.read_csv('train_log_iou.txt', skiprows=[x for x in range(lines) if ((x<start_ite) |(x>end_ite)) ] ,error_bad_lines=False, names=['IOU', 'count', 'class_loss', 'iou_loss', 'total_loss'])

result.head()

result['IOU']=result['IOU'].str.split(': ').str.get(1)

result['count']=result['count'].str.split(': ').str.get(1)

result['class_loss']=result['class_loss'].str.split('= ').str.get(1)

result['iou_loss']=result['iou_loss'].str.split('= ').str.get(1)

result['total_loss']=result['total_loss'].str.split('= ').str.get(1)

result.head()

result.tail()

# print(result.head())

# print(result.tail())

# print(result.dtypes)

print(result['IOU'])

#print(result['count'])

#print(result['class_loss'])

#print(result['iou_loss'])

#print(result['total_loss'])

result['IOU']=pd.to_numeric(result['IOU'])

result['count']=pd.to_numeric(result['count'])

result['class_loss']=pd.to_numeric(result['class_loss'])

result['iou_loss']=pd.to_numeric(result['iou_loss'])

result['total_loss']=pd.to_numeric(result['total_loss'])

result.dtypes

fig = plt.figure()

ax = fig.add_subplot(1, 1, 1)

ax.plot(result['IOU'].values,label='IOU')

ax.legend(loc='best')

ax.set_title('The Region Avg IOU curves')

ax.set_xlabel('batches')

fig.savefig('Avg_IOU')

Run train_iou_visualization.py will generate AVG in the path where the script is located_ IOU. png.

- Region Avg IOU: This is the intersection of the predicted bbox and the actually marked bbox divided by their union. Obviously, the larger the value, the better the prediction result.

II View recall for training network

recall is the ratio of the number of correctly identified positive samples to the number of all positive samples in the test set. Obviously, the larger the value, the better the prediction result.

./darknet detector recall khadas_ai/khadas_ai.data khadas_ai/yolov3-khadas_ai.cfg_train khadas_ai/yolov3-khadas_ai_last.weights

Finally, the log obtained is as follows:

The output format is:

Number Correct Total Rps/Img IOU Recall

The specific explanations are as follows:

- Number indicates the number of pictures processed.

- Correct indicates the correct identification except for how many bbox. The steps to calculate this value are as follows: throw a picture into the network, and the network will predict many bbox. Each bbox has its confidence probability. The bbox with a probability greater than the threshold is the same as the actual bbox, that is, the txt content in the labels. Calculate the IOU and find the bbox with the largest IOU. If this maximum value is greater than the preset IOU threshold, correct plus one.

- Total indicates the actual number of bbox s.

- Rps/img indicates the average number of bbox predicted for each picture.

- IOU: This is the intersection of the predicted bbox and the actually labeled bbox divided by their union. Obviously, the larger the value, the better the prediction result.

- Recall refers to the number of detected objects divided by the number of all labeled objects. We can also see from the code that it is the value of Correct divided by Total.