1. Algorithm efficiency

There are two kinds of algorithm efficiency:

- The first is time efficiency, which is called time complexity.

- The second is spatial efficiency, which is called spatial complexity.

time complexity mainly measures the running speed of an algorithm, while space complexity mainly measures the additional space required by an algorithm. In the early stage of computer development, the storage capacity of computer is very small, so we pay much attention to space complexity. However, after the upgrading of computers, the storage capacity of computers has reached a high level, so now we don't need to pay special attention to the spatial complexity of an algorithm.

2. Time complexity

2.1 time complexity concept

Definition of time complexity: in computer science, the time complexity of an algorithm is a mathematical function, which quantitatively describes the running time of the algorithm. The time spent in the execution of an algorithm can only be measured by computer testing. It is not only troublesome, but also because this time is affected by computer configuration, it is not very appropriate to measure the time complexity of the algorithm. That's why we have the analysis method of time complexity. The time spent by an algorithm is directly proportional to the execution times of the statements in it. The execution times of the basic operations in the algorithm is called the time complexity of the algorithm.

2.2 asymptotic representation of large O

public static int func1(int N){

int count=0;

for(int i=0;i<N;i++){

for(int j=0;j<N;j++){

count++;

}

}

for(int k=0;k<2*N;k++){

count++;

}

int M=10;

while((M--)>0){

count++;

}

return count;

}

Basic operation times of func1:

f

(

N

)

=

N

2

+

2

N

+

10

f(N)=N^2+2N+10

f(N)=N2+2N+10

In fact, when we calculate the time complexity, we do not have to calculate the exact execution times, but only the approximate execution times. Here, we use the asymptotic representation of large O.

Large O symbol: a mathematical symbol used to describe the asymptotic behavior of a function.

2.3 derivation of large O-order method

- Replace all addition constants in the run time with constant 1.

- In the modified run times function, only the highest order term is retained.

- If the highest order term exists and is not 1, the constant multiplied by this item is removed. The result is large O-order.

After using the asymptotic representation of large O-order, the time complexity of func1 is:

O

(

N

2

)

O(N^2)

O(N2)

Through the above, we find that the asymptotic representation of large O removes those terms that have little impact on the results, and succinctly and clearly represents the execution times.

In addition, the time complexity of some algorithms has the best, average, and worst cases:

Worst case: enter the maximum number of runs of any scale (upper bound)

Average: enter the expected number of runs of any size

Best case: enter the minimum number of runs of any size (lower bound)

Example: search for a data x in an array of length N

Best case: 1 time

Worst case: found N times

Average: N/2 times

In practice, we generally focus on the worst-case operation of the algorithm, so the time complexity of searching data in the array is O(N)

2.4 common time complexity calculation examples

Example 1

public static int func2(int N){

int count=0;

for(int k=0;k<2*N;k++){

count++;

}

int M=10;

while((M--)>0){

count++;

}

return count;

}

Analysis: in example 1, the basic operation is performed 2N+10 times. It is known by deriving the large O-order method that the time complexity is O(N).

Example 2

public static int func3(int N,int M){

int count=0;

for(int k=0;k<M;k++){

count++;

}

for(int k=0;k<N;k++){

count++;

}

return count;

}

Analysis: the basic operation in example 2 is executed N+M times, with two unknowns m and N, and the time complexity O(M+N).

Example 3

public static int func(int N){

int count=0;

for(int k=0;k<100;k++){

count++;

}

return count;

}

Analysis: the basic operation of example 3 is performed 100 times. Through large O-order derivation, the time complexity is O(1).

Example 4

//Calculating the time complexity of bubble sorting

void bubbleSort(int[] array){

for(int end=array.length;end>0;end--){

boolean sorted =true;

for(int i=1;i<end;i++){

if(array[i-1]>array[i]){

Swap(array,i-1,i);

sorted=false;

}

}

if(sorted==true){

break;

}

}

}

Parsing: assuming that the number of elements in the array is N, the best case for bubble sorting is that the basic operation is executed N times;

Worst case scenario:

First bubbling: N-1 times

Second bubbling: N-2 times

The third bubbling: N-3 times

...

Bubbling of the N-2 trip: twice

Bubbling in the nth-1st trip: once

Total execution times = 1 + 2 +... + N-2+N-1=

N

(

N

−

1

)

2

\frac{N(N-1)}{2}

2N(N−1)

The time complexity generally depends on the worst case. Through the large O-order method, the time complexity of the bubble sorting is o(

N

2

N^2

N2).

Example 5

int binarySearch(int[]array,int value){

int begin=0;

int end=array.length-1;

while(begin<=end){

int mid=begin+((end-begin)/2);//Basic operation statement

if(array[mid]<value) {

begin = mid + 1;

}else if (array[mid]>value){

end=mid-1;

}else

return mid;

}

return -1;

}

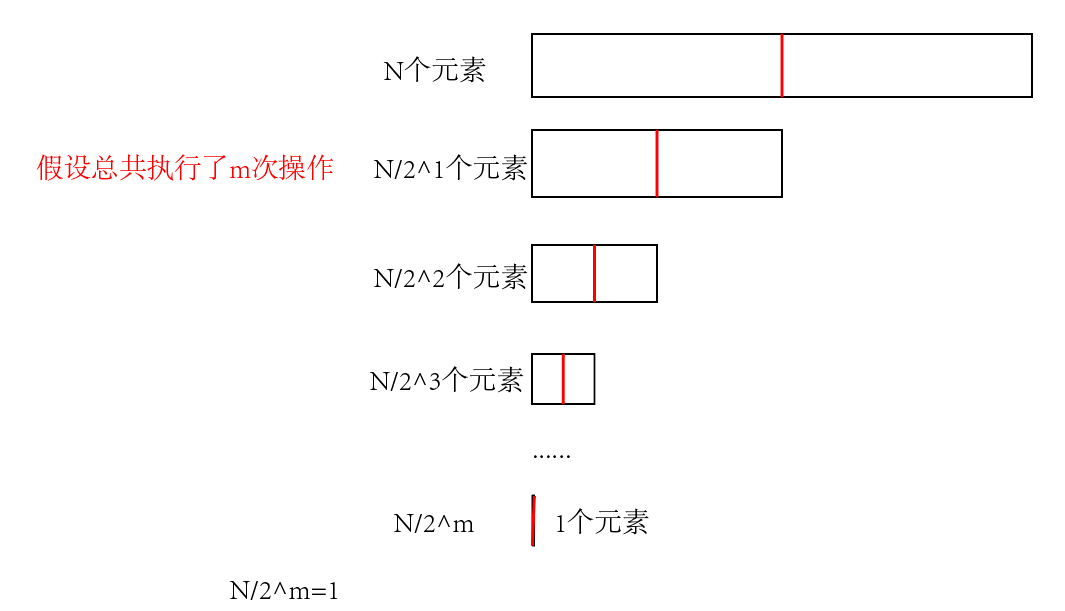

Analysis: the basic operation of binary search is executed once in the best case;

Worst case scenario:

m can be obtained by calculation=

l

o

g

2

N

log_2N

log2 N, so the time complexity of the binary search is

l

o

g

2

N

log_2N

log2N.

Example 6

//Time complexity of computing factorial recursive factorial

long factorial(int N){

return N<2?N:factorial(N-1)*N;

}

Parsing: the total execution times of general recursion = the execution times of basic statements in a single run × Total number of recursions

Total execution times of the algorithm = 1 × N=N, so the time complexity is O(N).

Example 7

//Computing the time complexity of fibonacci recursive fibonacci

int fibonacci(int N){

return N<2?N:fibonacci(N-1)+fibonacci(N-2);

}

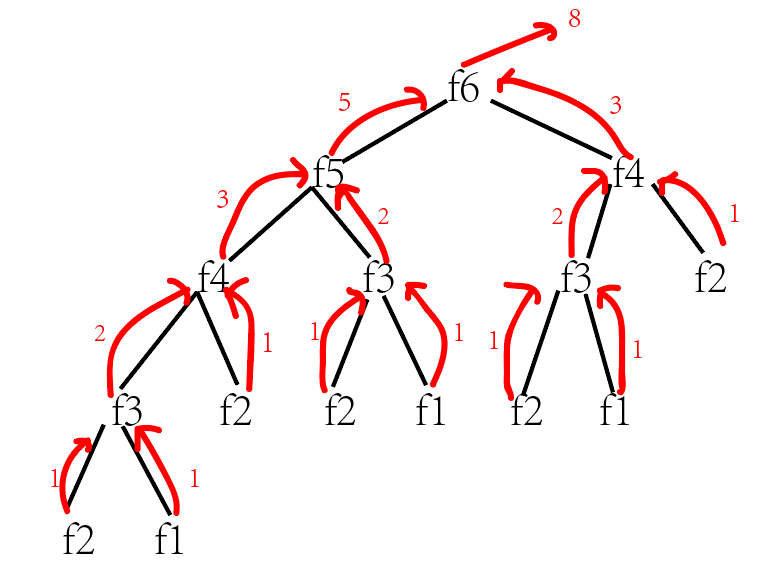

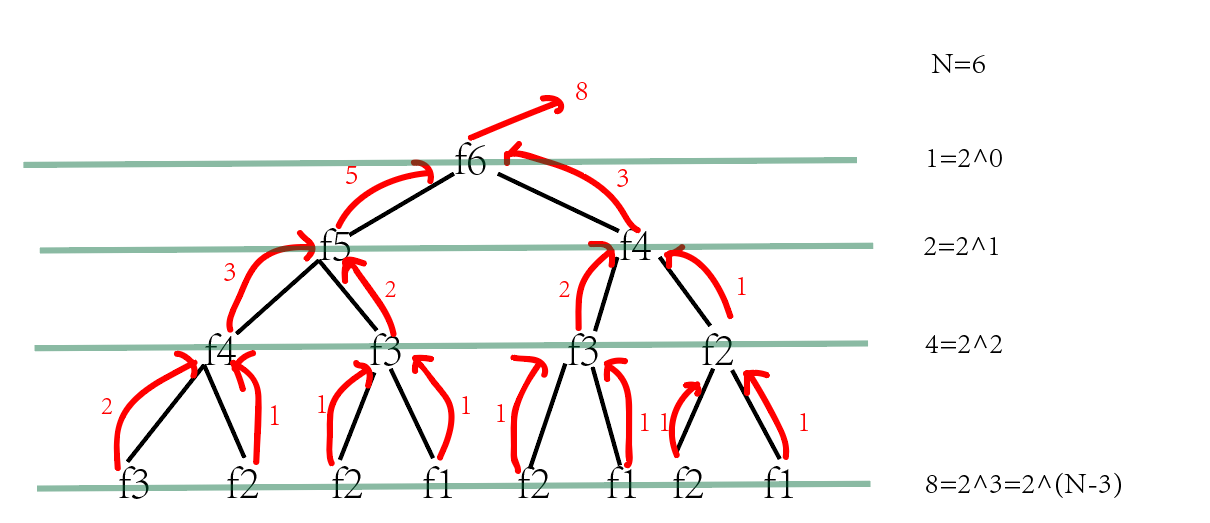

Resolution:

Move the lower left corner to the vacant place on the right

So the total number of recursions

f

(

N

)

=

2

0

+

2

1

+

2

2

+

.

.

.

.

.

.

+

2

N

−

3

f(N)=2^0+2^1+2^2+......+2^{N-3}

f(N)=20+21+22+......+2N−3

=

2

N

−

2

−

1

=2^{N-2}-1

=2N−2−1

Through the large O-order method, the time complexity of the Fibonacci recursion is

O

(

N

2

)

O(N^2)

O(N2).

3. Space complexity

Space complexity is a measure of the amount of storage space temporarily occupied by an algorithm during operation. Space complexity is not the number of bytes occupied by the program, but the number of variables. The calculation rules of space complexity are basically similar to the practical complexity, and the large O approach representation is also used.

3.1 space complexity example

Example 1

void bubbleSort(int[] array){

for(int end=array.length;end>0;end--){

boolean sorted =true;

for(int i=1;i<end;i++){

if(array[i-1]>array[i]){

Swap(array,i-1,i);

sorted=false;

}

}

if(sorted==true){

break;

}

}

}

The core of space complexity calculation: for general algorithms, we only need to see whether additional space is applied in the algorithm.

Calculation method of general recursive space complexity:

Space required for single recursion × Depth of recursion

Depth is actually the number of stack frames opened.

Analysis: Example 1 opens up an additional space, so the space complexity is O(1).

Example 2

long[] fibonacci(int n){

long[] fibArray=new long[n+1];

fibArray[0]=0;

fibArray[1]=1;

for(int i=2;i<=n;i++){

fibArray[i]=fibArray[i-1]+fibArray[i-2];

}

return fibArray;

}

Analysis: the algorithm dynamically opens up N spaces, and the space complexity is O(N).

Example 3

long factorial(int N){

return N<2?N:factorial(N-1)*N;

}

Resolution: recursively called N times and opened up N stack frames. Each stack frame uses a constant space with a space complexity of O(N).

Common time complexity:

O

(

1

)

,

O

(

l

o

g

2

N

)

,

O

(

N

)

,

O

(

N

l

o

g

2

N

)

,

O

(

N

2

)

,

O

(

N

3

)

,

O

(

2

N

)

,

O

(

N

!

)

O(1),O(log_2N),O(N),O(Nlog_2N),O(N^2),O(N^3),O(2^N),O(N!)

O(1),O(log2N),O(N),O(Nlog2N),O(N2),O(N3),O(2N),O(N!)

Common space complexity:

O

(

1

)

,

O

(

N

)

,

O

(

N

2

)

O(1),O(N),O(N^2)

O(1),O(N),O(N2)